Kunpeng Yao

DexFlow: A Unified Approach for Dexterous Hand Pose Retargeting and Interaction

May 02, 2025

Abstract:Despite advances in hand-object interaction modeling, generating realistic dexterous manipulation data for robotic hands remains a challenge. Retargeting methods often suffer from low accuracy and fail to account for hand-object interactions, leading to artifacts like interpenetration. Generative methods, lacking human hand priors, produce limited and unnatural poses. We propose a data transformation pipeline that combines human hand and object data from multiple sources for high-precision retargeting. Our approach uses a differential loss constraint to ensure temporal consistency and generates contact maps to refine hand-object interactions. Experiments show our method significantly improves pose accuracy, naturalness, and diversity, providing a robust solution for hand-object interaction modeling.

Action Contextualization: Adaptive Task Planning and Action Tuning using Large Language Models

Apr 19, 2024

Abstract:Large Language Models (LLMs) present a promising frontier in robotic task planning by leveraging extensive human knowledge. Nevertheless, the current literature often overlooks the critical aspects of adaptability and error correction within robotic systems. This work aims to overcome this limitation by enabling robots to modify their motion strategies and select the most suitable task plans based on the context. We introduce a novel framework termed action contextualization, aimed at tailoring robot actions to the precise requirements of specific tasks, thereby enhancing adaptability through applying LLM-derived contextual insights. Our proposed motion metrics guarantee the feasibility and efficiency of adjusted motions, which evaluate robot performance and eliminate planning redundancies. Moreover, our framework supports online feedback between the robot and the LLM, enabling immediate modifications to the task plans and corrections of errors. Our framework has achieved an overall success rate of 81.25% through extensive validation. Finally, integrated with dynamic system (DS)-based robot controllers, the robotic arm-hand system demonstrates its proficiency in autonomously executing LLM-generated motion plans for sequential table-clearing tasks, rectifying errors without human intervention, and completing tasks, showcasing robustness against external disturbances. Our proposed framework features the potential to be integrated with modular control approaches, significantly enhancing robots' adaptability and autonomy in sequential task execution.

Transfer Learning in Robotics: An Upcoming Breakthrough? A Review of Promises and Challenges

Nov 29, 2023

Abstract:Transfer learning is a conceptually-enticing paradigm in pursuit of truly intelligent embodied agents. The core concept -- reusing prior knowledge to learn in and from novel situations -- is successfully leveraged by humans to handle novel situations. In recent years, transfer learning has received renewed interest from the community from different perspectives, including imitation learning, domain adaptation, and transfer of experience from simulation to the real world, among others. In this paper, we unify the concept of transfer learning in robotics and provide the first taxonomy of its kind considering the key concepts of robot, task, and environment. Through a review of the promises and challenges in the field, we identify the need of transferring at different abstraction levels, the need of quantifying the transfer gap and the quality of transfer, as well as the dangers of negative transfer. Via this position paper, we hope to channel the effort of the community towards the most significant roadblocks to realize the full potential of transfer learning in robotics.

Differentiable Robot Neural Distance Function for Adaptive Grasp Synthesis on a Unified Robotic Arm-Hand System

Sep 28, 2023

Abstract:Grasping is a fundamental skill for robots to interact with their environment. While grasp execution requires coordinated movement of the hand and arm to achieve a collision-free and secure grip, many grasp synthesis studies address arm and hand motion planning independently, leading to potentially unreachable grasps in practical settings. The challenge of determining integrated arm-hand configurations arises from its computational complexity and high-dimensional nature. We address this challenge by presenting a novel differentiable robot neural distance function. Our approach excels in capturing intricate geometry across various joint configurations while preserving differentiability. This innovative representation proves instrumental in efficiently addressing downstream tasks with stringent contact constraints. Leveraging this, we introduce an adaptive grasp synthesis framework that exploits the full potential of the unified arm-hand system for diverse grasping tasks. Our neural joint space distance function achieves an 84.7% error reduction compared to baseline methods. We validated our approaches on a unified robotic arm-hand system that consists of a 7-DoF robot arm and a 16-DoF multi-fingered robotic hand. Results demonstrate that our approach empowers this high-DoF system to generate and execute various arm-hand grasp configurations that adapt to the size of the target objects while ensuring whole-body movements to be collision-free.

Real-Time Motion Planning for In-Hand Manipulation with a Multi-Fingered Hand

Sep 13, 2023

Abstract:Dexterous manipulation of objects once held in hand remains a challenge. Such skills are, however, necessary for robotics to move beyond gripper-based manipulation and use all the dexterity offered by anthropomorphic robotic hands. One major challenge when manipulating an object within the hand is that fingers must move around the object while avoiding collision with other fingers or the object. Such collision-free paths must be computed in real-time, as the smallest deviation from the original plan can easily lead to collisions. We present a real-time approach to computing collision-free paths in a high-dimensional space. To guide the exploration, we learn an explicit representation of the free space, retrievable in real-time. We further combine this representation with closed-loop control via dynamical systems and sampling-based motion planning and show that the combination increases performance compared to alternatives, offering efficient search of feasible paths and real-time obstacle avoidance in a multi-fingered robotic hand.

ISR-LLM: Iterative Self-Refined Large Language Model for Long-Horizon Sequential Task Planning

Aug 26, 2023

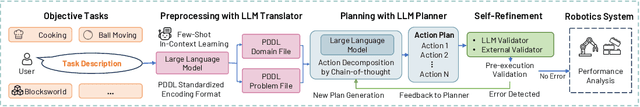

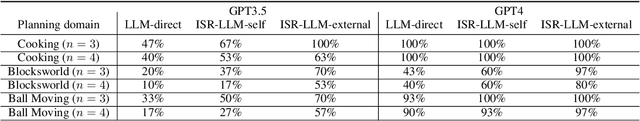

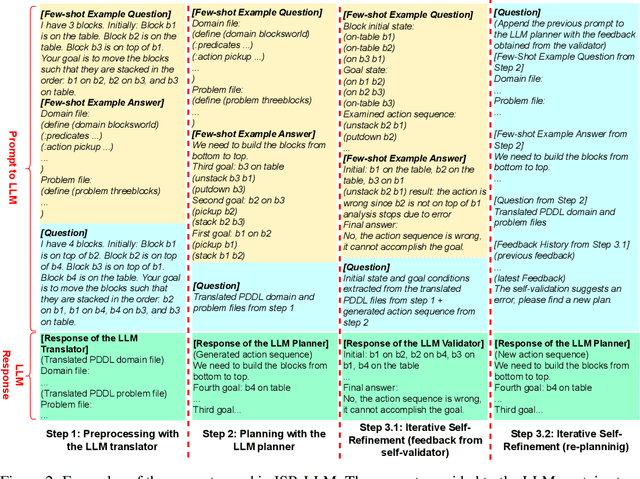

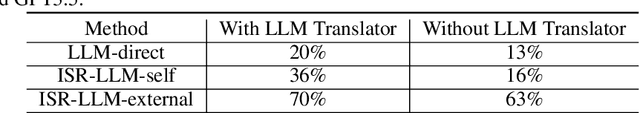

Abstract:Motivated by the substantial achievements observed in Large Language Models (LLMs) in the field of natural language processing, recent research has commenced investigations into the application of LLMs for complex, long-horizon sequential task planning challenges in robotics. LLMs are advantageous in offering the potential to enhance the generalizability as task-agnostic planners and facilitate flexible interaction between human instructors and planning systems. However, task plans generated by LLMs often lack feasibility and correctness. To address this challenge, we introduce ISR-LLM, a novel framework that improves LLM-based planning through an iterative self-refinement process. The framework operates through three sequential steps: preprocessing, planning, and iterative self-refinement. During preprocessing, an LLM translator is employed to convert natural language input into a Planning Domain Definition Language (PDDL) formulation. In the planning phase, an LLM planner formulates an initial plan, which is then assessed and refined in the iterative self-refinement step by using a validator. We examine the performance of ISR-LLM across three distinct planning domains. The results show that ISR-LLM is able to achieve markedly higher success rates in task accomplishments compared to state-of-the-art LLM-based planners. Moreover, it also preserves the broad applicability and generalizability of working with natural language instructions.

Exploiting Kinematic Redundancy for Robotic Grasping of Multiple Objects

Mar 03, 2023

Abstract:Humans coordinate the abundant degrees of freedom (DoFs) of hands to dexterously perform tasks in everyday life. We imitate human strategies to advance the dexterity of multi-DoF robotic hands. Specifically, we enable a robot hand to grasp multiple objects by exploiting its kinematic redundancy, referring to all its controllable DoFs. We propose a human-like grasp synthesis algorithm to generate grasps using pairwise contacts on arbitrary opposing hand surface regions, no longer limited to fingertips or hand inner surface. To model the available space of the hand for grasp, we construct a reachability map, consisting of reachable spaces of all finger phalanges and the palm. It guides the formulation of a constrained optimization problem, solving for feasible and stable grasps. We formulate an iterative process to empower robotic hands to grasp multiple objects in sequence. Moreover, we propose a kinematic efficiency metric and an associated strategy to facilitate exploiting kinematic redundancy. We validated our approaches by generating grasps of single and multiple objects using various hand surface regions. Such grasps can be successfully replicated on a real robotic hand.
