Karan Ahuja

WatchHAR: Real-time On-device Human Activity Recognition System for Smartwatches

Sep 05, 2025

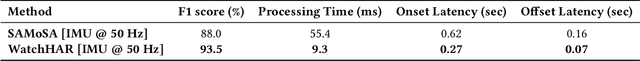

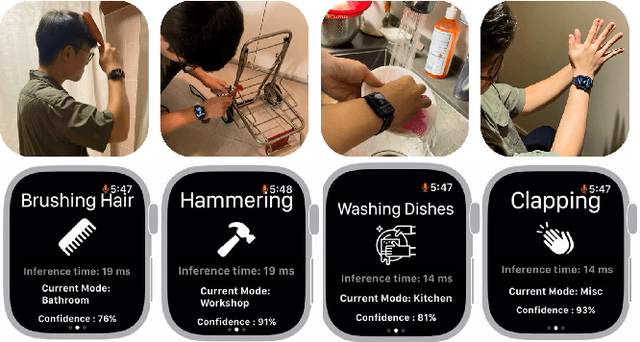

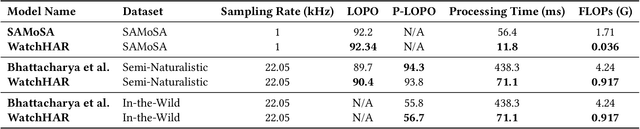

Abstract: Despite advances in practical and multimodal fine-grained Human Activity Recognition (HAR), a system that runs entirely on smartwatches in unconstrained environments remains elusive. We present WatchHAR, an audio and inertial-based HAR system that operates fully on smartwatches, addressing privacy and latency issues associated with external data processing. By optimizing each component of the pipeline, WatchHAR achieves compounding performance gains. We introduce a novel architecture that unifies sensor data preprocessing and inference into an end-to-end trainable module, achieving 5x faster processing while maintaining over 90% accuracy across more than 25 activity classes. WatchHAR outperforms state-of-the-art models for event detection and activity classification while running directly on the smartwatch, achieving 9.3 ms processing time for activity event detection and 11.8 ms for multimodal activity classification. This research advances on-device activity recognition, realizing smartwatches' potential as standalone, privacy-aware, and minimally-invasive continuous activity tracking devices.

MobilePoser: Real-Time Full-Body Pose Estimation and 3D Human Translation from IMUs in Mobile Consumer Devices

Apr 16, 2025

Abstract: There has been a continued trend towards minimizing instrumentation for full-body motion capture, going from specialized rooms and equipment, to arrays of worn sensors, and recently to sparse inertial pose capture methods. However, as these techniques migrate towards lower-fidelity IMUs on ubiquitous commodity devices, like phones, watches, and earbuds, challenges arise, including compromised online performance, temporal consistency, and loss of global translation due to sensor noise and drift. Addressing these challenges, we introduce MobilePoser, a real-time system for full-body pose and global translation estimation using any available subset of IMUs already present in these consumer devices. MobilePoser employs a multi-stage deep neural network for kinematic pose estimation followed by a physics-based motion optimizer, achieving state-of-the-art accuracy while remaining lightweight. We conclude with a series of demonstrative applications to illustrate the unique potential of MobilePoser across a variety of fields, such as health and wellness, gaming, and indoor navigation.

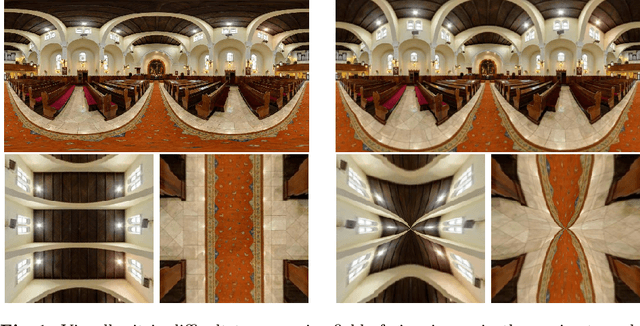

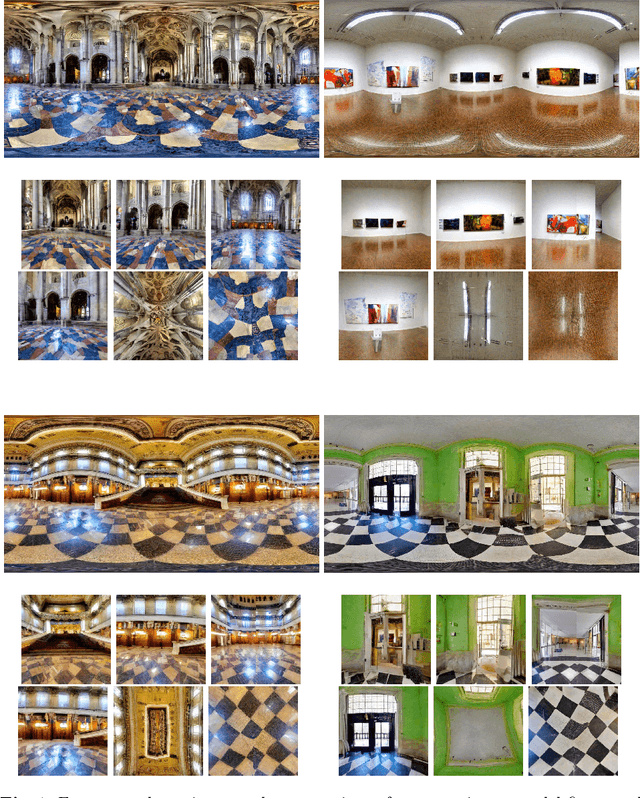

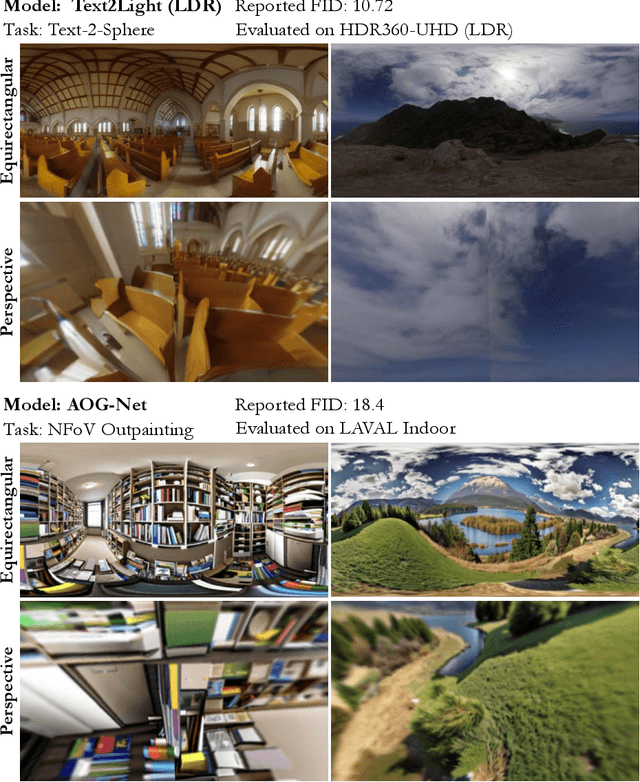

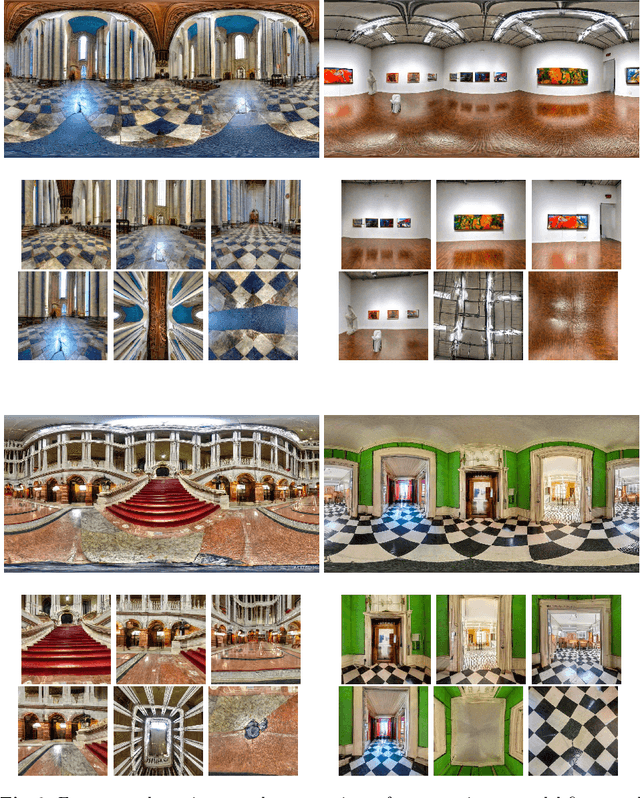

Geometry Fidelity for Spherical Images

Jul 25, 2024

Abstract: Spherical or omni-directional images offer an immersive visual format appealing to a wide range of computer vision applications. However, geometric properties of spherical images pose a major challenge for models and metrics designed for ordinary 2D images. Here, we show that direct application of Fréchet Inception Distance (FID) is insufficient for quantifying geometric fidelity in spherical images. We introduce two quantitative metrics accounting for geometric constraints, namely Omnidirectional FID (OmniFID) and Discontinuity Score (DS). OmniFID is an extension of FID tailored to additionally capture field-of-view requirements of the spherical format by leveraging cubemap projections. DS is a kernel-based seam alignment score of continuity across borders of 2D representations of spherical images. In experiments, OmniFID and DS quantify geometry fidelity issues that are undetected by FID.
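
For context, the baseline FID that OmniFID extends is the Fréchet distance between two Gaussians fitted to Inception features of real and generated images. The abstract does not state the OmniFID or DS formulas, so only the standard FID definition is given here; the symbols $\mu_r, \Sigma_r$ (real) and $\mu_g, \Sigma_g$ (generated) are notation chosen for this note, not taken from the paper.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$

Because this distance depends only on feature statistics of flat 2D images, it carries no explicit notion of the spherical field of view or of seams at projection borders, which is the gap the abstract says OmniFID and DS target.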

Augmented Object Intelligence: Making the Analog World Interactable with XR-Objects

Apr 23, 2024

Abstract: Seamless integration of physical objects as interactive digital entities remains a challenge for spatial computing. This paper introduces Augmented Object Intelligence (AOI), a novel XR interaction paradigm designed to blur the lines between digital and physical by equipping real-world objects with the ability to interact as if they were digital, where every object has the potential to serve as a portal to vast digital functionalities. Our approach utilizes object segmentation and classification, combined with the power of Multimodal Large Language Models (MLLMs), to facilitate these interactions. We implement the AOI concept in the form of XR-Objects, an open-source prototype system that provides a platform for users to engage with their physical environment in rich and contextually relevant ways. This system enables analog objects to not only convey information but also to initiate digital actions, such as querying for details or executing tasks. Our contributions are threefold: (1) we define the AOI concept and detail its advantages over traditional AI assistants, (2) detail the XR-Objects system's open-source design and implementation, and (3) show its versatility through a variety of use cases and a user study.

Practical and Rich User Digitization

Feb 29, 2024

Abstract: A long-standing vision in computer science has been to evolve computing devices into proactive assistants that enhance our productivity, health and wellness, and many other facets of our lives. User digitization is crucial in achieving this vision as it allows computers to intimately understand their users, capturing activity, pose, routine, and behavior. Today's consumer devices, like smartphones and smartwatches, provide a glimpse of this potential, offering coarse digital representations of users with metrics such as step count, heart rate, and a handful of human activities like running and biking. Even these very low-dimensional representations are already bringing value to millions of people's lives, but there is significant potential for improvement. On the other end, professional, high-fidelity comprehensive user digitization systems exist. For example, motion capture suits and multi-camera rigs digitize our full body and appearance, and scanning machines such as MRI capture our detailed anatomy. However, these carry significant user practicality burdens, such as financial, privacy, ergonomic, aesthetic, and instrumentation considerations, that preclude consumer use. In general, the higher the fidelity of capture, the lower the user practicality. Most conventional approaches strike a balance between user practicality and digitization fidelity. My research aims to break this trend, developing sensing systems that increase user digitization fidelity to create new and powerful computing experiences while retaining or even improving user practicality and accessibility, allowing such technologies to have a societal impact. Armed with such knowledge, our future devices could offer longitudinal health tracking, more productive work environments, full body avatars in extended reality, and embodied telepresence experiences, to name just a few domains.

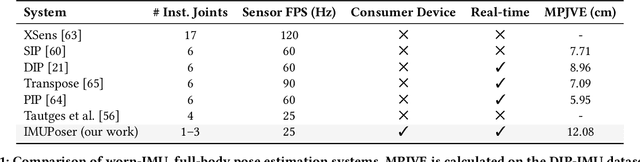

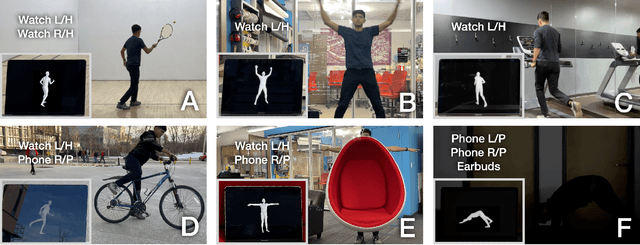

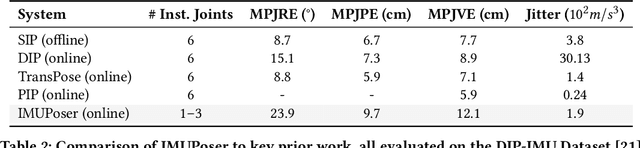

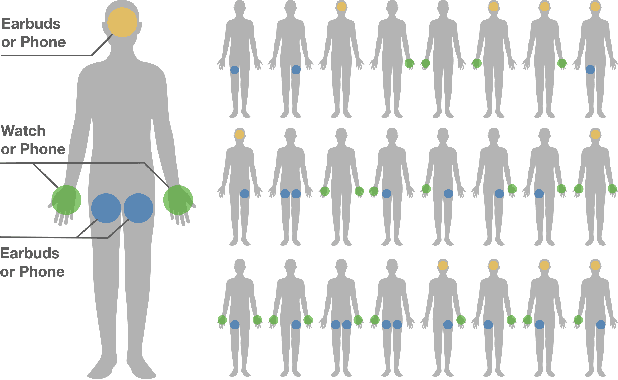

IMUPoser: Full-Body Pose Estimation using IMUs in Phones, Watches, and Earbuds

Apr 25, 2023

Abstract: Tracking body pose on-the-go could have powerful uses in fitness, mobile gaming, context-aware virtual assistants, and rehabilitation. However, users are unlikely to buy and wear special suits or sensor arrays to achieve this end. Instead, in this work, we explore the feasibility of estimating body pose using IMUs already in devices that many users own, namely smartphones, smartwatches, and earbuds. This approach has several challenges, including noisy data from low-cost commodity IMUs, and the fact that the number of instrumentation points on a user's body is both sparse and in flux. Our pipeline receives whatever subset of IMU data is available, potentially from just a single device, and produces a best-guess pose. To evaluate our model, we created the IMUPoser Dataset, collected from 10 participants wearing or holding off-the-shelf consumer devices and across a variety of activity contexts. We provide a comprehensive evaluation of our system, benchmarking it on both our own and existing IMU datasets.
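
One common way to feed "whatever subset of IMU data is available" into a fixed-size model is to reserve a slot per device and zero-fill the slots of absent devices. The sketch below illustrates that idea only; the slot names, the 12-value feature layout, and the function `build_model_input` are hypothetical and are not taken from the abstract.

```python
import numpy as np

# Hypothetical device slots; the abstract names phones, watches, and earbuds.
DEVICE_SLOTS = ("phone", "watch", "earbuds")
# Assumed layout per device: 3 acceleration values + a flattened 3x3 orientation.
FEATURES_PER_DEVICE = 12


def build_model_input(available):
    """Pack per-device IMU features into one fixed-length vector.

    `available` maps a device name to its feature vector. Slots for
    devices that are not present are left as zeros.
    """
    frame = np.zeros(len(DEVICE_SLOTS) * FEATURES_PER_DEVICE)
    for slot, name in enumerate(DEVICE_SLOTS):
        if name in available:
            start = slot * FEATURES_PER_DEVICE
            frame[start:start + FEATURES_PER_DEVICE] = available[name]
    return frame


# Example: only a smartwatch is present (dummy all-ones features).
watch_only = build_model_input({"watch": np.ones(FEATURES_PER_DEVICE)})
```

With this layout the downstream model always sees the same input shape, regardless of which devices the user happens to be carrying.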

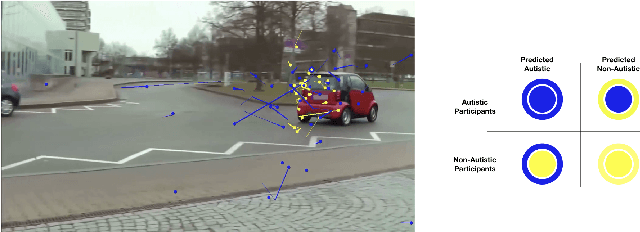

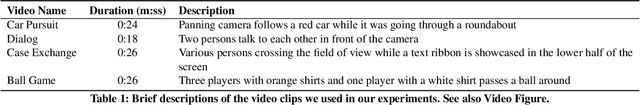

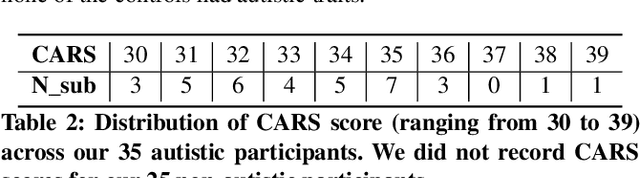

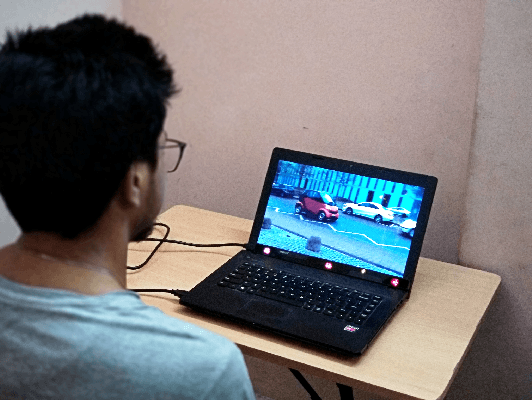

Gaze-based Autism Detection for Adolescents and Young Adults using Prosaic Videos

May 26, 2020

Abstract: Autism often remains undiagnosed in adolescents and adults. Prior research has indicated that an autistic individual often shows atypical fixation and gaze patterns. In this short paper, we demonstrate that by monitoring a user's gaze as they watch commonplace (i.e., not specialized, structured or coded) video, we can identify individuals with autism spectrum disorder. We recruited 35 autistic and 25 non-autistic individuals, and captured their gaze using an off-the-shelf eye tracker connected to a laptop. Within 15 seconds, our approach was 92.5% accurate at identifying individuals with an autism diagnosis. We envision such automatic detection being applied during, e.g., the consumption of web media, which could allow for passive screening and adaptation of user interfaces.

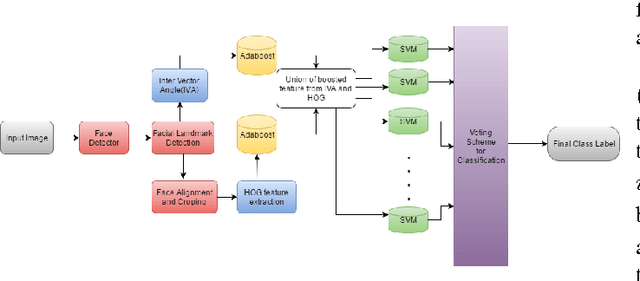

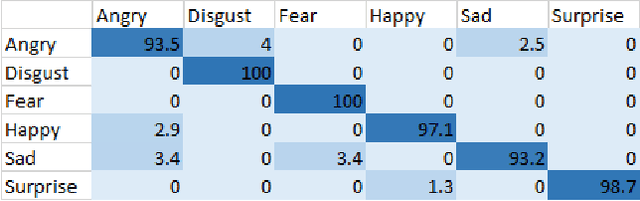

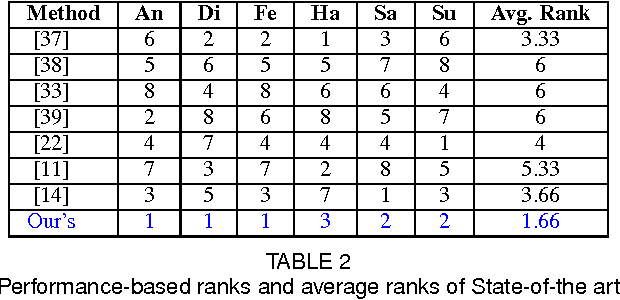

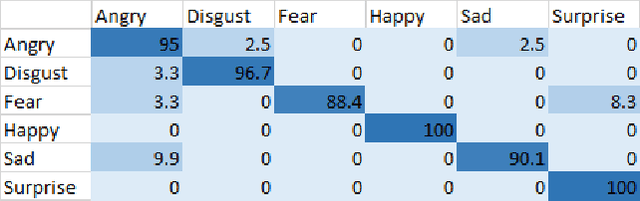

SenTion: A framework for Sensing Facial Expressions

Aug 16, 2016

Abstract: Facial expressions are an integral part of human cognition and communication, and can be applied in various real-life applications. A vital precursor to accurate expression recognition is feature extraction. In this paper, we propose SenTion: A framework for sensing facial expressions. We propose a novel person-independent and scale-invariant method of extracting Inter Vector Angles (IVA) as geometric features, which proves to be robust and reliable across databases. SenTion employs a novel framework of combining geometric (IVAs) and appearance-based features (Histogram of Gradients) to create a hybrid model that achieves state-of-the-art recognition accuracy. We evaluate the performance of SenTion on two well-known facial expression datasets, CK+ and JAFFE, and subsequently evaluate the viability of facial expression systems by a user study. Extensive experiments showed that the SenTion framework yielded dramatic improvements in facial expression recognition and could be employed in real-world applications with low-resolution imaging and minimal computational resources in real time, achieving 15-18 fps on a 2.4 GHz CPU with no GPU.
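
To illustrate why an angle between landmark vectors makes a scale-invariant geometric feature, here is a minimal sketch. It assumes an inter-vector angle is the angle at one facial landmark between the vectors pointing to two others; the abstract does not give the exact IVA definition, so the function `inter_vector_angle` and the toy landmark coordinates are hypothetical.

```python
import numpy as np


def inter_vector_angle(landmarks, i, j, k):
    """Angle in radians at landmark j between the vectors j->i and j->k."""
    pts = np.asarray(landmarks, dtype=float)
    u = pts[i] - pts[j]
    v = pts[k] - pts[j]
    cos_theta = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    # Clip to guard against rounding slightly outside [-1, 1].
    return float(np.arccos(np.clip(cos_theta, -1.0, 1.0)))


# Toy 2D landmarks (made-up values, not from any dataset).
landmarks = np.array([[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]])
angle = inter_vector_angle(landmarks, 0, 1, 2)

# Uniformly scaling and translating the face leaves the angle unchanged.
scaled_angle = inter_vector_angle(3.0 * landmarks + 5.0, 0, 1, 2)
```

Distances between landmarks change with face size and camera distance, whereas angles do not, which is consistent with the scale invariance the abstract claims for IVA features.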
