Mustafa Doga Dogan

MIT CSAIL, Cambridge, MA, USA

Imprinto: Enhancing Infrared Inkjet Watermarking for Human and Machine Perception

Feb 24, 2025

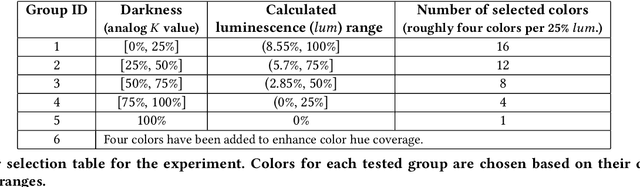

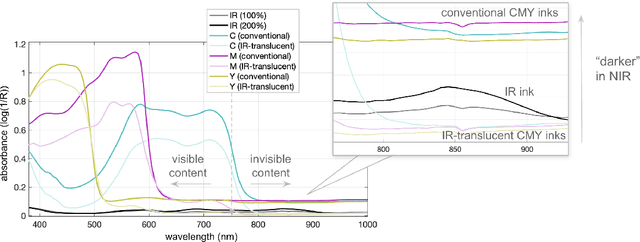

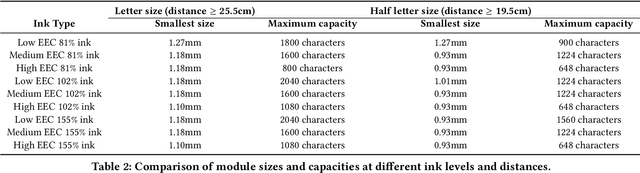

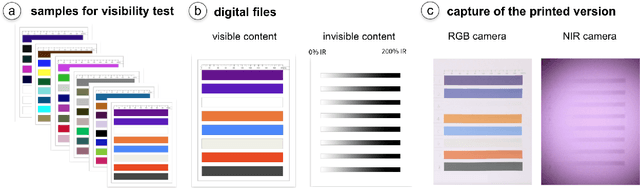

Abstract: Hybrid paper interfaces leverage augmented reality to combine the desired tangibility of paper documents with the affordances of interactive digital media. Typically, virtual content can be embedded through direct links (e.g., QR codes); however, this impacts the aesthetics of the paper print and limits the available visual content space. To address this problem, we present Imprinto, an infrared inkjet watermarking technique that allows for invisible content embeddings using only off-the-shelf IR inks and a camera. Imprinto was established through a psychophysical experiment, studying how much IR ink can be used while remaining invisible to users regardless of background color. We demonstrate that we can detect invisible IR content through our machine learning pipeline, and we developed an authoring tool that optimizes the amount of IR ink on the color regions of an input document for machine and human detectability. Finally, we demonstrate several applications, including augmenting paper documents and objects.

RAMPA: Robotic Augmented Reality for Machine Programming and Automation

Oct 17, 2024

Abstract: As robotics continues to enter various sectors beyond traditional industrial applications, the need for intuitive robot training and interaction systems becomes increasingly important. This paper introduces Robotic Augmented Reality for Machine Programming (RAMPA), a system that utilizes the capabilities of state-of-the-art and commercially available AR headsets, e.g., Meta Quest 3, to facilitate the application of Programming from Demonstration (PfD) approaches on industrial robotic arms, such as Universal Robots UR10. Our approach enables in-situ data recording, visualization, and fine-tuning of skill demonstrations directly within the user's physical environment. RAMPA addresses critical challenges of PfD, such as safety concerns, programming barriers, and the inefficiency of collecting demonstrations on the actual hardware. The performance of our system is evaluated against the traditional method of kinesthetic control in teaching three different robotic manipulation tasks and analyzed with quantitative metrics, measuring task performance and completion time, trajectory smoothness, system usability, user experience, and task load using standardized surveys. Our findings indicate a substantial advancement in how robotic tasks are taught and refined, promising improvements in operational safety, efficiency, and user engagement in robotic programming.

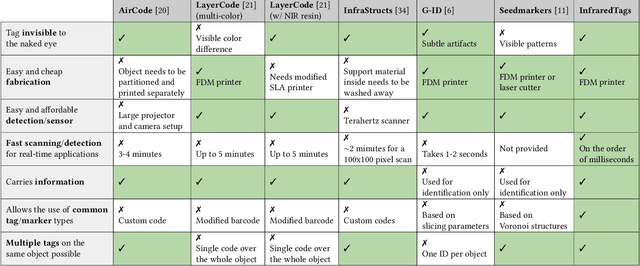

Ubiquitous Metadata: Design and Fabrication of Embedded Markers for Real-World Object Identification and Interaction

Jul 16, 2024

Abstract: The convergence of the physical and digital realms has ushered in a new era of immersive experiences and seamless interactions. As the boundaries between the real world and virtual environments blur and result in a "mixed reality," there arises a need for robust and efficient methods to connect physical objects with their virtual counterparts. In this thesis, we present a novel approach to bridging this gap through the design, fabrication, and detection of embedded machine-readable markers. We categorize the proposed marking approaches into three distinct categories: natural markers, structural markers, and internal markers. Natural markers, such as those used in SensiCut, are inherent fingerprints of objects repurposed as machine-readable identifiers, while structural markers, such as StructCode and G-ID, leverage the structural artifacts in objects that emerge during the fabrication process itself. Internal markers, such as InfraredTag and BrightMarker, are embedded inside fabricated objects using specialized materials. Leveraging a combination of methods from computer vision, machine learning, computational imaging, and material science, the presented approaches offer robust and versatile solutions for object identification, tracking, and interaction. These markers, seamlessly integrated into real-world objects, effectively communicate an object's identity, origin, function, and interaction, functioning as gateways to "ubiquitous metadata" - a concept where metadata is embedded into physical objects, similar to metadata in digital files. Across the different chapters, we demonstrate the applications of the presented methods in diverse domains, including product design, manufacturing, retail, logistics, education, entertainment, security, and sustainability.

Augmented Object Intelligence: Making the Analog World Interactable with XR-Objects

Apr 23, 2024

Abstract: Seamless integration of physical objects as interactive digital entities remains a challenge for spatial computing. This paper introduces Augmented Object Intelligence (AOI), a novel XR interaction paradigm designed to blur the lines between digital and physical by equipping real-world objects with the ability to interact as if they were digital, where every object has the potential to serve as a portal to vast digital functionalities. Our approach utilizes object segmentation and classification, combined with the power of Multimodal Large Language Models (MLLMs), to facilitate these interactions. We implement the AOI concept in the form of XR-Objects, an open-source prototype system that provides a platform for users to engage with their physical environment in rich and contextually relevant ways. This system enables analog objects to not only convey information but also to initiate digital actions, such as querying for details or executing tasks. Our contributions are threefold: (1) we define the AOI concept and detail its advantages over traditional AI assistants, (2) we detail the XR-Objects system's open-source design and implementation, and (3) we show its versatility through a variety of use cases and a user study.

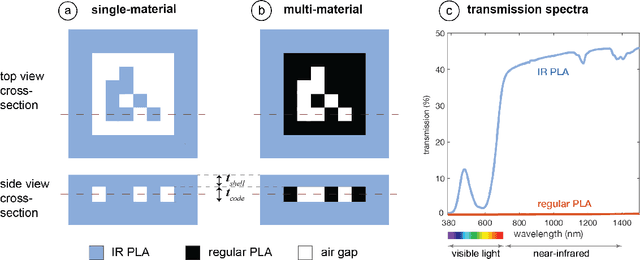

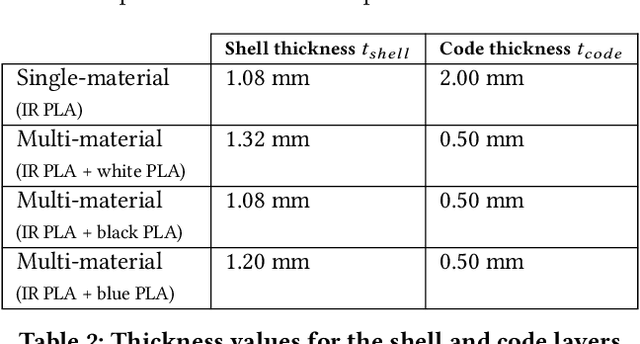

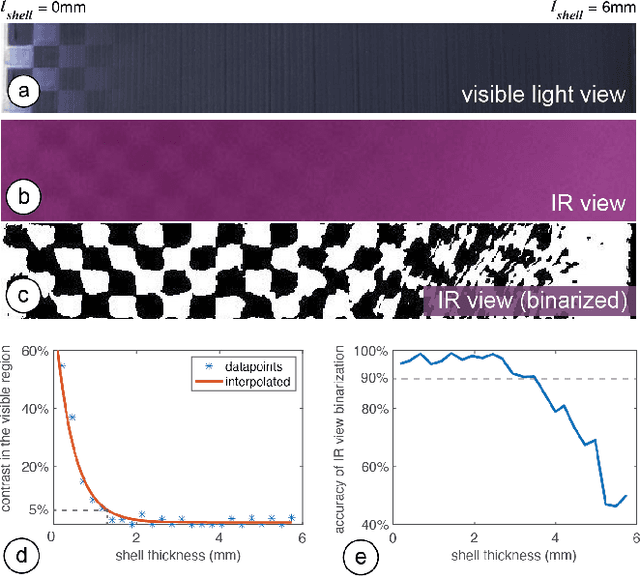

InfraredTags: Embedding Invisible AR Markers and Barcodes Using Low-Cost, Infrared-Based 3D Printing and Imaging Tools

Feb 12, 2022

Abstract: Existing approaches for embedding unobtrusive tags inside 3D objects require either complex fabrication or high-cost imaging equipment. We present InfraredTags, which are 2D markers and barcodes imperceptible to the naked eye that can be 3D printed as part of objects, and detected rapidly by low-cost near-infrared cameras. We achieve this by printing objects from an infrared-transmitting filament, which infrared cameras can see through, and by having air gaps inside for the tag's bits, which appear at a different intensity in the infrared image. We built a user interface that facilitates the integration of common tags (QR codes, ArUco markers) with the object geometry to make them 3D printable as InfraredTags. We also developed a low-cost infrared imaging module that augments existing mobile devices and decodes tags using our image processing pipeline. Our evaluation shows that the tags can be detected with little near-infrared illumination (0.2 lux) and from distances as far as 250 cm. We demonstrate how our method enables various applications, such as object tracking and embedding metadata for augmented reality and tangible interactions.
