Vimal Mollyn

SmartPoser: Arm Pose Estimation with a Smartphone and Smartwatch Using UWB and IMU Data

Sep 03, 2025

Abstract:The ability to track a user's arm pose could be valuable in a wide range of applications, including fitness, rehabilitation, augmented reality input, life logging, and context-aware assistants. Unfortunately, this capability is not readily available to consumers. Systems either require cameras, which carry privacy issues, or utilize multiple worn IMUs or markers. In this work, we describe how an off-the-shelf smartphone and smartwatch can work together to accurately estimate arm pose. Moving beyond prior work, we take advantage of more recent ultra-wideband (UWB) functionality on these devices to capture absolute distance between the two devices. This measurement is the perfect complement to inertial data, which is relative and suffers from drift. We quantify the performance of our software-only approach using off-the-shelf devices, showing it can estimate the wrist and elbow joints with a median positional error of 11.0 cm, without the user having to provide training data.

EclipseTouch: Touch Segmentation on Ad Hoc Surfaces using Worn Infrared Shadow Casting

Sep 03, 2025

Abstract:The ability to detect touch events on uninstrumented, everyday surfaces has been a long-standing goal for mixed reality systems. Prior work has shown that virtual interfaces bound to physical surfaces offer performance and ergonomic benefits over tapping at interfaces floating in the air. A wide variety of approaches have been previously developed, to which we contribute a new headset-integrated technique called EclipseTouch. We use a combination of a computer-triggered camera and one or more infrared emitters to create structured shadows, from which we can accurately estimate hover distance (mean error of 6.9 mm) and touch contact (98.0% accuracy). We discuss how our technique works across a range of conditions, including surface material, interaction orientation, and environmental lighting.

WheelPoser: Sparse-IMU Based Body Pose Estimation for Wheelchair Users

Sep 13, 2024

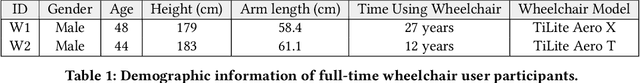

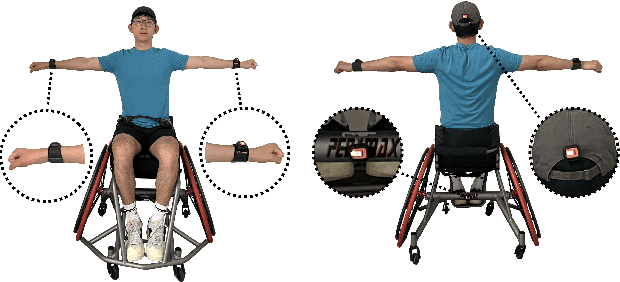

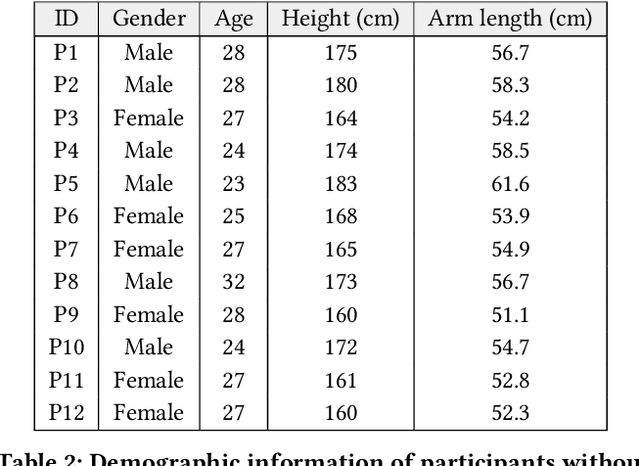

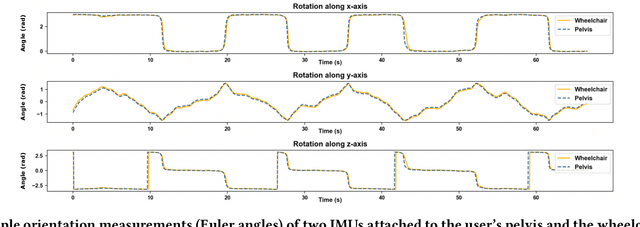

Abstract:Despite researchers having extensively studied various ways to track body pose on-the-go, most prior work does not take into account wheelchair users, leading to poor tracking performance. Wheelchair users could greatly benefit from this pose information to prevent injuries, monitor their health, identify environmental accessibility barriers, and interact with gaming and VR experiences. In this work, we present WheelPoser, a real-time pose estimation system specifically designed for wheelchair users. Our system uses only four strategically placed IMUs on the user's body and wheelchair, making it far more practical than prior systems using cameras and dense IMU arrays. WheelPoser is able to track a wheelchair user's pose with a mean joint angle error of 14.30 degrees and a mean joint position error of 6.74 cm, more than three times better than similar systems using sparse IMUs. To train our system, we collect a novel WheelPoser-IMU dataset, consisting of 167 minutes of paired IMU sensor and motion capture data of people in wheelchairs, including wheelchair-specific motions such as propulsion and pressure relief. Finally, we explore the potential application space enabled by our system and discuss future opportunities. Open-source code, models, and dataset can be found here: https://github.com/axle-lab/WheelPoser.

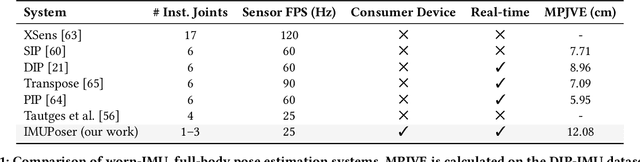

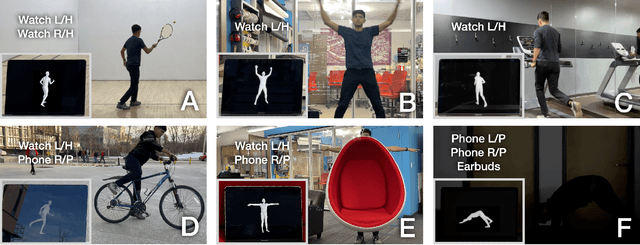

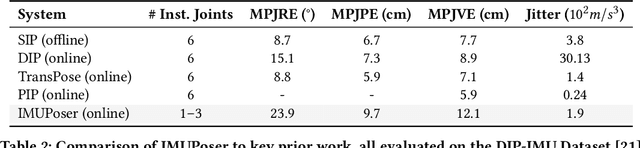

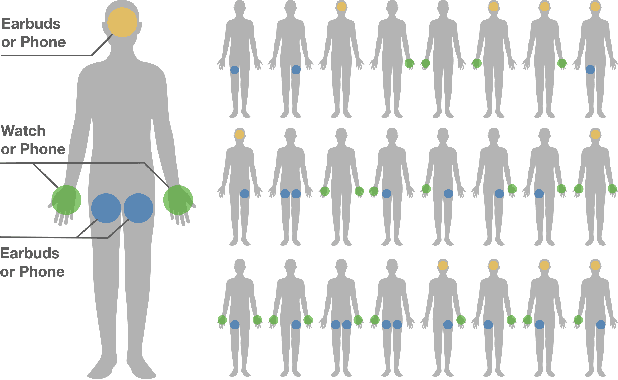

IMUPoser: Full-Body Pose Estimation using IMUs in Phones, Watches, and Earbuds

Apr 25, 2023

Abstract:Tracking body pose on-the-go could have powerful uses in fitness, mobile gaming, context-aware virtual assistants, and rehabilitation. However, users are unlikely to buy and wear special suits or sensor arrays to achieve this end. Instead, in this work, we explore the feasibility of estimating body pose using IMUs already in devices that many users own -- namely smartphones, smartwatches, and earbuds. This approach has several challenges, including noisy data from low-cost commodity IMUs, and the fact that the number of instrumentation points on a user's body is both sparse and in flux. Our pipeline receives whatever subset of IMU data is available, potentially from just a single device, and produces a best-guess pose. To evaluate our model, we created the IMUPoser Dataset, collected from 10 participants wearing or holding off-the-shelf consumer devices and across a variety of activity contexts. We provide a comprehensive evaluation of our system, benchmarking it on both our own and existing IMU datasets.

Eye Gaze Controlled Robotic Arm for Persons with SSMI

May 25, 2020

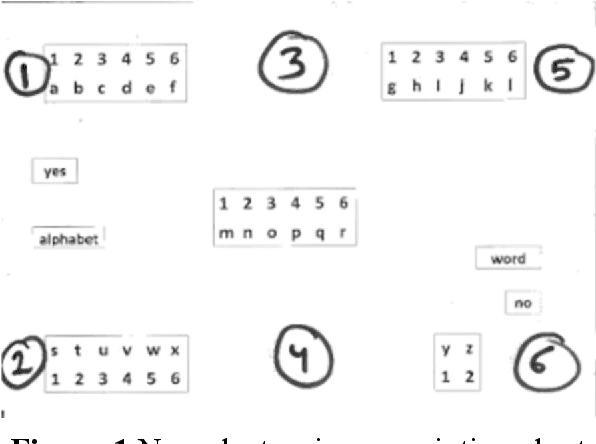

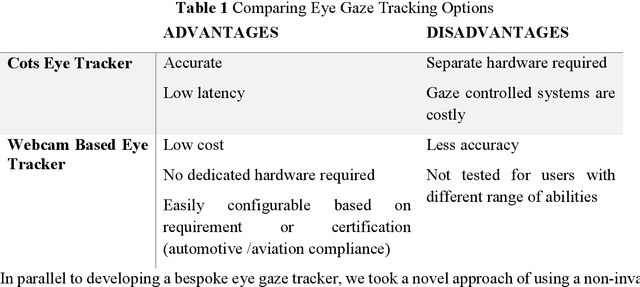

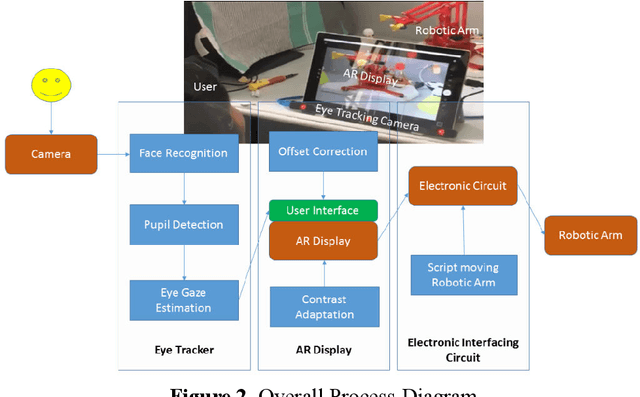

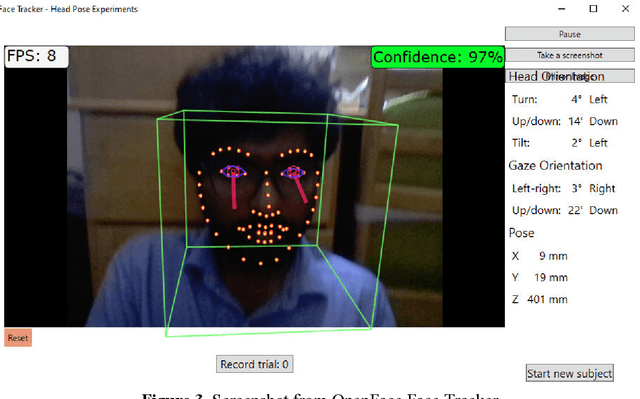

Abstract:Background: People with severe speech and motor impairment (SSMI) often use a technique called eye pointing to communicate with the outside world. A parent, caretaker, or teacher holds a printed board in front of them and interprets their intentions by manually analyzing their eye gaze. This technique is often error-prone and time-consuming, and it depends on a single caretaker. Objective: We aimed to automate the eye tracking process electronically using a commercially available tablet, computer, or laptop, without requiring any dedicated hardware for eye gaze tracking. The eye gaze tracker is used to develop a video see-through AR (augmented reality) display that controls a robotic device with eye gaze, and it was deployed for a fabric printing task. Methodology: We undertook a user-centred design process and separately evaluated the webcam-based gaze tracker and the video see-through human-robot interaction involving users with SSMI. We also report a user study on manipulating a robotic arm with the webcam-based eye gaze tracker. Results: Using our bespoke eye gaze controlled interface, able-bodied users can select one of nine regions of the screen in a median of less than 2 seconds, and users with SSMI can do so in a median of 4 seconds. Using the eye gaze controlled human-robot AR display, users with SSMI could undertake a representative pick-and-drop task in an average duration of less than 15 seconds, and could reach a randomly designated target within 60 seconds using a COTS eye tracker and in an average time of 2 minutes using the webcam-based eye gaze tracker.
