Patrick Carrington

OSCAR: Object Status and Contextual Awareness for Recipes to Support Non-Visual Cooking

Mar 07, 2025

Abstract: Following recipes while cooking is an important but difficult task for visually impaired individuals. We developed OSCAR (Object Status Context Awareness for Recipes), a novel approach that tracks recipe progress and provides context-aware feedback on the completion of cooking tasks by tracking object statuses. OSCAR leverages both Large Language Models (LLMs) and Vision-Language Models (VLMs) to manipulate recipe steps, extract object status information, align visual frames with object status, and provide a cooking progress tracking log. We evaluated OSCAR's recipe-following functionality using 173 YouTube cooking videos and 12 real-world non-visual cooking videos to demonstrate OSCAR's capability to track cooking steps and provide contextual guidance. Our results highlight the effectiveness of using object status to improve performance compared to the baseline by over 20% across different VLMs, and we present factors that impact prediction performance. Furthermore, we contribute a dataset of real-world non-visual cooking videos with step annotations as an evaluation benchmark.

WheelPoser: Sparse-IMU Based Body Pose Estimation for Wheelchair Users

Sep 13, 2024

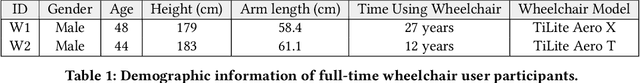

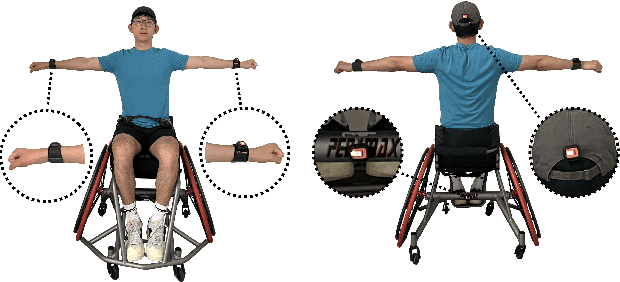

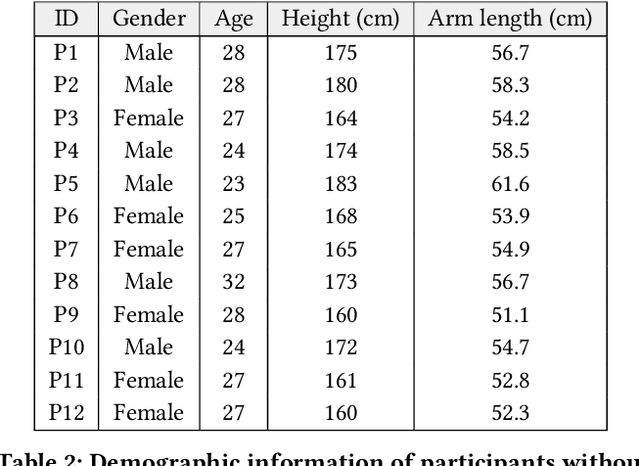

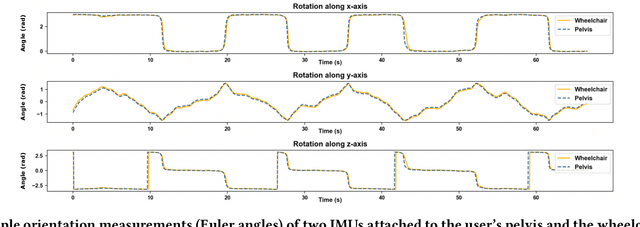

Abstract: Although researchers have extensively studied ways to track body pose on the go, most prior work does not take wheelchair users into account, leading to poor tracking performance. Wheelchair users could greatly benefit from this pose information to prevent injuries, monitor their health, identify environmental accessibility barriers, and interact with gaming and VR experiences. In this work, we present WheelPoser, a real-time pose estimation system specifically designed for wheelchair users. Our system uses only four strategically placed IMUs on the user's body and wheelchair, making it far more practical than prior systems using cameras and dense IMU arrays. WheelPoser is able to track a wheelchair user's pose with a mean joint angle error of 14.30 degrees and a mean joint position error of 6.74 cm, more than three times better than similar systems using sparse IMUs. To train our system, we collected a novel WheelPoser-IMU dataset, consisting of 167 minutes of paired IMU sensor and motion capture data of people in wheelchairs, including wheelchair-specific motions such as propulsion and pressure relief. Finally, we explore the potential application space enabled by our system and discuss future opportunities. Open-source code, models, and dataset can be found here: https://github.com/axle-lab/WheelPoser.
