Kaiyu Zheng

Dial-insight: Fine-tuning Large Language Models with High-Quality Domain-Specific Data Preventing Capability Collapse

Mar 14, 2024

Abstract:The efficacy of large language models (LLMs) is heavily dependent on the quality of the underlying data, particularly within specialized domains. A common challenge when fine-tuning LLMs for domain-specific applications is the potential degradation of the model's generalization capabilities. To address these issues, we propose a two-stage approach for the construction of production prompts designed to yield high-quality data. This method involves the generation of a diverse array of prompts that encompass a broad spectrum of tasks and exhibit a rich variety of expressions. Furthermore, we introduce a cost-effective, multi-dimensional quality assessment framework to ensure the integrity of the generated labeling data. Utilizing a dataset comprised of service provider and customer interactions from the real estate sector, we demonstrate a positive correlation between data quality and model performance. Notably, our findings indicate that the domain-specific proficiency of general LLMs can be enhanced through fine-tuning with data produced via our proposed method, without compromising their overall generalization abilities, even when exclusively domain-specific data is employed for fine-tuning.

A System for Generalized 3D Multi-Object Search

Mar 06, 2023

Abstract:Searching for objects is a fundamental skill for robots. As such, we expect object search to eventually become an off-the-shelf capability for robots, similar to e.g., object detection and SLAM. In contrast, however, no system for 3D object search exists that generalizes across real robots and environments. In this paper, building upon a recent theoretical framework that exploited the octree structure for representing belief in 3D, we present GenMOS (Generalized Multi-Object Search), the first general-purpose system for multi-object search (MOS) in a 3D region that is robot-independent and environment-agnostic. GenMOS takes as input point cloud observations of the local region, object detection results, and localization of the robot's view pose, and outputs a 6D viewpoint to move to through online planning. In particular, GenMOS uses point cloud observations in three ways: (1) to simulate occlusion; (2) to inform occupancy and initialize octree belief; and (3) to sample a belief-dependent graph of view positions that avoid obstacles. We evaluate our system both in simulation and on two real robot platforms. Our system enables, for example, a Boston Dynamics Spot robot to find a toy cat hidden underneath a couch in under one minute. We further integrate 3D local search with 2D global search to handle larger areas, demonstrating the resulting system in a 25m$^2$ lobby area.

Generalized Object Search

Jan 24, 2023

Abstract:Future collaborative robots must be capable of finding objects. As such a fundamental skill, we expect object search to eventually become an off-the-shelf capability for any robot, similar to e.g., object detection, SLAM, and motion planning. However, existing approaches either make unrealistic compromises (e.g., reduce the problem from 3D to 2D), resort to ad-hoc, greedy search strategies, or attempt to learn end-to-end policies in simulation that are yet to generalize across real robots and environments. This thesis argues that through using Partially Observable Markov Decision Processes (POMDPs) to model object search while exploiting structures in the human world (e.g., octrees, correlations) and in human-robot interaction (e.g., spatial language), a practical and effective system for generalized object search can be achieved. In support of this argument, I develop methods and systems for (multi-)object search in 3D environments under uncertainty due to limited field of view, occlusion, noisy, unreliable detectors, spatial correlations between objects, and possibly ambiguous spatial language (e.g., "The red car is behind Chase Bank"). Besides evaluation in simulators such as PyGame, AirSim, and AI2-THOR, I design and implement a robot-independent, environment-agnostic system for generalized object search in 3D and deploy it on the Boston Dynamics Spot robot, the Kinova MOVO robot, and the Universal Robots UR5e robotic arm, to perform object search in different environments. The system enables, for example, a Spot robot to find a toy cat hidden underneath a couch in a kitchen area in under one minute. This thesis also broadly surveys the object search literature, proposing taxonomies in object search problem settings, methods and systems.

Hierarchical Reinforcement Learning of Locomotion Policies in Response to Approaching Objects: A Preliminary Study

Mar 20, 2022

Abstract:Animals such as rabbits and birds can instantly generate locomotion behavior in reaction to a dynamic, approaching object, such as a person or a rock, despite having possibly never seen the object before and having limited perception of the object's properties. Recently, deep reinforcement learning has enabled complex kinematic systems such as humanoid robots to successfully move from point A to point B. Inspired by the observation of the innate reactive behavior of animals in nature, we hope to extend this progress in robot locomotion to settings where external, dynamic objects are involved whose properties are partially observable to the robot. As a first step toward this goal, we build a simulation environment in MuJoCo where a legged robot must avoid getting hit by a ball moving toward it. We explore whether prior locomotion experiences that animals typically possess benefit the learning of a reactive control policy under a proposed hierarchical reinforcement learning framework. Preliminary results support the claim that the learning becomes more efficient using this hierarchical reinforcement learning method, even when partial observability (radius-based object visibility) is taken into account.

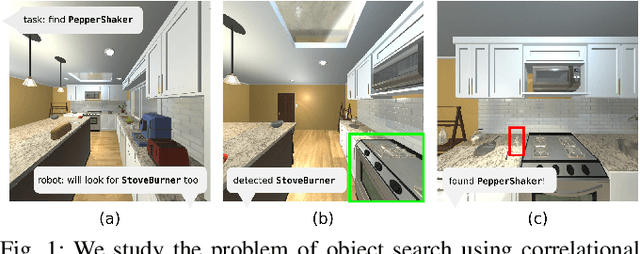

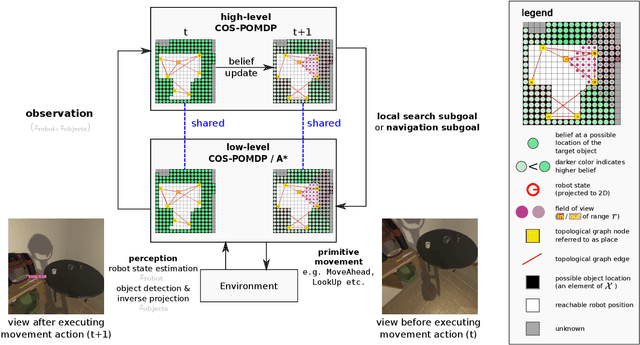

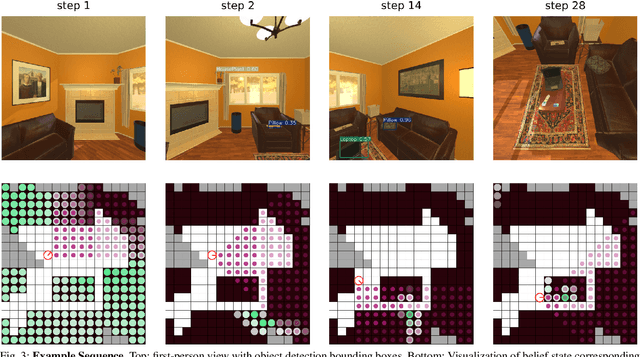

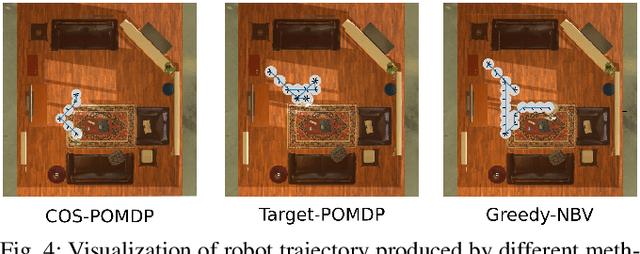

Towards Optimal Correlational Object Search

Oct 19, 2021

Abstract:In realistic applications of object search, robots will need to locate target objects in complex environments while coping with unreliable sensors, especially for small or hard-to-detect objects. In such settings, correlational information can be valuable for planning efficiently: when looking for a fork, the robot could start by locating the easier-to-detect refrigerator, since forks would probably be found nearby. Previous approaches to object search with correlational information typically resort to ad-hoc or greedy search strategies. In this paper, we propose the Correlational Object Search POMDP (COS-POMDP), which can be solved to produce search strategies that use correlational information. COS-POMDPs contain a correlation-based observation model that allows us to avoid the exponential blow-up of maintaining a joint belief about all objects, while preserving the optimal solution to this naive, exponential POMDP formulation. We propose a hierarchical planning algorithm to scale up COS-POMDP for practical domains. We conduct experiments using AI2-THOR, a realistic simulator of household environments, as well as YOLOv5, a widely-used object detector. Our results show that, particularly for hard-to-detect objects, such as scrub brush and remote control, our method offers the most robust performance compared to baselines that ignore correlations as well as a greedy, next-best view approach.
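The idea of using a correlated, easier-to-detect object to sharpen the belief about a target can be illustrated with a plain Bayes update. The following is a minimal sketch, not the COS-POMDP implementation: the function names, the four-cell grid, and the distance-decay likelihood `near_likelihood` are all assumptions made for illustration, and the sketch ignores the robot state and detector noise that a full observation model would include.

```python
import math


def update_belief(belief, detection, likelihood):
    """Bayes update b'(s) proportional to Pr(z | s) * b(s) over target locations."""
    unnormalized = {
        loc: p * likelihood(detection, loc) for loc, p in belief.items()
    }
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observation has zero likelihood under the belief")
    return {loc: p / total for loc, p in unnormalized.items()}


def near_likelihood(detected_loc, target_loc, scale=1.0):
    """Hypothetical correlation model (an assumption, not from the paper):
    the likelihood of detecting the correlated object at detected_loc
    decays exponentially with its distance from the target location."""
    return math.exp(-math.dist(detected_loc, target_loc) / scale)


# Uniform prior over four candidate cells for the target (e.g., a fork).
cells = [(0, 0), (0, 3), (3, 0), (3, 3)]
prior = {cell: 0.25 for cell in cells}

# The correlated object (e.g., a refrigerator) is detected at (0, 1).
posterior = update_belief(prior, (0, 1), near_likelihood)
best = max(posterior, key=posterior.get)
```

Because the update only multiplies the target belief by a per-location likelihood, the belief stays the size of the target's location space rather than growing with the number of correlated objects, which is the property the abstract attributes to the correlation-based observation model.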

Dialogue Object Search

Jul 22, 2021

Abstract:We envision robots that can collaborate and communicate seamlessly with humans. It is necessary for such robots to decide both what to say and how to act, while interacting with humans. To this end, we introduce a new task, dialogue object search: A robot is tasked to search for a target object (e.g., fork) in a human environment (e.g., kitchen), while engaging in a "video call" with a remote human who has additional but inexact knowledge about the target's location. That is, the robot conducts speech-based dialogue with the human, while sharing the image from its mounted camera. This task is challenging at multiple levels, from data collection, algorithm and system development, to evaluation. Despite these challenges, we believe such a task blocks the path towards more intelligent and collaborative robots. In this extended abstract, we motivate and introduce the dialogue object search task and analyze examples collected from a pilot study. We then discuss our next steps and conclude with several challenges on which we hope to receive feedback.

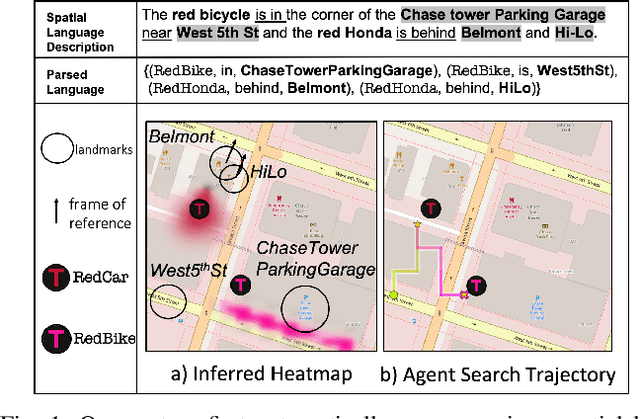

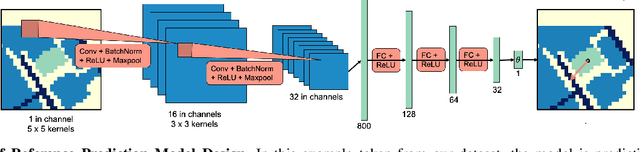

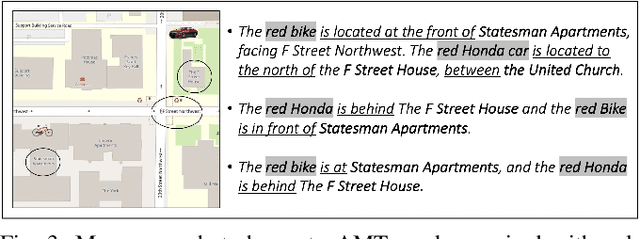

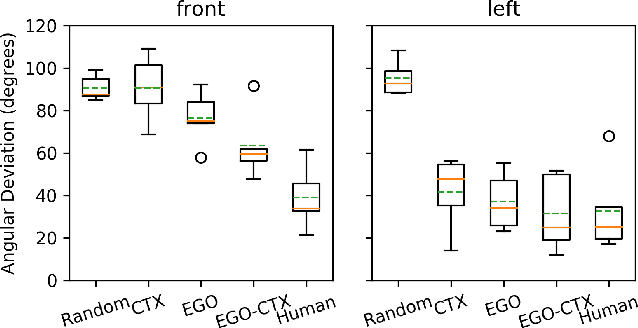

Spatial Language Understanding for Object Search in Partially Observed Cityscale Environments

Dec 04, 2020

Abstract:We present a system that enables robots to interpret spatial language as a distribution over object locations for effective search in partially observable cityscale environments. We introduce the spatial language observation space and formulate a stochastic observation model under the framework of Partially Observable Markov Decision Process (POMDP) which incorporates information extracted from the spatial language into the robot's belief. To interpret ambiguous, context-dependent prepositions (e.g., front), we propose a convolutional neural network model that learns to predict the language provider's relative frame of reference (FoR) given environment context. We demonstrate the generalizability of our FoR prediction model and object search system through cross-validation over areas of five cities, each with a 40,000m$^2$ footprint. End-to-end experiments in simulation show that our system achieves faster search and a higher success rate compared to a keyword-based baseline without spatial preposition understanding.

Multi-Resolution POMDP Planning for Multi-Object Search in 3D

May 07, 2020

Abstract:Robots operating in household environments must find objects on shelves, under tables, and in cupboards. Previous work often formulates the object search problem as a POMDP (Partially Observable Markov Decision Process), yet constrains the search space to 2D. We propose a new approach that enables the robot to efficiently search for objects in 3D, taking occlusions into account. We model the problem as an object-oriented POMDP, where the robot receives a volumetric observation from a viewing frustum and must produce a policy to efficiently search for objects. To address the challenge of large state and observation spaces, we first propose a per-voxel observation model which drastically reduces the observation size necessary for planning. Then, we present a novel octree-based belief representation which captures beliefs at different resolutions and supports efficient exact belief update. Finally, we design an online multi-resolution planning algorithm that leverages the resolution layers in the octree structure as levels of abstraction to the original POMDP problem. Our evaluation in a simulated 3D domain shows that, as the problem scales, our approach significantly outperforms baselines without resolution hierarchy by 25%-35% in cumulative reward. We demonstrate the practicality of our approach on a torso-actuated mobile robot searching for objects in areas of a cluttered lab environment where objects appear on surfaces at different heights.
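To make the notion of a belief held at several resolutions concrete, here is a toy sketch under stated assumptions. It is not the authors' data structure: the class name `OctreeBelief`, the uniform default of one unit of mass per ground voxel, and the lazy dictionary storage are all hypothetical choices for illustration. The only property it aims to show is that a coarse node's value equals the sum of the ground voxels it covers, so updating one voxel touches only its ancestors.

```python
class OctreeBelief:
    """Toy multi-resolution belief over a cubic grid of side 2**depth.

    A node is keyed by (x, y, z, res): a cube whose side is `res` ground
    voxels, with coordinates given in units of `res`. Node values are
    unnormalized; an unstored node defaults to res**3, i.e. a uniform
    prior of one unit of mass per ground voxel (an assumption).
    """

    def __init__(self, depth):
        self.depth = depth
        self.values = {}  # only nodes that differ from the default

    def _value(self, x, y, z, res):
        return self.values.get((x, y, z, res), float(res ** 3))

    def set_voxel(self, x, y, z, value):
        """Set a ground voxel's mass and propagate the change upward."""
        delta = value - self._value(x, y, z, 1)
        res = 1
        while res <= 2 ** self.depth:
            self.values[(x, y, z, res)] = self._value(x, y, z, res) + delta
            # Move to the parent node, which is twice as wide.
            x, y, z, res = x // 2, y // 2, z // 2, res * 2

    def prob(self, x, y, z, res=1):
        """Normalized probability that the object lies in the given node."""
        root = self._value(0, 0, 0, 2 ** self.depth)
        return self._value(x, y, z, res) / root


# A 4x4x4 region (depth 2) with a uniform prior over 64 ground voxels.
belief = OctreeBelief(depth=2)
belief.set_voxel(3, 3, 3, 10.0)  # raise the mass of one ground voxel

p_voxel = belief.prob(3, 3, 3)           # ground resolution
p_octant = belief.prob(1, 1, 1, res=2)   # the octant containing that voxel
p_all_octants = sum(
    belief.prob(i, j, k, res=2)
    for i in range(2) for j in range(2) for k in range(2)
)
```

In this sketch a single voxel update costs one dictionary write per level of the tree, and querying a coarse node needs no summation at read time, which is the kind of trade-off a multi-resolution planner can exploit.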

pomdp_py: A Framework to Build and Solve POMDP Problems

Apr 21, 2020

Abstract:In this paper, we present pomdp_py, a general purpose Partially Observable Markov Decision Process (POMDP) library written in Python and Cython. Existing POMDP libraries often hinder accessibility and efficient prototyping due to the underlying programming language or interfaces, and require extra complexity in software toolchain to integrate with robotics systems. pomdp_py features simple and comprehensive interfaces capable of describing large discrete or continuous (PO)MDP problems. Here, we summarize the design principles and describe in detail the programming model and interfaces in pomdp_py. We also describe intuitive integration of this library with ROS (Robot Operating System), which enabled our torso-actuated robot to perform object search in 3D. Finally, we note directions to improve and extend this library for POMDP planning and beyond.

ROS Navigation Tuning Guide

Apr 08, 2019

Abstract:The ROS navigation stack is a powerful tool for moving mobile robots from place to place reliably. The job of the navigation stack is to produce a safe path for the robot to execute by processing data from odometry, sensors, and the environment map. Maximizing the performance of this navigation stack requires some fine-tuning of parameters, and this is not as simple as it looks. Someone with only a superficial grasp of the concepts and reasoning may try things at random and waste a lot of time. This article intends to guide the reader through the process of fine-tuning navigation parameters. It serves as a reference for when someone needs to know the "how" and "why" of setting the values of key parameters. This guide assumes that the reader has already set up the navigation stack and is ready to optimize it. This is also a summary of my work with the ROS navigation stack.
