Juan Pablo Munoz

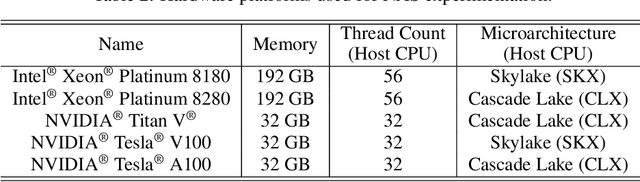

The Landscape and Challenges of HPC Research and LLMs

Feb 07, 2024

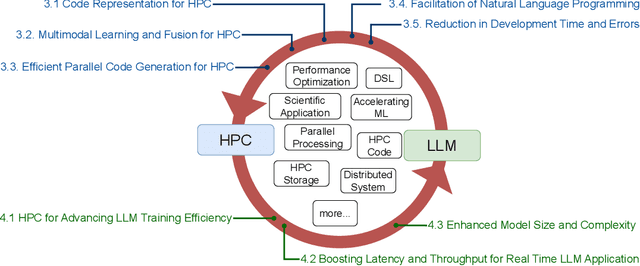

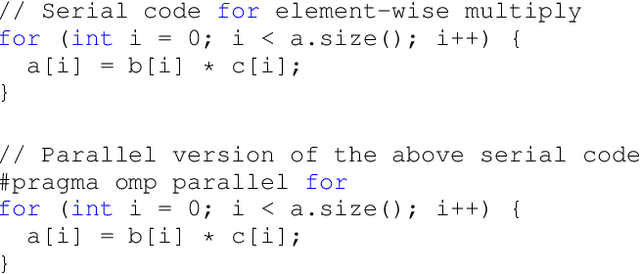

Abstract: Recently, language models (LMs), especially large language models (LLMs), have revolutionized the field of deep learning. Both encoder-decoder models and prompt-based techniques have shown immense potential for natural language processing and code-based tasks. Over the past several years, many research labs and institutions have invested heavily in high-performance computing, approaching or breaching exascale performance levels. In this paper, we posit that adapting and utilizing such language model-based techniques for tasks in high-performance computing (HPC) would be very beneficial. This study presents our reasoning behind the aforementioned position and highlights how existing ideas can be improved and adapted for HPC tasks.

A Hardware-Aware Framework for Accelerating Neural Architecture Search Across Modalities

May 19, 2022

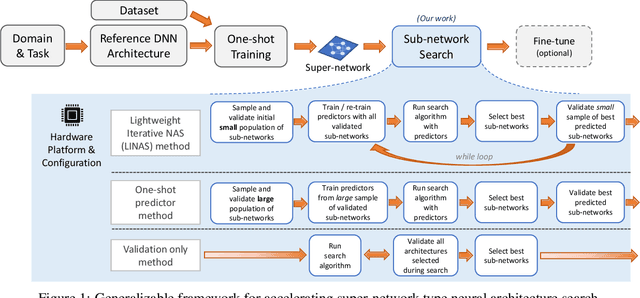

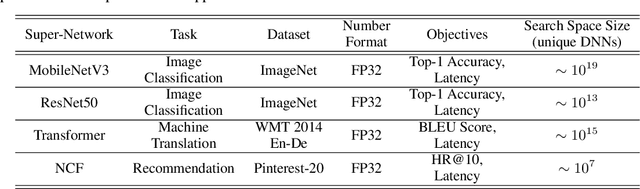

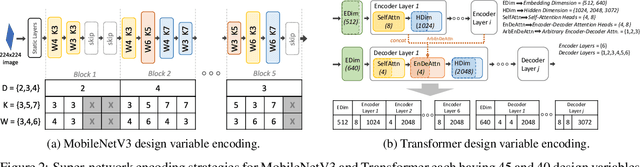

Abstract: Recent advances in Neural Architecture Search (NAS), such as one-shot NAS, offer the ability to extract specialized hardware-aware sub-network configurations from a task-specific super-network. While considerable effort has been devoted to improving the first stage, namely the training of the super-network, the search for derivative high-performing sub-networks is still under-explored. Popular methods decouple the super-network training from the sub-network search and use performance predictors to reduce the computational burden of searching on different hardware platforms. We propose a flexible search framework that automatically and efficiently finds sub-networks optimized for different performance metrics and hardware configurations. Specifically, we show how evolutionary algorithms can be paired with lightly trained objective predictors in an iterative cycle to accelerate architecture search in a multi-objective setting for various modalities, including machine translation and image classification.
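
The iterative cycle described in this abstract (measure a few sub-networks, fit a cheap predictor, let an evolutionary step propose candidates scored by that predictor, then measure the most promising ones) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration, not the paper's implementation: the search space (`CHOICES`, `NUM_LAYERS`), the toy `measure` function standing in for an expensive on-hardware evaluation, and the inverse-distance-weighted `predict` function standing in for a lightly trained objective predictor.

```python
import random

CHOICES = [0, 1, 2, 3]  # hypothetical per-layer options
NUM_LAYERS = 6          # hypothetical number of searchable layers


def measure(config):
    """Toy stand-in for an expensive evaluation: returns (accuracy, latency)."""
    accuracy = sum((c + 1) ** 0.5 for c in config)  # diminishing returns
    latency = sum(1.0 + c for c in config)          # larger choices cost more
    return accuracy, latency


def predict(config, measured):
    """Cheap surrogate: inverse-distance-weighted average of measured results."""
    num_acc = num_lat = total = 0.0
    for known, (acc, lat) in measured.items():
        dist = sum(a != b for a, b in zip(known, config))  # Hamming distance
        weight = 1.0 / (1 + dist) ** 2
        num_acc += weight * acc
        num_lat += weight * lat
        total += weight
    return num_acc / total, num_lat / total


def dominates(p, q):
    """True if p is at least as accurate and as fast as q, and not identical."""
    return p[0] >= q[0] and p[1] <= q[1] and p != q


def pareto_front(scored):
    """Return the configs in `scored` that no other entry dominates."""
    return [c for c, s in scored.items()
            if not any(dominates(t, s) for t in scored.values())]


def mutate(config, rng):
    """Resample one randomly chosen layer."""
    child = list(config)
    child[rng.randrange(len(child))] = rng.choice(CHOICES)
    return tuple(child)


def search(iterations=3, population=20, per_round=8, seed=0):
    rng = random.Random(seed)
    measured = {}
    # Seed the predictor with a handful of real measurements.
    while len(measured) < per_round:
        config = tuple(rng.choice(CHOICES) for _ in range(NUM_LAYERS))
        measured[config] = measure(config)
    for _ in range(iterations):
        # Evolutionary step: mutate current Pareto-optimal configs and
        # score the children with the predictor instead of measuring them.
        parents = pareto_front(measured)
        candidates = {}
        for _ in range(population):
            child = mutate(rng.choice(parents), rng)
            if child not in measured:
                candidates[child] = predict(child, measured)
        # Spend real measurements only on the predicted-best candidates.
        for config in pareto_front(candidates)[:per_round]:
            measured[config] = measure(config)
    return measured, pareto_front(measured)


if __name__ == "__main__":
    measured, front = search()
    print(f"measured {len(measured)} configs, {len(front)} on the Pareto front")
```

The point of the sketch is the budget split: the predictor scores every candidate, while the costly `measure` call runs only on the few candidates the predictor ranks as non-dominated, and each round's measurements feed the predictor in the next round.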

Improving Place Recognition Using Dynamic Object Detection

Feb 11, 2020

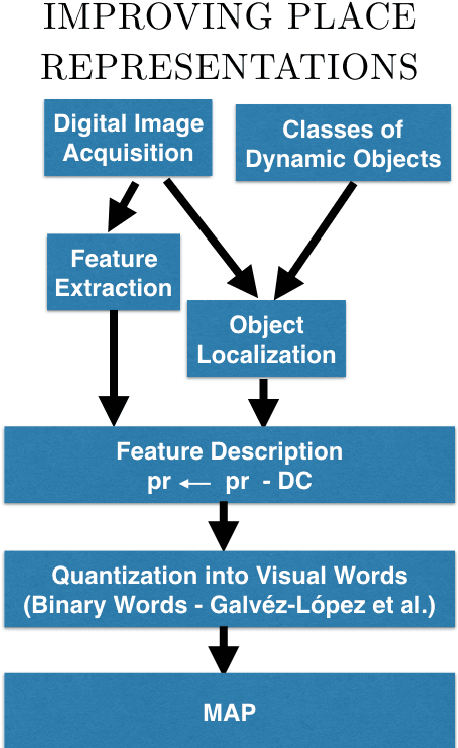

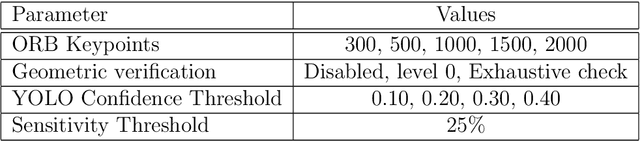

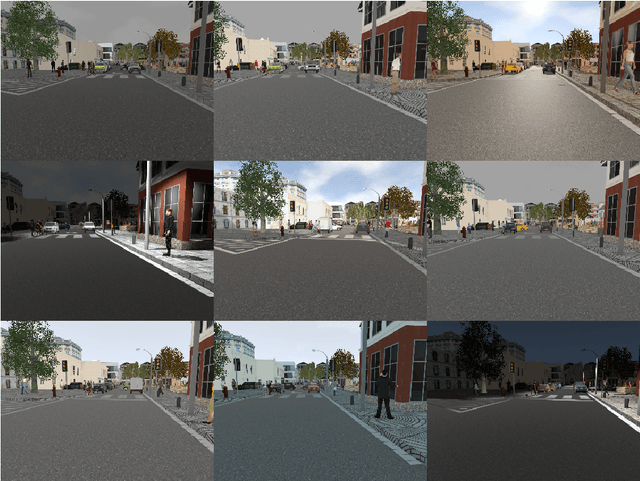

Abstract: Traditional appearance-based place recognition algorithms based on handcrafted features have proven inadequate in environments with a significant presence of dynamic objects, that is, objects that may or may not be present in an agent's subsequent visits. Place representations built from features extracted using Deep Learning approaches have gained popularity for their robustness and because the algorithms that use them yield better accuracy. Nevertheless, handcrafted features are still popular in devices that have limited resources. This article presents a novel approach that improves place recognition in environments populated by dynamic objects by incorporating knowledge of these objects to improve the overall quality of the place representations used for matching. The proposed approach fuses object detection and place description, Deep Learning and handcrafted features, with the benefit of reducing memory and storage requirements. The approach was evaluated using both synthetic and real-world datasets, and this article demonstrates that it yields improved place recognition accuracy. Its adoption will significantly improve place recognition results in environments populated by dynamic objects and explored by devices with limited resources, with particular utility in both indoor and outdoor environments.
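
One plausible reading of "incorporating knowledge of dynamic objects" is to discard handcrafted features that fall inside the bounding boxes of detected dynamic objects before building the place representation. The sketch below illustrates that reading only; it is not taken from the paper. The class list `DYNAMIC_CLASSES`, the `(label, box)` detection format, the visual-word feature format, and the cosine-similarity matching are all assumptions.

```python
from collections import Counter
from math import sqrt

DYNAMIC_CLASSES = {"person", "car", "bicycle"}  # assumed set of dynamic labels


def inside(point, box):
    """True if (x, y) lies within the box (x0, y0, x1, y1)."""
    x, y = point
    x0, y0, x1, y1 = box
    return x0 <= x <= x1 and y0 <= y <= y1


def place_histogram(features, detections):
    """Build a visual-word histogram from features outside dynamic objects.

    features:   list of ((x, y), visual_word_id) from a handcrafted extractor
    detections: list of (label, box) from an object detector
    """
    dynamic_boxes = [box for label, box in detections if label in DYNAMIC_CLASSES]
    static_words = [word for point, word in features
                    if not any(inside(point, box) for box in dynamic_boxes)]
    return Counter(static_words)


def cosine(h1, h2):
    """Cosine similarity between two sparse histograms."""
    dot = sum(h1[w] * h2[w] for w in h1.keys() & h2.keys())
    n1 = sqrt(sum(v * v for v in h1.values()))
    n2 = sqrt(sum(v * v for v in h2.values()))
    return dot / (n1 * n2) if n1 and n2 else 0.0


# First visit: a parked car contributes two extra visual words (7 and 8).
visit1 = [((10, 10), 1), ((20, 15), 2), ((30, 40), 3), ((55, 55), 7), ((60, 58), 8)]
boxes1 = [("car", (50, 50, 70, 70))]
# Second visit: same place, the car is gone.
visit2 = [((11, 10), 1), ((21, 14), 2), ((29, 41), 3)]

filtered = cosine(place_histogram(visit1, boxes1), place_histogram(visit2, []))
unfiltered = cosine(place_histogram(visit1, []), place_histogram(visit2, []))
```

In this toy example the filtered representations of the two visits match more closely than the unfiltered ones, because the words contributed by the car no longer count against the match.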
