Jincheng Zhang

Mamba-Diffusion Model with Learnable Wavelet for Controllable Symbolic Music Generation

May 06, 2025

Abstract:The recent surge in the popularity of diffusion models for image synthesis has attracted new attention to their potential for generation tasks in other domains. However, their applications to symbolic music generation remain largely under-explored because symbolic music is typically represented as sequences of discrete events and standard diffusion models are not well-suited for discrete data. We represent symbolic music as image-like pianorolls, facilitating the use of diffusion models for the generation of symbolic music. Moreover, this study introduces a novel diffusion model that incorporates our proposed Transformer-Mamba block and learnable wavelet transform. Classifier-free guidance is utilised to generate symbolic music with target chords. Our evaluation shows that our method achieves compelling results in terms of music quality and controllability, outperforming the strong baseline in pianoroll generation. Our code is available at https://github.com/jinchengzhanggg/proffusion.
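As a rough illustration of the classifier-free guidance mentioned in the abstract above, the sketch below blends an unconditional and a chord-conditioned noise prediction. The function name `cfg_combine`, the `guidance_scale` parameter, and the toy values are assumptions made for illustration; they are not taken from the paper or its repository, and the paper may use a different parameterisation of the guidance weight.

```python
def cfg_combine(eps_uncond, eps_cond, guidance_scale):
    """Blend two noise predictions with classifier-free guidance.

    Uses one common form of the rule (an assumption, not the paper's code):
        eps = eps_uncond + guidance_scale * (eps_cond - eps_uncond)
    A scale of 0 returns the unconditional prediction, 1 returns the
    conditional one, and larger values push further toward the condition.
    """
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same length")
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Toy example: two-element "noise predictions" (hypothetical values).
guided = cfg_combine([0.0, 1.0], [1.0, 3.0], guidance_scale=2.0)
```

In a real sampler the two predictions would come from the same network, called once with the chord condition and once with the condition dropped.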

Breaking the Stigma! Unobtrusively Probe Symptoms in Depression Disorder Diagnosis Dialogue

Jan 25, 2025

Abstract:Stigma has emerged as one of the major obstacles to effectively diagnosing depression, as it prevents users from open conversations about their struggles. This requires advanced questioning skills to carefully probe the presence of specific symptoms in an unobtrusive manner. While recent efforts have been made on depression-diagnosis-oriented dialogue systems, they largely ignore this problem, ultimately hampering their practical utility. To this end, we propose a novel and effective method, UPSD$^{4}$, developing a series of strategies to promote a sense of unobtrusiveness within the dialogue system and assessing depression disorder by probing symptoms. We experimentally show that UPSD$^{4}$ demonstrates a significant improvement over current baselines, including unobtrusiveness evaluation of dialogue content and diagnostic accuracy. We believe our work contributes to developing more accessible and user-friendly tools for addressing the widespread need for depression diagnosis.

Feature-Aware Noise Contrastive Learning For Unsupervised Red Panda Re-Identification

May 01, 2024

Abstract:To facilitate the re-identification (Re-ID) of individual animals, existing methods primarily focus on maximizing feature similarity within the same individual and enhancing distinctiveness between different individuals. However, most of them still rely on supervised learning and require substantial labeled data, which is challenging to obtain. To avoid this issue, we propose a Feature-Aware Noise Contrastive Learning (FANCL) method to explore an unsupervised learning solution, which is then validated on the task of red panda re-ID. FANCL employs a Feature-Aware Noise Addition module to produce noised images that conceal critical features and designs two contrastive learning modules to calculate the losses. Firstly, a feature consistency module is designed to bridge the gap between the original and noised features. Secondly, the neural networks are trained through a cluster contrastive learning module. Through these more challenging learning tasks, FANCL can adaptively extract deeper representations of red pandas. The experimental results on a set of red panda images collected in both indoor and outdoor environments prove that FANCL outperforms several related state-of-the-art unsupervised methods, achieving high performance comparable to supervised learning methods.

Unveiling Ancient Maya Settlements Using Aerial LiDAR Image Segmentation

Mar 09, 2024

Abstract:Manual identification of archaeological features in LiDAR imagery is labor-intensive, costly, and requires archaeological expertise. This paper shows how recent advancements in deep learning (DL) present efficient solutions for accurately segmenting archaeological structures in aerial LiDAR images using the YOLOv8 neural network. The proposed approach uses novel pre-processing of the raw LiDAR data and dataset augmentation methods to produce trained YOLOv8 networks with improved accuracy, precision, and recall for the segmentation of two important Maya structure types: annular structures and platforms. The results show an IoU performance of 0.842 for platforms and 0.809 for annular structures, both of which outperform existing approaches. Further, analysis by domain experts considers the topological consistency of segmented regions and performance vs. area, providing important insights. The approach automates time-consuming LiDAR image labeling, which significantly accelerates accurate analysis of historical landscapes.
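The IoU figures quoted in the abstract above refer to intersection over union between predicted and reference regions. The minimal sketch below shows that metric on small binary masks. The function name `mask_iou`, the nested-list mask format, and the choice to return 1.0 when both masks are empty are assumptions for illustration, not details from the paper.

```python
def mask_iou(pred, target):
    """Intersection over union of two binary masks given as nested lists.

    Both masks are assumed to have the same shape. If neither mask has any
    foreground pixels the union is empty; this sketch returns 1.0 in that
    case (a convention chosen here, not one stated in the paper).
    """
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    return intersection / union if union else 1.0


# Toy 2x2 masks (hypothetical): one shared pixel, three pixels in the union.
score = mask_iou([[1, 1], [0, 0]], [[1, 0], [1, 0]])
```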

Design and Flight Demonstration of a Quadrotor for Urban Mapping and Target Tracking Research

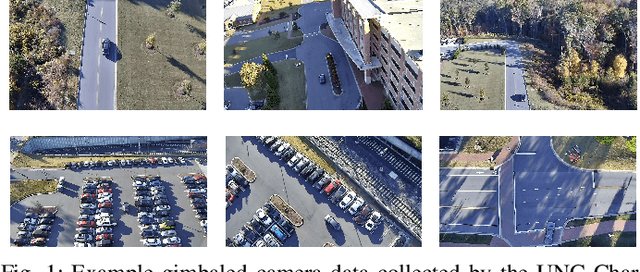

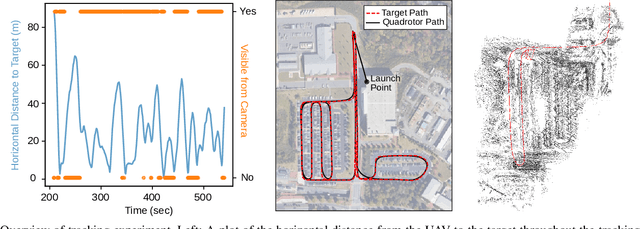

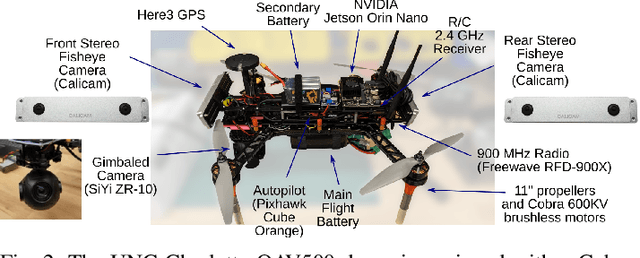

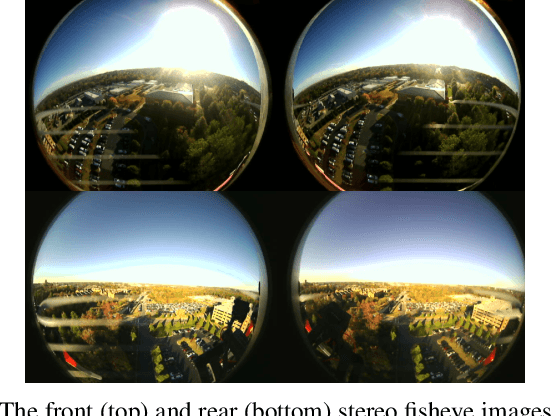

Feb 20, 2024

Abstract:This paper describes the hardware design and flight demonstration of a small quadrotor with imaging sensors for urban mapping, hazard avoidance, and target tracking research. The vehicle is equipped with five cameras, including two pairs of fisheye stereo cameras that enable a nearly omnidirectional view and a two-axis gimbaled camera. An onboard NVIDIA Jetson Orin Nano computer running the Robot Operating System software is used for data collection. An autonomous tracking behavior was implemented to coordinate the motion of the quadrotor and gimbaled camera to track a moving GPS coordinate. The data collection system was demonstrated through a flight test that tracked a moving GPS-tagged vehicle through a series of roads and parking lots. A map of the environment was reconstructed from the collected images using the Direct Sparse Odometry (DSO) algorithm. The performance of the quadrotor was also characterized by acoustic noise, communication range, battery voltage in hover, and maximum speed tests.

Cesium Tiles for High-realism Simulation and Comparing SLAM Results in Corresponding Virtual and Real-world Environments

Jan 15, 2024

Abstract:This article discusses the use of a simulated environment to predict algorithm results in the real world. Simulators are crucial in allowing researchers to test algorithms, sensor integration, and navigation systems without deploying expensive hardware. This article examines how the AirSim simulator, Unreal Engine, and Cesium plugin can be used to generate simulated digital twin models of real-world locations. Several technical challenges in completing the analysis are discussed and the technical solutions are detailed in this article. The work investigates how to assess mapping results for a real-life experiment using Cesium Tiles provided by digital twins of the experimental location. This is accompanied by a description of a process for duplicating real-world flights in simulation. The performance of these methods is evaluated by analyzing real-life and experimental image telemetry with the Direct Sparse Odometry (DSO) mapping algorithm. Results indicate that Cesium Tiles environments can provide highly accurate models of ground truth geometry after careful alignment. Further, results from real-life and simulated telemetry analysis indicate that the virtual simulation results accurately predict real-life results. Findings indicate that the algorithm results in real life and in the simulated duplicate exhibited a high degree of similarity. This indicates that the use of Cesium Tiles environments as a virtual digital twin for real-life experiments will provide representative results for such algorithms. The impact of this can be significant, potentially allowing expansive virtual testing of robotic systems at specific deployment locations to develop solutions that are tailored to the environment and that potentially outperform solutions meant to work in completely generic environments.

UAV-borne Mapping Algorithms for Canopy-Level and High-Speed Drone Applications

Jan 12, 2024

Abstract:This article presents a comprehensive review and analysis of state-of-the-art mapping algorithms for UAV (Unmanned Aerial Vehicle) applications, focusing on canopy-level and high-speed scenarios. It also explores sensor technologies suitable for UAV mapping, assessing their capabilities to provide measurements that meet the requirements of fast UAV mapping. Furthermore, the study conducts extensive experiments in a simulated environment to evaluate the performance of three distinct mapping algorithms: Direct Sparse Odometry (DSO), Stereo DSO (SDSO), and DSO Lite (DSOL). The experiments delve into mapping accuracy and mapping speed, providing valuable insights into the strengths and limitations of each algorithm. The results highlight the versatility and shortcomings of these algorithms in meeting the demands of modern UAV applications. The findings contribute to a nuanced understanding of UAV mapping dynamics, emphasizing their applicability in complex environments and high-speed scenarios. This research not only serves as a benchmark for mapping algorithm comparisons but also offers practical guidance for selecting sensors tailored to specific UAV mapping applications.

CTC Blank Triggered Dynamic Layer-Skipping for Efficient CTC-based Speech Recognition

Jan 04, 2024

Abstract:Deploying end-to-end speech recognition models with limited computing resources remains challenging, despite their impressive performance. Given the gradual increase in model size and the wide range of model applications, selectively executing model components for different inputs to improve the inference efficiency is of great interest. In this paper, we propose a dynamic layer-skipping method that leverages the CTC blank output from intermediate layers to trigger the skipping of the last few encoder layers for frames with high blank probabilities. Furthermore, we factorize the CTC output distribution and perform knowledge distillation on intermediate layers to reduce computation and improve recognition accuracy. Experimental results show that by utilizing the CTC blank, the encoder layer depth can be adjusted dynamically, resulting in 29% acceleration of the CTC model inference with minor performance degradation.
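The skipping rule described in the abstract above can be sketched as a per-frame threshold on the blank probability read from an intermediate layer. Everything named below (`select_frames_to_skip`, `layer_calls`, the 0.9 threshold, the layer counts) is a hypothetical illustration and not the authors' implementation; the abstract does not state the threshold or the exit layer.

```python
def select_frames_to_skip(blank_probs, threshold=0.9):
    """Split frame indexes by intermediate-layer blank probability.

    Frames whose blank probability reaches the (assumed) threshold are
    marked to skip the remaining encoder layers; the rest continue.
    """
    skip, keep = [], []
    for index, prob in enumerate(blank_probs):
        if prob >= threshold:
            skip.append(index)
        else:
            keep.append(index)
    return skip, keep


def layer_calls(num_frames, num_layers, exit_layer, num_skipped):
    """Count per-frame layer evaluations when skipped frames stop early.

    Skipped frames pass through `exit_layer` layers only; all other
    frames pass through the full `num_layers` stack.
    """
    return (num_frames - num_skipped) * num_layers + num_skipped * exit_layer


# Toy example: four frames, two of them confidently blank (hypothetical values).
skip, keep = select_frames_to_skip([0.95, 0.10, 0.99, 0.50])
calls = layer_calls(num_frames=4, num_layers=12, exit_layer=8, num_skipped=len(skip))
```

Comparing `calls` with `num_frames * num_layers` gives a crude count of the layer evaluations avoided; it ignores any overhead from the intermediate CTC head itself.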

Composer Style-specific Symbolic Music Generation Using Vector Quantized Discrete Diffusion Models

Oct 21, 2023

Abstract:Emerging Denoising Diffusion Probabilistic Models (DDPM) have become increasingly utilised because of promising results they have achieved in diverse generative tasks with continuous data, such as image and sound synthesis. Nonetheless, the success of diffusion models has not been fully extended to discrete symbolic music. We propose to combine a vector quantized variational autoencoder (VQ-VAE) and discrete diffusion models for the generation of symbolic music with desired composer styles. The trained VQ-VAE can represent symbolic music as a sequence of indexes that correspond to specific entries in a learned codebook. Subsequently, a discrete diffusion model is used to model the VQ-VAE's discrete latent space. The diffusion model is trained to generate intermediate music sequences consisting of codebook indexes, which are then decoded to symbolic music using the VQ-VAE's decoder. The results demonstrate our model can generate symbolic music with target composer styles that meet the given conditions with a high accuracy of 72.36%.
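To make the codebook-index representation in the abstract above concrete, the sketch below maps latent vectors to the index of their nearest codebook entry and back. The function names, the squared Euclidean distance, and the tiny two-entry codebook are assumptions for illustration; the paper's encoder, decoder, and codebook are learned and are not reproduced here.

```python
def quantize(vectors, codebook):
    """Return, for each vector, the index of its nearest codebook entry.

    Nearest is measured by squared Euclidean distance (an assumption of
    this sketch). Ties resolve to the lowest index.
    """
    indexes = []
    for vector in vectors:
        distances = [
            sum((a - b) ** 2 for a, b in zip(vector, entry)) for entry in codebook
        ]
        indexes.append(distances.index(min(distances)))
    return indexes


def lookup(indexes, codebook):
    """Map a sequence of codebook indexes back to their entries."""
    return [codebook[i] for i in indexes]


# Toy codebook with two 2-D entries (hypothetical values).
codebook = [[0.0, 0.0], [1.0, 1.0]]
indexes = quantize([[0.1, 0.2], [0.9, 0.8]], codebook)
entries = lookup(indexes, codebook)
```

In the setting the abstract describes, a discrete diffusion model would generate sequences like `indexes`, and a decoder would turn the looked-up entries into symbolic music.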

Fast Diffusion GAN Model for Symbolic Music Generation Controlled by Emotions

Oct 21, 2023

Abstract:Diffusion models have shown promising results for a wide range of generative tasks with continuous data, such as image and audio synthesis. However, little progress has been made on using diffusion models to generate discrete symbolic music because this new class of generative models is not well suited for discrete data and its iterative sampling process is computationally expensive. In this work, we propose a diffusion model combined with a Generative Adversarial Network, aiming to (i) alleviate one of the remaining challenges in algorithmic music generation, which is the control of generation towards a target emotion, and (ii) mitigate the slow sampling drawback of diffusion models applied to symbolic music generation. We first used a trained Variational Autoencoder to obtain embeddings of a symbolic music dataset with emotion labels and then used those to train a diffusion model. Our results demonstrate the successful control of our diffusion model to generate symbolic music with a desired emotion. Our model achieves several orders of magnitude improvement in computational cost, requiring merely four time steps to denoise, while the number of steps required by current state-of-the-art diffusion models for symbolic music generation is on the order of thousands.
