Andrew Willis

Design and Flight Demonstration of a Quadrotor for Urban Mapping and Target Tracking Research

Feb 20, 2024

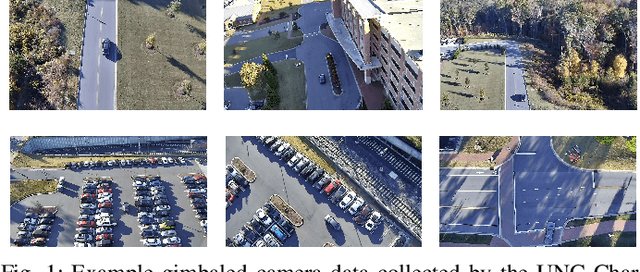

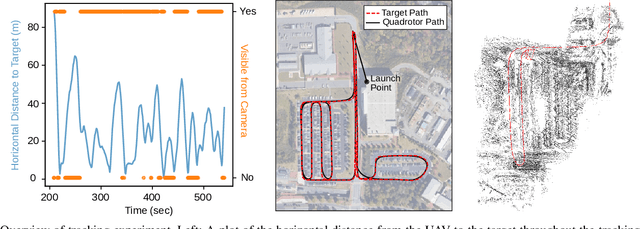

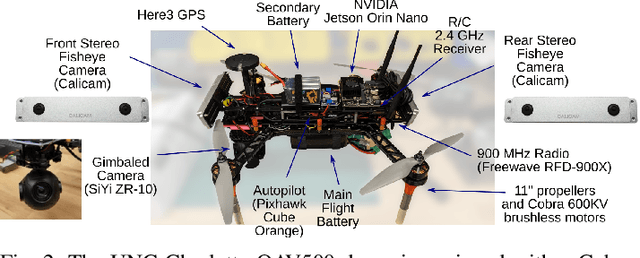

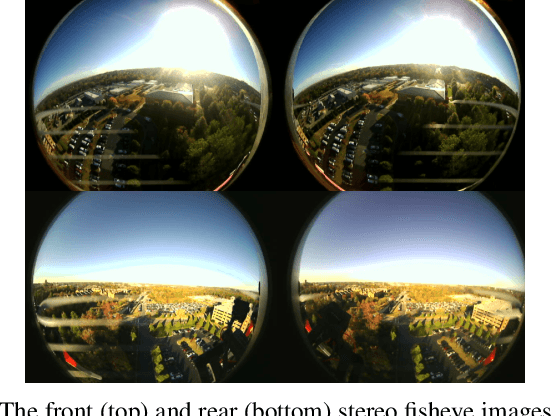

Abstract: This paper describes the hardware design and flight demonstration of a small quadrotor with imaging sensors for urban mapping, hazard avoidance, and target tracking research. The vehicle is equipped with five cameras, including two pairs of fisheye stereo cameras that enable a nearly omnidirectional view and a two-axis gimbaled camera. An onboard NVIDIA Jetson Orin Nano computer running the Robot Operating System software is used for data collection. An autonomous tracking behavior was implemented to coordinate the motion of the quadrotor and gimbaled camera to track a moving GPS coordinate. The data collection system was demonstrated through a flight test that tracked a moving GPS-tagged vehicle through a series of roads and parking lots. A map of the environment was reconstructed from the collected images using the Direct Sparse Odometry (DSO) algorithm. The performance of the quadrotor was also characterized by acoustic noise, communication range, battery voltage in hover, and maximum speed tests.

GPU-Accelerated 3D Polygon Visibility Volumes for Synergistic Perception and Navigation

Feb 05, 2024

Abstract: UAV missions often require specific geometric constraints to be satisfied between ground locations and the vehicle location. Such requirements are typical for contexts where line-of-sight must be maintained between the vehicle location and the ground control location and are also important in surveillance applications where the UAV wishes to be able to sense, e.g., with a camera sensor, a specific region within a complex geometric environment. This problem is further complicated when the ground location is generalized to a convex 2D polygonal region. This article describes the theory and implementation of a system which can quickly calculate the 3D volume that encloses all 3D coordinates from which a 2D convex planar region can be entirely viewed, referred to as a visibility volume. The proposed approach computes visibility volumes by combining GPU-accelerated depth map computation with geometric boolean operations. Solutions to this problem require complex 3D geometric analysis techniques that must execute using arbitrary precision arithmetic on a collection of discontinuous and non-analytic surfaces. Post-processing steps incorporate navigational constraints to further restrict the enclosed coordinates to include both visibility and navigation constraints. Integration of sensing visibility constraints with navigational constraints yields a range of navigable space where a vehicle will satisfy both perceptual sensing and navigational needs of the mission. This algorithm then provides a synergistic perception and navigation sensitive solution yielding a volume of coordinates in 3D that satisfy both the mission path and sensing needs.

Cesium Tiles for High-realism Simulation and Comparing SLAM Results in Corresponding Virtual and Real-world Environments

Jan 15, 2024

Abstract: This article discusses the use of a simulated environment to predict algorithm results in the real world. Simulators are crucial in allowing researchers to test algorithms, sensor integration, and navigation systems without deploying expensive hardware. This article examines how the AirSim simulator, Unreal Engine, and Cesium plugin can be used to generate simulated digital twin models of real-world locations. Several technical challenges in completing the analysis are discussed and the technical solutions are detailed in this article. This work investigates how to assess mapping results for a real-life experiment using Cesium Tiles provided by digital twins of the experimental location. This is accompanied by a description of a process for duplicating real-world flights in simulation. The performance of these methods is evaluated by analyzing real-life and experimental image telemetry with the Direct Sparse Odometry (DSO) mapping algorithm. Results indicate that Cesium Tiles environments can provide highly accurate models of ground truth geometry after careful alignment. Further, results from real-life and simulated telemetry analysis indicate that the virtual simulation results accurately predict real-life results. Findings indicate that the algorithm results in real life and in the simulated duplicate exhibited a high degree of similarity. This indicates that the use of Cesium Tiles environments as a virtual digital twin for real-life experiments will provide representative results for such algorithms. The impact of this can be significant, potentially allowing expansive virtual testing of robotic systems at specific deployment locations to develop solutions that are tailored to the environment and potentially outperform solutions meant to work in completely generic environments.

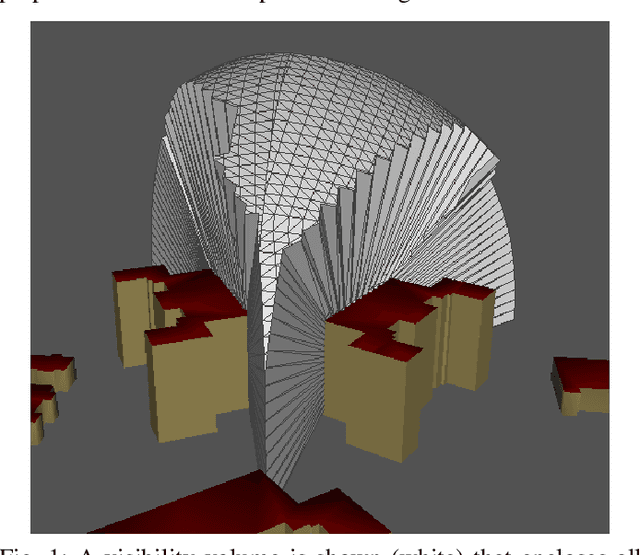

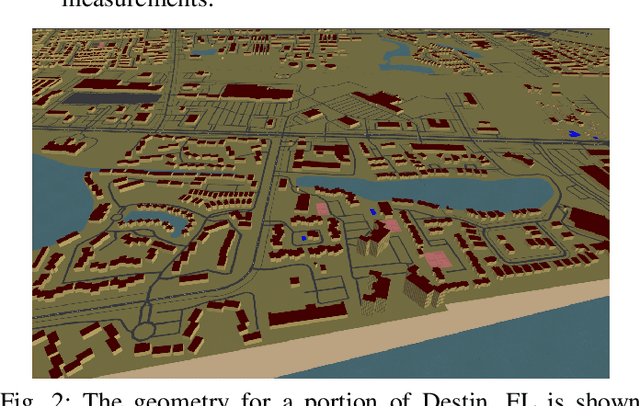

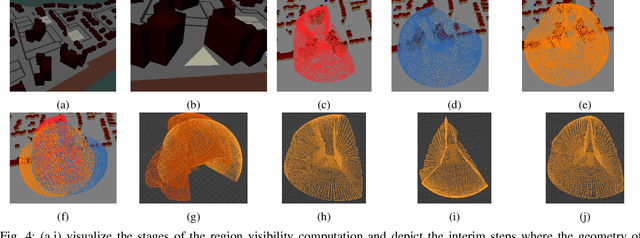

Planning Visual Inspection Tours for a 3D Dubins Airplane Model in an Urban Environment

Jan 12, 2023

Abstract: This paper investigates the problem of planning a minimum-length tour for a three-dimensional Dubins airplane model to visually inspect a series of targets located on the ground or exterior surface of objects in an urban environment. Objects are 2.5D extruded polygons representing buildings or other structures. A visibility volume defines the set of admissible (occlusion-free) viewing locations for each target that satisfy feasible airspace and imaging constraints. The Dubins traveling salesperson problem with neighborhoods (DTSPN) is extended to three dimensions with visibility volumes that are approximated by triangular meshes. Four sampling algorithms are proposed for sampling vehicle configurations within each visibility volume to define vertices of the underlying DTSPN. Additionally, a heuristic approach is proposed to improve computation time by approximating edge costs of the 3D Dubins airplane with a lower bound that is used to solve for a sequence of viewing locations. The viewing locations are then assigned pitch and heading angles based on their relative geometry. The proposed sampling methods and heuristics are compared through a Monte-Carlo experiment that simulates view planning tours over a realistic urban environment.

* 18 pages, 10 figures, Presented at 2023 SciTech Intelligent Systems in Guidance Navigation and Control conference
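The abstract above mentions assigning pitch and heading angles to viewing locations from their relative geometry. The paper's exact rule is not given here, so the following is only a minimal sketch under one plausible assumption: each viewing location on a closed tour is aimed at the next location. The function names `heading_pitch` and `assign_angles` are hypothetical, and pitch limits of the Dubins airplane model are not enforced.

```python
import math

def heading_pitch(p, q):
    """Return (heading, pitch) in radians of the direction from point p to point q.

    Assumption: heading is measured in the x-y plane from the +x axis,
    and pitch is the elevation angle above the horizontal plane.
    """
    dx, dy, dz = q[0] - p[0], q[1] - p[1], q[2] - p[2]
    heading = math.atan2(dy, dx)
    pitch = math.atan2(dz, math.hypot(dx, dy))
    return heading, pitch

def assign_angles(points):
    """Aim each viewing location at the next one; the last wraps to the first."""
    n = len(points)
    return [heading_pitch(points[i], points[(i + 1) % n]) for i in range(n)]

if __name__ == "__main__":
    # Hypothetical ordered viewing locations (x, y, z) in meters.
    tour = [(0.0, 0.0, 50.0), (100.0, 100.0, 50.0), (100.0, 0.0, 80.0)]
    for point, (heading, pitch) in zip(tour, assign_angles(tour)):
        print(point, round(math.degrees(heading), 1), round(math.degrees(pitch), 1))
```

A real planner would additionally clamp the pitch to the vehicle's climb and descent limits and check that the resulting configurations remain inside each visibility volume.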

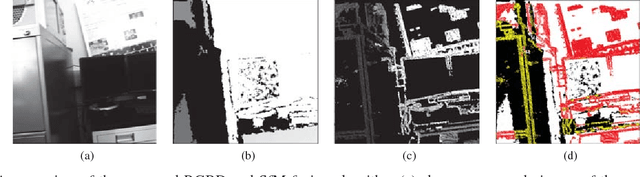

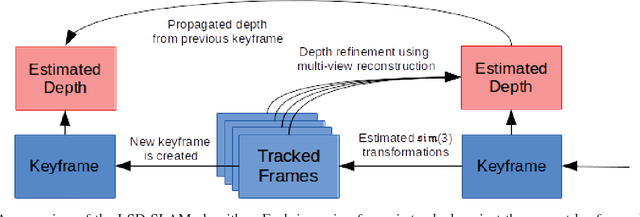

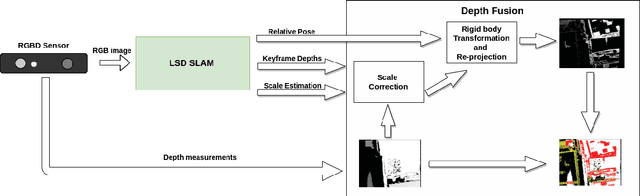

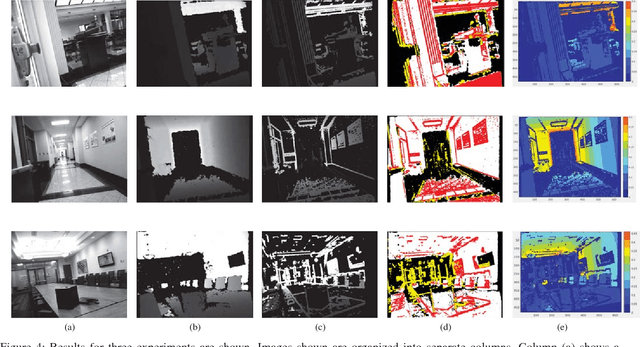

Structure-From-Motion and RGBD Depth Fusion

Mar 10, 2021

Abstract: This article describes a technique to augment a typical RGBD sensor by integrating depth estimates obtained via Structure-from-Motion (SfM) with sensor depth measurements. Limitations in the RGBD depth sensing technology prevent capturing depth measurements in four important contexts: (1) distant surfaces (>5 m), (2) dark surfaces, (3) brightly lit indoor scenes and (4) sunlit outdoor scenes. SfM technology computes depth via multi-view reconstruction from the RGB image sequence alone. As such, SfM depth estimates do not suffer the same limitations and may be computed in all four of the previously listed circumstances. This work describes a novel fusion of RGBD depth data and SfM-estimated depths to generate an improved depth stream that may be processed by one of many important downstream applications such as robotic localization and mapping, as well as object recognition and tracking.
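To make the idea of combining the two depth sources concrete, here is a minimal sketch of one simple fusion rule. It is not the method of the paper, whose details are not given in the abstract. The assumptions are: depths are flat per-pixel lists, a sensor reading is invalid when it is missing, non-positive, or beyond a 5 m range, SfM depths are known only up to a global scale, and that scale is estimated as the median ratio over pixels where both sources are valid. The name `fuse_depth` and its parameters are hypothetical.

```python
from statistics import median

def fuse_depth(sensor, sfm, max_range=5.0):
    """Fill invalid sensor depths with scale-aligned SfM depths.

    sensor, sfm: equal-length lists of depths in meters (None = no estimate).
    Returns (fused_depths, scale), where scale maps SfM units to sensor units.
    """
    def sensor_ok(d):
        # Assumed validity rule: present, positive, and within sensor range.
        return d is not None and 0.0 < d <= max_range

    def sfm_ok(d):
        return d is not None and d > 0.0

    # Estimate the global SfM scale from pixels where both sources are valid.
    ratios = [s / m for s, m in zip(sensor, sfm) if sensor_ok(s) and sfm_ok(m)]
    scale = median(ratios) if ratios else 1.0

    fused = []
    for s, m in zip(sensor, sfm):
        if sensor_ok(s):
            fused.append(s)          # trust the direct measurement
        elif sfm_ok(m):
            fused.append(scale * m)  # fall back to the rescaled SfM estimate
        else:
            fused.append(None)       # neither source has a depth here
    return fused, scale

if __name__ == "__main__":
    sensor = [1.0, 2.0, 0.0, None]   # last two pixels have no sensor depth
    sfm = [0.5, 1.0, 4.0, None]
    print(fuse_depth(sensor, sfm))
```

The median is used for the scale estimate because it tolerates a few outlier ratios; a weighted blend of the two sources in overlapping regions would be a natural refinement.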

A System for 3D Reconstruction Of Comminuted Tibial Plafond Bone Fractures

Feb 23, 2021

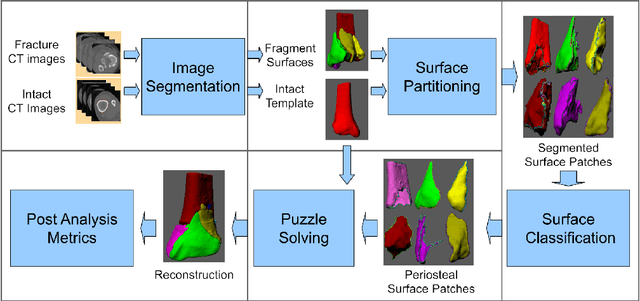

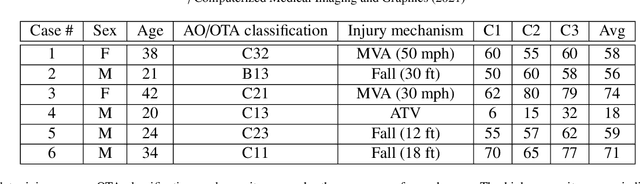

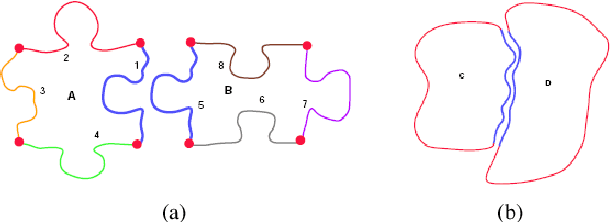

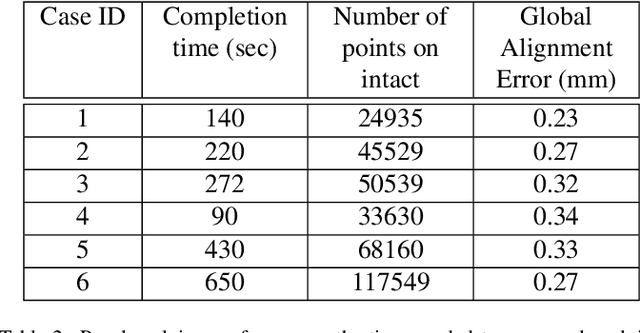

Abstract: High energy impacts at joint locations often generate highly fragmented, or comminuted, bone fractures. Current approaches for treatment require physicians to decide how to classify the fracture within a hierarchy of fracture severity categories. Each category then provides a best-practice treatment scenario to obtain the best possible prognosis for the patient. This article identifies shortcomings associated with qualitative-only evaluation of fracture severity and provides new quantitative metrics that serve to address these shortcomings. We propose a system to semi-automatically extract quantitative metrics that are major indicators of fracture severity. These include: (i) fracture surface area, i.e., how much surface area was generated when the bone broke apart, and (ii) dispersion, i.e., how far the fragments have rotated and translated from their original anatomic positions. This article describes new computational tools to extract these metrics by computationally reconstructing 3D bone anatomy from CT images with a focus on tibial plafond fracture cases where difficult qualitative fracture severity cases are more prevalent. Reconstruction is accomplished within a single system that integrates several novel algorithms that identify, extract and piece together fractured fragments in a virtual environment. Doing so provides objective quantitative measures for these fracture severity indicators. The availability of such measures provides new tools for fracture severity assessment which may lead to improved fracture treatment. This paper describes the system, the underlying algorithms and the metrics of the reconstruction results by quantitatively analyzing six clinical tibial plafond fracture cases.

A closer look at network resolution for efficient network design

Sep 27, 2019

Abstract: There is growing interest in designing lightweight neural networks for mobile and embedded vision applications. Previous works typically reduce computations at the structure level. For example, group convolution based methods reduce computations by factorizing a vanilla convolution into depth-wise and point-wise convolutions. Pruning based methods prune redundant connections in the network structure. In this paper, we explore the importance of network input for achieving optimal accuracy-efficiency trade-off. Reducing input scale is a simple yet effective way to reduce computational cost. It does not require careful network module design, specific hardware optimization, or network retraining after pruning. Moreover, different input scales contain different representations to learn. We propose a framework to mutually learn from different input resolutions and network widths. With the shared knowledge, our framework is able to find better width-resolution balance and capture multi-scale representations. It achieves consistently better ImageNet top-1 accuracy over US-Net under different computation constraints, and outperforms the best compound scale model of EfficientNet by 1.5%. The superiority of our framework is also validated on COCO object detection and instance segmentation as well as transfer learning.
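The claim that reducing input scale lowers computational cost can be illustrated with the standard multiply-accumulate count for a single convolution layer. This is a generic back-of-the-envelope sketch, not code from the paper. The helper `conv_macs` is hypothetical and assumes "same" padding, a square kernel, and no bias term.

```python
def conv_macs(height, width, c_in, c_out, kernel=3, stride=1):
    """Multiply-accumulate count of one standard 2D convolution layer.

    Assumes 'same' padding, so the output is (height // stride) x (width // stride).
    """
    out_h = height // stride
    out_w = width // stride
    return out_h * out_w * c_in * c_out * kernel * kernel

if __name__ == "__main__":
    full = conv_macs(224, 224, 64, 64)
    half_resolution = conv_macs(112, 112, 64, 64)
    half_width = conv_macs(224, 224, 32, 32)
    # Halving the resolution or halving both channel counts each cuts cost 4x.
    print(full // half_resolution, full // half_width)
```

Because the cost scales quadratically in both resolution and width, the two act as interchangeable knobs on the compute budget, which is why a width-resolution balance is worth searching for.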
