Pengcheng Liu

A System for 3D Reconstruction Of Comminuted Tibial Plafond Bone Fractures

Feb 23, 2021

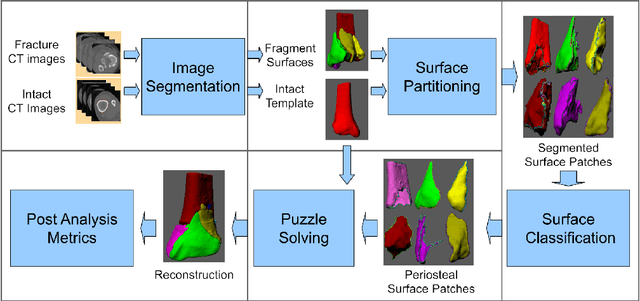

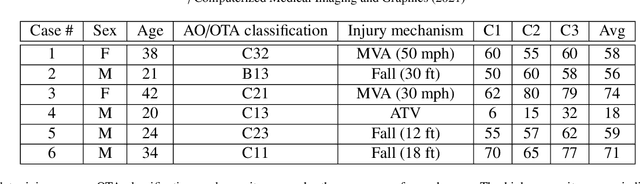

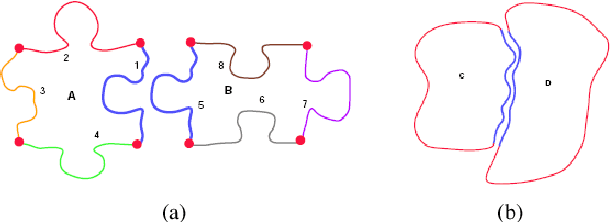

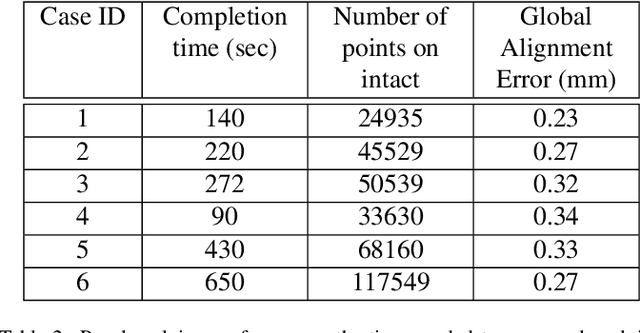

Abstract: High-energy impacts at joint locations often generate highly fragmented, or comminuted, bone fractures. Current approaches to treatment require physicians to classify the fracture within a hierarchy of fracture severity categories. Each category then prescribes a best-practice treatment scenario intended to obtain the best possible prognosis for the patient. This article identifies shortcomings of qualitative-only evaluation of fracture severity and provides new quantitative metrics that address these shortcomings. We propose a system to semi-automatically extract quantitative metrics that are major indicators of fracture severity. These include: (i) fracture surface area, i.e., how much new surface area was generated when the bone broke apart, and (ii) dispersion, i.e., how far the fragments have rotated and translated from their original anatomic positions. This article describes new computational tools that extract these metrics by reconstructing 3D bone anatomy from CT images, with a focus on tibial plafond fractures, where cases that are difficult to grade qualitatively are more prevalent. Reconstruction is accomplished within a single system that integrates several novel algorithms that identify, extract, and piece together fractured fragments in a virtual environment. Doing so provides objective quantitative measures for these fracture severity indicators. The availability of such measures provides new tools for fracture severity assessment, which may lead to improved fracture treatment. This paper describes the system and its underlying algorithms, and reports the metrics obtained by quantitatively analyzing six clinical tibial plafond fracture cases.
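As a rough illustration of the two severity indicators, the sketch below computes them under stated assumptions. It assumes each fragment is already a triangle mesh whose faces are labelled as fracture or intact surface, and that a rigid transform (R, t) mapping the fragment's reconstructed anatomic pose to its observed pose is known. The function names, and the choice of centroid displacement plus axis-angle rotation as the dispersion measures, are hypothetical illustrations rather than the paper's exact definitions.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _norm(a: Vec3) -> float:
    return math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)


def fracture_surface_area(vertices: Sequence[Vec3],
                          faces: Sequence[Tuple[int, int, int]],
                          is_fracture: Sequence[bool]) -> float:
    """Total area of the mesh triangles labelled as fracture surface (hypothetical helper)."""
    total = 0.0
    for (i, j, k), flagged in zip(faces, is_fracture):
        if flagged:
            # Triangle area = half the length of the cross product of two edges.
            edge1 = _sub(vertices[j], vertices[i])
            edge2 = _sub(vertices[k], vertices[i])
            total += 0.5 * _norm(_cross(edge1, edge2))
    return total


def dispersion(R: Mat3, t: Vec3, centroid: Vec3) -> Tuple[float, float]:
    """(centroid displacement, rotation angle in degrees) for x_obs = R x_anat + t.

    Hypothetical measure: the paper's exact dispersion definition may differ.
    """
    moved = tuple(sum(R[row][col] * centroid[col] for col in range(3)) + t[row]
                  for row in range(3))
    displacement = _norm(_sub(moved, centroid))
    # Rotation angle from the trace of R: trace(R) = 1 + 2 cos(theta).
    cos_theta = (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against round-off
    return displacement, math.degrees(math.acos(cos_theta))
```

For example, a unit square split into two triangles, both flagged as fracture surface, gives an area of 1.0, and a 90° rotation about the z-axis with zero translation moves a centroid at (1, 0, 0) by √2 while reporting an angle of 90°. Measuring displacement at the centroid, rather than using |t| directly, keeps the translation term independent of where the coordinate origin happens to lie.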

Synthetic Neural Vision System Design for Motion Pattern Recognition in Dynamic Robot Scenes

Apr 15, 2019

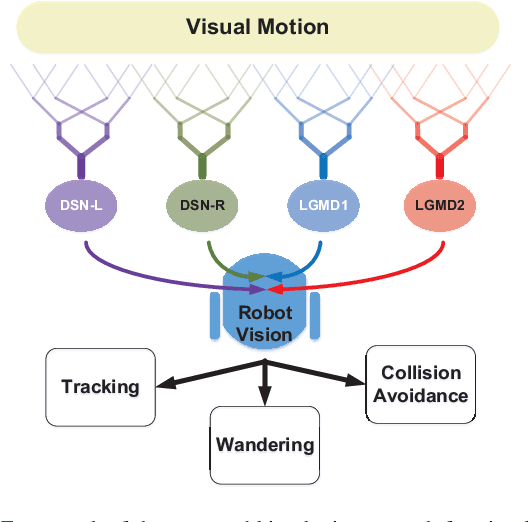

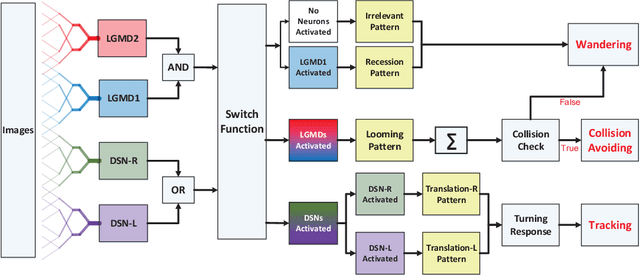

Abstract: Insects have tiny brains but complicated visual systems for motion perception. A handful of insect visual neurons have been computationally modeled and successfully applied in robotics. How different neurons collaborate in motion perception remains an open question. In this paper, we propose a novel embedded vision system for autonomous micro-robots that recognizes motion patterns in dynamic robot scenes. Here, the basic motion patterns are categorized into looming (proximity), recession, translation, and other, irrelevant movements. The presented system is a synthetic neural network comprising two complementary sub-systems with four spiking neurons: the lobula giant movement detectors (LGMD1 and LGMD2) found in locusts, which sense looming and recession, and the direction-selective neurons (DSN-R and DSN-L) found in flies, which extract translational motion. Images are transformed into spikes via spatiotemporal computations that feed a switch function and decision-making mechanisms, which invoke the appropriate robot behavior among collision avoidance, tracking, and wandering in dynamic robot scenes. Our robot experiments demonstrated two main contributions: (1) the neural vision system effectively recognizes the basic motion patterns, triggering timely and appropriate robot behaviors in dynamic scenes; (2) arena tests with multiple robots demonstrated that the system recognizes a richer set of motion features for collision detection, a great improvement over former studies.
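To make the looming-detection idea concrete, here is a minimal sketch of an LGMD-style detector in the spirit of published locust LGMD models. The layer structure, the parameters `w_inhibit`, `scale` and `threshold`, and the function names are illustrative assumptions, not the architecture or values used in this paper. It covers only LGMD1-like looming detection; recession, LGMD2's particular selectivity, and the DSN translation pathway are left out.

```python
import math
from typing import List, Tuple

Frame = List[List[float]]


def lgmd_responses(frames: List[Frame], w_inhibit: float = 0.6,
                   scale: float = 0.05, threshold: float = 0.7
                   ) -> List[Tuple[float, bool]]:
    """Return (membrane potential, spike) for each consecutive frame pair.

    Simplified, hypothetical pipeline: P layer = absolute luminance change,
    I layer = previous P output spread to the four neighbours with a one-frame
    delay, S layer = rectified P - w_inhibit * I, then a sigmoid of the
    normalised sum serves as the membrane potential.
    """
    rows, cols = len(frames[0]), len(frames[0][0])
    n_cells = rows * cols
    prev_exc = [[0.0] * cols for _ in range(rows)]
    out = []
    for prev, curr in zip(frames, frames[1:]):
        # P layer: luminance change between consecutive frames.
        exc = [[abs(curr[r][c] - prev[r][c]) for c in range(cols)]
               for r in range(rows)]
        total = 0.0
        for r in range(rows):
            for c in range(cols):
                # I layer: delayed excitation from the 4-connected neighbours.
                inhib = sum(prev_exc[rr][cc]
                            for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                            if 0 <= rr < rows and 0 <= cc < cols) / 4.0
                # S layer: excitation minus weighted inhibition, rectified at zero.
                total += max(exc[r][c] - w_inhibit * inhib, 0.0)
        # Sigmoid membrane potential: exactly 0.5 when nothing changes.
        potential = 1.0 / (1.0 + math.exp(-total / (n_cells * scale)))
        out.append((potential, potential > threshold))
        prev_exc = exc
    return out


def looming_square(size: int = 20, steps: int = 6) -> List[Frame]:
    """Dark square on a bright background whose half-width grows by one pixel per frame."""
    mid = size // 2
    return [[[0.0 if mid - h <= r < mid + h and mid - h <= c < mid + h else 1.0
              for c in range(size)] for r in range(size)]
            for h in range(steps)]


for potential, spike in lgmd_responses(looming_square()):
    print(round(potential, 3), spike)
```

A dark square whose edge advances each frame produces a growing ring of excitation, so the summed response and the potential rise frame by frame until they cross the threshold, while a static scene stays at the resting value of 0.5. The delayed lateral inhibition is what lets such models favour expanding edges over slower or already-seen changes.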
