Jamie Gantert

Structure-From-Motion and RGBD Depth Fusion

Mar 10, 2021

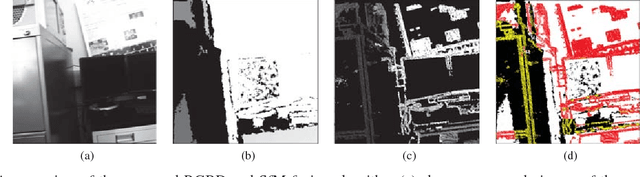

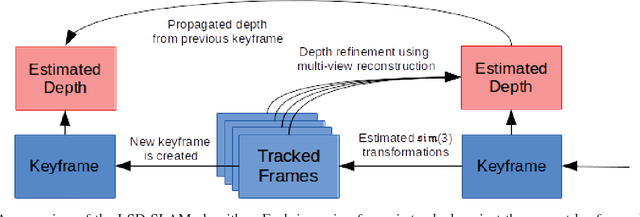

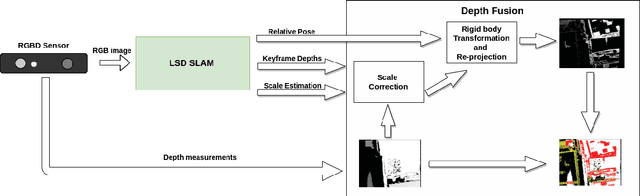

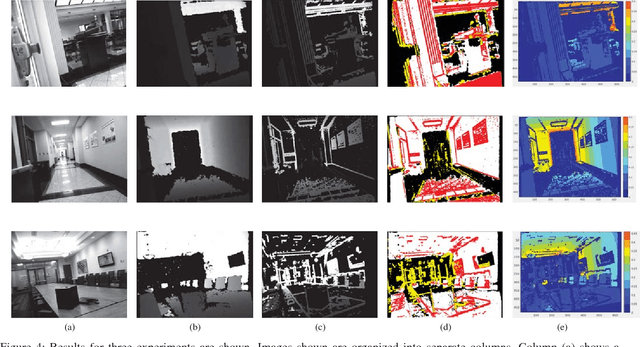

Abstract: This article describes a technique to augment a typical RGBD sensor by integrating depth estimates obtained via Structure-from-Motion (SfM) with sensor depth measurements. Limitations in RGBD depth sensing technology prevent the capture of depth measurements in four important contexts: (1) distant surfaces (>5m), (2) dark surfaces, (3) brightly lit indoor scenes, and (4) sunlit outdoor scenes. SfM computes depth via multi-view reconstruction from the RGB image sequence alone. As such, SfM depth estimates do not suffer the same limitations and may be computed in all four of these circumstances. This work describes a novel fusion of RGBD depth data and SfM-estimated depths to generate an improved depth stream that may be processed by downstream applications such as robotic localization and mapping, as well as object recognition and tracking.
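The abstract does not spell out the fusion rule, so the following is only a minimal sketch of one plausible approach, not the author's method. It assumes both depth maps are flattened to lists of equal length, that `0.0` marks a missing depth, and that SfM depths are known only up to a global scale, which is estimated here as the median ratio over pixels where both sources are valid. The function name `fuse_depth` and these conventions are hypothetical.

```python
import statistics


def fuse_depth(sensor, sfm):
    """Fill missing sensor depths with scale-aligned SfM depths.

    Assumptions (not from the source): inputs are flat lists of equal
    length, and 0.0 marks a pixel with no depth estimate.
    """
    # Estimate a global SfM-to-metric scale from pixels valid in both maps.
    ratios = [s / e for s, e in zip(sensor, sfm) if s > 0.0 and e > 0.0]
    scale = statistics.median(ratios) if ratios else 1.0

    fused = []
    for s, e in zip(sensor, sfm):
        if s > 0.0:
            fused.append(s)          # trust the sensor where it measured
        elif e > 0.0:
            fused.append(scale * e)  # otherwise fall back to rescaled SfM
        else:
            fused.append(0.0)        # neither source has a depth here
    return fused


sensor = [1.0, 2.0, 0.0, 4.0]   # third pixel missing (e.g. a dark surface)
sfm = [0.5, 1.0, 1.5, 2.0]      # SfM depths at half the metric scale
fused = fuse_depth(sensor, sfm)
```

In this toy input the median ratio is 2, so the missing third pixel is filled with the rescaled SfM depth. A real pipeline would also need per-pixel confidence and outlier handling, which this sketch omits.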

Characterizing Inter-Layer Functional Mappings of Deep Learning Models

Jul 09, 2019

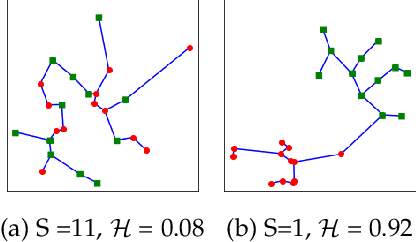

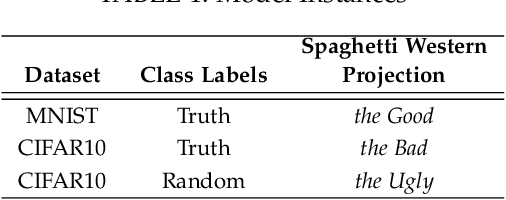

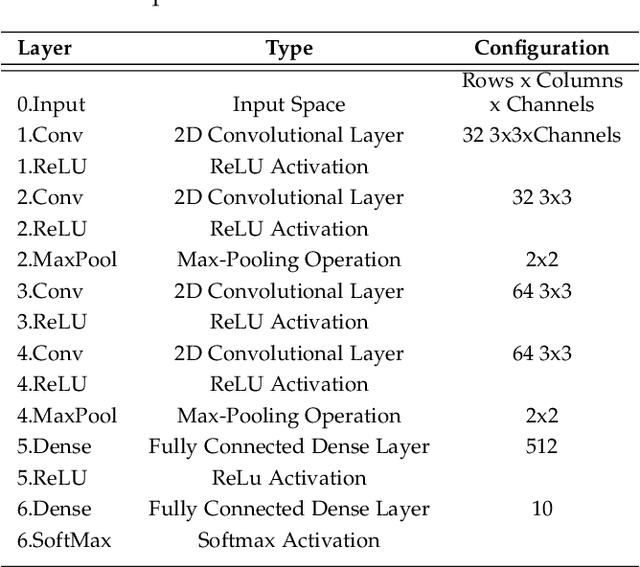

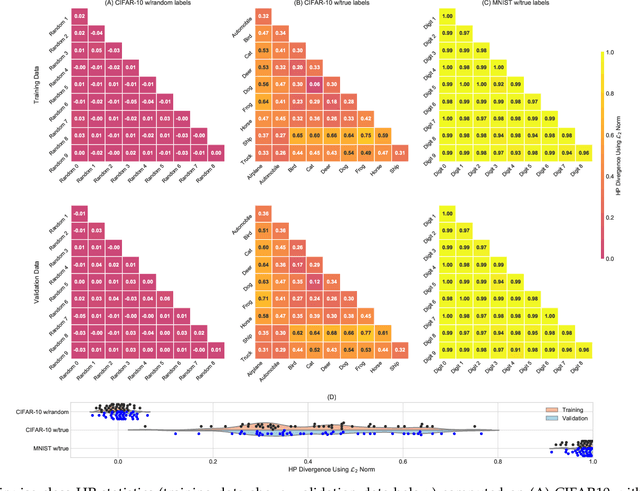

Abstract: Deep learning architectures have demonstrated state-of-the-art performance for object classification and have become ubiquitous in commercial products. These methods are often applied without understanding (a) the difficulty of a classification task given the input data, and (b) how a specific deep learning architecture transforms that data. To answer (a) and (b), we illustrate the utility of a multivariate nonparametric estimator of class separation, the Henze-Penrose (HP) statistic, in the original as well as layer-induced representations. Given an $N$-class problem, our contribution defines the $C(N,2)$ combinations of HP statistics as a sample from a distribution of class-pair separations. This allows us to characterize the distributional change to class separation induced at each layer of the model. Fisher permutation tests are used to detect statistically significant changes within a model. By comparing the HP statistic distributions between layers, one can statistically characterize layer adaptation during training, the contribution of each layer to the classification task, and the presence or absence of consistency between training and validation data. This is demonstrated for a simple deep neural network using CIFAR10 with random labels, CIFAR10, and MNIST datasets.
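As a rough illustration of the $C(N,2)$ class-pair idea, the sketch below uses one common minimum-spanning-tree estimator of HP separation (the Friedman-Rafsky cross-edge count $R$, giving $1 - R(m+n)/(2mn)$ for class sizes $m$ and $n$). Whether the paper uses exactly this estimator is an assumption, and the names `cross_edge_count`, `hp_separation`, and `pairwise_hp` are hypothetical. The permutation test is not shown.

```python
import itertools
import math


def cross_edge_count(xs, ys):
    """Count MST edges that join a point of xs to a point of ys."""
    pts = [(p, 0) for p in xs] + [(p, 1) for p in ys]
    n = len(pts)
    in_tree = [False] * n
    best = [math.inf] * n    # cheapest known distance from each point to the tree
    parent = [-1] * n
    best[0] = 0.0
    cross = 0
    for _ in range(n):       # Prim's algorithm on the complete Euclidean graph
        u = min((i for i in range(n) if not in_tree[i]), key=best.__getitem__)
        in_tree[u] = True
        if parent[u] >= 0 and pts[u][1] != pts[parent[u]][1]:
            cross += 1       # this MST edge connects the two classes
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(pts[u][0], pts[v][0])
                if d < best[v]:
                    best[v], parent[v] = d, u
    return cross


def hp_separation(xs, ys):
    """MST-based HP estimate: near 0 for overlapping classes, near 1 for separated."""
    m, n = len(xs), len(ys)
    r = cross_edge_count(xs, ys)
    return max(0.0, 1.0 - r * (m + n) / (2.0 * m * n))


def pairwise_hp(samples_by_class):
    """HP estimate for every unordered pair of classes, C(N,2) values in total."""
    labels = sorted(samples_by_class)
    return {(a, b): hp_separation(samples_by_class[a], samples_by_class[b])
            for a, b in itertools.combinations(labels, 2)}


classes = {
    0: [(0, 0), (0, 1), (1, 0), (1, 1)],
    1: [(10, 10), (10, 11), (11, 10), (11, 11)],
    2: [(20, 0), (20, 1), (21, 0), (21, 1)],
}
separations = pairwise_hp(classes)
```

Applying `pairwise_hp` to the activations of each layer in turn would give one sample of class-pair separations per layer, which is the quantity the abstract proposes to compare between layers.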
