Jiaying Zhou

Stable Language Guidance for Vision-Language-Action Models

Jan 07, 2026

Abstract:Vision-Language-Action (VLA) models have demonstrated impressive capabilities in generalized robotic control; however, they remain notoriously brittle to linguistic perturbations. We identify a critical "modality collapse" phenomenon where strong visual priors overwhelm sparse linguistic signals, causing agents to overfit to specific instruction phrasings while ignoring the underlying semantic intent. To address this, we propose Residual Semantic Steering (RSS), a probabilistic framework that disentangles physical affordance from semantic execution. RSS introduces two theoretical innovations: (1) Monte Carlo Syntactic Integration, which approximates the true semantic posterior via dense, LLM-driven distributional expansion, and (2) Residual Affordance Steering, a dual-stream decoding mechanism that explicitly isolates the causal influence of language by subtracting the visual affordance prior. Theoretical analysis suggests that RSS effectively maximizes the mutual information between action and intent while suppressing visual distractors. Empirical results across diverse manipulation benchmarks demonstrate that RSS achieves state-of-the-art robustness, maintaining performance even under adversarial linguistic perturbations.
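The abstract does not state the decoding rule, so the following is only a toy sketch of how the two ideas might combine, under stated assumptions: action logits are averaged over several paraphrases of one instruction (a Monte Carlo estimate), the logits of a vision-only pass are subtracted to obtain a language residual, and that residual is scaled and added back. The function name `residual_steering`, the scale `alpha`, and the discrete action logits are all hypothetical and are not taken from the paper.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def residual_steering(paraphrase_logits, vision_only_logits, alpha=2.0):
    """Hypothetical steering rule (an assumption, not the paper's formula).

    paraphrase_logits: one list of action logits per paraphrase of the instruction.
    vision_only_logits: action logits from a pass without any instruction.
    alpha: how strongly the language residual is amplified.
    """
    n = len(paraphrase_logits)
    # Monte Carlo average over paraphrases of the same intent.
    mean_cond = [sum(col) / n for col in zip(*paraphrase_logits)]
    # Language residual: what the instruction adds beyond the visual prior.
    residual = [c - v for c, v in zip(mean_cond, vision_only_logits)]
    steered = [v + alpha * r for v, r in zip(vision_only_logits, residual)]
    return softmax(steered)

# Toy example: the visual prior favors action 0, the instruction favors action 1.
vision_only = [2.0, 0.0, 0.0]
paraphrases = [[2.0, 1.0, 0.0], [2.0, 2.0, 0.0]]
probs = residual_steering(paraphrases, vision_only, alpha=2.0)
```

In this sketch, `alpha=0` reduces to the vision-only distribution and `alpha=1` to the paraphrase-averaged one; values above 1 amplify the part of the prediction that is attributable to language.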

Topology-aware Pathological Consistency Matching for Weakly-Paired IHC Virtual Staining

Jan 06, 2026

Abstract:Immunohistochemical (IHC) staining provides crucial molecular characterization of tissue samples and plays an indispensable role in the clinical examination and diagnosis of cancers. However, compared with the commonly used Hematoxylin and Eosin (H&E) staining, IHC staining involves complex procedures and is both time-consuming and expensive, which limits its widespread clinical use. Virtual staining converts H&E images to IHC images, offering a cost-effective alternative to clinical IHC staining. Nevertheless, using adjacent slides as ground truth often results in weakly-paired data with spatial misalignment and local deformations, hindering effective supervised learning. To address these challenges, we propose a novel topology-aware framework for H&E-to-IHC virtual staining. Specifically, we introduce a Topology-aware Consistency Matching (TACM) mechanism that employs graph contrastive learning and topological perturbations to learn robust matching patterns despite spatial misalignments, ensuring structural consistency. Furthermore, we propose a Topology-constrained Pathological Matching (TCPM) mechanism that aligns pathological positive regions based on node importance to enhance pathological consistency. Extensive experiments on two benchmarks across four staining tasks demonstrate that our method outperforms state-of-the-art approaches, achieving superior generation quality with higher clinical relevance.

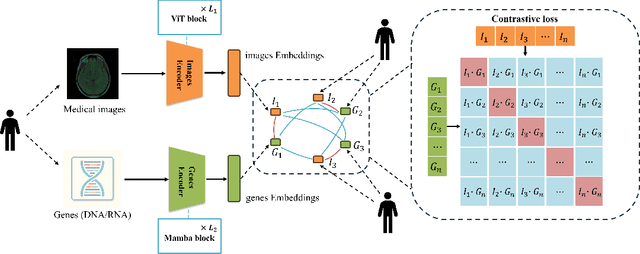

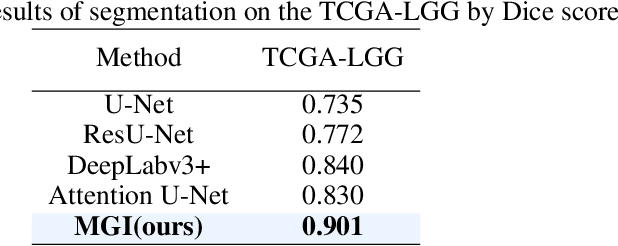

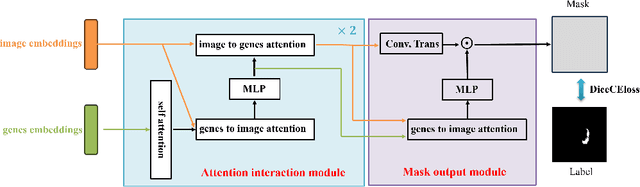

MGI: Multimodal Contrastive pre-training of Genomic and Medical Imaging

Jun 02, 2024

Abstract:Medicine is inherently a multimodal discipline. Medical images can reflect the pathological changes of cancer and tumors, while the expression of specific genes can influence their morphological characteristics. However, most deep learning models employed for these medical tasks are unimodal, making predictions using either image data or genomic data exclusively. In this paper, we propose a multimodal pre-training framework that jointly incorporates genomics and medical images for downstream tasks. To address the high computational complexity and the difficulty of capturing long-range dependencies when modeling gene sequences with MLP or Transformer architectures, we use Mamba to model these long genomic sequences. We align medical images and genes using a self-supervised contrastive learning approach that combines Mamba as the genetic encoder and the Vision Transformer (ViT) as the medical image encoder. We pre-trained the model on the TCGA dataset using paired gene expression data and imaging data, and fine-tuned it for downstream tumor segmentation tasks. The results show that our model outperformed a wide range of related methods.
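The abstract names contrastive alignment of image and gene embeddings but not the exact loss. As a minimal sketch, assuming a symmetric InfoNCE objective of the kind commonly used for paired-modality pre-training, the computation on already-encoded embeddings might look like this. The encoders themselves (Mamba, ViT) are not modeled; the function name and the temperature value are assumptions.

```python
import math

def normalize(v):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def log_softmax(row):
    """Numerically stable log-softmax over a list of floats."""
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]

def symmetric_contrastive_loss(image_embs, gene_embs, temperature=0.07):
    """Symmetric InfoNCE over a batch where row k of each list is a matched pair."""
    imgs = [normalize(v) for v in image_embs]
    genes = [normalize(v) for v in gene_embs]
    n = len(imgs)
    # Cosine similarity of every image with every gene profile, scaled by temperature.
    sim = [[sum(a * b for a, b in zip(i, g)) / temperature for g in genes]
           for i in imgs]
    # Image-to-gene direction: the matched gene is the "correct class" for each image.
    loss_i2g = -sum(log_softmax(sim[k])[k] for k in range(n)) / n
    # Gene-to-image direction uses the transposed similarity matrix.
    sim_t = [list(col) for col in zip(*sim)]
    loss_g2i = -sum(log_softmax(sim_t[k])[k] for k in range(n)) / n
    return 0.5 * (loss_i2g + loss_g2i)

# Matched pairs should score a lower loss than deliberately swapped pairs.
aligned = symmetric_contrastive_loss([[1.0, 0.0], [0.0, 1.0]],
                                     [[1.0, 0.0], [0.0, 1.0]])
swapped = symmetric_contrastive_loss([[1.0, 0.0], [0.0, 1.0]],
                                     [[0.0, 1.0], [1.0, 0.0]])
```

Minimizing this loss pulls each image embedding toward its paired gene embedding and pushes it away from the other samples in the batch.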

Uncertainty-Aware Adapter: Adapting Segment Anything Model (SAM) for Ambiguous Medical Image Segmentation

Mar 19, 2024

Abstract:The Segment Anything Model (SAM) has achieved significant success in natural image segmentation, and many methods have tried to fine-tune it for medical image segmentation. An efficient way to do so is by using Adapters, specialized modules that learn just a few parameters to tailor SAM specifically for medical images. However, unlike natural images, many tissues and lesions in medical images have blurry boundaries and may be ambiguous. Previous efforts to adapt SAM ignore this challenge and can only predict a single distinct segmentation. This may mislead clinicians or cause misdiagnosis, especially when encountering rare variants or situations with low model confidence. In this work, we propose a novel module called the Uncertainty-aware Adapter, which efficiently fine-tunes SAM for uncertainty-aware medical image segmentation. Utilizing a conditional variational autoencoder, we encoded stochastic samples to effectively represent the inherent uncertainty in medical imaging. We designed a new module on a standard adapter that utilizes a condition-based strategy to interact with samples to help SAM integrate uncertainty. We evaluated our method on two multi-annotated datasets with different modalities: LIDC-IDRI (lung abnormality segmentation) and REFUGE2 (optic-cup segmentation). The experimental results show that the proposed model outperforms all the previous methods and achieves the new state-of-the-art (SOTA) on both benchmarks. We also demonstrated that our method can generate diverse segmentation hypotheses that are more realistic as well as heterogeneous.

Enhancing In-Context Learning with Answer Feedback for Multi-Span Question Answering

Jun 07, 2023

Abstract:Although recently emerged large language models (LLMs) such as ChatGPT have exhibited impressive general performance, they still lag well behind fully-supervised models on specific tasks such as multi-span question answering. Previous research found that in-context learning is an effective approach to exploiting LLMs, by using a few task-related labeled data as demonstration examples to construct a few-shot prompt for answering new questions. A popular implementation is to concatenate a few questions and their correct answers through simple templates, informing the LLM of the desired output. In this paper, we propose a novel way of employing labeled data such that it also informs the LLM of some undesired output, by extending demonstration examples with feedback about answers predicted by an off-the-shelf model, e.g., correct, incorrect, or incomplete. Experiments on three multi-span question answering datasets as well as a keyphrase extraction dataset show that our new prompting strategy consistently improves the LLM's in-context learning performance.
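To make the idea of feedback-extended demonstrations concrete, here is a minimal sketch of a prompt builder. The template wording, the field names (`context`, `question`, `predicted`, `gold`), and the exact-match rule for labeling predictions are all assumptions made for illustration; the abstract does not specify them.

```python
def label_predictions(predicted, gold):
    """Split model predictions into correct and incorrect, and list missed gold answers."""
    gold_set, pred_set = set(gold), set(predicted)
    correct = [p for p in predicted if p in gold_set]
    incorrect = [p for p in predicted if p not in gold_set]
    missing = [g for g in gold if g not in pred_set]
    return correct, incorrect, missing

def join_or_none(items):
    """Render a list of answers, or 'none' when the list is empty."""
    return "; ".join(items) if items else "none"

def format_demo(demo):
    """Render one demonstration with feedback on an off-the-shelf model's answers."""
    correct, incorrect, missing = label_predictions(demo["predicted"], demo["gold"])
    return "\n".join([
        "Context: " + demo["context"],
        "Question: " + demo["question"],
        "Candidate answers: " + join_or_none(demo["predicted"]),
        "Correct: " + join_or_none(correct),
        "Incorrect: " + join_or_none(incorrect),
        "Incomplete (missed): " + join_or_none(missing),
        "Answers: " + join_or_none(demo["gold"]),
    ])

def build_prompt(demos, context, question):
    """Concatenate feedback-extended demonstrations, then the new question."""
    blocks = [format_demo(d) for d in demos]
    blocks.append("Context: " + context + "\nQuestion: " + question + "\nAnswers:")
    return "\n\n".join(blocks)

demo = {
    "context": "Paris and Berlin are European capitals; Rome hosted the event.",
    "question": "Which capitals are named as European capitals?",
    "predicted": ["Paris", "Rome"],   # output of a hypothetical off-the-shelf model
    "gold": ["Paris", "Berlin"],
}
prompt = build_prompt([demo], "Oslo and Lima are capitals.", "Which capitals are named?")
```

Compared with a plain question-and-answer demonstration, each block here also shows the LLM which candidate answers were wrong and which gold answers were missed.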

Clues Before Answers: Generation-Enhanced Multiple-Choice QA

Apr 30, 2022

Abstract:A trending paradigm for multiple-choice question answering (MCQA) is using a text-to-text framework. By unifying data in different tasks into a single text-to-text format, it trains a generative encoder-decoder model which is both powerful and universal. However, a side effect of twisting a generation target to fit the classification nature of MCQA is the under-utilization of the decoder and the knowledge that can be decoded. To exploit the generation capability and underlying knowledge of a pre-trained encoder-decoder model, in this paper, we propose a generation-enhanced MCQA model named GenMC. It generates a clue from the question and then leverages the clue to enhance a reader for MCQA. It outperforms text-to-text models on multiple MCQA datasets.

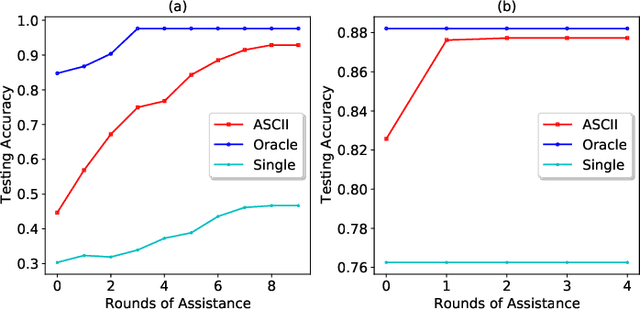

Assisted Learning for Organizations with Limited Data

Sep 21, 2021

Abstract:We develop an assisted learning framework that helps organization-level learners improve their learning performance with limited and imbalanced data. In particular, learners at the organization level usually have sufficient computational resources, but are subject to stringent collaboration policies and information privacy constraints. Their limited, imbalanced data often cause biased inference and sub-optimal decision-making. In our assisted learning framework, an organizational learner purchases an assistance service from a service provider and aims to enhance its model performance within a few assistance rounds. We develop effective stochastic training algorithms for assisted deep learning and assisted reinforcement learning. Unlike existing distributed algorithms that need to frequently transmit gradients or models, our framework allows the learner to share information with the service provider only occasionally, and still achieves a near-oracle model as if all the data were centralized.

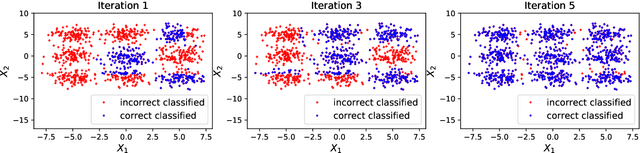

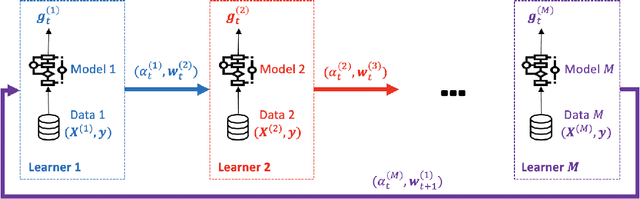

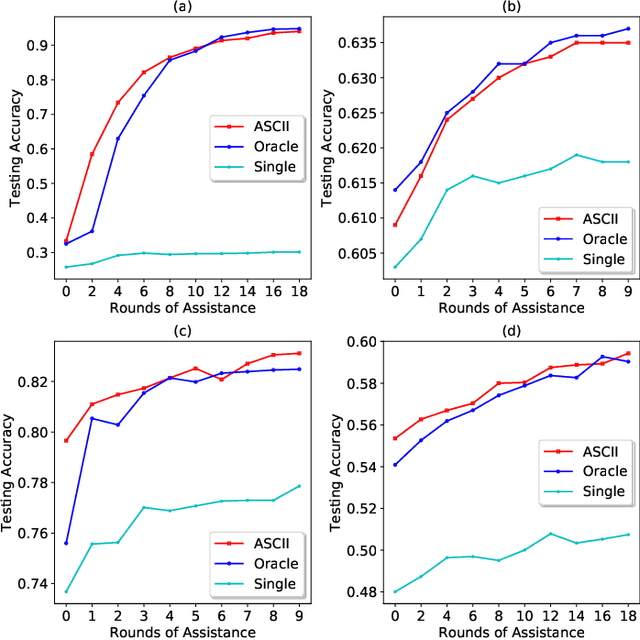

ASCII: ASsisted Classification with Ignorance Interchange

Oct 21, 2020

Abstract:The rapid development in data collecting devices and computation platforms produces an emerging number of agents, each equipped with a unique data modality over a particular population of subjects. While the predictive performance of an agent may be enhanced by transmitting other data to it, this is often unrealistic due to intractable transmission costs and security concerns. In this paper, we propose a method named ASCII for an agent to improve its classification performance through assistance from other agents. The main idea is to iteratively interchange an ignorance value between 0 and 1 for each collated sample among agents, where the value represents the urgency of further assistance needed. The method is naturally suitable for privacy-aware, transmission-economical, and decentralized learning scenarios. The method is also general as it allows the agents to use arbitrary classifiers such as logistic regression, ensemble trees, and neural networks, and these may be heterogeneous among agents. We demonstrate the proposed method with extensive experimental studies.

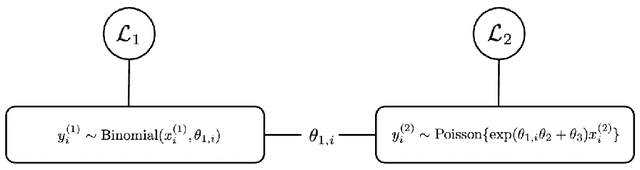

Model Linkage Selection for Cooperative Learning

Jun 14, 2020

Abstract:Rapid developments in data collecting devices and computation platforms produce an emerging number of learners and data modalities in many scientific domains. We consider the setting in which each learner holds a parametric statistical model paired with a specific data source, with the goal of integrating information across a set of learners to enhance the prediction accuracy of a specific learner. One natural way to integrate information is to build a joint model across a set of learners that shares common parameters of interest. However, the parameter sharing patterns across a set of learners are not known a priori. Misspecifying the parameter sharing patterns and the parametric statistical model for each learner yields a biased estimator and degrades the prediction accuracy of the joint model. In this paper, we propose a novel framework for integrating information across a set of learners that is robust against model misspecification and misspecified parameter sharing patterns. The crux is to sequentially incorporate additional learners that can enhance the prediction accuracy of an existing joint model based on user-specified parameter sharing patterns across a set of learners, starting from a model with one learner. Theoretically, we show that the proposed method can data-adaptively select the correct parameter sharing patterns based on user-specified parameter sharing patterns, and thus enhance the prediction accuracy of a learner. Extensive numerical studies are performed to evaluate the performance of the proposed method.
