Xun Xian

Can Agentic AI Match the Performance of Human Data Scientists?

Dec 24, 2025

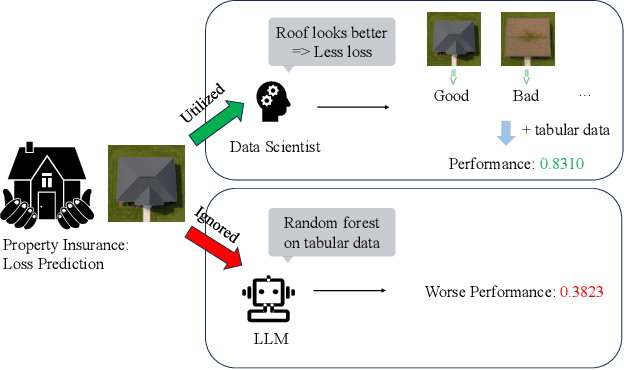

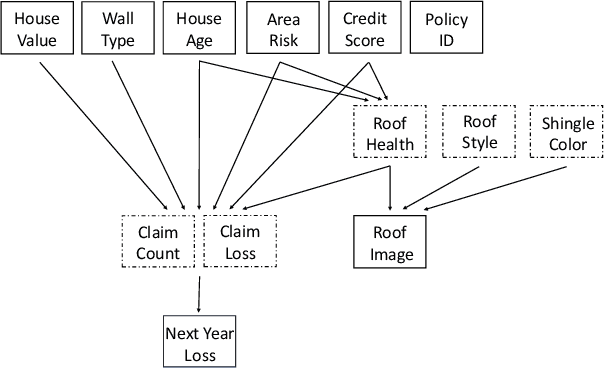

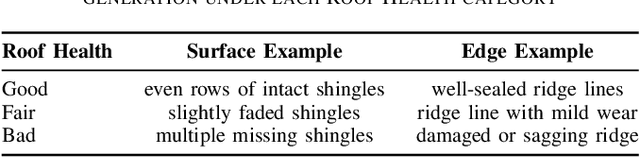

Abstract:Data science plays a critical role in transforming complex data into actionable insights across numerous domains. Recent developments in large language models (LLMs) have significantly automated data science workflows, but a fundamental question persists: Can these agentic AI systems truly match the performance of human data scientists who routinely leverage domain-specific knowledge? We explore this question by designing a prediction task where a crucial latent variable is hidden in relevant image data instead of tabular features. As a result, agentic AI that generates generic code for modeling tabular data cannot perform well, while human experts could identify the important hidden variable using domain knowledge. We demonstrate this idea with a synthetic dataset for property insurance. Our experiments show that agentic AI that relies on a generic analytics workflow falls short of methods that use domain-specific insights. This highlights a key limitation of current agentic AI for data science and underscores the need for future research to develop agentic AI systems that can better recognize and incorporate domain knowledge.

An Outlook on the Opportunities and Challenges of Multi-Agent AI Systems

May 23, 2025

Abstract:Multi-agent AI systems (MAS) offer a promising framework for distributed intelligence, enabling collaborative reasoning, planning, and decision-making across autonomous agents. This paper provides a systematic outlook on the current opportunities and challenges of MAS, drawing insights from recent advances in large language models (LLMs), federated optimization, and human-AI interaction. We formalize key concepts including agent topology, coordination protocols, and shared objectives, and identify major risks such as dependency, misalignment, and vulnerabilities arising from training data overlap. Through a biologically inspired simulation and comprehensive theoretical framing, we highlight critical pathways for developing robust, scalable, and secure MAS in real-world settings.

Ice Cream Doesn't Cause Drowning: Benchmarking LLMs Against Statistical Pitfalls in Causal Inference

May 19, 2025

Abstract:Reliable causal inference is essential for making decisions in high-stakes areas like medicine, economics, and public policy. However, it remains unclear whether large language models (LLMs) can handle rigorous and trustworthy statistical causal inference. Current benchmarks usually involve simplified tasks. For example, these tasks might only ask LLMs to identify semantic causal relationships or draw conclusions directly from raw data. As a result, models may overlook important statistical pitfalls, such as Simpson's paradox or selection bias. This oversight limits the applicability of LLMs in the real world. To address these limitations, we propose CausalPitfalls, a comprehensive benchmark designed to rigorously evaluate the capability of LLMs in overcoming common causal inference pitfalls. Our benchmark features structured challenges across multiple difficulty levels, each paired with grading rubrics. This approach allows us to quantitatively measure both causal reasoning capabilities and the reliability of LLMs' responses. We evaluate models using two protocols: (1) direct prompting, which assesses intrinsic causal reasoning, and (2) code-assisted prompting, where models generate executable code for explicit statistical analysis. Additionally, we validate the effectiveness of this judge by comparing its scoring with assessments from human experts. Our results reveal significant limitations in current LLMs when performing statistical causal inference. The CausalPitfalls benchmark provides essential guidance and quantitative metrics to advance the development of trustworthy causal reasoning systems.
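As a small illustration of one pitfall named in the abstract, the sketch below shows Simpson's paradox in plain Python. The counts, the treatment names `A` and `B`, and the subgroup names are illustrative assumptions and are not taken from the CausalPitfalls benchmark. Treatment `A` has the higher success rate within every subgroup, yet treatment `B` looks better once the subgroups are pooled.

```python
from fractions import Fraction

# Illustrative (successes, trials) counts per treatment and subgroup.
# These numbers are assumptions for demonstration, not benchmark data.
counts = {
    "A": {"small": (81, 87), "large": (192, 263)},
    "B": {"small": (234, 270), "large": (55, 80)},
}


def subgroup_rates(treatment):
    """Exact success rate within each subgroup for one treatment."""
    return {
        group: Fraction(successes, trials)
        for group, (successes, trials) in counts[treatment].items()
    }


def pooled_rate(treatment):
    """Exact success rate after ignoring the subgroup variable."""
    successes = sum(s for s, _ in counts[treatment].values())
    trials = sum(n for _, n in counts[treatment].values())
    return Fraction(successes, trials)


# A beats B inside every subgroup ...
a_wins_each_subgroup = all(
    subgroup_rates("A")[group] > subgroup_rates("B")[group]
    for group in counts["A"]
)
# ... but B beats A when the subgroups are pooled.
b_wins_pooled = pooled_rate("B") > pooled_rate("A")

print("A better in every subgroup:", a_wins_each_subgroup)
print("B better after pooling:", b_wins_pooled)
```

The reversal arises because the two treatments are applied to the subgroups in very different proportions, so an analysis that draws conclusions directly from pooled raw data can point the wrong way.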

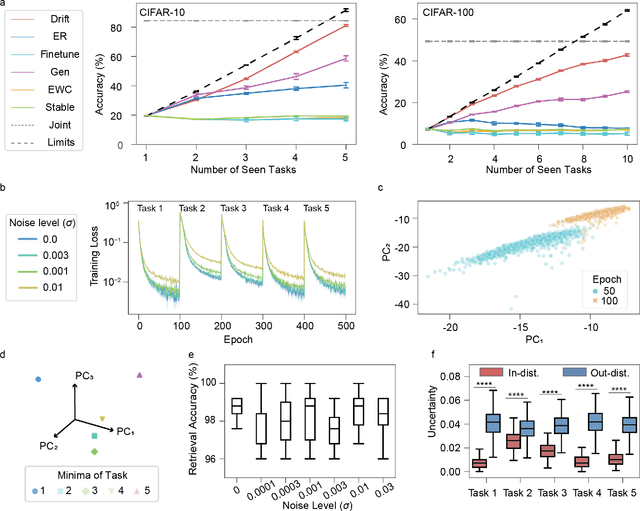

Drift to Remember

Sep 21, 2024

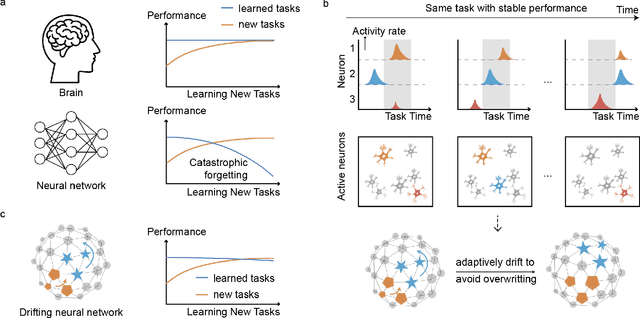

Abstract:Lifelong learning in artificial intelligence (AI) aims to mimic the biological brain's ability to continuously learn and retain knowledge, yet it faces challenges such as catastrophic forgetting. Recent neuroscience research suggests that neural activity in biological systems undergoes representational drift, where neural responses evolve over time, even with consistent inputs and tasks. We hypothesize that representational drift can alleviate catastrophic forgetting in AI during new task acquisition. To test this, we introduce DriftNet, a network designed to constantly explore various local minima in the loss landscape while dynamically retrieving relevant tasks. This approach ensures efficient integration of new information and preserves existing knowledge. Experimental studies in image classification and natural language processing demonstrate that DriftNet outperforms existing models in lifelong learning. Importantly, DriftNet is scalable in handling a sequence of tasks such as sentiment analysis and question answering using large language models (LLMs) with billions of parameters on a single Nvidia A100 GPU. DriftNet efficiently updates LLMs using only new data, avoiding the need for full dataset retraining. Tested on GPT-2 and RoBERTa, DriftNet is a robust, cost-effective solution for lifelong learning in LLMs. This study not only advances AI systems to emulate biological learning, but also provides insights into the adaptive mechanisms of biological neural systems, deepening our understanding of lifelong learning in nature.

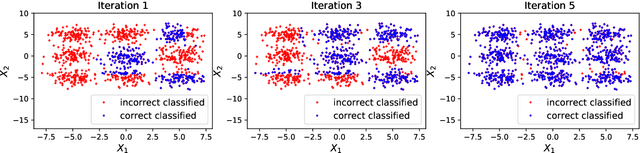

Demystifying Poisoning Backdoor Attacks from a Statistical Perspective

Oct 18, 2023

Abstract:The growing dependence on machine learning in real-world applications emphasizes the importance of understanding and ensuring its safety. Backdoor attacks pose a significant security risk due to their stealthy nature and potentially serious consequences. Such attacks involve embedding triggers within a learning model with the intention of causing malicious behavior when an active trigger is present while maintaining regular functionality without it. This paper evaluates the effectiveness of any backdoor attack incorporating a constant trigger, by establishing tight lower and upper boundaries for the performance of the compromised model on both clean and backdoor test data. The developed theory answers a series of fundamental but previously underexplored problems, including (1) what are the determining factors for a backdoor attack's success, (2) what is the direction of the most effective backdoor attack, and (3) when will a human-imperceptible trigger succeed. Our derived understanding applies to both discriminative and generative models. We also demonstrate the theory by conducting experiments using benchmark datasets and state-of-the-art backdoor attack scenarios.
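To make the notion of a constant trigger concrete, here is a minimal sketch of data poisoning under simplifying assumptions: inputs are plain lists of floats, the trigger is a fixed vector added to a randomly chosen fraction of training samples, and those samples are relabeled to a target class. The function name `poison`, its parameters, and the toy data are hypothetical and are not the paper's notation or experimental setup.

```python
import random


def poison(dataset, trigger, target_label, poison_fraction, seed=0):
    """Return a poisoned copy of `dataset` and the set of poisoned indices.

    Each chosen sample has the same constant `trigger` vector added to its
    features and its label replaced by `target_label`. All names here are
    hypothetical, chosen only for this illustration.
    """
    rng = random.Random(seed)
    n_poison = int(len(dataset) * poison_fraction)
    chosen = set(rng.sample(range(len(dataset)), n_poison))
    poisoned = []
    for index, (features, label) in enumerate(dataset):
        if index in chosen:
            features = [x + t for x, t in zip(features, trigger)]
            label = target_label
        poisoned.append((list(features), label))
    return poisoned, chosen


# Toy clean data: two features per sample, binary labels.
clean = [([float(i), float(-i)], i % 2) for i in range(10)]
trigger = [0.5, 0.5]

poisoned, chosen = poison(clean, trigger, target_label=1, poison_fraction=0.2)
print("poisoned indices:", sorted(chosen))
```

A model trained on `poisoned` sees mostly clean samples, which is why it can keep regular behavior on clean inputs while learning to associate the trigger direction with the target label.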

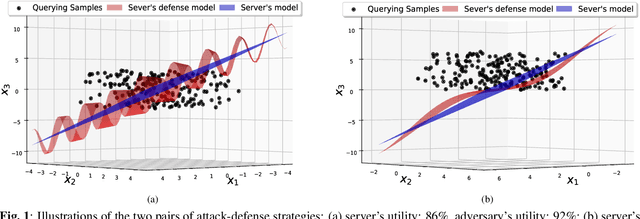

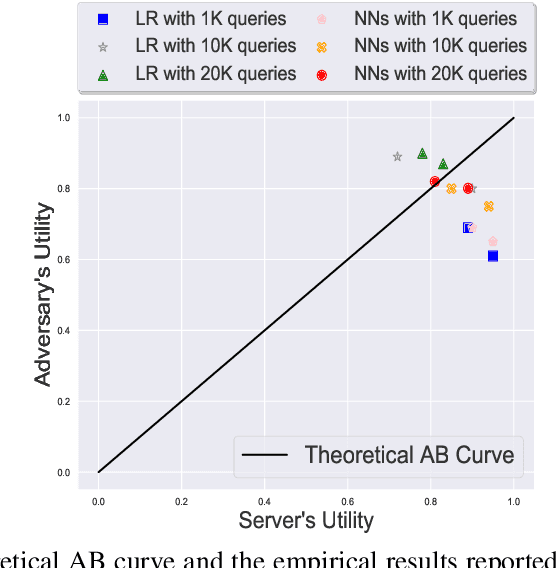

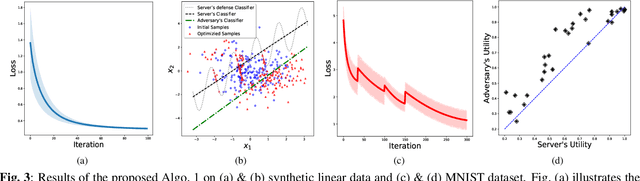

A Framework for Understanding Model Extraction Attack and Defense

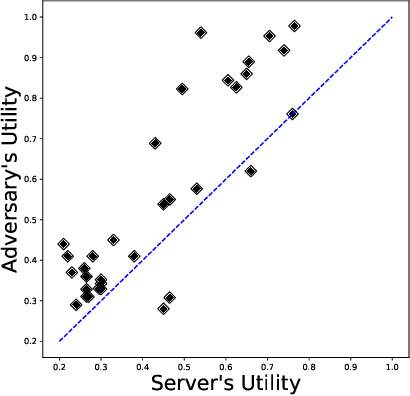

Jun 23, 2022

Abstract:The privacy of machine learning models has become a significant concern in many emerging Machine-Learning-as-a-Service applications, where prediction services based on well-trained models are offered to users via pay-per-query. The lack of a defense mechanism can impose a high risk on the privacy of the server's model since an adversary could efficiently steal the model by querying only a few 'good' data points. The interplay between a server's defense and an adversary's attack inevitably leads to an arms race dilemma, as commonly seen in Adversarial Machine Learning. To study the fundamental tradeoffs between model utility from a benign user's view and privacy from an adversary's view, we develop new metrics to quantify such tradeoffs, analyze their theoretical properties, and develop an optimization problem to understand the optimal adversarial attack and defense strategies. The developed concepts and theory match the empirical findings on the 'equilibrium' between privacy and utility. In terms of optimization, the key ingredient that enables our results is a unified representation of the attack-defense problem as a min-max bi-level problem. The developed results will be demonstrated by examples and experiments.

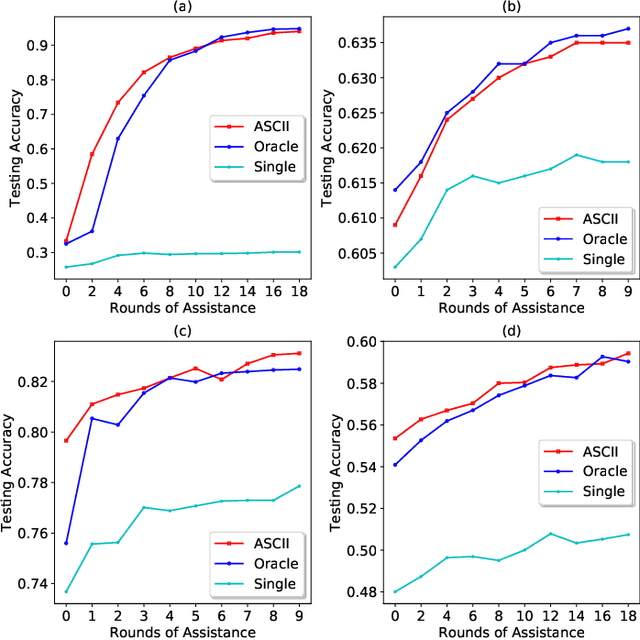

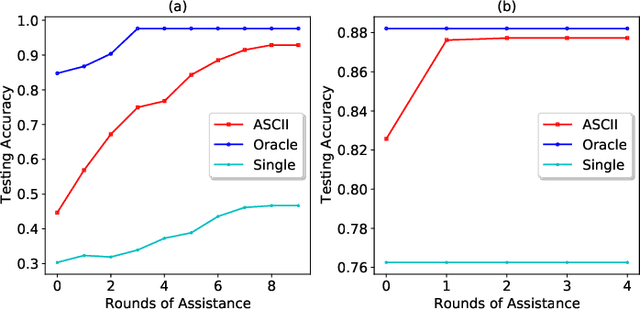

ASCII: ASsisted Classification with Ignorance Interchange

Oct 21, 2020

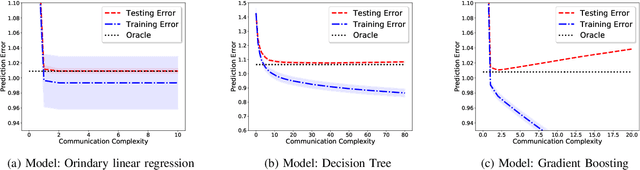

Abstract:The rapid development in data collecting devices and computation platforms produces an emerging number of agents, each equipped with a unique data modality over a particular population of subjects. While the predictive performance of an agent may be enhanced by transmitting other data to it, this is often unrealistic due to intractable transmission costs and security concerns. In this paper, we propose a method named ASCII for an agent to improve its classification performance through assistance from other agents. The main idea is to iteratively interchange an ignorance value between 0 and 1 for each collated sample among agents, where the value represents the urgency of further assistance needed. The method is naturally suitable for privacy-aware, transmission-economical, and decentralized learning scenarios. The method is also general as it allows the agents to use arbitrary classifiers such as logistic regression, ensemble tree, and neural network, and they may be heterogeneous among agents. We demonstrate the proposed method with extensive experimental studies.

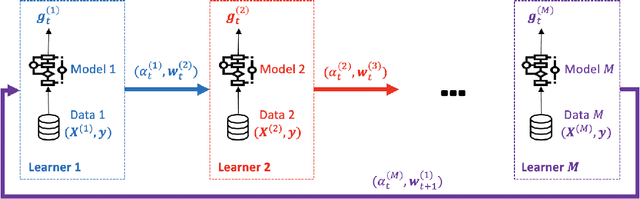

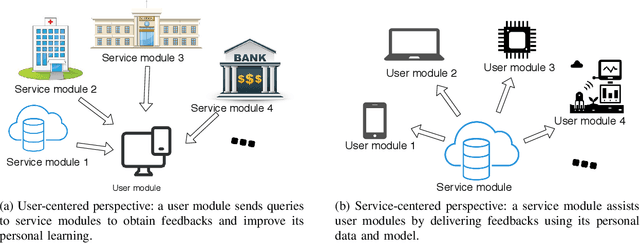

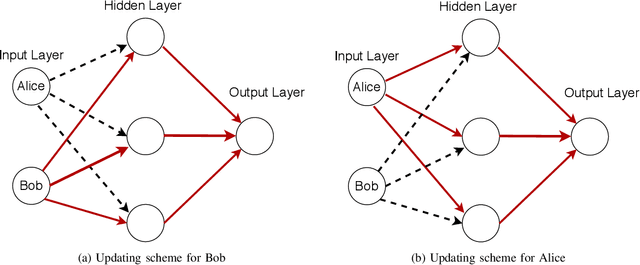

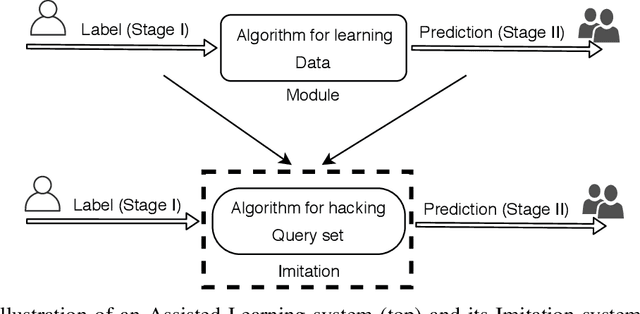

Assisted Learning and Imitation Privacy

Apr 01, 2020

Abstract:Motivated by the emerging needs of decentralized learners with personalized learning objectives, we present an Assisted Learning framework where a service provider Bob assists a learner Alice with supervised learning tasks without transmitting Bob's private algorithm or data. Bob assists Alice either by building a predictive model using Alice's labels, or by improving Alice's private learning through iterative communications where only relevant statistics are transmitted. The proposed learning framework is naturally suitable for distributed, personalized, and privacy-aware scenarios. For example, it is shown in some scenarios that two suboptimal learners could achieve much better performance through Assisted Learning. Moreover, motivated by privacy concerns in Assisted Learning, we present a new notion of privacy to quantify the privacy leakage at the learning level instead of the data level. This new privacy, named imitation privacy, is particularly suitable for a market of statistical learners, each holding private learning algorithms as well as data.
