Jagath C. Rajapakse

FedDEAP: Adaptive Dual-Prompt Tuning for Multi-Domain Federated Learning

Oct 21, 2025

Abstract:Federated learning (FL) enables multiple clients to collaboratively train machine learning models without exposing local data, balancing performance and privacy. However, domain shift and label heterogeneity across clients often hinder the generalization of the aggregated global model. Recently, large-scale vision-language models like CLIP have shown strong zero-shot classification capabilities, raising the question of how to effectively fine-tune CLIP across domains in a federated setting. In this work, we propose an adaptive federated prompt tuning framework, FedDEAP, to enhance CLIP's generalization in multi-domain scenarios. Our method includes the following three key components: (1) To mitigate the loss of domain-specific information caused by label-supervised tuning, we disentangle semantic and domain-specific features in images by using semantic and domain transformation networks with unbiased mappings; (2) To preserve domain-specific knowledge during global prompt aggregation, we introduce a dual-prompt design with a global semantic prompt and a local domain prompt to balance shared and personalized information; (3) To maximize the inclusion of semantic and domain information from images in the generated text features, we align textual and visual representations under the two learned transformations to preserve semantic and domain consistency. Theoretical analysis and extensive experiments on four datasets demonstrate the effectiveness of our method in enhancing the generalization of CLIP for federated image recognition across multiple domains.
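
The dual-prompt idea in component (2) can be illustrated with a minimal sketch. The abstract does not state the aggregation rule, so the sample-size-weighted average below (in the style of FedAvg) is an assumption, and the names `semantic_prompt`, `domain_prompt`, and `num_samples` are hypothetical. The point shown is only that the semantic prompt is shared while the domain prompt stays on the client.

```python
from typing import Dict, List

Vector = List[float]


def aggregate_global_prompt(client_prompts: List[Vector], client_sizes: List[int]) -> Vector:
    """Sample-size-weighted average of the clients' semantic prompts (assumed rule)."""
    total = sum(client_sizes)
    dim = len(client_prompts[0])
    return [
        sum(p[i] * n for p, n in zip(client_prompts, client_sizes)) / total
        for i in range(dim)
    ]


def federated_round(clients: List[Dict]) -> None:
    """One communication round: share the semantic prompt, keep the domain prompt local."""
    merged = aggregate_global_prompt(
        [c["semantic_prompt"] for c in clients],
        [c["num_samples"] for c in clients],
    )
    for c in clients:
        c["semantic_prompt"] = list(merged)  # replaced by the server average
        # c["domain_prompt"] is deliberately untouched: it never leaves the client


# Hypothetical two-client setup with 2-dimensional prompts.
clients = [
    {"semantic_prompt": [1.0, 0.0], "domain_prompt": [0.5, 0.5], "num_samples": 1},
    {"semantic_prompt": [0.0, 1.0], "domain_prompt": [-0.5, 0.2], "num_samples": 3},
]
federated_round(clients)
```

After the round, both clients hold the same semantic prompt, while their domain prompts still differ.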

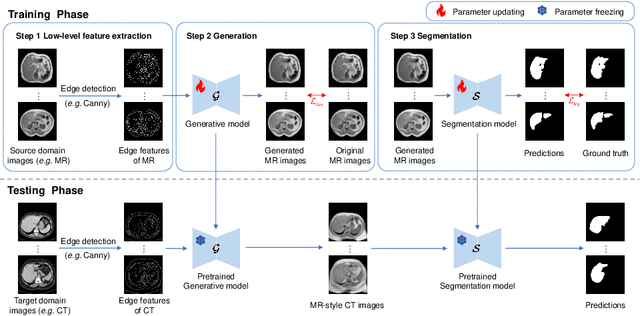

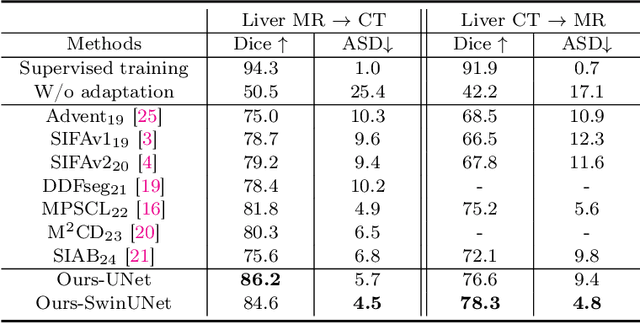

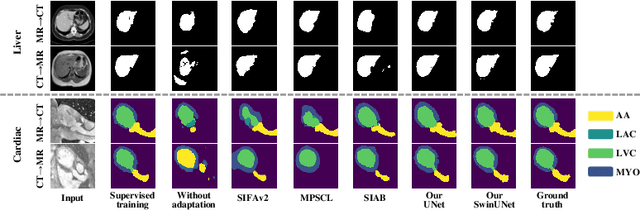

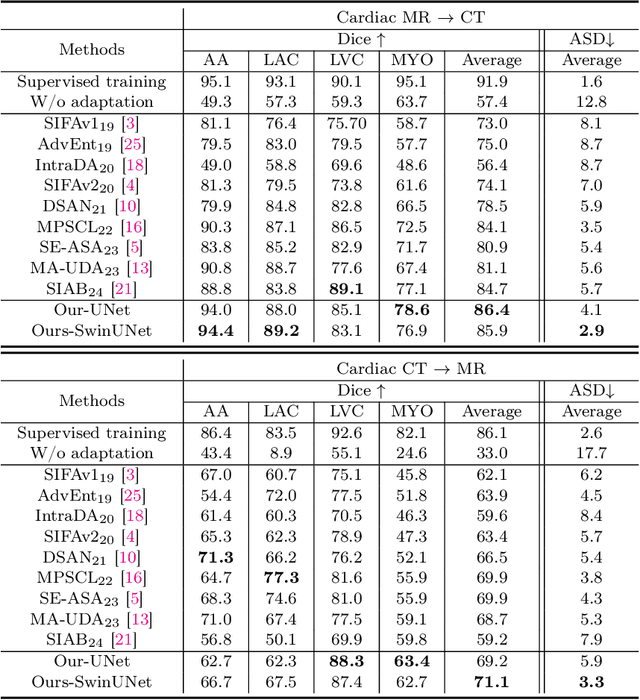

Bridging the Inter-Domain Gap through Low-Level Features for Cross-Modal Medical Image Segmentation

May 17, 2025

Abstract:This paper addresses the task of cross-modal medical image segmentation by exploring unsupervised domain adaptation (UDA) approaches. We propose a model-agnostic UDA framework, LowBridge, which builds on a simple observation that cross-modal images share some similar low-level features (e.g., edges) as they are depicting the same structures. Specifically, we first train a generative model to recover the source images from their edge features, followed by training a segmentation model on the generated source images, separately. At test time, edge features from the target images are input to the pretrained generative model to generate source-style target domain images, which are then segmented using the pretrained segmentation network. Despite its simplicity, extensive experiments on various publicly available datasets demonstrate that LowBridge achieves state-of-the-art performance, outperforming eleven existing UDA approaches under different settings. Notably, further ablation studies show that LowBridge is agnostic to different types of generative and segmentation models, suggesting its potential to be seamlessly plugged with the most advanced models to achieve even more outstanding results in the future. The code is available at https://github.com/JoshuaLPF/LowBridge.
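
The observation that images of the same structure share edges across modalities can be illustrated with a small sketch. The abstract does not say which edge extractor LowBridge uses, so the Sobel operator here is an assumption, and the toy image is hypothetical. Inverting the intensities stands in (very loosely) for a change of modality: the appearance changes but the edge map does not.

```python
import math
from typing import List

Image = List[List[float]]

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(image: Image) -> Image:
    """Gradient magnitude at each interior pixel; border pixels are left at 0."""
    h, w = len(image), len(image[0])
    edges = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(SOBEL_X[j][i] * image[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(SOBEL_Y[j][i] * image[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            edges[y][x] = math.hypot(gx, gy)
    return edges


# Toy "source" image with one vertical boundary, and an intensity-inverted copy.
source = [[0, 0, 1, 1] for _ in range(4)]
inverted = [[1 - v for v in row] for row in source]

source_edges = sobel_edges(source)
inverted_edges = sobel_edges(inverted)
```

In the pipeline described above, an edge map like this would be the input to the generative model at both training and test time.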

Deep Fourier-embedded Network for Bi-modal Salient Object Detection

Nov 27, 2024

Abstract:The rapid development of deep learning provides a significant improvement of salient object detection combining both RGB and thermal images. However, existing deep learning-based models suffer from two major shortcomings. First, the computation and memory demands of Transformer-based models with quadratic complexity are unbearable, especially in handling high-resolution bi-modal feature fusion. Second, even if learning converges to an ideal solution, there remains a frequency gap between the prediction and ground truth. Therefore, we propose a purely fast Fourier transform-based model, namely deep Fourier-embedded network (DFENet), for learning bi-modal information of RGB and thermal images. On one hand, fast Fourier transform efficiently fetches global dependencies with low complexity. Inspired by this, we design modal-coordinated perception attention to fuse the frequency gap between RGB and thermal modalities with multi-dimensional representation enhancement. To obtain reliable detailed information during decoding, we design the frequency-decomposed edge-aware module (FEM) to clarify object edges by deeply decomposing low-level features. Moreover, we equip each decoder layer with the proposed Fourier residual channel attention block to prioritize high-frequency information while aligning channel global relationships. On the other hand, we propose co-focus frequency loss (CFL) to steer FEM towards minimizing the frequency gap. CFL dynamically weights hard frequencies during edge frequency reconstruction by cross-referencing the bi-modal edge information in the Fourier domain. This frequency-level refinement of edge features further contributes to the quality of the final pixel-level prediction. Extensive experiments on four bi-modal salient object detection benchmark datasets demonstrate that our proposed DFENet outperforms twelve existing state-of-the-art models.

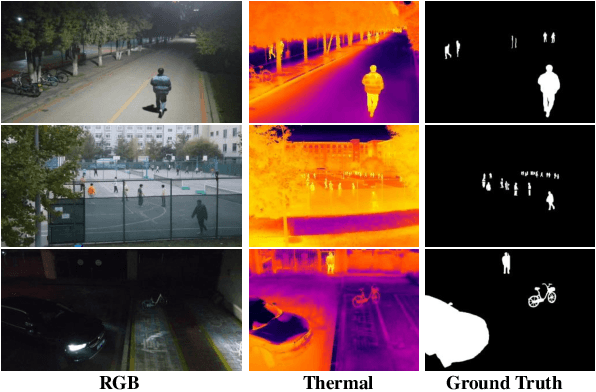

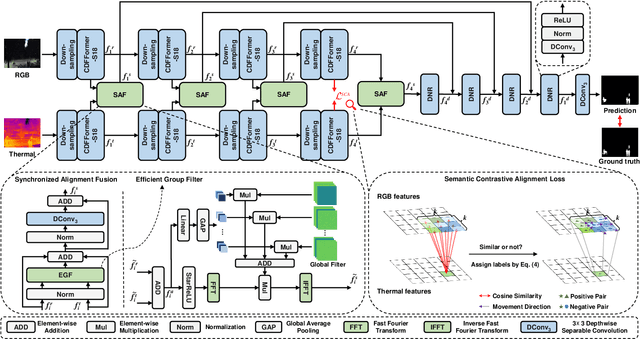

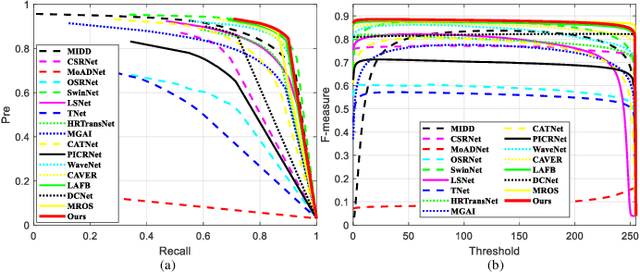

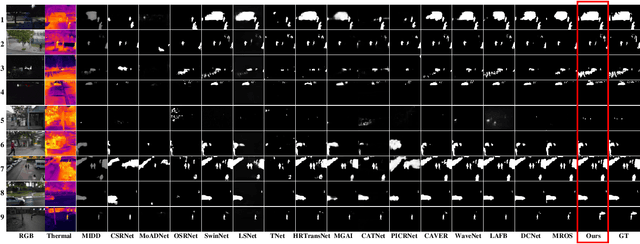

Efficient Fourier Filtering Network with Contrastive Learning for UAV-based Unaligned Bi-modal Salient Object Detection

Nov 06, 2024

Abstract:Unmanned aerial vehicle (UAV)-based bi-modal salient object detection (BSOD) aims to segment salient objects in a scene utilizing complementary cues in unaligned RGB and thermal image pairs. However, the high computational expense of existing UAV-based BSOD models limits their applicability to real-world UAV devices. To address this problem, we propose an efficient Fourier filter network with contrastive learning that achieves both real-time and accurate performance. Specifically, we first design a semantic contrastive alignment loss to align the two modalities at the semantic level, which facilitates mutual refinement in a parameter-free way. Second, inspired by the fast Fourier transform that obtains global relevance in linear complexity, we propose synchronized alignment fusion, which aligns and fuses bi-modal features in the channel and spatial dimensions by a hierarchical filtering mechanism. Our proposed model, AlignSal, reduces the number of parameters by 70.0%, decreases the floating point operations by 49.4%, and increases the inference speed by 152.5% compared to the cutting-edge BSOD model (i.e., MROS). Extensive experiments on the UAV RGB-T 2400 and three weakly aligned datasets demonstrate that AlignSal achieves both real-time inference speed and better performance and generalizability compared to sixteen state-of-the-art BSOD models across most evaluation metrics. In addition, our ablation studies further verify AlignSal's potential in boosting the performance of existing aligned BSOD models on UAV-based unaligned data. The code is available at: https://github.com/JoshuaLPF/AlignSal.
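
A contrastive alignment loss between two modalities can be sketched as follows. The abstract does not give the exact form of the semantic contrastive alignment loss, so this uses the common InfoNCE formulation as an assumption. The function names and the `temperature` value are hypothetical. Note that the loss itself has no learnable parameters, which is consistent with the "parameter-free" description.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def info_nce(rgb: List[Vector], thermal: List[Vector], temperature: float = 0.1) -> float:
    """Mean cross-entropy of matching each RGB embedding to its paired thermal embedding."""
    rgb = [normalize(v) for v in rgb]
    thermal = [normalize(v) for v in thermal]
    loss = 0.0
    for i, r in enumerate(rgb):
        logits = [sum(a * b for a, b in zip(r, t)) / temperature for t in thermal]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        loss += log_denominator - logits[i]
    return loss / len(rgb)


# Hypothetical embeddings: pair i of RGB should match pair i of thermal.
rgb_embeddings = [[1.0, 0.0], [0.0, 1.0]]
aligned_loss = info_nce(rgb_embeddings, [[1.0, 0.0], [0.0, 1.0]])
swapped_loss = info_nce(rgb_embeddings, [[0.0, 1.0], [1.0, 0.0]])
```

The loss is small when paired embeddings point the same way and large when the pairing is wrong, which is the signal that pulls the two modalities together at the semantic level.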

Discovering robust biomarkers of neurological disorders from functional MRI using graph neural networks: A Review

May 01, 2024

Abstract:Graph neural networks (GNN) have emerged as a popular tool for modelling functional magnetic resonance imaging (fMRI) datasets. Many recent studies have reported significant improvements in disorder classification performance via more sophisticated GNN designs and highlighted salient features that could be potential biomarkers of the disorder. In this review, we provide an overview of how GNN and model explainability techniques have been applied on fMRI datasets for disorder prediction tasks, with a particular emphasis on the robustness of biomarkers produced for neurodegenerative diseases and neuropsychiatric disorders. We found that while most studies have performant models, salient features highlighted in these studies vary greatly across studies on the same disorder and little has been done to evaluate their robustness. To address these issues, we suggest establishing new standards that are based on objective evaluation metrics to determine the robustness of these potential biomarkers. We further highlight gaps in the existing literature and put together a prediction-attribution-evaluation framework that could set the foundations for future research on improving the robustness of potential biomarkers discovered via GNNs.

Integrating Contrastive Learning into a Multitask Transformer Model for Effective Domain Adaptation

Oct 07, 2023

Abstract:While speech emotion recognition (SER) research has made significant progress, achieving generalization across various corpora continues to pose a problem. We propose a novel domain adaptation technique that embodies a multitask framework with SER as the primary task, and contrastive learning and information maximisation loss as auxiliary tasks, underpinned by fine-tuning of transformers pre-trained on large language models. Empirical results obtained through experiments on well-established datasets like IEMOCAP and MSP-IMPROV, illustrate that our proposed model achieves state-of-the-art performance in SER within cross-corpus scenarios.

Self-supervised learning for hotspot detection and isolation from thermal images

Aug 25, 2023

Abstract:Hotspot detection using thermal imaging has recently become essential in several industrial applications, such as security applications, health applications, and equipment monitoring applications. Hotspot detection is of utmost importance in industrial safety where equipment can develop anomalies. Hotspots are early indicators of such anomalies. We address the problem of hotspot detection in thermal images by proposing a self-supervised learning approach. Self-supervised learning has shown potential as a competitive alternative to its supervised learning counterparts, but its application to thermography has been limited. This has been due to lack of diverse data availability, domain specific pre-trained models, standardized benchmarks, etc. We propose a self-supervised representation learning approach followed by fine-tuning that improves detection of hotspots by classification. The SimSiam network based ensemble classifier decides whether an image contains hotspots or not. Detection of hotspots is followed by precise hotspot isolation. By doing so, we are able to provide a highly accurate and precise hotspot identification, applicable to a wide range of applications. We created a novel large thermal image dataset to address the issue of paucity of easily accessible thermal images. Our experiments with the dataset created by us and a publicly available segmentation dataset show the potential of our approach for hotspot detection and its ability to isolate hotspots with high accuracy. We achieve a Dice Coefficient of 0.736, the highest when compared with existing hotspot identification techniques. Our experiments also show self-supervised learning as a strong contender to supervised learning, providing competitive metrics for hotspot detection, with the highest accuracy of our approach being 97%.
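
For readers unfamiliar with the Dice coefficient reported above, a minimal sketch of the standard definition on binary masks follows. The masks are hypothetical toy data, and treating two empty masks as a perfect match is a convention chosen here, not something stated in the abstract.

```python
from typing import List


def dice_coefficient(pred: List[int], target: List[int]) -> float:
    """Dice = 2 * |pred AND target| / (|pred| + |target|) for flattened binary masks."""
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    if total == 0:
        return 1.0  # convention: two empty masks agree perfectly
    return 2.0 * intersection / total


# Toy flattened masks: one of the two predicted hotspot pixels is correct.
predicted_mask = [1, 1, 0, 0]
ground_truth = [1, 0, 1, 0]
score = dice_coefficient(predicted_mask, ground_truth)
```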

Multi-modal Graph Neural Network for Early Diagnosis of Alzheimer's Disease from sMRI and PET Scans

Jul 31, 2023

Abstract:In recent years, deep learning models have been applied to neuroimaging data for early diagnosis of Alzheimer's disease (AD). Structural magnetic resonance imaging (sMRI) and positron emission tomography (PET) images provide structural and functional information about the brain, respectively. Combining these features leads to better performance than using a single modality alone in building predictive models for AD diagnosis. However, current multi-modal approaches in deep learning, based on sMRI and PET, are mostly limited to convolutional neural networks, which do not facilitate integration of both image and phenotypic information of subjects. We propose to use graph neural networks (GNN) that are designed to deal with problems in non-Euclidean domains. In this study, we demonstrate how brain networks can be created from sMRI or PET images and be used in a population graph framework that can combine phenotypic information with imaging features of these brain networks. Then, we present a multi-modal GNN framework where each modality has its own branch of GNN and a technique is proposed to combine the multi-modal data at both the level of node vectors and adjacency matrices. Finally, we perform late fusion to combine the preliminary decisions made in each branch and produce a final prediction. As multi-modality data becomes available, AD diagnosis is trending toward multi-source, multi-modal methods. We conducted explorative experiments based on multi-modal imaging data combined with non-imaging phenotypic information for AD diagnosis and analyzed the impact of phenotypic information on diagnostic performance. Results from experiments demonstrated that our proposed multi-modal approach improves performance for AD diagnosis, and this study also provides a technical reference and supports the need for multivariate multi-modal diagnosis methods.
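
Two of the steps described above, building a population graph and late fusion, can be sketched in a few lines. The abstract does not specify either rule, so both are assumptions: edges are weighted by imaging similarity times the number of shared phenotypic attributes (a common population-graph construction), and late fusion is a plain average of per-branch class probabilities. All names and values are hypothetical.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def population_adjacency(features: List[Vector], phenotypes: List[Dict[str, str]]) -> List[Vector]:
    """Edge weight = (number of shared phenotypic attributes) * (imaging feature similarity)."""
    n = len(features)
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            shared = sum(1 for k, v in phenotypes[i].items() if phenotypes[j].get(k) == v)
            adj[i][j] = adj[j][i] = shared * cosine_similarity(features[i], features[j])
    return adj


def late_fusion(branch_probs: List[Vector]) -> Vector:
    """Average the class probabilities predicted by each modality branch."""
    n_classes = len(branch_probs[0])
    return [sum(p[c] for p in branch_probs) / len(branch_probs) for c in range(n_classes)]


# Three hypothetical subjects with 2-dimensional imaging features.
features = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
phenotypes = [
    {"sex": "F", "site": "A"},
    {"sex": "F", "site": "B"},
    {"sex": "M", "site": "A"},
]
adjacency = population_adjacency(features, phenotypes)

# Hypothetical per-branch outputs for one subject: [P(control), P(AD)].
fused = late_fusion([[0.6, 0.4], [0.8, 0.2]])
```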

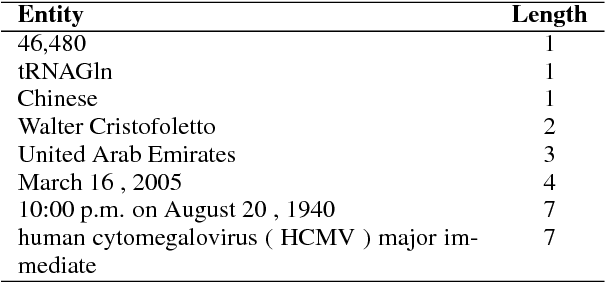

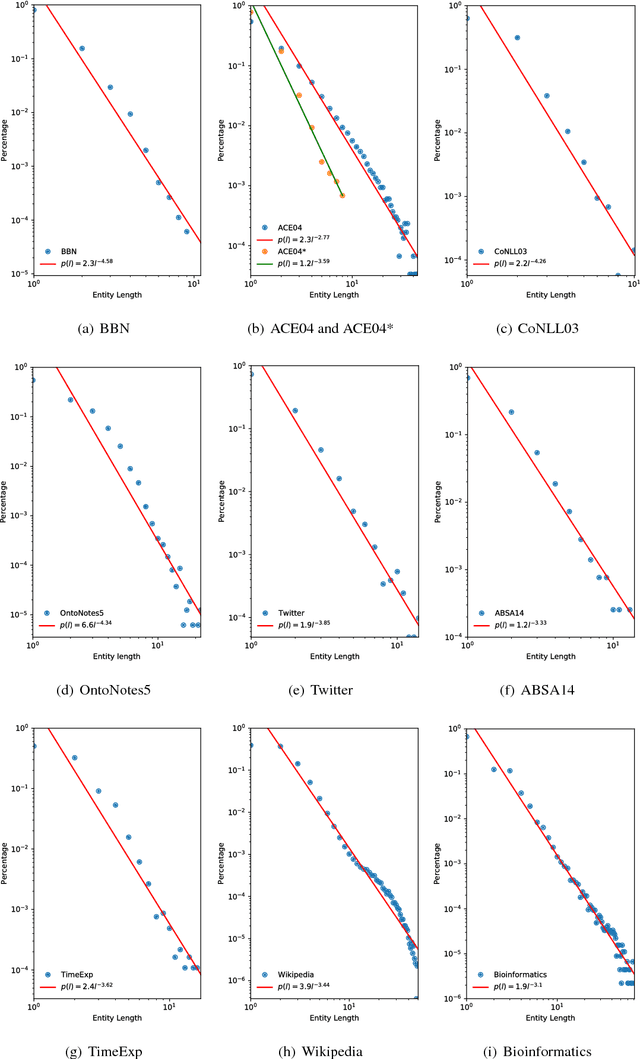

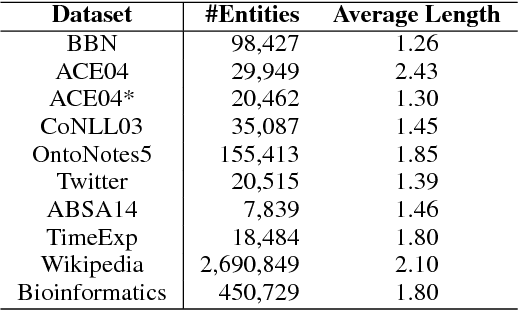

Discovering Power Laws in Entity Length

Dec 02, 2018

Abstract:This paper presents a discovery that the length of the entities in various datasets follows a family of scale-free power law distributions. The concept of entity here broadly includes the named entity, entity mention, time expression, aspect term, and domain-specific entity that are well investigated in natural language processing and related areas. The entity length denotes the number of words in an entity. The power law distributions in entity length possess the scale-free property and have well-defined means and finite variances. We explain the phenomenon of power laws in entity length by the principle of least effort in communication and the preferential mechanism.
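
The statement about well-defined means and finite variances constrains the exponent. As an illustration under a continuous approximation (entity length is discrete, so this is a sketch rather than the paper's derivation), take a power law with exponent $\alpha$ and lower cutoff $x_{\min}$, both symbols introduced here:

$$
p(x) = \frac{\alpha - 1}{x_{\min}} \left(\frac{x}{x_{\min}}\right)^{-\alpha}, \quad x \ge x_{\min},
\qquad
\mathbb{E}[x^m] = \int_{x_{\min}}^{\infty} x^m\, p(x)\, dx = \frac{\alpha - 1}{\alpha - 1 - m}\, x_{\min}^{m} \quad (\alpha > m + 1).
$$

The integral converges only when $\alpha > m + 1$, so the mean ($m = 1$) exists for $\alpha > 2$ and the variance ($m = 2$) is finite for $\alpha > 3$.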

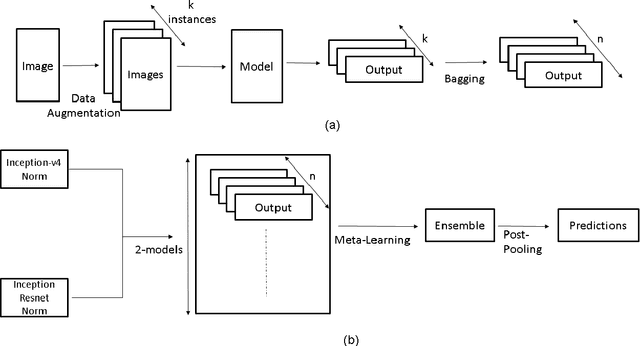

Deep neural network ensemble by data augmentation and bagging for skin lesion classification

Jul 24, 2018

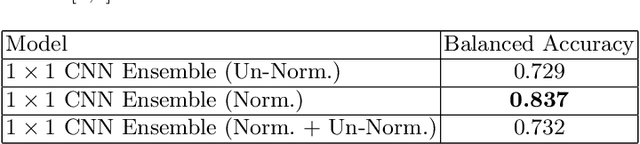

Abstract:This work summarizes our submission for Task 3: Disease Classification of the ISIC 2018 challenge in Skin Lesion Analysis Towards Melanoma Detection. We use a novel deep neural network (DNN) ensemble architecture introduced by us that can effectively classify skin lesions by using data-augmentation and bagging to address paucity of data and prevent over-fitting. The ensemble is composed of two DNN architectures: Inception-v4 and Inception-Resnet-v2. The DNN architectures are combined into an ensemble by using a $1\times1$ convolution for fusion in a meta-learning layer.
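
Fusing model outputs with a $1\times1$ convolution amounts to a learned weighted sum across models for each class. The sketch below shows that reading with a single filter. How the authors arrange the tensors is not stated in the abstract, so the layout, the weights, and the three-class probabilities are all hypothetical.

```python
from typing import List


def fuse_1x1(model_probs: List[List[float]], weights: List[float], bias: float = 0.0) -> List[float]:
    """One 1x1 filter over the model axis: each class score is a weighted sum over models."""
    n_classes = len(model_probs[0])
    return [
        sum(w * probs[c] for w, probs in zip(weights, model_probs)) + bias
        for c in range(n_classes)
    ]


# Hypothetical class probabilities from the two base networks (3 classes for brevity).
inception_v4 = [0.7, 0.2, 0.1]
inception_resnet_v2 = [0.5, 0.4, 0.1]

# In training, the weights would be learned; equal weights are used here for illustration.
fused = fuse_1x1([inception_v4, inception_resnet_v2], weights=[0.5, 0.5])
predicted_class = max(range(len(fused)), key=fused.__getitem__)
```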
