Pengfei Lyu

Bias-Corrected Data Synthesis for Imbalanced Learning

Oct 30, 2025

Abstract:Imbalanced data, where the positive samples represent only a small proportion compared to the negative samples, makes it challenging for classification problems to balance the false positive and false negative rates. A common approach to addressing the challenge involves generating synthetic data for the minority group and then training classification models with both observed and synthetic data. However, since the synthetic data depends on the observed data and fails to replicate the original data distribution accurately, prediction accuracy is reduced when the synthetic data is naively treated as the true data. In this paper, we address the bias introduced by synthetic data and provide consistent estimators for this bias by borrowing information from the majority group. We propose a bias correction procedure to mitigate the adverse effects of synthetic data, enhancing prediction accuracy while avoiding overfitting. This procedure is extended to broader scenarios with imbalanced data, such as imbalanced multi-task learning and causal inference. Theoretical properties, including bounds on bias estimation errors and improvements in prediction accuracy, are provided. Simulation results and data analysis on handwritten digit datasets demonstrate the effectiveness of our method.
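The "common approach" this abstract starts from can be sketched as follows. This is a minimal SMOTE-style interpolation written for illustration only; the function name `synthesize_minority`, the toy Gaussian data, and the choice of `k` are assumptions, and the paper's bias estimator and correction step are not reproduced here. The sketch makes visible why the synthetic points depend on the observed minority samples: each one is a convex combination of two of them.

```python
import numpy as np

def synthesize_minority(X_min, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating between a sampled
    minority point and one of its k nearest minority neighbours."""
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)
    # Pairwise squared distances within the minority class.
    d2 = ((X_min[:, None, :] - X_min[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)              # a point is not its own neighbour
    neighbours = np.argsort(d2, axis=1)[:, :k]
    base = rng.integers(0, n, size=n_new)     # anchor point for each new sample
    pick = neighbours[base, rng.integers(0, k, size=n_new)]
    lam = rng.random((n_new, 1))              # interpolation weight in [0, 1)
    return X_min[base] + lam * (X_min[pick] - X_min[base])

# Toy imbalanced problem (hypothetical data): 200 negatives, 10 positives.
rng = np.random.default_rng(1)
X_neg = rng.normal(0.0, 1.0, size=(200, 2))
X_pos = rng.normal(2.0, 1.0, size=(10, 2))

# Naive balancing: treat the synthetic points as if they were real positives.
X_syn = synthesize_minority(X_pos, n_new=190)
X_train = np.vstack([X_neg, X_pos, X_syn])
y_train = np.concatenate([np.zeros(200), np.ones(10 + 190)])
print(X_train.shape, y_train.mean())
```

Because every synthetic point lies on a segment between two observed positives, the augmented class can never leave the region spanned by the ten originals, which is one way the synthetic distribution can differ from the true minority distribution.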

Semi-Supervised 3D Medical Segmentation from 2D Natural Images Pretrained Model

Sep 18, 2025

Abstract:This paper explores the transfer of knowledge from general vision models pretrained on 2D natural images to improve 3D medical image segmentation. We focus on the semi-supervised setting, where only a few labeled 3D medical images are available, along with a large set of unlabeled images. To tackle this, we propose a model-agnostic framework that progressively distills knowledge from a 2D pretrained model to a 3D segmentation model trained from scratch. Our approach, M&N, involves iterative co-training of the two models using pseudo-masks generated by each other, along with our proposed learning rate guided sampling that adaptively adjusts the proportion of labeled and unlabeled data in each training batch to align with the models' prediction accuracy and stability, minimizing the adverse effect caused by inaccurate pseudo-masks. Extensive experiments on multiple publicly available datasets demonstrate that M&N achieves state-of-the-art performance, outperforming thirteen existing semi-supervised segmentation approaches under all different settings. Importantly, ablation studies show that M&N remains model-agnostic, allowing seamless integration with different architectures. This ensures its adaptability as more advanced models emerge. The code is available at https://github.com/pakheiyeung/M-N.

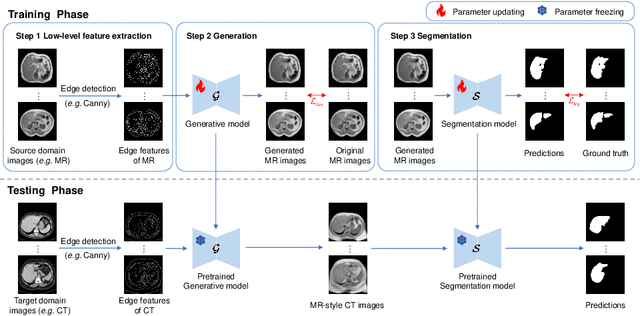

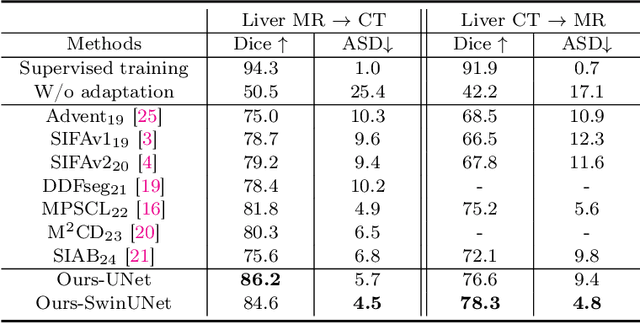

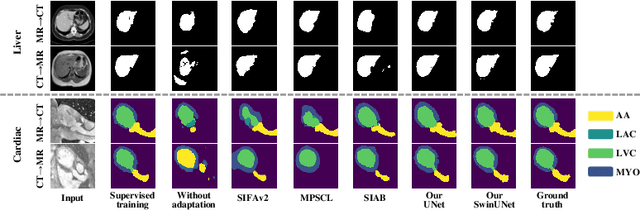

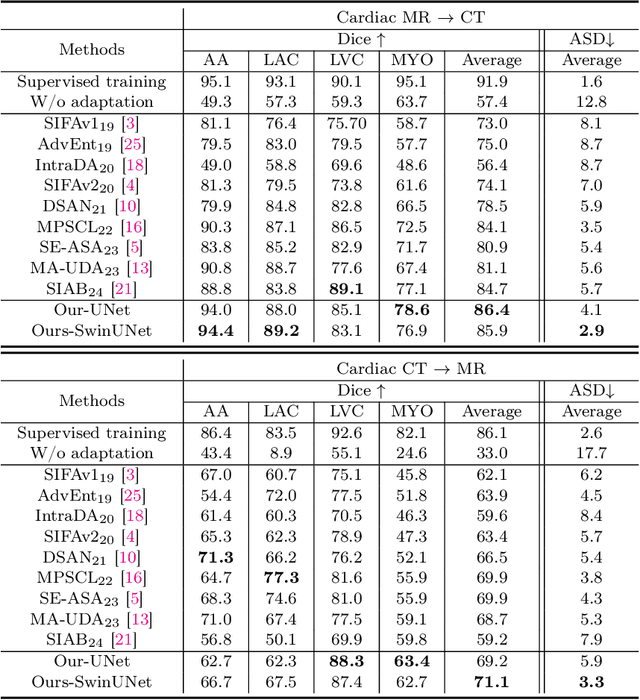

Bridging the Inter-Domain Gap through Low-Level Features for Cross-Modal Medical Image Segmentation

May 17, 2025

Abstract:This paper addresses the task of cross-modal medical image segmentation by exploring unsupervised domain adaptation (UDA) approaches. We propose a model-agnostic UDA framework, LowBridge, which builds on a simple observation that cross-modal images share some similar low-level features (e.g., edges) as they are depicting the same structures. Specifically, we first train a generative model to recover the source images from their edge features, followed by training a segmentation model on the generated source images, separately. At test time, edge features from the target images are input to the pretrained generative model to generate source-style target domain images, which are then segmented using the pretrained segmentation network. Despite its simplicity, extensive experiments on various publicly available datasets demonstrate that LowBridge achieves state-of-the-art performance, outperforming eleven existing UDA approaches under different settings. Notably, further ablation studies show that LowBridge is agnostic to different types of generative and segmentation models, suggesting its potential to be seamlessly plugged with the most advanced models to achieve even more outstanding results in the future. The code is available at https://github.com/JoshuaLPF/LowBridge.
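The observation behind this abstract, that images of the same structure share edges even when their intensities differ, can be illustrated with a small sketch. The Sobel filter, the function name `sobel_edges`, the threshold, and the two toy "modalities" are all assumptions made for illustration; the abstract does not say which edge extractor is used, and the generative and segmentation models are not reproduced.

```python
import numpy as np

def sobel_edges(img):
    """Gradient-magnitude edge map from 3x3 Sobel filters (edge-padded)."""
    img = np.asarray(img, dtype=float)
    p = np.pad(img, 1, mode="edge")
    # Horizontal derivative: right column minus left column of each 3x3 window.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[1:-1, :-2] - p[2:, :-2])
    # Vertical derivative: bottom row minus top row of each 3x3 window.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]
          - p[:-2, :-2] - 2 * p[:-2, 1:-1] - p[:-2, 2:])
    return np.hypot(gx, gy)

# One structure (a square) rendered with two different intensity mappings.
shape = np.zeros((16, 16))
shape[4:12, 4:12] = 1.0
modality_a = 0.2 + 0.6 * shape   # bright structure on a dark background
modality_b = 0.9 - 0.5 * shape   # inverted contrast

# A small threshold absorbs floating-point noise in flat regions.
edges_a = sobel_edges(modality_a) > 1e-6
edges_b = sobel_edges(modality_b) > 1e-6
print(np.array_equal(edges_a, edges_b))
```

The pixel intensities of the two toy images disagree everywhere, yet their binary edge maps coincide, which is the kind of modality-invariant input the abstract describes feeding to the generative model.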

Deep Fourier-embedded Network for Bi-modal Salient Object Detection

Nov 27, 2024

Abstract:The rapid development of deep learning has significantly improved salient object detection that combines RGB and thermal images. However, existing deep learning-based models suffer from two major shortcomings. First, the computation and memory demands of Transformer-based models with quadratic complexity are unbearable, especially in handling high-resolution bi-modal feature fusion. Second, even if learning converges to an ideal solution, there remains a frequency gap between the prediction and ground truth. Therefore, we propose a purely fast Fourier transform-based model, namely deep Fourier-embedded network (DFENet), for learning bi-modal information of RGB and thermal images. On one hand, fast Fourier transform efficiently fetches global dependencies with low complexity. Inspired by this, we design modal-coordinated perception attention to fuse the frequency gap between RGB and thermal modalities with multi-dimensional representation enhancement. To obtain reliable detailed information during decoding, we design the frequency-decomposed edge-aware module (FEM) to clarify object edges by deeply decomposing low-level features. Moreover, we equip each decoder layer with the proposed Fourier residual channel attention block to prioritize high-frequency information while aligning channel global relationships. On the other hand, we propose co-focus frequency loss (CFL) to steer FEM towards minimizing the frequency gap. CFL dynamically weights hard frequencies during edge frequency reconstruction by cross-referencing the bi-modal edge information in the Fourier domain. This frequency-level refinement of edge features further contributes to the quality of the final pixel-level prediction. Extensive experiments on four bi-modal salient object detection benchmark datasets demonstrate that our proposed DFENet outperforms twelve existing state-of-the-art models.
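The claim that the fast Fourier transform captures global dependencies at low cost rests on the convolution theorem, which the following sketch checks numerically. It is a generic 1D illustration, not a reproduction of any DFENet module; the function names and the random test signal are assumptions.

```python
import numpy as np

def fft_global_filter(x, kernel):
    """Mix every position of x with every other position using a single
    pointwise product in the frequency domain, O(N log N) overall."""
    return np.fft.ifft(np.fft.fft(x) * np.fft.fft(kernel)).real

def circular_convolution(x, kernel):
    """Direct O(N^2) reference: each output sums over all input positions."""
    n = len(x)
    return np.array([sum(x[j] * kernel[(i - j) % n] for j in range(n))
                     for i in range(n)])

rng = np.random.default_rng(0)
x = rng.normal(size=64)
kernel = rng.normal(size=64)   # kernel as long as the signal: a global receptive field

fast = fft_global_filter(x, kernel)
slow = circular_convolution(x, kernel)
print(np.allclose(fast, slow))
```

Both functions compute the same full-length circular convolution, so every output element depends on every input element. The frequency-domain version reaches that global reach without the quadratic pairwise cost that the abstract attributes to Transformer-based fusion.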

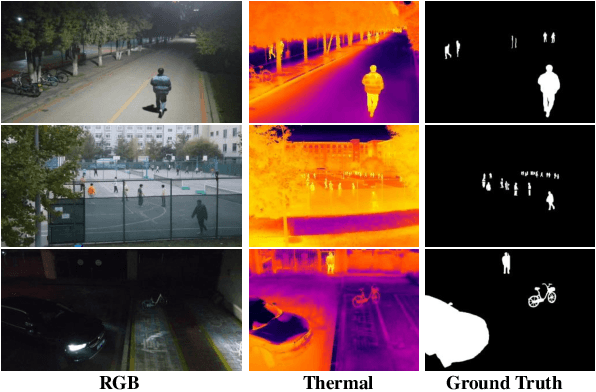

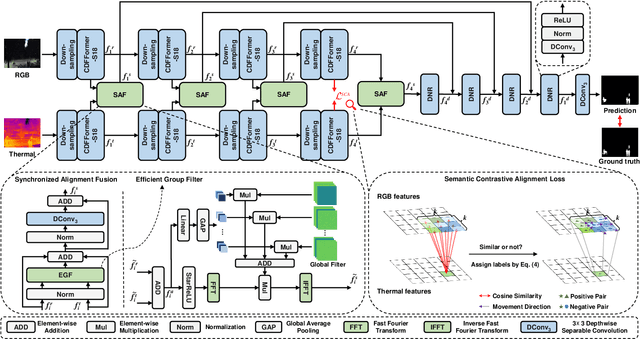

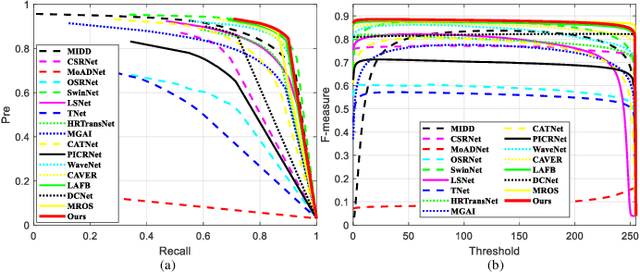

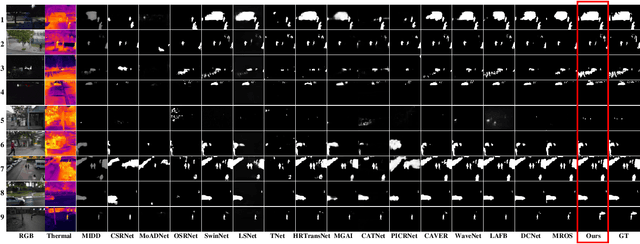

Efficient Fourier Filtering Network with Contrastive Learning for UAV-based Unaligned Bi-modal Salient Object Detection

Nov 06, 2024

Abstract:Unmanned aerial vehicle (UAV)-based bi-modal salient object detection (BSOD) aims to segment salient objects in a scene utilizing complementary cues in unaligned RGB and thermal image pairs. However, the high computational expense of existing UAV-based BSOD models limits their applicability to real-world UAV devices. To address this problem, we propose an efficient Fourier filter network with contrastive learning that achieves both real-time and accurate performance. Specifically, we first design a semantic contrastive alignment loss to align the two modalities at the semantic level, which facilitates mutual refinement in a parameter-free way. Second, inspired by the fast Fourier transform that obtains global relevance in linear complexity, we propose synchronized alignment fusion, which aligns and fuses bi-modal features in the channel and spatial dimensions by a hierarchical filtering mechanism. Our proposed model, AlignSal, reduces the number of parameters by 70.0%, decreases the floating point operations by 49.4%, and increases the inference speed by 152.5% compared to the cutting-edge BSOD model (i.e., MROS). Extensive experiments on the UAV RGB-T 2400 and three weakly aligned datasets demonstrate that AlignSal achieves both real-time inference speed and better performance and generalizability compared to sixteen state-of-the-art BSOD models across most evaluation metrics. In addition, our ablation studies further verify AlignSal's potential in boosting the performance of existing aligned BSOD models on UAV-based unaligned data. The code is available at: https://github.com/JoshuaLPF/AlignSal.

Neural-Network-based NLOS Identification in Angular Domain at 60-GHz

Jul 20, 2021

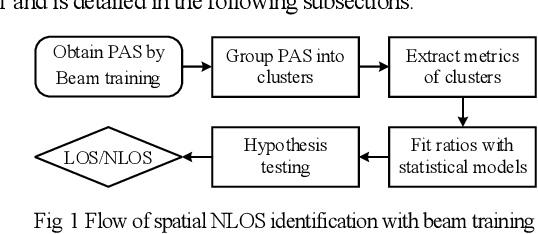

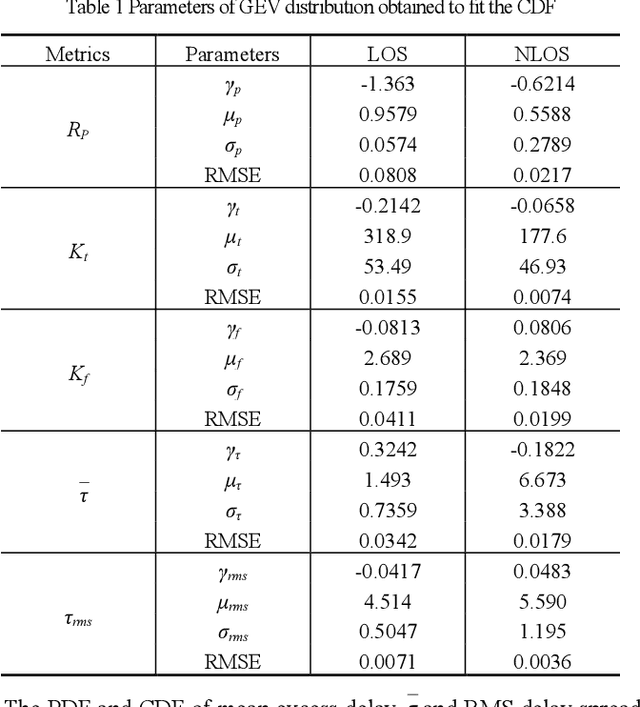

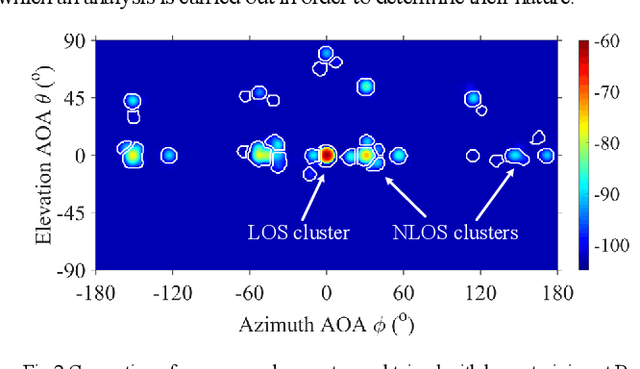

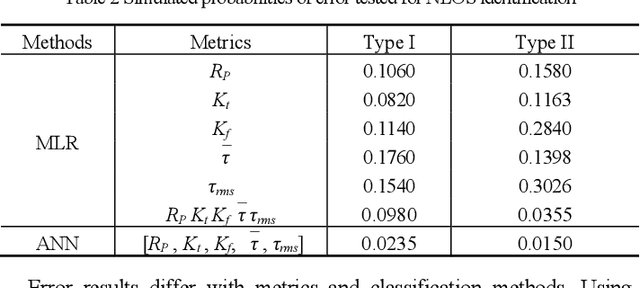

Abstract:This paper introduces an identification method that determines whether a millimeter-wave wireless transmission using directional antennas is being established over a line-of-sight (LOS) or a non-line-of-sight (NLOS) cluster for indoor localization applications. The proposed technique utilizes the channel power angular spectrum that is readily available from a beam training process. In particular, the behavior of five different channel metrics, namely the spatial-domain, time-domain, and frequency-domain channel kurtosis, the mean excess delay, and the RMS delay spread, is analyzed using a maximum likelihood ratio and an artificial neural network. A noticeable difference between LOS and NLOS clusters is observed and assessed for identification. Hypothesis testing shows errors as low as 0.01-0.02 in simulation and 0.04-0.07 in measurements at 60 GHz.
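Three of the metric types named in this abstract have common textbook definitions, sketched below. The function names, the tap delays, and the two power profiles are hypothetical, and the paper's exact definitions (for example, which samples the kurtosis is taken over in each domain) may differ from these generic forms.

```python
import numpy as np

def kurtosis(samples):
    """Fourth standardized moment of the samples."""
    s = np.asarray(samples, dtype=float)
    centred = s - s.mean()
    return (centred ** 4).mean() / (centred ** 2).mean() ** 2

def mean_excess_delay(delays, powers):
    """Power-weighted mean of the tap delays."""
    return np.sum(powers * delays) / np.sum(powers)

def rms_delay_spread(delays, powers):
    """Power-weighted standard deviation of the tap delays."""
    mean = mean_excess_delay(delays, powers)
    second_moment = np.sum(powers * delays ** 2) / np.sum(powers)
    return np.sqrt(second_moment - mean ** 2)

# Hypothetical four-tap power delay profiles (delays in ns, linear power).
delays = np.array([0.0, 5.0, 10.0, 20.0])
los_like = np.array([1.0, 0.05, 0.02, 0.01])   # one dominant early path
nlos_like = np.array([0.3, 0.3, 0.2, 0.2])     # power spread across taps

for name, p in [("LOS-like", los_like), ("NLOS-like", nlos_like)]:
    print(name,
          mean_excess_delay(delays, p),
          rms_delay_spread(delays, p),
          kurtosis(p))
```

On these toy profiles the dominant-path case has the smaller delay spread and the larger kurtosis, which is the kind of separation between LOS and NLOS clusters that a likelihood ratio or a neural network classifier could exploit.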
