Houze Liu

Multi-Scale Transformer Architecture for Accurate Medical Image Classification

Feb 10, 2025

Abstract: This study introduces an AI-driven skin lesion classification algorithm built on an enhanced Transformer architecture, addressing the challenges of accuracy and robustness in medical image analysis. By integrating a multi-scale feature fusion mechanism and refining the self-attention process, the model effectively extracts both global and local features, enhancing its ability to detect lesions with ambiguous boundaries and intricate structures. Performance evaluation on the ISIC 2017 dataset demonstrates that the improved Transformer surpasses established AI models, including ResNet50, VGG19, ResNext, and Vision Transformer, across key metrics such as accuracy, AUC, F1-Score, and Precision. Grad-CAM visualizations further highlight the interpretability of the model, showcasing strong alignment between the algorithm's focus areas and actual lesion sites. This research underscores the transformative potential of advanced AI models in medical imaging, paving the way for more accurate and reliable diagnostic tools. Future work will explore the scalability of this approach to broader medical imaging tasks and investigate the integration of multimodal data to enhance AI-driven diagnostic frameworks for intelligent healthcare.

Adversarial Neural Networks in Medical Imaging Advancements and Challenges in Semantic Segmentation

Oct 17, 2024

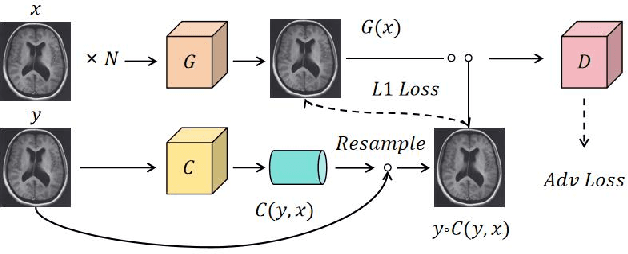

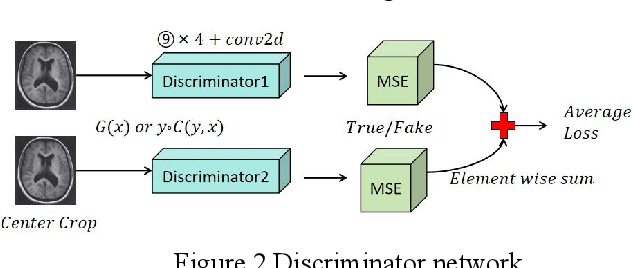

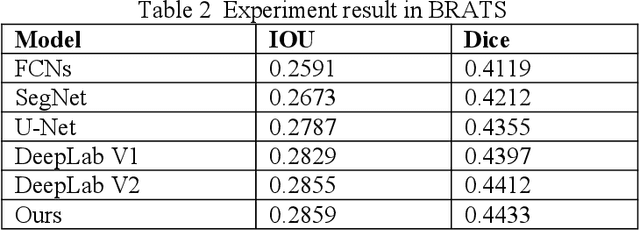

Abstract: Recent advancements in artificial intelligence (AI) have precipitated a paradigm shift in medical imaging, particularly revolutionizing the domain of brain imaging. This paper systematically investigates the integration of deep learning -- a principal branch of AI -- into the semantic segmentation of brain images. Semantic segmentation serves as an indispensable technique for the delineation of discrete anatomical structures and the identification of pathological markers, essential for the diagnosis of complex neurological disorders. Historically, the reliance on manual interpretation by radiologists, while noteworthy for its accuracy, is plagued by inherent subjectivity and inter-observer variability. This limitation becomes more pronounced with the exponential increase in imaging data, which traditional methods struggle to process efficiently and effectively. In response to these challenges, this study introduces the application of adversarial neural networks, a novel AI approach that not only automates but also refines the semantic segmentation process. By leveraging these advanced neural networks, our approach enhances the precision of diagnostic outputs, reducing human error and increasing the throughput of imaging data analysis. The paper provides a detailed discussion on how adversarial neural networks facilitate a more robust, objective, and scalable solution, thereby significantly improving diagnostic accuracies in neurological evaluations. This exploration highlights the transformative impact of AI on medical imaging, setting a new benchmark for future research and clinical practice in neurology.

Research on Deep Learning Model of Feature Extraction Based on Convolutional Neural Network

Jun 13, 2024

Abstract: Neural networks with relatively shallow layers and simple structures may have limited ability to identify pneumonia accurately. In addition, deep neural networks place heavy demands on computing resources, which may prevent convolutional neural networks from being deployed on terminal devices. Therefore, this paper optimizes convolutional neural networks for this classification task. First, according to the characteristics of pneumonia images, AlexNet and InceptionV3 were selected to obtain better image recognition results. Combining the features of medical images, the forward neural network with the deeper and more complex structure is trained. Finally, knowledge extraction technology is used to transfer what that network has learned into the AlexNet model, improving computing efficiency and reducing computing costs. The results showed that the prediction accuracy, specificity, and sensitivity of the trained AlexNet model increased by 4.25 percentage points, 7.85 percentage points, and 2.32 percentage points, respectively. Graphics processing usage decreased by 51% compared to the InceptionV3 model.
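For readers unfamiliar with the three reported metrics, the following is a minimal sketch of how accuracy, sensitivity, and specificity are computed for a binary pneumonia classifier, and how a gain in percentage points is derived. The function names and the label convention (1 = pneumonia, 0 = normal) are assumptions made for illustration; they are not taken from the paper.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity, and specificity for 0/1 labels.

    Assumed convention (not from the paper): 1 = pneumonia, 0 = normal.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "sensitivity": tp / (tp + fn) if (tp + fn) else 0.0,  # recall on positives
        "specificity": tn / (tn + fp) if (tn + fp) else 0.0,  # recall on negatives
    }


def percentage_point_gain(before, after):
    """Difference between two metric dicts, expressed in percentage points."""
    return {name: 100.0 * (after[name] - before[name]) for name in before}
```

A gain of 4.25 percentage points in accuracy therefore means the absolute difference between the two accuracy values is 0.0425, not a 4.25% relative improvement.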

Research on the Application of Computer Vision Based on Deep Learning in Autonomous Driving Technology

Jun 04, 2024

Abstract: This research explores the application of deep learning in computer vision for autonomous driving and its impact on system performance. Using convolutional neural networks (CNN), multi-task joint learning methods, and deep reinforcement learning, this article analyzes in detail how deep learning is applied in four key areas: image recognition, real-time target tracking and classification, environment perception and decision support, and path planning and navigation. Research results show that the proposed system achieves an accuracy of over 98% in image recognition and in target tracking and classification, and also demonstrates efficient performance and practicality in environment perception and decision support and in path planning and navigation. The conclusion points out that deep learning technology can significantly improve the accuracy and real-time response capabilities of autonomous driving systems. Although challenges remain in environment perception and decision support, the technology is expected to achieve wider application and greater potential as it advances.

Enhancing 3D Object Detection by Using Neural Network with Self-adaptive Thresholding

May 13, 2024

Abstract: Robust 3D object detection remains a pivotal concern in the domain of autonomous field robotics. Despite notable enhancements in detection accuracy across standard datasets, real-world urban environments, characterized by their unstructured and dynamic nature, frequently precipitate an elevated incidence of false positives, thereby undermining the reliability of existing detection paradigms. In this context, our study introduces an advanced post-processing algorithm that modulates detection thresholds dynamically relative to the distance from the ego object. Traditional perception systems typically utilize a uniform threshold, which often leads to decreased efficacy in detecting distant objects. In contrast, our proposed methodology employs a Neural Network with a self-adaptive thresholding mechanism that significantly attenuates false negatives while concurrently diminishing false positives, particularly in complex urban settings. Empirical results substantiate that our algorithm not only augments the performance of 3D object detection models in diverse urban and adverse weather scenarios but also establishes a new benchmark for adaptive thresholding techniques in field robotics.
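The idea of a distance-dependent threshold, as opposed to a uniform one, can be illustrated with a minimal sketch. This is not the paper's method: the abstract describes a neural network that adapts the threshold, whereas the sketch below substitutes a fixed linear schedule. The `Detection` class, the function names, and the values `near_thr`, `far_thr`, and `max_range` are all hypothetical, as is the assumption that the threshold should be relaxed for distant objects.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detection: box centre in the ego frame plus a confidence score."""
    x: float
    y: float
    z: float
    score: float


def distance_threshold(distance, near_thr=0.6, far_thr=0.3, max_range=80.0):
    """Linearly interpolate the score threshold from near_thr (at 0 m) to far_thr (at max_range).

    A stand-in for the learned thresholding described in the abstract.
    """
    frac = min(max(distance / max_range, 0.0), 1.0)  # clamp to [0, 1]
    return near_thr + frac * (far_thr - near_thr)


def filter_detections(detections, **schedule):
    """Keep detections whose score meets the threshold for their planar range."""
    kept = []
    for det in detections:
        planar_range = math.hypot(det.x, det.y)  # distance from the ego in the ground plane
        if det.score >= distance_threshold(planar_range, **schedule):
            kept.append(det)
    return kept
```

With this schedule, a nearby detection scoring 0.5 is discarded while a detection 60 m away scoring 0.4 is kept, which a uniform threshold of 0.5 would have reversed.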

Feature Manipulation for DDPM based Change Detection

Mar 23, 2024

Abstract: Change detection is a classic computer vision task that receives a bi-temporal image pair as input and separates its semantically changed and unchanged regions. Diffusion models are used in image synthesis and as feature extractors, and have been applied to various downstream tasks. Building on this, a feature map is extracted from a diffusion model pre-trained on a large-scale dataset, and changes are detected through an additional network. However, the current diffusion-based change detection approach focuses only on extracting a good feature map using the diffusion model; it obtains and uses feature differences without further adjustment to the created feature map. Our method focuses on manipulating the feature map extracted from the diffusion model to be more semantically useful, and for this we propose two methods: Feature Attention and FDAF. Our model with Feature Attention achieved a state-of-the-art F1 score (90.18) and IoU (83.86) on the LEVIR-CD dataset.

Comprehensive evaluation of Mal-API-2019 dataset by machine learning in malware detection

Mar 04, 2024

Abstract: This study conducts a thorough examination of malware detection using machine learning techniques, focusing on the evaluation of various classification models using the Mal-API-2019 dataset. The aim is to advance cybersecurity capabilities by identifying and mitigating threats more effectively. Both ensemble and non-ensemble machine learning methods, such as Random Forest, XGBoost, K Nearest Neighbor (KNN), and Neural Networks, are explored. Special emphasis is placed on the importance of data pre-processing techniques, particularly TF-IDF representation and Principal Component Analysis, in improving model performance. Results indicate that ensemble methods, particularly Random Forest and XGBoost, exhibit superior accuracy, precision, and recall compared to others, highlighting their effectiveness in malware detection. The paper also discusses limitations and potential future directions, emphasizing the need for continuous adaptation to address the evolving nature of malware. This research contributes to ongoing discussions in cybersecurity and provides practical insights for developing more robust malware detection systems in the digital era.
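To make the TF-IDF pre-processing step concrete, here is a minimal sketch that turns sequences of API call names into weighted feature vectors. It assumes each sample is a list of API call names and uses a smoothed IDF; the function name `tfidf` and the exact weighting are illustrative choices, not the paper's pipeline, and the subsequent PCA and classifier stages are omitted.

```python
import math
from collections import Counter


def tfidf(documents):
    """Compute TF-IDF vectors for a list of token lists.

    Returns (vocab, rows): vocab is the sorted token list and rows[i][j] is
    the weight of vocab[j] in documents[i]. Uses relative term frequency and
    a smoothed inverse document frequency (an assumed variant).
    """
    vocab = sorted({tok for doc in documents for tok in doc})
    n_docs = len(documents)
    # Number of documents each token appears in at least once.
    doc_freq = Counter(tok for doc in documents for tok in set(doc))
    idf = {tok: math.log((1 + n_docs) / (1 + doc_freq[tok])) + 1.0 for tok in vocab}

    rows = []
    for doc in documents:
        counts = Counter(doc)
        length = len(doc) or 1  # avoid division by zero for empty samples
        rows.append([counts[tok] / length * idf[tok] for tok in vocab])
    return vocab, rows


# Hypothetical samples: each is the sequence of API calls observed for one program.
samples = [
    ["CreateFileW", "WriteFile", "WriteFile"],
    ["CreateFileW", "RegOpenKeyExW"],
]
vocab, rows = tfidf(samples)
```

Calls that appear in every sample, such as `CreateFileW` here, receive the lowest IDF, so rarer calls contribute more to each vector before dimensionality reduction.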
