Hod Lipson

Beyond Cropping and Rotation: Automated Evolution of Powerful Task-Specific Augmentations with Generative Models

Feb 03, 2026Abstract:Data augmentation has long been a cornerstone for reducing overfitting in vision models, with methods like AutoAugment automating the design of task-specific augmentations. Recent advances in generative models, such as conditional diffusion and few-shot NeRFs, offer a new paradigm for data augmentation by synthesizing data with significantly greater diversity and realism. However, unlike traditional augmentations like cropping or rotation, these methods introduce substantial changes that enhance robustness but also risk degrading performance if the augmentations are poorly matched to the task. In this work, we present EvoAug, an automated augmentation learning pipeline, which leverages these generative models alongside an efficient evolutionary algorithm to learn optimal task-specific augmentations. Our pipeline introduces a novel approach to image augmentation that learns stochastic augmentation trees that hierarchically compose augmentations, enabling more structured and adaptive transformations. We demonstrate strong performance across fine-grained classification and few-shot learning tasks. Notably, our pipeline discovers augmentations that align with domain knowledge, even in low-data settings. These results highlight the potential of learned generative augmentations, unlocking new possibilities for robust model training.

Accelerating scientific discovery with the common task framework

Nov 06, 2025Abstract:Machine learning (ML) and artificial intelligence (AI) algorithms are transforming and empowering the characterization and control of dynamic systems in the engineering, physical, and biological sciences. These emerging modeling paradigms require comparative metrics to evaluate a diverse set of scientific objectives, including forecasting, state reconstruction, generalization, and control, while also considering limited data scenarios and noisy measurements. We introduce a common task framework (CTF) for science and engineering, which features a growing collection of challenge data sets with a diverse set of practical and common objectives. The CTF is a critically enabling technology that has contributed to the rapid advance of ML/AI algorithms in traditional applications such as speech recognition, language processing, and computer vision. There is a critical need for the objective metrics of a CTF to compare the diverse algorithms being rapidly developed and deployed in practice today across science and engineering.

RL-AUX: Reinforcement Learning for Auxiliary Task Generation

Oct 27, 2025Abstract:Auxiliary Learning (AL) is a special case of Multi-task Learning (MTL) in which a network trains on auxiliary tasks to improve performance on its main task. This technique is used to improve generalization and, ultimately, performance on the network's main task. AL has been demonstrated to improve performance across multiple domains, including navigation, image classification, and natural language processing. One weakness of AL is the need for labeled auxiliary tasks, which can require human effort and domain expertise to generate. Meta Learning techniques have been used to solve this issue by learning an additional auxiliary task generation network that can create helpful tasks for the primary network. The most prominent techniques rely on Bi-Level Optimization, which incurs computational cost and increased code complexity. To avoid the need for Bi-Level Optimization, we present an RL-based approach to dynamically create auxiliary tasks. In this framework, an RL agent is tasked with selecting auxiliary labels for every data point in a training set. The agent is rewarded when their selection improves the performance on the primary task. We also experiment with learning optimal strategies for weighing the auxiliary loss per data point. On the 20-Superclass CIFAR100 problem, our RL approach outperforms human-labeled auxiliary tasks and performs as well as a prominent Bi-Level Optimization technique. Our weight learning approaches significantly outperform all of these benchmarks. For example, a Weight-Aware RL-based approach helps the VGG16 architecture achieve 80.9% test accuracy while the human-labeled auxiliary task setup achieved 75.53%. The goal of this work is to (1) prove that RL is a viable approach to dynamically generate auxiliary tasks and (2) demonstrate that per-sample auxiliary task weights can be learned alongside the auxiliary task labels and can achieve strong results.

Bi-Encoder Contrastive Learning for Fingerprint and Iris Biometrics

Oct 27, 2025Abstract:There has been a historic assumption that the biometrics of an individual are statistically uncorrelated. We test this assumption by training Bi-Encoder networks on three verification tasks, including fingerprint-to-fingerprint matching, iris-to-iris matching, and cross-modal fingerprint-to-iris matching using 274 subjects with $\sim$100k fingerprints and 7k iris images. We trained ResNet-50 and Vision Transformer backbones in Bi-Encoder architectures such that the contrastive loss between images sampled from the same individual is minimized. The iris ResNet architecture reaches 91 ROC AUC score for iris-to-iris matching, providing clear evidence that the left and right irises of an individual are correlated. Fingerprint models reproduce the positive intra-subject suggested by prior work in this space. This is the first work attempting to use Vision Transformers for this matching. Cross-modal matching rises only slightly above chance, which suggests that more data and a more sophisticated pipeline is needed to obtain compelling results. These findings continue challenge independence assumptions of biometrics and we plan to extend this work to other biometrics in the future. Code available: https://github.com/MatthewSo/bio_fingerprints_iris.

AutoURDF: Unsupervised Robot Modeling from Point Cloud Frames Using Cluster Registration

Dec 07, 2024

Abstract:Robot description models are essential for simulation and control, yet their creation often requires significant manual effort. To streamline this modeling process, we introduce AutoURDF, an unsupervised approach for constructing description files for unseen robots from point cloud frames. Our method leverages a cluster-based point cloud registration model that tracks the 6-DoF transformations of point clusters. Through analyzing cluster movements, we hierarchically address the following challenges: (1) moving part segmentation, (2) body topology inference, and (3) joint parameter estimation. The complete pipeline produces robot description files that are fully compatible with existing simulators. We validate our method across a variety of robots, using both synthetic and real-world scan data. Results indicate that our approach outperforms previous methods in registration and body topology estimation accuracy, offering a scalable solution for automated robot modeling.

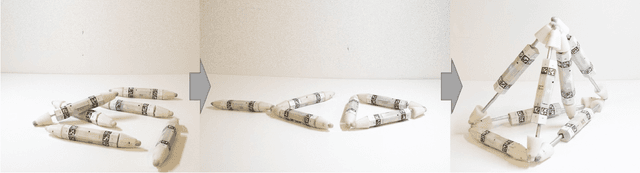

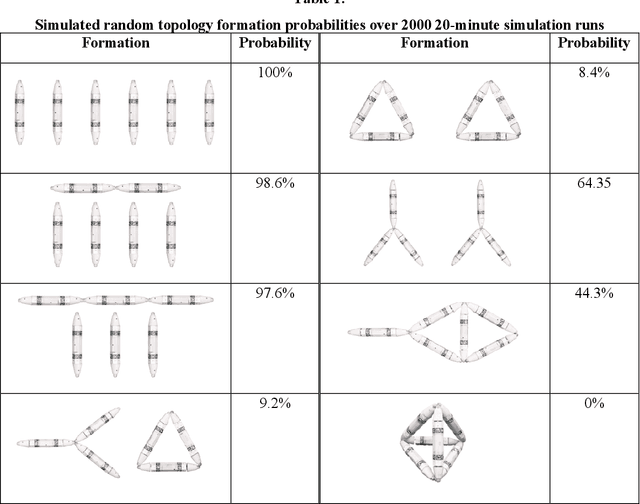

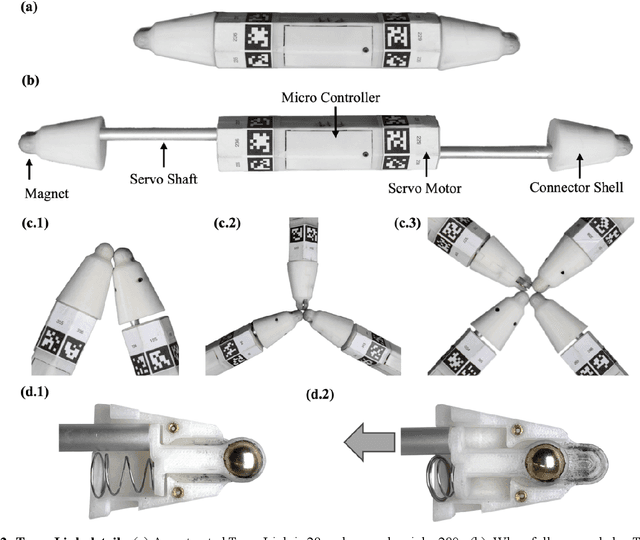

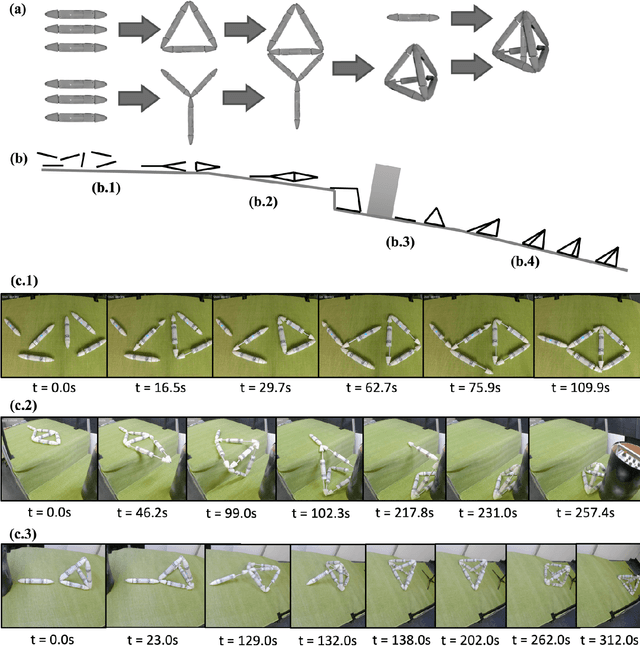

Robot Metabolism: Towards machines that can grow by consuming other machines

Nov 17, 2024

Abstract:Biological lifeforms can heal, grow, adapt, and reproduce -- abilities essential for sustained survival and development. In contrast, robots today are primarily monolithic machines with limited ability to self-repair, physically develop, or incorporate material from their environments. A key challenge to such physical adaptation has been that while robot minds are rapidly evolving new behaviors through AI, their bodies remain closed systems, unable to systematically integrate new material to grow or heal. We argue that open-ended physical adaptation is only possible when robots are designed using only a small repertoire of simple modules. This allows machines to mechanically adapt by consuming parts from other machines or their surroundings and shedding broken components. We demonstrate this principle using a truss modular robot platform composed of one-dimensional actuated bars. We show how robots in this space can grow bigger, faster, and more capable by consuming materials from their environment and from other robots. We suggest that machine metabolic processes akin to the one demonstrated here will be an essential part of any sustained future robot ecology.

Aligning AI-driven discovery with human intuition

Oct 09, 2024Abstract:As data-driven modeling of physical dynamical systems becomes more prevalent, a new challenge is emerging: making these models more compatible and aligned with existing human knowledge. AI-driven scientific modeling processes typically begin with identifying hidden state variables, then deriving governing equations, followed by predicting and analyzing future behaviors. The critical initial step of identification of an appropriate set of state variables remains challenging for two reasons. First, finding a compact set of meaningfully predictive variables is mathematically difficult and under-defined. A second reason is that variables found often lack physical significance, and are therefore difficult for human scientists to interpret. We propose a new general principle for distilling representations that are naturally more aligned with human intuition, without relying on prior physical knowledge. We demonstrate our approach on a number of experimental and simulated system where the variables generated by the AI closely resemble those chosen independently by human scientists. We suggest that this principle can help make human-AI collaboration more fruitful, as well as shed light on how humans make scientific modeling choices.

Sequencing the Neurome: Towards Scalable Exact Parameter Reconstruction of Black-Box Neural Networks

Sep 27, 2024

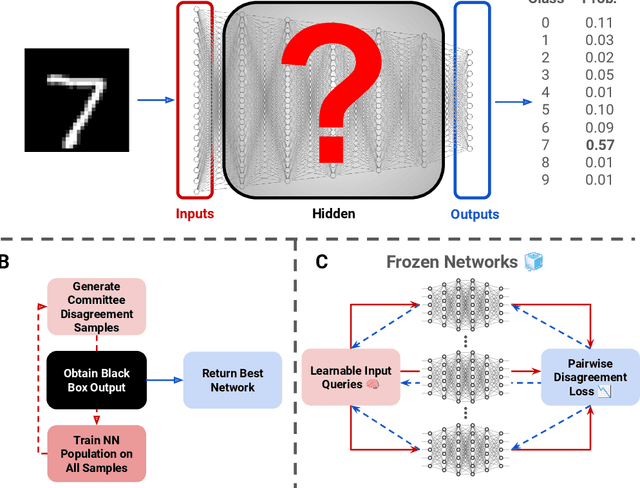

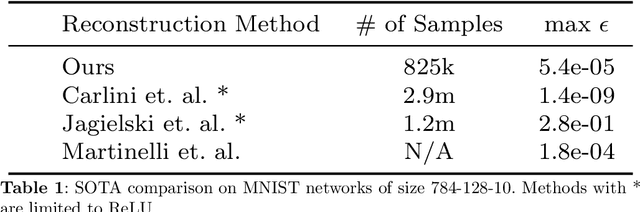

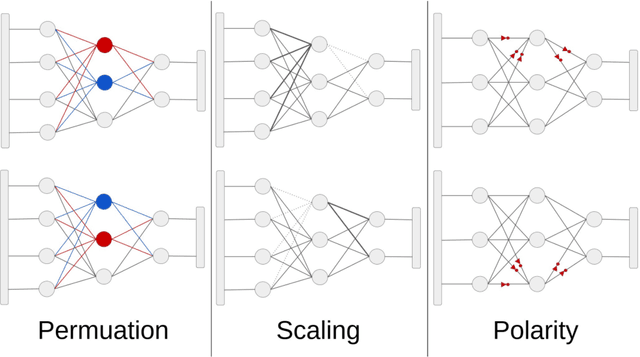

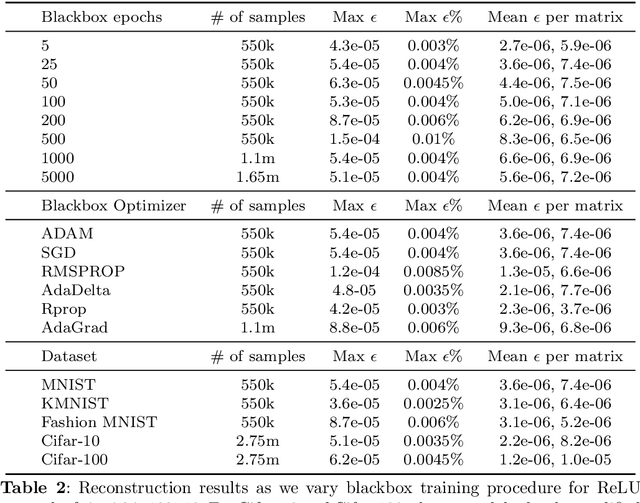

Abstract:Inferring the exact parameters of a neural network with only query access is an NP-Hard problem, with few practical existing algorithms. Solutions would have major implications for security, verification, interpretability, and understanding biological networks. The key challenges are the massive parameter space, and complex non-linear relationships between neurons. We resolve these challenges using two insights. First, we observe that almost all networks used in practice are produced by random initialization and first order optimization, an inductive bias that drastically reduces the practical parameter space. Second, we present a novel query generation algorithm that produces maximally informative samples, letting us untangle the non-linear relationships efficiently. We demonstrate reconstruction of a hidden network containing over 1.5 million parameters, and of one 7 layers deep, the largest and deepest reconstructions to date, with max parameter difference less than 0.0001, and illustrate robustness and scalability across a variety of architectures, datasets, and training procedures.

Diffusion Models Are Promising for Ab Initio Structure Solutions from Nanocrystalline Powder Diffraction Data

Jun 16, 2024

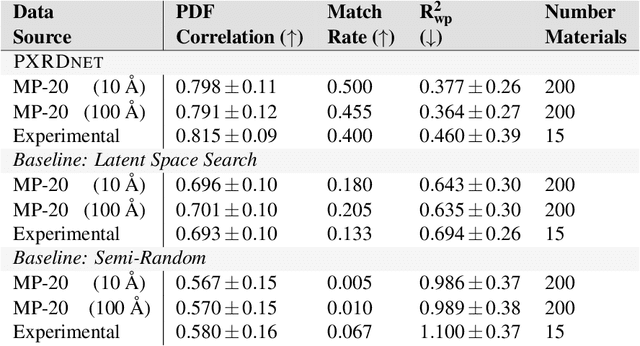

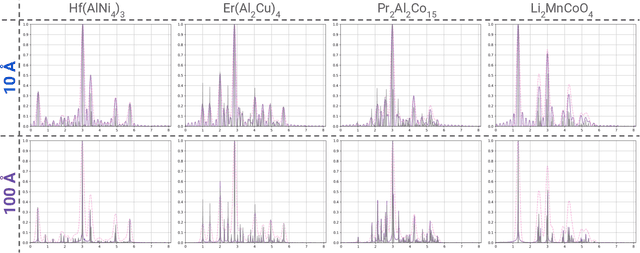

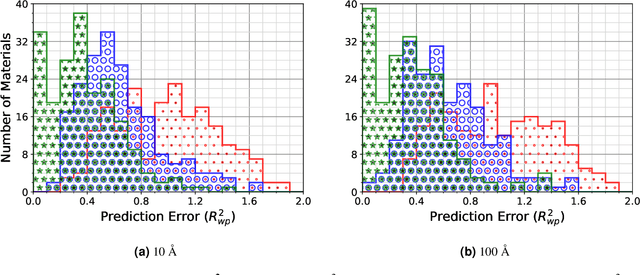

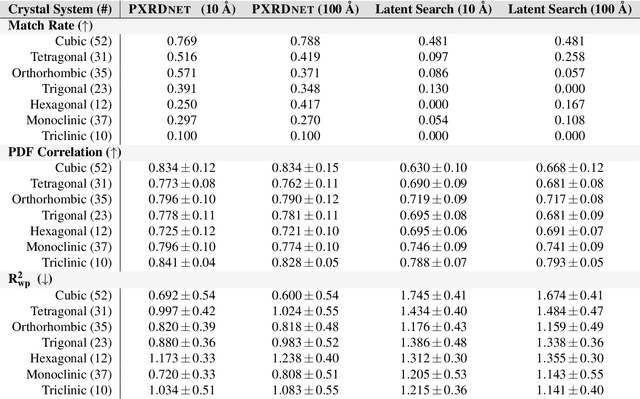

Abstract:A major challenge in materials science is the determination of the structure of nanometer sized objects. Here we present a novel approach that uses a generative machine learning model based on a Diffusion model that is trained on 45,229 known structures. The model factors both the measured diffraction pattern as well as relevant statistical priors on the unit cell of atomic cluster structures. Conditioned only on the chemical formula and the information-scarce finite-size broadened powder diffraction pattern, we find that our model, PXRDnet, can successfully solve simulated nanocrystals as small as 10 angstroms across 200 materials of varying symmetry and complexity, including structures from all seven crystal systems. We show that our model can determine structural solutions with up to $81.5\%$ accuracy, as measured by structural correlation. Furthermore, PXRDnet is capable of solving structures from noisy diffraction patterns gathered in real-world experiments. We suggest that data driven approaches, bootstrapped from theoretical simulation, will ultimately provide a path towards determining the structure of previously unsolved nano-materials.

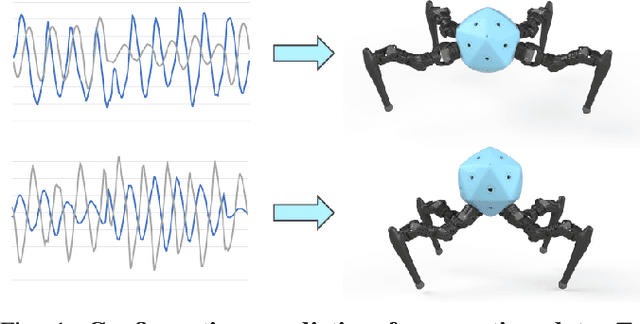

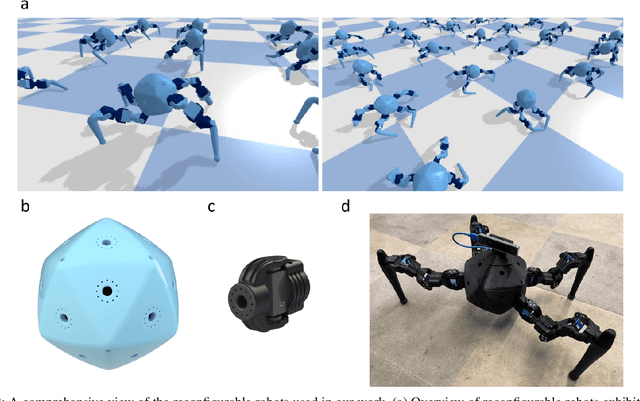

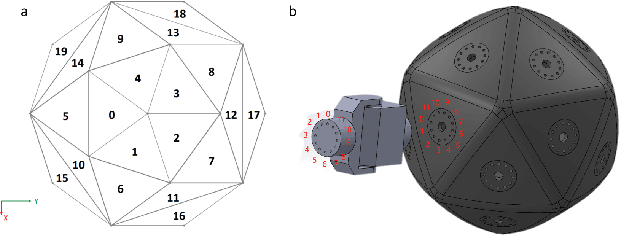

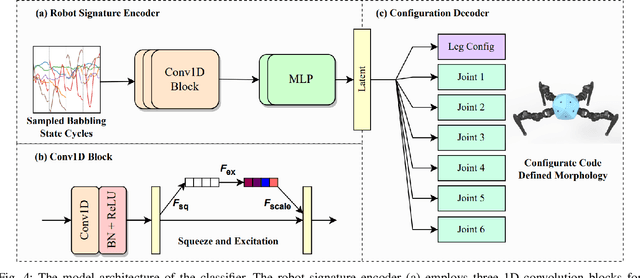

Reconfigurable Robot Identification from Motion Data

Mar 15, 2024

Abstract:Integrating Large Language Models (VLMs) and Vision-Language Models (VLMs) with robotic systems enables robots to process and understand complex natural language instructions and visual information. However, a fundamental challenge remains: for robots to fully capitalize on these advancements, they must have a deep understanding of their physical embodiment. The gap between AI models cognitive capabilities and the understanding of physical embodiment leads to the following question: Can a robot autonomously understand and adapt to its physical form and functionalities through interaction with its environment? This question underscores the transition towards developing self-modeling robots without reliance on external sensory or pre-programmed knowledge about their structure. Here, we propose a meta self modeling that can deduce robot morphology through proprioception (the internal sense of position and movement). Our study introduces a 12 DoF reconfigurable legged robot, accompanied by a diverse dataset of 200k unique configurations, to systematically investigate the relationship between robotic motion and robot morphology. Utilizing a deep neural network model comprising a robot signature encoder and a configuration decoder, we demonstrate the capability of our system to accurately predict robot configurations from proprioceptive signals. This research contributes to the field of robotic self-modeling, aiming to enhance understanding of their physical embodiment and adaptability in real world scenarios.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge