Heng Zhao

DP-CSGP: Differentially Private Stochastic Gradient Push with Compressed Communication

Dec 15, 2025

Abstract:In this paper, we propose a Differentially Private Stochastic Gradient Push with Compressed communication (termed DP-CSGP) for decentralized learning over directed graphs. Different from existing works, the proposed algorithm is designed to maintain high model utility while ensuring both rigorous differential privacy (DP) guarantees and efficient communication. For general non-convex and smooth objective functions, we show that the proposed algorithm achieves a tight utility bound of $\mathcal{O}\left( \sqrt{d\log \left( \frac{1}{\delta} \right)}/(\sqrt{n}J\epsilon) \right)$ ($J$ and $d$ are the number of local samples and the dimension of decision variables, respectively) with $\left(\epsilon, \delta\right)$-DP guarantee for each node, matching that of decentralized counterparts with exact communication. Extensive experiments on benchmark tasks show that, under the same privacy budget, DP-CSGP achieves comparable model accuracy with significantly lower communication cost than existing decentralized counterparts with exact communication.
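
To get a feel for how the stated utility bound scales, the short sketch below evaluates the expression inside the big-O for some made-up settings. It is only an illustration: the hidden constant is ignored, the function name and all numeric values are hypothetical, and $n$ is assumed here to be the number of nodes, which the abstract does not spell out.

```python
import math


def dp_csgp_utility_rate(d, n, J, epsilon, delta):
    """Evaluate sqrt(d * log(1/delta)) / (sqrt(n) * J * epsilon).

    This is the expression inside the O(.) of the abstract with the
    hidden constant dropped, so only ratios between values are meaningful.
    n is ASSUMED to be the number of nodes.
    """
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    if epsilon <= 0.0 or n <= 0 or J <= 0 or d <= 0:
        raise ValueError("d, n, J and epsilon must be positive")
    return math.sqrt(d * math.log(1.0 / delta)) / (math.sqrt(n) * J * epsilon)


# Hypothetical settings, chosen only to show the scaling.
base = dp_csgp_utility_rate(d=10_000, n=16, J=5_000, epsilon=1.0, delta=1e-5)
more_nodes = dp_csgp_utility_rate(d=10_000, n=64, J=5_000, epsilon=1.0, delta=1e-5)
looser_privacy = dp_csgp_utility_rate(d=10_000, n=16, J=5_000, epsilon=2.0, delta=1e-5)

print(f"base={base:.3e} more_nodes={more_nodes:.3e} looser_privacy={looser_privacy:.3e}")
```

Under these assumptions, quadrupling $n$ halves the value, and doubling $\epsilon$ also halves it, which matches the $1/\sqrt{n}$ and $1/\epsilon$ dependence in the bound.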

Video Set Distillation: Information Diversification and Temporal Densification

Nov 28, 2024

Abstract:The rapid development of AI models has led to a growing emphasis on enhancing their capabilities for complex input data such as videos. While large-scale video datasets have been introduced to support this growth, the unique challenges of reducing redundancies in video **sets** have not been explored. Compared to image datasets or individual videos, video **sets** have a two-layer nested structure, where the outer layer is the collection of individual videos, and the inner layer contains the correlations among frame-level data points to provide temporal information. Video **sets** have two dimensions of redundancy: within-sample and inter-sample. Existing methods such as key frame selection, dataset pruning, and dataset distillation do not address the unique challenge of video sets, since they aim at reducing redundancies in only one of the dimensions. In this work, we are the first to study Video Set Distillation, which synthesizes optimized video data by jointly addressing within-sample and inter-sample redundancies. Our Information Diversification and Temporal Densification (IDTD) method jointly reduces redundancies across both dimensions. This is achieved through a Feature Pool and Feature Selectors mechanism to preserve inter-sample diversity, alongside a Temporal Fusor that maintains temporal information density within synthesized videos. Our method achieves state-of-the-art results in Video Dataset Distillation, paving the way for more effective redundancy reduction and efficient AI model training on video datasets.

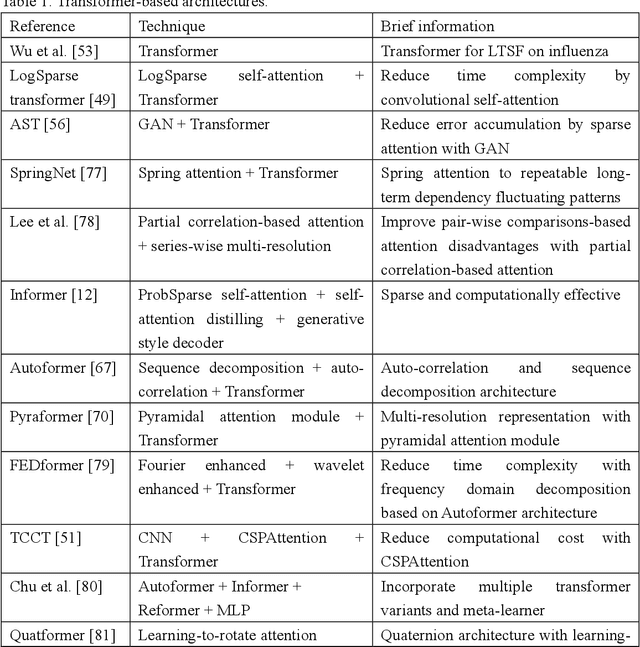

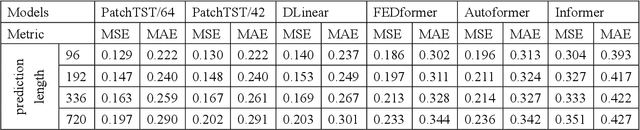

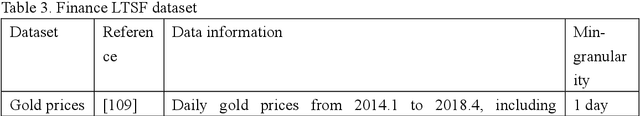

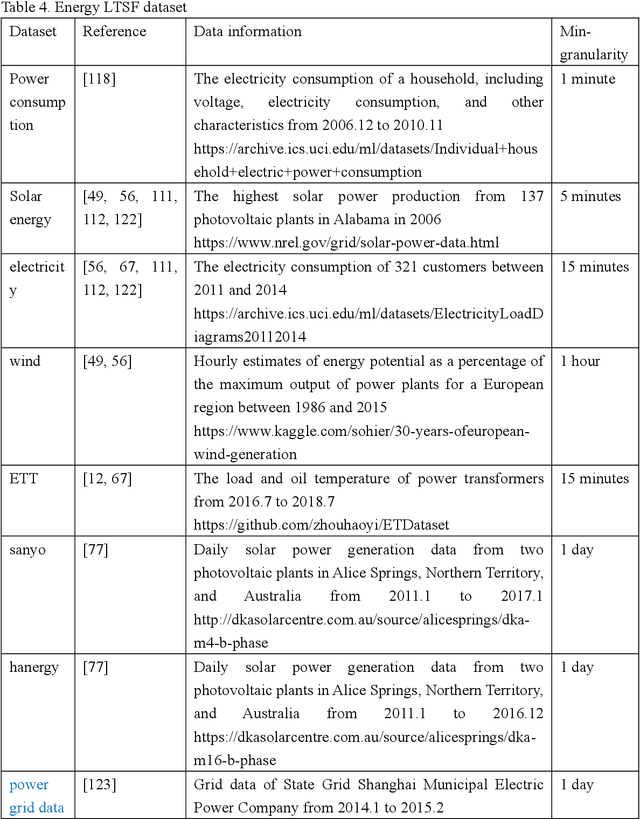

A Systematic Review for Transformer-based Long-term Series Forecasting

Oct 31, 2023

Abstract:The emergence of deep learning has yielded noteworthy advancements in time series forecasting (TSF). Transformer architectures, in particular, have been widely adopted in TSF tasks. Transformers have proven to be the most successful solution for extracting the semantic correlations among the elements within a long sequence. Numerous variants have enabled the transformer architecture to effectively handle long-term time series forecasting (LTSF) tasks. In this article, we first present a comprehensive overview of transformer architectures and their subsequent enhancements developed to address various LTSF tasks. Then, we summarize the publicly available LTSF datasets and relevant evaluation metrics. Furthermore, we provide valuable insights into the best practices and techniques for effectively training transformers in the context of time-series analysis. Lastly, we propose potential research directions in this rapidly evolving field.

Composite Fixed-Length Ordered Features for Palmprint Template Protection with Diminished Performance Loss

Nov 09, 2022

Abstract:Palmprint recognition has become increasingly popular due to its advantages over other biometric modalities such as fingerprint, in that it is larger in area, richer in information and able to work at a distance. However, the issue of palmprint privacy and security (especially palmprint template protection) remains under-studied. Most of the very few existing works use only the directional and orientation features of the palmprint with transformation processing, yielding unsatisfactory protection and identification performance. Thus, this paper proposes a palmprint template protection-oriented operator that has a fixed length and is ordered in nature, by fusing point features and orientation features. First, double orientations are extracted more accurately based on MFRAT. Then, SURF key points are extracted and converted into fixed-length, ordered features. Finally, composite features that fuse the double orientations and SURF points are transformed using the irreversible transformation of IOM to generate the revocable palmprint template. Experiments show that the EERs after irreversible transformation on the PolyU and CASIA databases are 0.17% and 0.19%, respectively, and the absolute precision losses are 0.08% and 0.07%, respectively, which demonstrates the advantage of our method.

A Demographic Attribute Guided Approach to Age Estimation

May 20, 2022

Abstract:Face-based age estimation has attracted enormous attention due to its wide applications in public security surveillance, human-computer interaction, etc. With the vigorous development of deep learning, age estimation based on deep neural networks has become the mainstream practice. However, finding a more suitable problem paradigm for age change characteristics, designing the corresponding loss function, and designing a more effective feature extraction module still need to be studied. Moreover, changes in facial age are also related to demographic attributes such as ethnicity and gender, and the dynamics of different age groups also differ considerably. This problem has so far not received enough attention. How to use demographic attribute information to improve the performance of age estimation remains to be further explored. In light of these issues, this research makes full use of auxiliary information of face attributes and proposes a new age estimation approach with an attribute guidance module. We first design a multi-scale attention residual convolution unit (MARCU) to extract robust facial features rather than simply using standard feature modules such as VGG and ResNet. Then, after being processed by fully connected (FC) layers, the facial demographic attributes are weight-summed by a 1×1 convolutional layer and eventually merged with the age features by a global FC layer. Lastly, we propose a new error compression ranking (ECR) loss to better converge the age regression value. Experimental results on three public datasets, UTKFace, LAP2016 and Morph, show that our proposed approach achieves superior performance compared to other state-of-the-art methods.

Blind Image Inpainting with Sparse Directional Filter Dictionaries for Lightweight CNNs

May 13, 2022

Abstract:Blind inpainting algorithms based on deep learning architectures have shown a remarkable performance in recent years, typically outperforming model-based methods both in terms of image quality and run time. However, neural network strategies typically lack a theoretical explanation, which contrasts with the well-understood theory underlying model-based methods. In this work, we leverage the advantages of both approaches by integrating theoretically founded concepts from transform domain methods and sparse approximations into a CNN-based approach for blind image inpainting. To this end, we present a novel strategy to learn convolutional kernels that applies a specifically designed filter dictionary whose elements are linearly combined with trainable weights. Numerical experiments demonstrate the competitiveness of this approach. Our results show not only an improved inpainting quality compared to conventional CNNs but also significantly faster network convergence within a lightweight network design.
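
The idea of building a convolutional kernel as a linear combination of fixed dictionary filters with trainable weights can be sketched as follows. This is a minimal illustration only: the three 3×3 difference filters are hypothetical stand-ins, not the sparse directional filter dictionary of the paper, and the weights are fixed by hand here rather than learned.

```python
def combine_filters(dictionary, weights):
    """Return sum_i weights[i] * dictionary[i] for equally sized 2-D filters."""
    if len(dictionary) != len(weights):
        raise ValueError("need exactly one weight per dictionary filter")
    rows, cols = len(dictionary[0]), len(dictionary[0][0])
    kernel = [[0.0] * cols for _ in range(rows)]
    for weight, filt in zip(weights, dictionary):
        for r in range(rows):
            for c in range(cols):
                kernel[r][c] += weight * filt[r][c]
    return kernel


# Hypothetical fixed dictionary: simple directional difference filters.
HORIZONTAL = [[0, 0, 0], [-1, 0, 1], [0, 0, 0]]
VERTICAL = [[0, -1, 0], [0, 0, 0], [0, 1, 0]]
DIAGONAL = [[-1, 0, 0], [0, 0, 0], [0, 0, 1]]

# In a network these coefficients would be the trainable parameters;
# the dictionary itself stays fixed.
weights = [0.5, 0.5, 0.0]
kernel = combine_filters([HORIZONTAL, VERTICAL, DIAGONAL], weights)

for row in kernel:
    print(row)
```

With this parameterization, a layer of this kind would learn one coefficient per dictionary element instead of one value per kernel entry.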

Automatic Facial Skin Feature Detection for Everyone

Mar 30, 2022

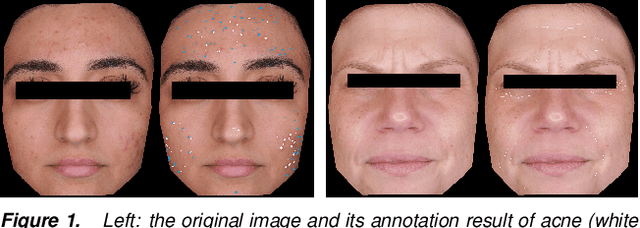

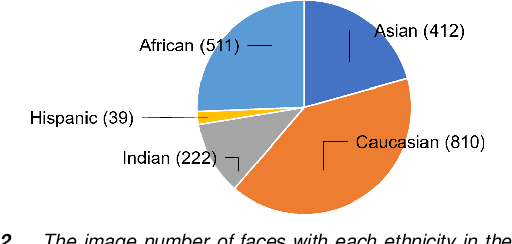

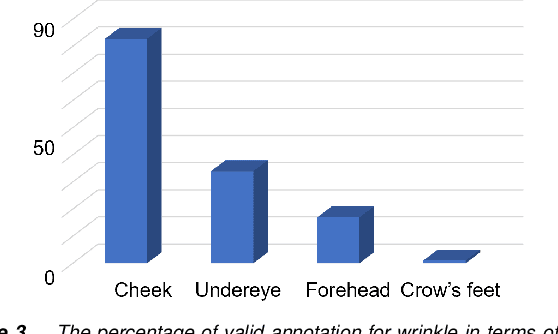

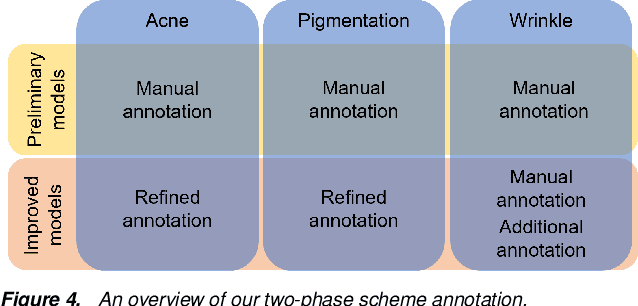

Abstract:Automatic assessment and understanding of facial skin condition have several applications, including the early detection of underlying health problems, lifestyle and dietary treatment, skin-care product recommendation, etc. Selfies in the wild serve as an excellent data resource to democratize skin quality assessment, but suffer from several data collection challenges. The key to guaranteeing an accurate assessment is accurate detection of different skin features. We present an automatic facial skin feature detection method that works across a variety of skin tones and age groups for selfies in the wild. Specifically, we annotate the locations of acne, pigmentation, and wrinkles for selfie images with different skin tone colors, severity levels, and lighting conditions. The annotation is conducted in a two-phase scheme, with a dermatologist training volunteers to annotate. We employ Unet++ as the network architecture for feature detection. This work shows that the two-phase annotation scheme can robustly detect the accurate locations of acne, pigmentation, and wrinkles for selfie images with different ethnicities, skin tone colors, severity levels, age groups, and lighting conditions.

Word2Pix: Word to Pixel Cross Attention Transformer in Visual Grounding

Jul 31, 2021

Abstract:Current one-stage methods for visual grounding encode the language query as one holistic sentence embedding before fusion with visual features. Such a formulation does not treat each word of a query sentence on par when modeling language-to-visual attention, and is therefore prone to neglecting words that are less important for the sentence embedding but critical for visual grounding. In this paper we propose Word2Pix: a one-stage visual grounding network based on an encoder-decoder transformer architecture that enables learning of textual-to-visual feature correspondence via word-to-pixel attention. The embedding of each word from the query sentence is treated alike by attending to visual pixels individually instead of through a single holistic sentence embedding. In this way, each word is given an equal opportunity to adjust the language-to-vision attention towards the referent target through multiple stacks of transformer decoder layers. We conduct experiments on the RefCOCO, RefCOCO+ and RefCOCOg datasets, and the proposed Word2Pix outperforms existing one-stage methods by a notable margin. The results also show that Word2Pix surpasses two-stage visual grounding models while keeping intact the merits of the one-stage paradigm, namely end-to-end training and real-time inference speed.
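
The word-to-pixel attention described above can be illustrated with a plain scaled dot-product cross attention in which each word embedding is a query and the pixel features serve as keys and values. This is a simplified sketch, not the Word2Pix implementation: learned projections, multiple heads, and the stacked decoder layers are omitted, and the function names and toy inputs are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def word_to_pixel_attention(word_embeddings, pixel_features):
    """Let every word attend over all pixels independently.

    Returns (attended, attention): one attended feature vector and one
    attention distribution over pixels per word.
    """
    dim = len(pixel_features[0])
    scale = 1.0 / math.sqrt(dim)
    attended, attention = [], []
    for word in word_embeddings:
        scores = [scale * sum(w * p for w, p in zip(word, pixel))
                  for pixel in pixel_features]
        weights = softmax(scores)
        attention.append(weights)
        attended.append([
            sum(weights[j] * pixel_features[j][k] for j in range(len(pixel_features)))
            for k in range(dim)
        ])
    return attended, attention


# Toy inputs: two "words" and three "pixels" in a 2-D feature space.
words = [[1.0, 0.0], [0.0, 1.0]]
pixels = [[4.0, 0.0], [0.0, 4.0], [0.0, 0.0]]
attended, attention = word_to_pixel_attention(words, pixels)

for word_index, weights in enumerate(attention):
    print(word_index, [round(w, 3) for w in weights])
```

Because each word gets its own attention distribution, a word that would contribute little to a pooled sentence embedding can still focus on the pixels it matches.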

ROSE: Real One-Stage Effort to Detect the Fingerprint Singular Point Based on Multi-scale Spatial Attention

Mar 09, 2020

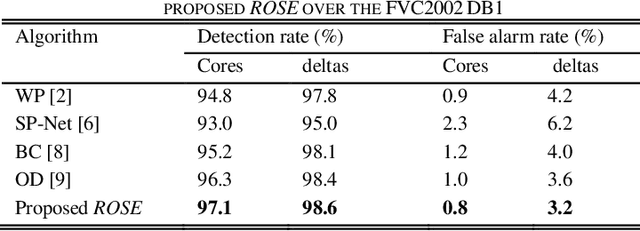

Abstract:Detecting the singular point accurately and efficiently is one of the most important tasks in fingerprint recognition. In recent years, deep learning has gradually been applied to fingerprint singular point detection. However, current deep learning-based singular point detection methods are either two-stage or multi-stage, which makes them time-consuming. More importantly, their detection accuracy is still unsatisfactory, especially for low-quality fingerprints. In this paper, we make a Real One-Stage Effort to detect fingerprint singular points more accurately and efficiently, and therefore name the proposed algorithm ROSE for short, in which multi-scale spatial attention, a Gaussian heatmap and a variant of focal loss are applied together to achieve a higher detection rate. Experimental results on the datasets FVC2002 DB1 and NIST SD4 show that our ROSE outperforms the state-of-the-art algorithms in terms of detection rate, false alarm rate and detection speed.
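
A Gaussian heatmap target for a single keypoint is commonly built by placing an isotropic Gaussian at the annotated location. The sketch below shows that general construction; the abstract does not state how ROSE builds its heatmaps, so the function name, grid size and `sigma` value are assumptions made purely for illustration.

```python
import math


def gaussian_heatmap(height, width, center_row, center_col, sigma):
    """Return a height x width grid with an isotropic Gaussian peak of value 1.0."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return [
        [
            math.exp(-((r - center_row) ** 2 + (c - center_col) ** 2) / (2.0 * sigma ** 2))
            for c in range(width)
        ]
        for r in range(height)
    ]


# Hypothetical 5x5 target with a singular point annotated at row 2, column 3.
heatmap = gaussian_heatmap(height=5, width=5, center_row=2, center_col=3, sigma=1.0)

for row in heatmap:
    print(" ".join(f"{value:.2f}" for value in row))
```

A soft target of this kind gives partial credit to predictions near the annotated point, which is one reason heatmap regression is often paired with a focal-style loss.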

Singular points detection with semantic segmentation networks

Nov 04, 2019

Abstract:Singular point detection is one of the most classical and important problems in the field of fingerprint recognition. However, current detection rates of singular points are still unsatisfactory, especially for low-quality fingerprints. Compared with traditional image processing-based detection methods, methods based on deep learning need only the original fingerprint image and not the fingerprint orientation field. In this paper, unlike other detection methods based on deep learning, we treat singular point detection as a semantic segmentation problem and use only a small amount of data for training. Furthermore, we propose a new convolutional neural network called SinNet to extract the singular regions of interest and then use a blob detection method called SimpleBlobDetector to locate the singular points. The experiments are carried out on the test dataset from SPD2010, and the proposed method performs much better than the other advanced methods in most aspects. Compared with the state-of-the-art algorithms in SPD2010, our method achieves an increase of 11% in the percentage of correctly detected fingerprints and an increase of more than 18% in the core detection rate.
