Bingding Huang

JanusDNA: A Powerful Bi-directional Hybrid DNA Foundation Model

May 22, 2025

Abstract:Large language models (LLMs) have revolutionized natural language processing and are increasingly applied to other sequential data types, including genetic sequences. However, adapting LLMs to genomics presents significant challenges. Capturing complex genomic interactions requires modeling long-range dependencies within DNA sequences, where interactions often span over 10,000 base pairs, even within a single gene, posing substantial computational burdens under conventional model architectures and training paradigms. Moreover, standard LLM training approaches are suboptimal for DNA: autoregressive training, while efficient, supports only unidirectional understanding. However, DNA is inherently bidirectional, e.g., bidirectional promoters regulate transcription in both directions and account for nearly 11% of human gene expression. Masked language models (MLMs) allow bidirectional understanding but are inefficient, as only masked tokens contribute to the loss per step. To address these limitations, we introduce JanusDNA, the first bidirectional DNA foundation model built upon a novel pretraining paradigm that combines the optimization efficiency of autoregressive modeling with the bidirectional comprehension of masked modeling. JanusDNA adopts a hybrid Mamba, Attention and Mixture of Experts (MoE) architecture, combining long-range modeling of Attention with efficient sequential learning of Mamba. MoE layers further scale model capacity via sparse activation while keeping computational cost low. Notably, JanusDNA processes up to 1 million base pairs at single nucleotide resolution on a single 80GB GPU. Extensive experiments and ablations show JanusDNA achieves new SOTA results on three genomic representation benchmarks, outperforming models with 250x more activated parameters. Code: https://github.com/Qihao-Duan/JanusDNA

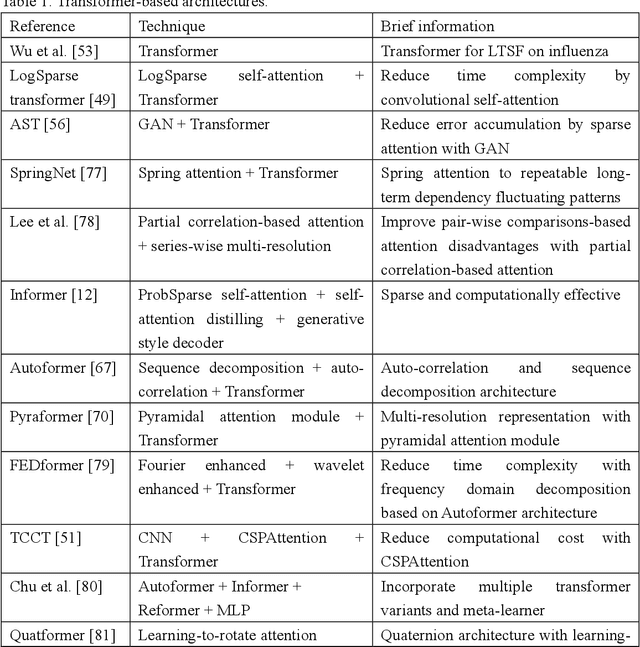

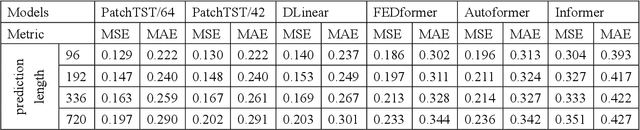

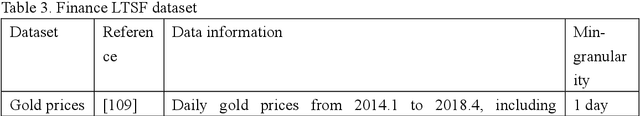

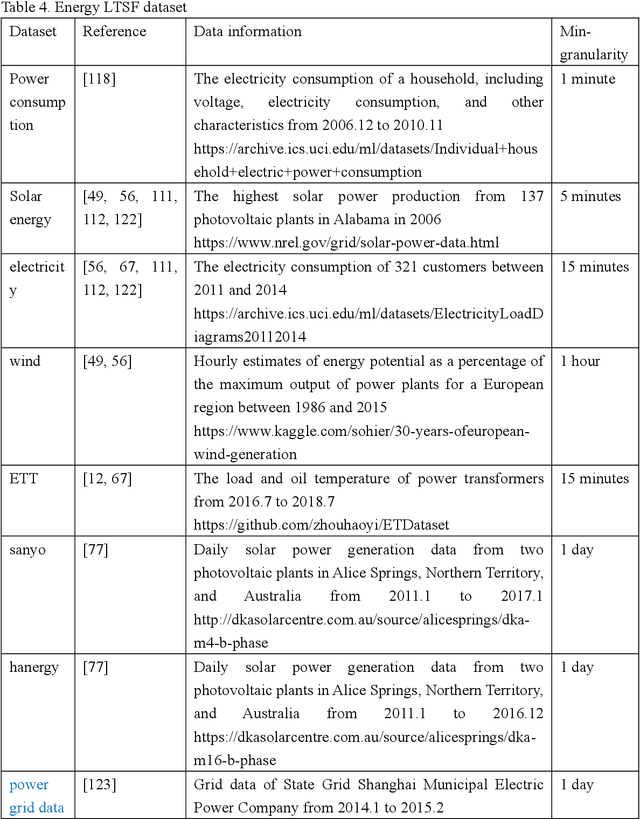

A Systematic Review for Transformer-based Long-term Series Forecasting

Oct 31, 2023

Abstract:The emergence of deep learning has yielded noteworthy advancements in time series forecasting (TSF). Transformer architectures, in particular, have witnessed broad utilization and adoption in TSF tasks. Transformers have proven to be the most successful solution to extract the semantic correlations among the elements within a long sequence. Various variants have enabled transformer architecture to effectively handle long-term time series forecasting (LTSF) tasks. In this article, we first present a comprehensive overview of transformer architectures and their subsequent enhancements developed to address various LTSF tasks. Then, we summarize the publicly available LTSF datasets and relevant evaluation metrics. Furthermore, we provide valuable insights into the best practices and techniques for effectively training transformers in the context of time-series analysis. Lastly, we propose potential research directions in this rapidly evolving field.

A Comparative Study of Pre-trained CNNs and GRU-Based Attention for Image Caption Generation

Oct 11, 2023

Abstract:Image captioning is a challenging task involving generating a textual description for an image using computer vision and natural language processing techniques. This paper proposes a deep neural framework for image caption generation using a GRU-based attention mechanism. Our approach employs multiple pre-trained convolutional neural networks as the encoder to extract features from the image and a GRU-based language model as the decoder to generate descriptive sentences. To improve performance, we integrate the Bahdanau attention model with the GRU decoder to enable learning to focus on specific image parts. We evaluate our approach using the MSCOCO and Flickr30k datasets and show that it achieves competitive scores compared to state-of-the-art methods. Our proposed framework can bridge the gap between computer vision and natural language and can be extended to specific domains.

Hybrid of representation learning and reinforcement learning for dynamic and complex robotic motion planning

Sep 07, 2023

Abstract:Motion planning is the soul of robot decision making. Classical planning algorithms like graph search and reaction-based algorithms face challenges in cases of dense and dynamic obstacles. Deep learning algorithms generate suboptimal one-step predictions that cause many collisions. Reinforcement learning algorithms generate optimal or near-optimal time-sequential predictions. However, they suffer from slow convergence, suboptimal converged results, and overfitting. This paper introduces a hybrid algorithm for robotic motion planning: long short-term memory (LSTM) pooling and skip connection for attention-based discrete soft actor critic (LSA-DSAC). First, graph network (relational graph) and attention network (attention weight) interpret the environmental state for the learning of the discrete soft actor critic algorithm. The expressive power of the attention network outperforms that of the graph network in our task, as shown by a difference analysis of these two representation methods. However, attention-based DSAC faces the overfitting problem in training. Second, the skip connection method is integrated into attention-based DSAC to mitigate overfitting and improve convergence speed. Third, LSTM pooling is used to replace the sum operator over attention weights and eliminate overfitting, at the cost of slightly slower convergence in early-stage training. Experiments show that LSA-DSAC outperforms the state-of-the-art in training and most evaluations. The physical robot is also implemented and tested in the real world.

Bayesian inference for data-efficient, explainable, and safe robotic motion planning: A review

Jul 16, 2023

Abstract:Bayesian inference has many advantages in robotic motion planning from four perspectives: uncertainty quantification of the policy, safety (risk-aware) and optimality guarantees of robot motions, data efficiency in training of reinforcement learning, and reduction of the sim2real gap when the robot is applied to real-world tasks. However, the application of Bayesian inference in robotic motion planning is lagging behind the comprehensive theory of Bayesian inference. Further, there are no comprehensive reviews that summarize the progress of Bayesian inference and give researchers a systematic understanding of its use in robotic motion planning. This paper first provides the probabilistic theories of Bayesian inference, which are the preliminaries of Bayesian inference for complex cases. Second, Bayesian estimation is presented to estimate the posterior of policies or of unknown functions that are used to compute the policy. Third, the classical model-based Bayesian RL and model-free Bayesian RL algorithms for robotic motion planning are summarized, and these algorithms in complex cases are also analyzed. Fourth, the analysis of Bayesian inference in inverse RL is given to infer the reward functions in a data-efficient manner. Fifth, we systematically present the hybridization of Bayesian inference and RL, which is a promising direction to improve the convergence of RL for better motion planning. Sixth, given Bayesian inference, we present interpretable and safe robotic motion planning, which has recently been a hot research topic. Finally, all algorithms reviewed in this paper are summarized analytically as knowledge graphs, and the future of Bayesian inference for robotic motion planning is also discussed, to pave the way for data-efficient, explainable, and safe robotic motion planning strategies for practical applications.

3D Cross Pseudo Supervision : A semi-supervised nnU-Net architecture for abdominal organ segmentation

Sep 19, 2022

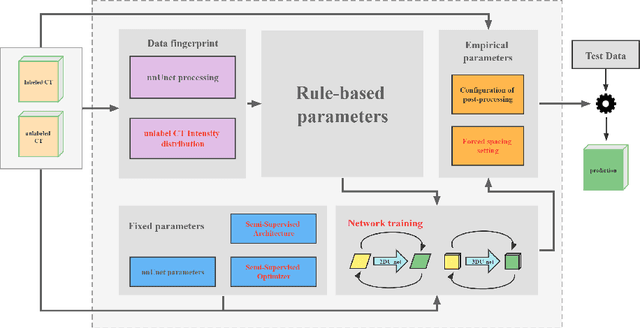

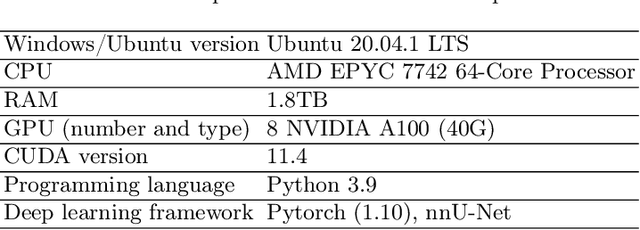

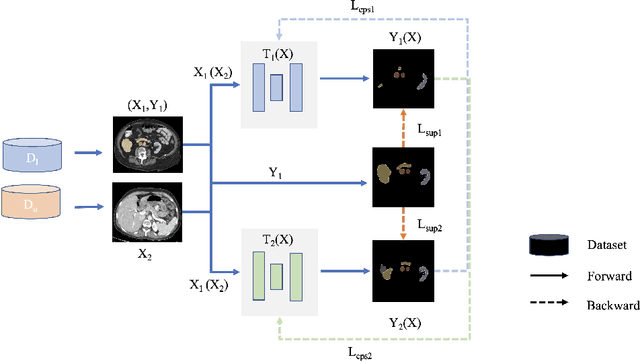

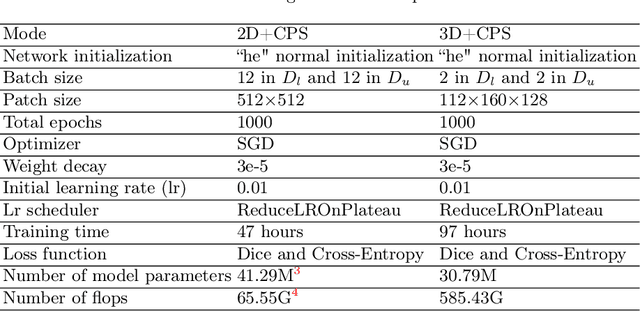

Abstract:Large curated datasets are necessary, but annotating medical images is a time-consuming, laborious, and expensive process. Therefore, recent supervised methods are focusing on utilizing a large amount of unlabeled data. However, doing so is a challenging task. To address this problem, we propose a new 3D Cross Pseudo Supervision (3D-CPS) method, a semi-supervised network architecture based on nnU-Net with the Cross Pseudo Supervision method. We design a new nnU-Net based preprocessing method and adopt the forced spacing settings strategy in the inference stage to reduce the inference time. In addition, we set the semi-supervised loss weights to increase linearly with each epoch to protect the model from low-quality pseudo-labels in the early training process. Our proposed method achieves an average dice similarity coefficient (DSC) of 0.881 and an average normalized surface distance (NSD) of 0.913 on the MICCAI FLARE2022 validation set (20 cases).

A review of motion planning algorithms for intelligent robotics

Feb 05, 2021

Abstract:We investigate and analyze principles of typical motion planning algorithms. These include traditional planning algorithms, supervised learning, optimal value reinforcement learning, policy gradient reinforcement learning. Traditional planning algorithms we investigated include graph search algorithms, sampling-based algorithms, and interpolating curve algorithms. Supervised learning algorithms include MSVM, LSTM, MCTS and CNN. Optimal value reinforcement learning algorithms include Q learning, DQN, double DQN, dueling DQN. Policy gradient algorithms include policy gradient method, actor-critic algorithm, A3C, A2C, DPG, DDPG, TRPO and PPO. New general criteria are also introduced to evaluate performance and application of motion planning algorithms by analytical comparisons. Convergence speed and stability of optimal value and policy gradient algorithms are specially analyzed. Future directions are presented analytically according to principles and analytical comparisons of motion planning algorithms. This paper provides researchers with a clear and comprehensive understanding about advantages, disadvantages, relationships, and future of motion planning algorithms in robotics, and paves ways for better motion planning algorithms.

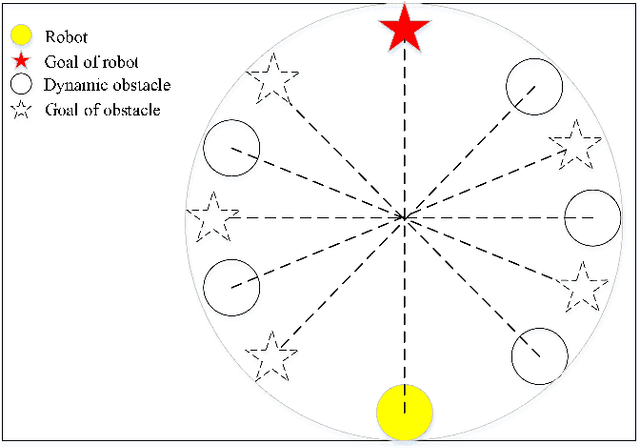

An advantage actor-critic algorithm for robotic motion planning in dense and dynamic scenarios

Feb 05, 2021

Abstract:Intelligent robots provide a new insight into efficiency improvement in industrial and service scenarios to replace human labor. However, these scenarios include dense and dynamic obstacles that make motion planning of robots challenging. Traditional algorithms like A* can plan collision-free trajectories in static environments, but their performance degrades and computational cost increases steeply in dense and dynamic scenarios. Optimal-value reinforcement learning (RL) algorithms can address these problems but suffer from slow and unstable network convergence. Policy gradient RL networks converge fast in Atari games, where actions are discrete and finite, but little work has been done to address problems where continuous actions and a large action space are required. In this paper, we modify the existing advantage actor-critic algorithm and adapt it to complex motion planning, so that optimal speeds and directions of the robot are generated. Experimental results demonstrate that our algorithm converges faster and more stably than optimal-value RL. It achieves a higher success rate in motion planning with less processing time for the robot to reach its goal.
