Andrés Bruhn

RobustSpring: Benchmarking Robustness to Image Corruptions for Optical Flow, Scene Flow and Stereo

May 14, 2025

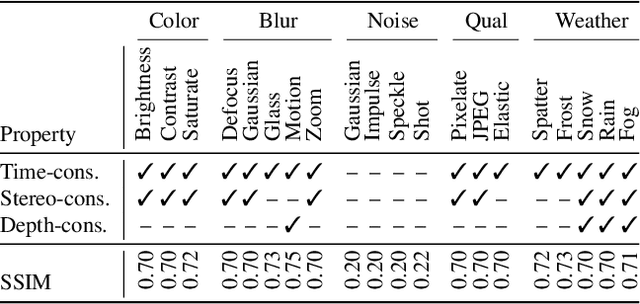

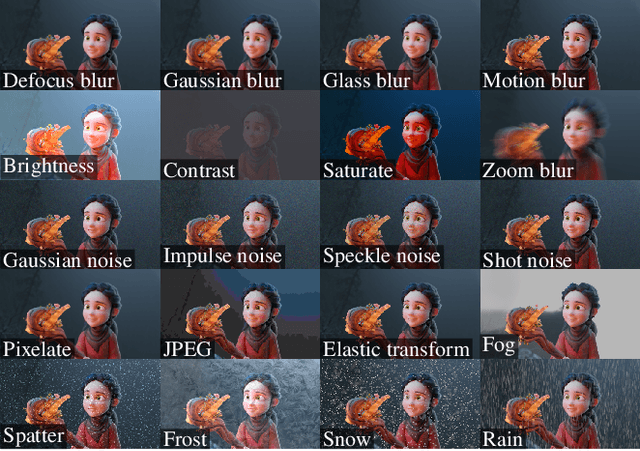

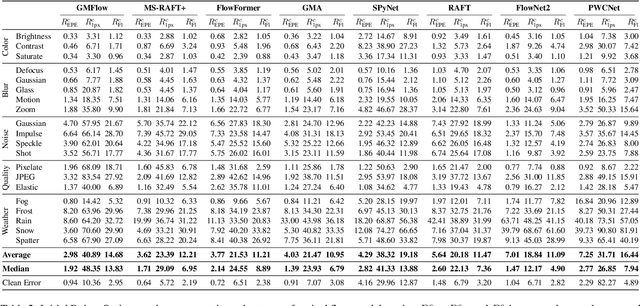

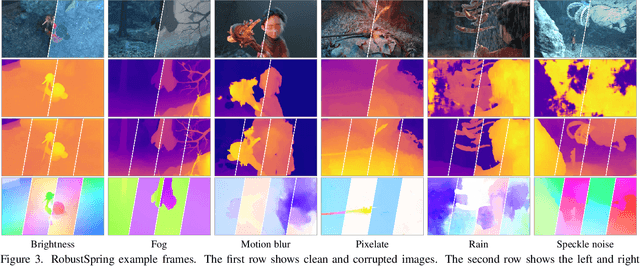

Abstract: Standard benchmarks for optical flow, scene flow, and stereo vision algorithms generally focus on model accuracy rather than robustness to image corruptions like noise or rain. Hence, the resilience of models to such real-world perturbations is largely unquantified. To address this, we present RobustSpring, a comprehensive dataset and benchmark for evaluating robustness to image corruptions for optical flow, scene flow, and stereo models. RobustSpring applies 20 different image corruptions, including noise, blur, color changes, quality degradations, and weather distortions, in a time-, stereo-, and depth-consistent manner to the high-resolution Spring dataset, creating a suite of 20,000 corrupted images that reflect challenging conditions. RobustSpring enables comparisons of model robustness via a new corruption robustness metric. Integration with the Spring benchmark enables public two-axis evaluations of both accuracy and robustness. We benchmark a curated selection of initial models, observing that accurate models are not necessarily robust and that robustness varies widely by corruption type. RobustSpring is a new computer vision benchmark that treats robustness as a first-class citizen to foster models that combine accuracy with resilience. It will be available at https://spring-benchmark.org.
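The abstract does not define the corruption robustness metric. As a rough illustration only, one way to quantify robustness without ground truth is to measure how far a model's prediction moves when its input is corrupted. The sketch below assumes flow fields are given as lists of `(u, v)` vectors; the function names, the corruption names, and the choice of mean endpoint distance are assumptions made for illustration, not RobustSpring's actual definition.

```python
import math


def mean_endpoint_distance(flow_a, flow_b):
    """Average Euclidean distance between corresponding flow vectors."""
    if len(flow_a) != len(flow_b):
        raise ValueError("flow fields must have the same number of pixels")
    total = sum(math.hypot(ua - ub, va - vb)
                for (ua, va), (ub, vb) in zip(flow_a, flow_b))
    return total / len(flow_a)


def robustness_report(clean_prediction, corrupted_predictions):
    """Compare the clean prediction with each corrupted prediction.

    corrupted_predictions maps a corruption name to the flow predicted on
    the corrupted input. Lower distances mean more stable predictions.
    """
    per_corruption = {
        name: mean_endpoint_distance(clean_prediction, flow)
        for name, flow in corrupted_predictions.items()
    }
    average = sum(per_corruption.values()) / len(per_corruption)
    return per_corruption, average


# Toy example: two pixels, two hypothetical corruptions.
clean = [(1.0, 0.0), (0.0, 0.0)]
corrupted = {
    "noise": [(1.0, 0.0), (3.0, 4.0)],  # second vector moves by 5 pixels
    "blur": [(1.0, 0.0), (0.0, 0.0)],   # prediction unchanged
}
per_corruption, average = robustness_report(clean, corrupted)
```

A measure of this kind is independent of accuracy: a model can be far from the ground truth and still score well if its output barely changes under corruption, which is why a two-axis evaluation reports both.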

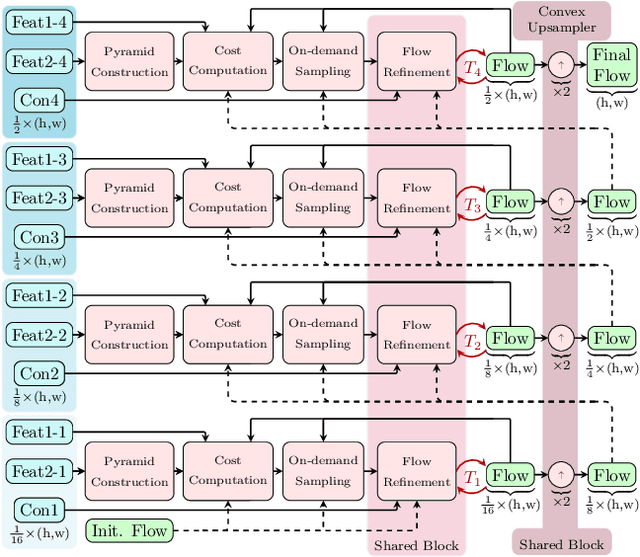

CCMR: High Resolution Optical Flow Estimation via Coarse-to-Fine Context-Guided Motion Reasoning

Nov 05, 2023

Abstract: Attention-based motion aggregation concepts have recently shown their usefulness in optical flow estimation, in particular when it comes to handling occluded regions. However, due to their complexity, such concepts have been mainly restricted to coarse-resolution single-scale approaches that fail to provide the detailed outcome of high-resolution multi-scale networks. In this paper, we hence propose CCMR: a high-resolution coarse-to-fine approach that brings attention-based motion grouping concepts to multi-scale optical flow estimation. CCMR relies on a hierarchical two-step attention-based context-motion grouping strategy that first computes global multi-scale context features and then uses them to guide the actual motion grouping. As we iterate both steps over all coarse-to-fine scales, we adapt cross covariance image transformers to allow for an efficient realization while maintaining scale-dependent properties. Experiments and ablations demonstrate that our efforts of combining multi-scale and attention-based concepts pay off. By providing highly detailed flow fields with strong improvements in both occluded and non-occluded regions, our CCMR approach not only outperforms both the corresponding single-scale attention-based and multi-scale attention-free baselines by up to 23.0% and 21.6%, respectively, it also achieves state-of-the-art results, ranking first on KITTI 2015 and second on MPI Sintel Clean and Final. Code and trained models are available at https://github.com/cv-stuttgart/CCMR.

Detection Defenses: An Empty Promise against Adversarial Patch Attacks on Optical Flow

Nov 02, 2023

Abstract: Adversarial patches undermine the reliability of optical flow predictions when placed in arbitrary scene locations. Therefore, they pose a realistic threat to real-world motion detection and its downstream applications. Potential remedies are defense strategies that detect and remove adversarial patches, but their influence on the underlying motion prediction has not been investigated. In this paper, we thoroughly examine the currently available detect-and-remove defenses ILP and LGS for a wide selection of state-of-the-art optical flow methods, and illuminate their side effects on the quality and robustness of the final flow predictions. In particular, we implement defense-aware attacks to investigate whether current defenses are able to withstand attacks that take the defense mechanism into account. Our experiments yield two surprising results: Detect-and-remove defenses not only lower the optical flow quality on benign scenes; in doing so, they also harm the robustness under patch attacks for all tested optical flow methods except FlowNetC. As currently employed detect-and-remove defenses fail to deliver the promised adversarial robustness for optical flow, they evoke a false sense of security. The code is available at https://github.com/cv-stuttgart/DetectionDefenses.

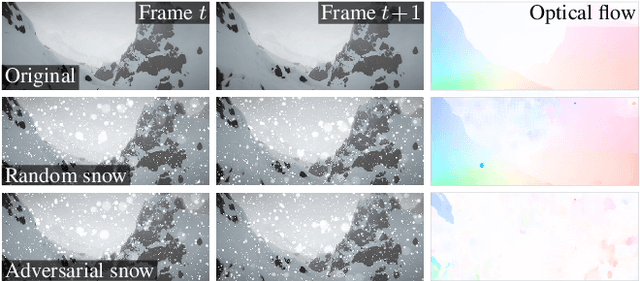

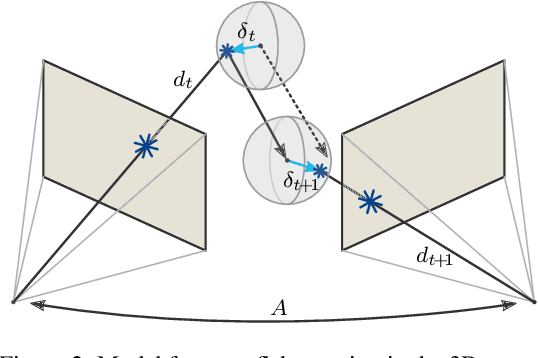

Distracting Downpour: Adversarial Weather Attacks for Motion Estimation

May 11, 2023

Abstract: Current adversarial attacks on motion estimation, or optical flow, optimize small per-pixel perturbations, which are unlikely to appear in the real world. In contrast, adverse weather conditions constitute a much more realistic threat scenario. Hence, in this work, we present a novel attack on motion estimation that exploits adversarially optimized particles to mimic weather effects like snowflakes, rain streaks or fog clouds. At the core of our attack framework is a differentiable particle rendering system that integrates particles (i) consistently over multiple time steps, (ii) into the 3D space, and (iii) with a photo-realistic appearance. Through optimization, we obtain adversarial weather that significantly impacts the motion estimation. Surprisingly, methods that previously showed good robustness towards small per-pixel perturbations are particularly vulnerable to adversarial weather. At the same time, augmenting the training with non-optimized weather increases a method's robustness towards weather effects and improves generalizability at almost no additional cost.

Spring: A High-Resolution High-Detail Dataset and Benchmark for Scene Flow, Optical Flow and Stereo

Mar 03, 2023

Abstract: While recent methods for motion and stereo estimation recover an unprecedented amount of details, such highly detailed structures are adequately reflected neither in the data of existing benchmarks nor in their evaluation methodology. Hence, we introduce Spring, a large, high-resolution, high-detail, computer-generated benchmark for scene flow, optical flow, and stereo. Based on rendered scenes from the open-source Blender movie "Spring", it provides photo-realistic HD datasets with state-of-the-art visual effects and ground truth training data. Furthermore, we provide a website to upload, analyze and compare results. Using a novel evaluation methodology based on a super-resolved UHD ground truth, our Spring benchmark can assess the quality of fine structures and provides further detailed performance statistics on different image regions. Regarding the number of ground truth frames, Spring is 60$\times$ larger than the only scene flow benchmark, KITTI 2015, and 15$\times$ larger than the well-established MPI Sintel optical flow benchmark. Initial results for recent methods on our benchmark show that estimating fine details is indeed challenging, as their accuracy leaves significant room for improvement. The Spring benchmark and the corresponding datasets are available at http://spring-benchmark.org.

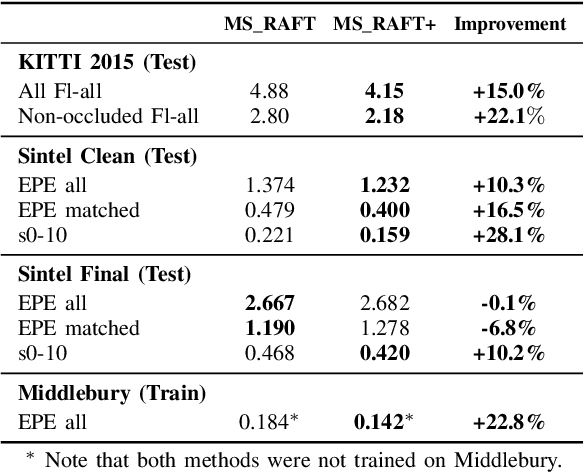

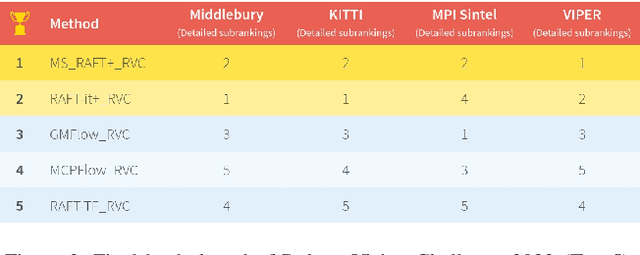

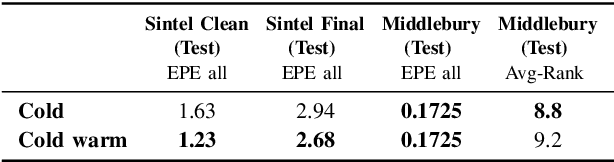

High Resolution Multi-Scale RAFT (Robust Vision Challenge 2022)

Oct 30, 2022

Abstract: In this report, we present our optical flow approach, MS-RAFT+, that won the Robust Vision Challenge 2022. It is based on the MS-RAFT method, which successfully integrates several multi-scale concepts into single-scale RAFT. Our approach extends this method by exploiting an additional finer scale for estimating the flow, which is made feasible by on-demand cost computation. This way, it can not only operate at half the original resolution, but also use MS-RAFT's shared convex upsampler to obtain full resolution flow. Moreover, our approach relies on an adjusted fine-tuning scheme during training. This in turn aims at improving the generalization across benchmarks. Among all participating methods in the Robust Vision Challenge, our approach ranks first on VIPER and second on KITTI, Sintel, and Middlebury, resulting in first place in the overall ranking.
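To illustrate the idea behind on-demand cost computation, the sketch below correlates one frame-1 feature with only those frame-2 features that lie in a small window around the current flow target, instead of storing correlations for all pixel pairs up front. It is a simplified pure-Python sketch under stated assumptions: the function names are hypothetical, feature maps are nested lists indexed as `[row][column][channel]`, the flow is rounded to integer positions rather than sampled bilinearly, and out-of-bounds positions get a cost of zero. It is not the MS-RAFT+ implementation.

```python
def dot(a, b):
    """Dot product of two feature vectors."""
    return sum(x * y for x, y in zip(a, b))


def on_demand_costs(feat1, feat2, x, y, flow, radius=1):
    """Correlate feat1[y][x] with frame-2 features near the flow target.

    Returns a dict mapping each window offset (dx, dy) to a matching cost.
    Only (2 * radius + 1) ** 2 values are computed per pixel and lookup.
    """
    height, width = len(feat2), len(feat2[0])
    # Centre of the lookup window: current position plus rounded flow.
    cx, cy = x + round(flow[0]), y + round(flow[1])
    costs = {}
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            tx, ty = cx + dx, cy + dy
            if 0 <= tx < width and 0 <= ty < height:
                costs[(dx, dy)] = dot(feat1[y][x], feat2[ty][tx])
            else:
                costs[(dx, dy)] = 0.0  # outside the image
    return costs


# Toy feature maps: 1 row, 3 columns, 2 channels.
feat1 = [[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]]
feat2 = [[[0.0, 2.0], [1.0, 0.0], [0.0, 1.0]]]
costs = on_demand_costs(feat1, feat2, x=0, y=0, flow=(1.0, 0.0), radius=1)
```

The trade-off is memory against recomputation: the window costs are recomputed whenever the flow estimate changes, but memory no longer grows with the square of the number of pixels, which matters at finer scales.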

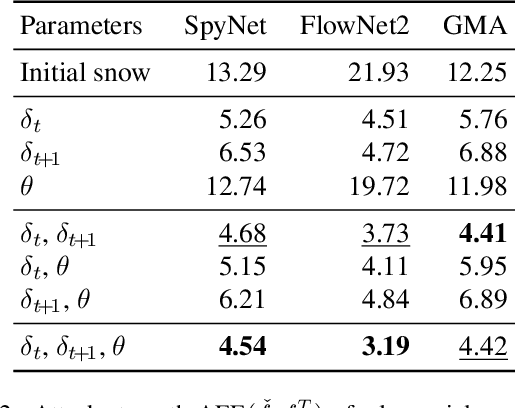

Attacking Motion Estimation with Adversarial Snow

Oct 20, 2022

Abstract: Current adversarial attacks for motion estimation (optical flow) optimize small per-pixel perturbations, which are unlikely to appear in the real world. In contrast, we exploit a real-world weather phenomenon for a novel attack with adversarially optimized snow. At the core of our attack is a differentiable renderer that consistently integrates photorealistic snowflakes with realistic motion into the 3D scene. Through optimization we obtain adversarial snow that significantly impacts the optical flow while being indistinguishable from ordinary snow. Surprisingly, the impact of our novel attack is largest on methods that previously showed a high robustness to small $L_p$ perturbations.

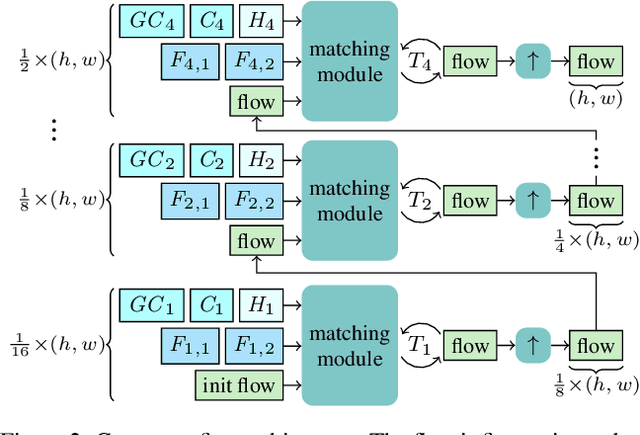

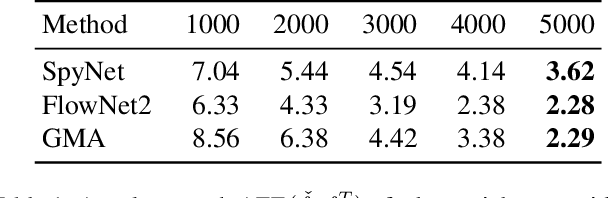

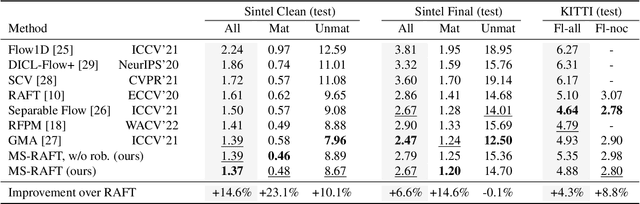

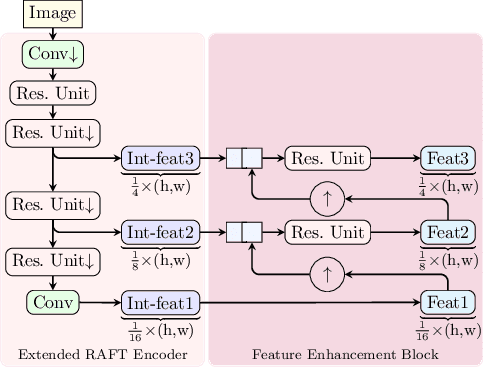

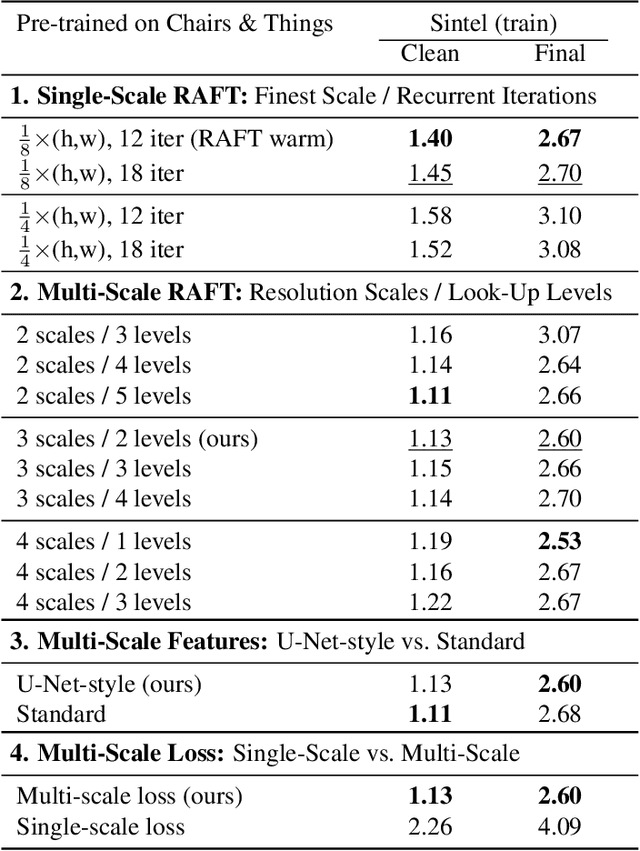

Multi-Scale RAFT: Combining Hierarchical Concepts for Learning-based Optical Flow Estimation

Jul 25, 2022

Abstract: Many classical and learning-based optical flow methods rely on hierarchical concepts to improve both accuracy and robustness. However, one of the currently most successful approaches, RAFT, hardly exploits such concepts. In this work, we show that multi-scale ideas are still valuable. More precisely, using RAFT as a baseline, we propose a novel multi-scale neural network that combines several hierarchical concepts within a single estimation framework. These concepts include (i) a partially shared coarse-to-fine architecture, (ii) multi-scale features, (iii) a hierarchical cost volume and (iv) a multi-scale multi-iteration loss. Experiments on MPI Sintel and KITTI clearly demonstrate the benefits of our approach. They show not only substantial improvements compared to RAFT, but also state-of-the-art results, in particular in non-occluded regions. Code will be available at https://github.com/cv-stuttgart/MS_RAFT.
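As a rough sketch of what a multi-scale multi-iteration loss can look like, the code below sums a weighted L1 error over every iteration at every scale. The exponentially increasing iteration weights follow the style of RAFT's sequence loss with `gamma = 0.8`; the per-scale weights, the function names, and the assumption that each scale comes with ground truth of matching size are illustrative choices, not details taken from the abstract.

```python
def mean_l1_error(prediction, ground_truth):
    """Mean L1 distance between predicted and true flow vectors."""
    total = sum(abs(pu - gu) + abs(pv - gv)
                for (pu, pv), (gu, gv) in zip(prediction, ground_truth))
    return total / len(ground_truth)


def multi_scale_sequence_loss(predictions, ground_truths, scale_weights,
                              gamma=0.8):
    """Sum weighted errors over all scales and all iterations.

    predictions[s][i] is the flow after iteration i at scale s, given as a
    list of (u, v) vectors. Later iterations receive larger weights.
    """
    loss = 0.0
    for scale_preds, gt, weight in zip(predictions, ground_truths,
                                       scale_weights):
        n = len(scale_preds)
        for i, pred in enumerate(scale_preds):
            loss += weight * gamma ** (n - 1 - i) * mean_l1_error(pred, gt)
    return loss


# Toy example: a coarse scale with one pixel and two iterations,
# and a fine scale with two pixels and one iteration.
predictions = [
    [[(0.0, 0.0)], [(1.0, 0.0)]],
    [[(0.0, 0.0), (2.0, 2.0)]],
]
ground_truths = [
    [(1.0, 1.0)],
    [(1.0, 1.0), (1.0, 1.0)],
]
loss = multi_scale_sequence_loss(predictions, ground_truths,
                                 scale_weights=[1.0, 0.5], gamma=0.5)
```

Supervising every scale and every iteration gives the coarse levels a direct training signal instead of relying only on gradients that flow back from the finest output.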

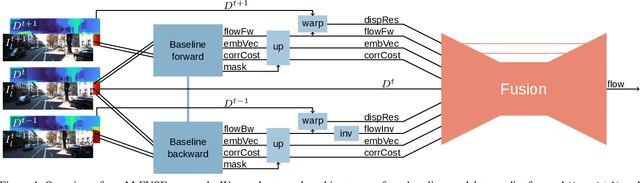

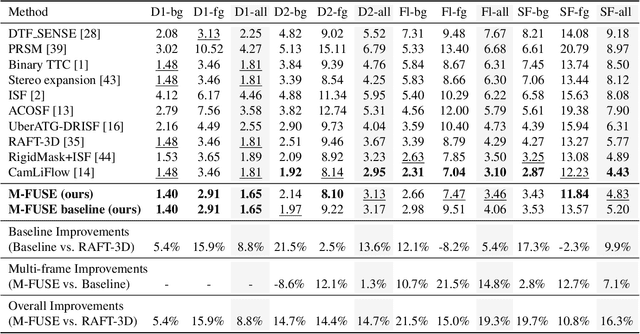

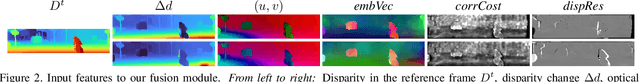

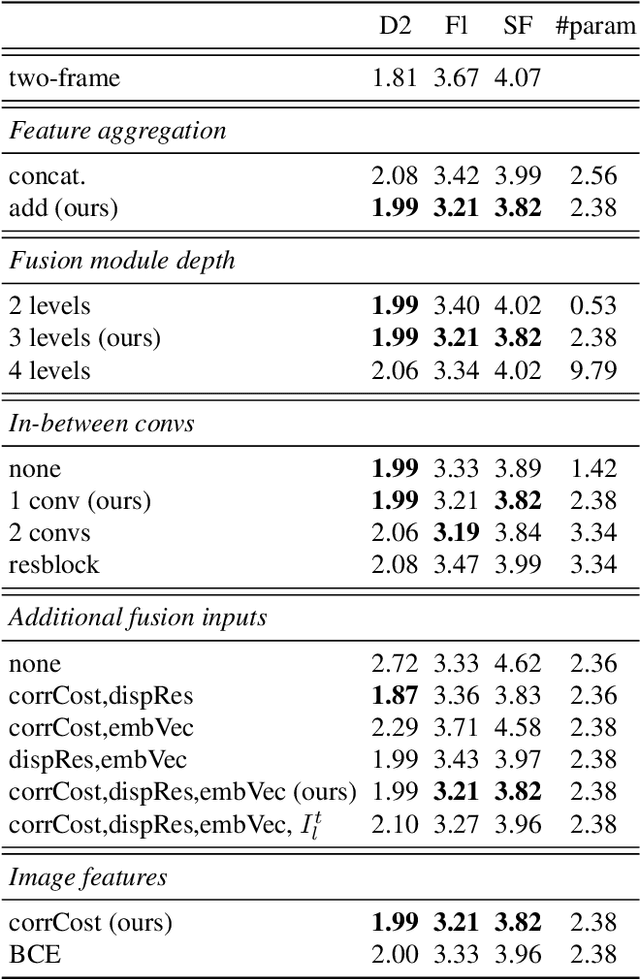

M-FUSE: Multi-frame Fusion for Scene Flow Estimation

Jul 12, 2022

Abstract: Recently, neural networks for scene flow estimation have shown impressive results on automotive data such as the KITTI benchmark. However, despite using sophisticated rigidity assumptions and parametrizations, such networks are typically limited to only two frame pairs, which does not allow them to exploit temporal information. In our paper, we address this shortcoming by proposing a novel multi-frame approach that considers an additional preceding stereo pair. To this end, we proceed in two steps: First, building upon the recent RAFT-3D approach, we develop an advanced two-frame baseline by incorporating an improved stereo method. Second, and even more importantly, exploiting the specific modeling concepts of RAFT-3D, we propose a U-Net-like architecture that fuses forward and backward flow estimates and hence allows temporal information to be integrated on demand. Experiments on the KITTI benchmark not only show that the advantages of the improved baseline and the temporal fusion approach complement each other, they also demonstrate that the computed scene flow is highly accurate. More precisely, our approach ranks second overall and first for the even more challenging foreground objects, in total outperforming the original RAFT-3D method by more than 16%. Code is available at https://github.com/cv-stuttgart/M-FUSE.

Blind Image Inpainting with Sparse Directional Filter Dictionaries for Lightweight CNNs

May 13, 2022

Abstract: Blind inpainting algorithms based on deep learning architectures have shown a remarkable performance in recent years, typically outperforming model-based methods both in terms of image quality and run time. However, neural network strategies typically lack a theoretical explanation, which contrasts with the well-understood theory underlying model-based methods. In this work, we leverage the advantages of both approaches by integrating theoretically founded concepts from transform domain methods and sparse approximations into a CNN-based approach for blind image inpainting. To this end, we present a novel strategy to learn convolutional kernels that applies a specifically designed filter dictionary whose elements are linearly combined with trainable weights. Numerical experiments demonstrate the competitiveness of this approach. Our results show not only an improved inpainting quality compared to conventional CNNs but also significantly faster network convergence within a lightweight network design.
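The core idea, building a convolution kernel as a weighted sum of fixed dictionary filters, can be sketched in a few lines. The two 3x3 difference filters below are hypothetical stand-ins for the paper's sparse directional dictionary, and `combine_filters` is an illustrative name; in a network, only the weights would be trained while the dictionary stays fixed.

```python
def combine_filters(dictionary, weights):
    """Return the kernel sum_i weights[i] * dictionary[i]."""
    if len(dictionary) != len(weights):
        raise ValueError("need exactly one weight per dictionary filter")
    rows, cols = len(dictionary[0]), len(dictionary[0][0])
    kernel = [[0.0] * cols for _ in range(rows)]
    for filt, weight in zip(dictionary, weights):
        for r in range(rows):
            for c in range(cols):
                kernel[r][c] += weight * filt[r][c]
    return kernel


# Hypothetical dictionary: horizontal and vertical central differences.
HORIZONTAL = [[0.0, 0.0, 0.0],
              [-1.0, 0.0, 1.0],
              [0.0, 0.0, 0.0]]
VERTICAL = [[0.0, -1.0, 0.0],
            [0.0, 0.0, 0.0],
            [0.0, 1.0, 0.0]]

# The weights play the role of the trainable parameters.
kernel = combine_filters([HORIZONTAL, VERTICAL], [0.5, 2.0])
```

With this parametrization, a kernel has as many free parameters as there are dictionary elements rather than one per kernel entry, which is one plausible reason such a design can stay lightweight.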
