Zhicheng Cao

Composite Fixed-Length Ordered Features for Palmprint Template Protection with Diminished Performance Loss

Nov 09, 2022

Abstract:Palmprint recognition has become increasingly popular due to its advantages over other biometric modalities such as fingerprint: the palm is larger in area, richer in information, and can be captured at a distance. However, palmprint privacy and security (especially palmprint template protection) remain under-studied. Most of the few existing works use only the directional and orientation features of the palmprint with transformation processing, yielding unsatisfactory protection and identification performance. This paper therefore proposes a palmprint template protection-oriented operator that has a fixed length and is ordered in nature, obtained by fusing point features and orientation features. First, double orientations are extracted with higher accuracy based on MFRAT. Then SURF key points are extracted and converted into fixed-length, ordered features. Finally, composite features that fuse the double orientations and SURF points are transformed using the irreversible IOM transformation to generate the revocable palmprint template. Experiments show that the EERs after irreversible transformation on the PolyU and CASIA databases are 0.17% and 0.19%, respectively, with absolute precision losses of 0.08% and 0.07%, respectively, which demonstrates the advantage of our method.
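
The last step of this pipeline can be illustrated with a minimal sketch, assuming that "IOM" refers to Index-of-Max hashing in its Gaussian random projection form. The abstract does not give the paper's parameters or feature layout, so the function names (`iom_hash`, `match_score`), the seed-as-user-key convention, and all sizes below are hypothetical. The sketch only shows why a fixed-length, ordered feature vector is a prerequisite: each hash value is the index of the largest of several random projections of the same vector.

```python
import random


def iom_hash(features, num_hashes, num_projections, seed):
    """Index-of-Max style hashing (illustrative sketch, not the paper's code).

    For each of `num_hashes` output positions, project the fixed-length
    feature vector onto `num_projections` random Gaussian directions and
    keep only the index of the largest projection. Changing `seed`
    (a hypothetical stand-in for a user-specific key) yields a new template.
    """
    rng = random.Random(seed)
    codes = []
    for _ in range(num_hashes):
        best_index, best_value = 0, float("-inf")
        for j in range(num_projections):
            # One random Gaussian direction with the same length as the features.
            direction = [rng.gauss(0.0, 1.0) for _ in features]
            value = sum(w * x for w, x in zip(direction, features))
            if value > best_value:
                best_index, best_value = j, value
        codes.append(best_index)
    return codes


def match_score(code_a, code_b):
    """Fraction of positions at which two hashed templates agree."""
    return sum(1 for a, b in zip(code_a, code_b) if a == b) / len(code_a)
```

Because only the rank order of the projections is stored, the projected values themselves cannot be read back from the template, and a compromised template can be replaced by re-hashing with a different seed.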

A Demographic Attribute Guided Approach to Age Estimation

May 20, 2022

Abstract:Face-based age estimation has attracted enormous attention due to its wide applications in public security surveillance, human-computer interaction, etc. With the vigorous development of deep learning, age estimation based on deep neural networks has become the mainstream practice. However, finding a problem paradigm better suited to the characteristics of age change, designing the corresponding loss function, and designing a more effective feature extraction module still need to be studied. Moreover, facial aging is also related to demographic attributes such as ethnicity and gender, and the dynamics of different age groups differ considerably. This problem has not yet received enough attention, and how to use demographic attribute information to improve the performance of age estimation remains to be explored. In light of these issues, this research makes full use of auxiliary face attribute information and proposes a new age estimation approach with an attribute guidance module. We first design a multi-scale attention residual convolution unit (MARCU) to extract robust facial features, rather than simply using standard feature modules such as VGG and ResNet. Then, after being specially processed through fully connected (FC) layers, the facial demographic attributes are weight-summed by a 1*1 convolutional layer and eventually merged with the age features by a global FC layer. Lastly, we propose a new error compression ranking (ECR) loss to help the age regression value converge. Experimental results on three public datasets, UTKFace, LAP2016 and Morph, show that our proposed approach achieves superior performance compared to other state-of-the-art methods.

A Bidirectional Conversion Network for Cross-Spectral Face Recognition

May 03, 2022

Abstract:Face recognition in the infrared (IR) band has become an important supplement to visible light face recognition due to its independence from background light, its strong penetration, and its ability to image under harsh conditions such as nighttime, rain and fog. However, cross-spectral face recognition (i.e., VIS to IR) is very challenging due to the dramatic difference between visible light and IR imagery as well as the lack of paired training data. This paper proposes a framework of bidirectional cross-spectral conversion (BCSC-GAN) between the heterogeneous face images, and designs an adaptive weighted fusion mechanism based on information fusion theory. The network reduces the cross-spectral recognition problem to an intra-spectral problem, and improves performance by fusing bidirectional information. Specifically, a face identity retaining module (IRM) is introduced to preserve identity features, and a new composite loss function is designed to overcome the modal differences caused by different spectral characteristics. Experiments were conducted on two datasets, TINDERS and CASIA, comparing the performance metrics of FID, recognition rate, equal error rate and normalized distance. Results show that our proposed network is superior to other state-of-the-art methods. Additionally, the proposed Self Adaptive Weighted Fusion (SAWF) rule yields better recognition results than the unfused case and other commonly used traditional fusion rules, which further justifies the effectiveness and superiority of the proposed bidirectional conversion approach.

HyperFaceNet: A Hyperspectral Face Recognition Method Based on Deep Fusion

Sep 12, 2020

Abstract:Face recognition has already been well studied under visible light and the infrared, in both intra-spectral and cross-spectral cases. However, how to fuse different light bands, i.e., hyperspectral face recognition, is still an open research problem, even though it offers the advantages of richer information retention and all-weather functionality over single-band face recognition. Among the very few works on hyperspectral face recognition, traditional non-deep learning techniques are largely used. Thus, in this paper we bring deep learning into the topic of hyperspectral face recognition, and propose a new fusion model (termed HyperFaceNet) designed especially for hyperspectral faces. The proposed fusion model is characterized by residual dense learning, a feedback-style encoder and a recognition-oriented loss function. In the experiments, our method achieves higher recognition rates than face recognition using either visible light or the infrared alone. Moreover, our fusion model is shown to be superior to other general-purpose image fusion methods, including state-of-the-art ones, in terms of both image quality and recognition performance.

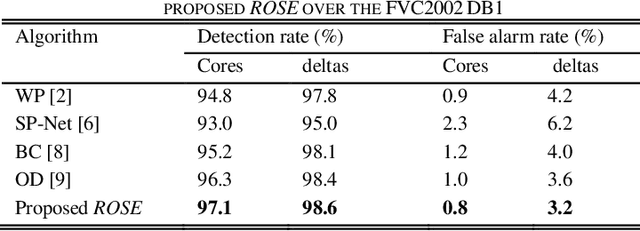

ROSE: Real One-Stage Effort to Detect the Fingerprint Singular Point Based on Multi-scale Spatial Attention

Mar 09, 2020

Abstract:Detecting the singular point accurately and efficiently is one of the most important tasks in fingerprint recognition. In recent years, deep learning has gradually been applied to fingerprint singular point detection. However, current deep learning-based singular point detection methods are either two-stage or multi-stage, which makes them time-consuming. More importantly, their detection accuracy is still unsatisfactory, especially for low-quality fingerprints. In this paper, we make a Real One-Stage Effort to detect fingerprint singular points more accurately and efficiently, and therefore name the proposed algorithm ROSE for short. In ROSE, multi-scale spatial attention, a Gaussian heatmap and a variant of focal loss are applied together to achieve a higher detection rate. Experimental results on the datasets FVC2002 DB1 and NIST SD4 show that ROSE outperforms the state-of-the-art algorithms in terms of detection rate, false alarm rate and detection speed.
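
As a rough illustration of the Gaussian heatmap component, the sketch below builds a heatmap target from point annotations and reads the point back from its peak. The abstract does not specify the heatmap resolution, the standard deviation, or how the ROSE network consumes the map, so `gaussian_heatmap`, `peak`, and the default `sigma` are hypothetical choices for illustration only.

```python
import math


def gaussian_heatmap(height, width, points, sigma=2.0):
    """Build a heatmap with a 2-D Gaussian bump at each (x, y) point.

    Overlapping bumps are merged with an element-wise maximum, so every
    annotated point keeps a peak value of exactly 1.0.
    """
    heatmap = [[0.0] * width for _ in range(height)]
    for px, py in points:
        for y in range(height):
            for x in range(width):
                squared_distance = (x - px) ** 2 + (y - py) ** 2
                value = math.exp(-squared_distance / (2.0 * sigma ** 2))
                if value > heatmap[y][x]:
                    heatmap[y][x] = value
    return heatmap


def peak(heatmap):
    """Return the (x, y) location of the largest heatmap value."""
    _, x, y = max(
        (value, x, y)
        for y, row in enumerate(heatmap)
        for x, value in enumerate(row)
    )
    return x, y
```

For example, `peak(gaussian_heatmap(8, 10, [(3, 5)]))` recovers `(3, 5)`. Regressing a smooth map of this kind instead of raw coordinates is one common way to let a single-stage network localize points directly.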

Singular points detection with semantic segmentation networks

Nov 04, 2019

Abstract:Singular point detection is one of the most classical and important problems in the field of fingerprint recognition. However, current detection rates for singular points are still unsatisfactory, especially for low-quality fingerprints. Compared with traditional image processing-based detection methods, methods based on deep learning need only the original fingerprint image and not the fingerprint orientation field. In this paper, unlike other deep learning-based detection methods, we treat singular point detection as a semantic segmentation problem and use only a small amount of data for training. Furthermore, we propose a new convolutional neural network called SinNet to extract the singular regions of interest, and then use a blob detection method called SimpleBlobDetector to locate the singular points. The experiments are carried out on the test dataset from SPD2010, and the proposed method performs much better than the other advanced methods in most aspects. Compared with the state-of-the-art algorithms in SPD2010, our method achieves an increase of 11% in the percentage of correctly detected fingerprints and an increase of more than 18% in the core detection rate.
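
The second stage, turning segmented regions into point locations, can be sketched as follows. This is a simplified stand-in and not OpenCV's SimpleBlobDetector, which additionally thresholds at several levels and filters blobs by properties such as area and circularity. Here a binary mask (a hypothetical segmentation output) is split into 4-connected regions and each region is reduced to its centroid; the function name `blob_centroids` is illustrative.

```python
from collections import deque


def blob_centroids(mask):
    """Return the (x, y) centroid of every 4-connected region of truthy pixels.

    `mask` is a list of rows; regions are reported in row-major scan order.
    """
    height = len(mask)
    width = len(mask[0]) if mask else 0
    seen = [[False] * width for _ in range(height)]
    centroids = []
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Breadth-first flood fill collects all pixels of this region.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            xs, ys = [], []
            while queue:
                x, y = queue.popleft()
                xs.append(x)
                ys.append(y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            centroids.append((sum(xs) / len(xs), sum(ys) / len(ys)))
    return centroids
```

Splitting the task this way means the network only has to produce a coarse region map, while the final point coordinates come from a cheap geometric post-processing step.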