He Chen

A Training-Free Guess What Vision Language Model from Snippets to Open-Vocabulary Object Detection

Jan 21, 2026

Abstract:Open-Vocabulary Object Detection (OVOD) aims to develop the capability to detect anything. Although myriad large-scale pre-training efforts have built versatile foundation models that exhibit impressive zero-shot capabilities to facilitate OVOD, the need to build a universal understanding of arbitrary objects on top of already pretrained foundation models is usually overlooked. Therefore, in this paper, a training-free Guess What Vision Language Model, called GW-VLM, is proposed to form a universal understanding paradigm based on our carefully designed Multi-Scale Visual Language Searching (MS-VLS) coupled with Contextual Concept Prompt (CCP) for OVOD. This approach engages a pre-trained Vision Language Model (VLM) and a Large Language Model (LLM) in a game of "guess what". Here, MS-VLS leverages multi-scale visual-language soft-alignment for the VLM to generate snippets from the results of class-agnostic object detection, while CCP forms the concept of flow with reference to MS-VLS and then lets the LLM understand the snippets for OVOD. Finally, extensive experiments are carried out on natural and remote sensing datasets, including COCO val, Pascal VOC, DIOR, and NWPU-10, and the results indicate that the proposed GW-VLM achieves superior OVOD performance compared to state-of-the-art methods without any training step.

MI-PRUN: Optimize Large Language Model Pruning via Mutual Information

Jan 12, 2026

Abstract:Large Language Models (LLMs) have become indispensable across various domains, but this comes at the cost of substantial computational and memory resources. Model pruning addresses this by removing redundant components from models. In particular, block pruning can achieve significant compression and inference acceleration. However, existing block pruning methods are often unstable and struggle to attain globally optimal solutions. In this paper, we propose a mutual information-based pruning method, MI-PRUN, for LLMs. Specifically, we leverage mutual information to identify redundant blocks by evaluating transitions in hidden states. Additionally, we incorporate the Data Processing Inequality (DPI) to reveal the relationship between the importance of entire contiguous blocks and that of individual blocks. Moreover, we develop the Fast-Block-Select algorithm, which iteratively updates block combinations to achieve a globally optimal solution while significantly improving efficiency. Extensive experiments across various models and datasets demonstrate the stability and effectiveness of our method.
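
For readers unfamiliar with the Data Processing Inequality mentioned in this abstract, its standard statement is reproduced here. The abstract does not say how MI-PRUN applies it, so the hidden-state notation $H_i$ (the hidden state after block $i$) is an assumption made only for illustration. For any Markov chain $X \to Y \to Z$:

$$
I(X; Z) \le I(X; Y), \qquad I(X; Z) \le I(Y; Z).
$$

If successive hidden states are treated as a chain $H_i \to H_k \to H_j$ with $i < k < j$, this gives

$$
I(H_i; H_j) \le \min\bigl( I(H_i; H_k),\; I(H_k; H_j) \bigr),
$$

so the information shared across a contiguous span of blocks is bounded by the information shared across any sub-span inside it.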

Advancing Embodied Agent Security: From Safety Benchmarks to Input Moderation

Apr 22, 2025

Abstract:Embodied agents exhibit immense potential across a multitude of domains, making the assurance of their behavioral safety a fundamental prerequisite for their widespread deployment. However, existing research predominantly concentrates on the security of general large language models, lacking specialized methodologies for establishing safety benchmarks and input moderation tailored to embodied agents. To bridge this gap, this paper introduces a novel input moderation framework, meticulously designed to safeguard embodied agents. This framework encompasses the entire pipeline, including taxonomy definition, dataset curation, moderator architecture, model training, and rigorous evaluation. Notably, we introduce EAsafetyBench, a meticulously crafted safety benchmark engineered to facilitate both the training and stringent assessment of moderators specifically designed for embodied agents. Furthermore, we propose Pinpoint, an innovative prompt-decoupled input moderation scheme that harnesses a masked attention mechanism to effectively isolate and mitigate the influence of functional prompts on moderation tasks. Extensive experiments conducted on diverse benchmark datasets and models validate the feasibility and efficacy of the proposed approach. The results demonstrate that our methodologies achieve an impressive average detection accuracy of 94.58%, surpassing the performance of existing state-of-the-art techniques, alongside an exceptional moderation processing time of merely 0.002 seconds per instance.

EarthGPT-X: Enabling MLLMs to Flexibly and Comprehensively Understand Multi-Source Remote Sensing Imagery

Apr 17, 2025

Abstract:Recent advances in the visual-language area have developed natural multi-modal large language models (MLLMs) for spatial reasoning through visual prompting. However, because remote sensing (RS) imagery contains abundant geospatial information that differs from natural images, it is challenging to effectively adapt natural spatial models to the RS domain. Moreover, current RS MLLMs are limited to overly narrow interpretation levels and interaction modes, hindering their applicability in real-world scenarios. To address these challenges, a spatial MLLM named EarthGPT-X is proposed, enabling a comprehensive understanding of multi-source RS imagery, such as optical, synthetic aperture radar (SAR), and infrared. EarthGPT-X offers zoom-in and zoom-out insight, and possesses flexible multi-grained interactive abilities. Moreover, EarthGPT-X unifies two types of critical spatial tasks (i.e., referring and grounding) into a visual prompting framework. To achieve these versatile capabilities, several key strategies are developed. The first is the multi-modal content integration method, which enhances the interplay between images, visual prompts, and text instructions. Subsequently, a cross-domain one-stage fusion training strategy is proposed, utilizing the large language model (LLM) as a unified interface for multi-source multi-task learning. Furthermore, by incorporating a pixel perception module, the referring and grounding tasks are seamlessly unified within a single framework. In addition, the experiments conducted demonstrate the superiority of the proposed EarthGPT-X in multi-grained tasks and its impressive flexibility in multi-modal interaction, revealing significant advancements of MLLMs in the RS field.

FuseGrasp: Radar-Camera Fusion for Robotic Grasping of Transparent Objects

Feb 28, 2025

Abstract:Transparent objects are prevalent in everyday environments, but their distinct physical properties pose significant challenges for camera-guided robotic arms. Current research is mainly dependent on camera-only approaches, which often falter in suboptimal conditions, such as low-light environments. In response to this challenge, we present FuseGrasp, the first radar-camera fusion system tailored to enhance the manipulation of transparent objects. FuseGrasp exploits the weak penetrating property of millimeter-wave (mmWave) signals, which causes transparent materials to appear opaque, and combines it with the precise motion control of a robotic arm to acquire high-quality mmWave radar images of transparent objects. The system employs a carefully designed deep neural network to fuse radar and camera imagery, thereby improving depth completion and elevating the success rate of object grasping. Nevertheless, training FuseGrasp effectively is non-trivial, due to limited radar image datasets for transparent objects. We address this issue by utilizing a large RGB-D dataset and propose an effective two-stage training approach: we first pre-train FuseGrasp on a large public RGB-D dataset of transparent objects, then fine-tune it on a self-built small RGB-D-Radar dataset. Furthermore, as a byproduct, FuseGrasp can determine the composition of transparent objects, such as glass or plastic, leveraging the material identification capability of mmWave radar. This identification result helps the robotic arm modulate its grip force appropriately. Extensive testing reveals that FuseGrasp significantly improves the accuracy of depth reconstruction and material identification for transparent objects. Moreover, real-world robotic trials have confirmed that FuseGrasp markedly enhances the handling of transparent items. A video demonstration of FuseGrasp is available at https://youtu.be/MWDqv0sRSok.

DeepCRF: Deep Learning-Enhanced CSI-Based RF Fingerprinting for Channel-Resilient WiFi Device Identification

Nov 11, 2024

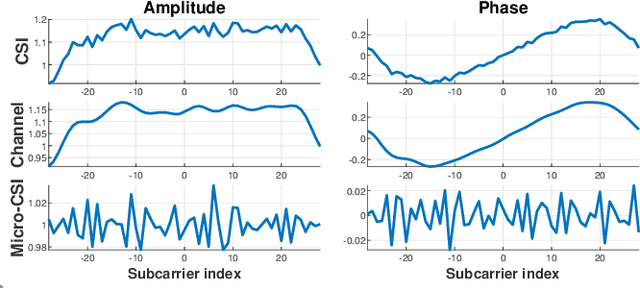

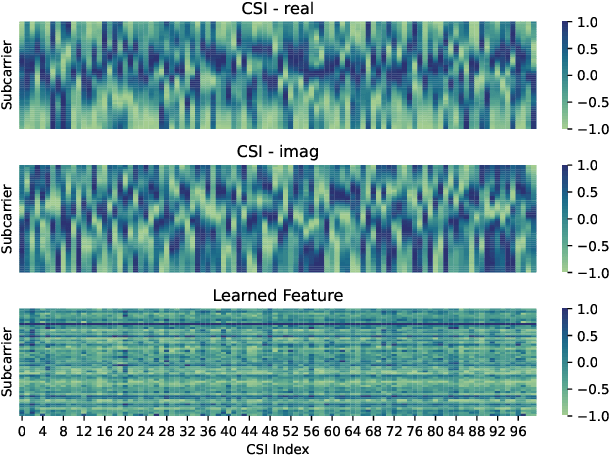

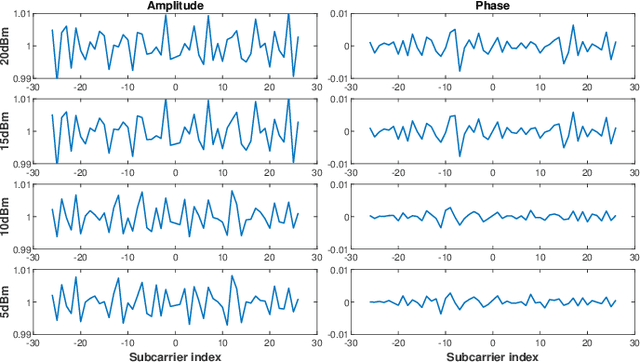

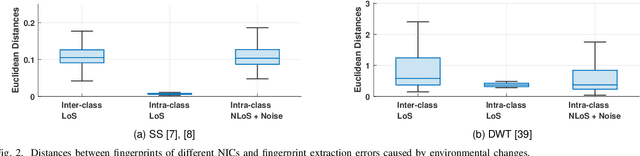

Abstract:This paper presents DeepCRF, a new framework that harnesses deep learning to extract subtle micro-signals from channel state information (CSI) measurements, enabling robust and resilient radio-frequency fingerprinting (RFF) of commercial-off-the-shelf (COTS) WiFi devices across diverse channel conditions. Building on our previous research, which demonstrated that micro-signals in CSI, termed micro-CSI, most likely originate from RF circuitry imperfections and can serve as unique RF fingerprints, we develop a new approach to overcome the limitations of our prior signal space-based method. While the signal space-based method is effective in strong line-of-sight (LoS) conditions, we show that it struggles with the complexities of non-line-of-sight (NLoS) scenarios, compromising the robustness of CSI-based RFF. To address this challenge, DeepCRF incorporates a carefully trained convolutional neural network (CNN) with model-inspired data augmentation, supervised contrastive learning, and decision fusion techniques, enhancing its generalization capabilities across unseen channel conditions and resilience against noise. Our evaluations demonstrate that DeepCRF significantly improves device identification accuracy across diverse channels, outperforming both the signal space-based baseline and state-of-the-art neural network-based benchmarks. Notably, it achieves an average identification accuracy of 99.53% among 19 COTS WiFi network interface cards in real-world unseen scenarios using 4 CSI measurements per identification procedure.

Spurious Stationarity and Hardness Results for Mirror Descent

Apr 11, 2024

Abstract:Despite the considerable success of Bregman proximal-type algorithms, such as mirror descent, in machine learning, a critical question remains: Can existing stationarity measures, often based on Bregman divergence, reliably distinguish between stationary and non-stationary points? In this paper, we present a groundbreaking finding: All existing stationarity measures necessarily imply the existence of spurious stationary points. We further establish an algorithm-independent hardness result: Bregman proximal-type algorithms are unable to escape from a spurious stationary point in finite steps when the initial point is unfavorable, even for convex problems. Our hardness result points out the inherent distinction between Euclidean and Bregman geometries, and introduces fundamental theoretical and numerical challenges to both the machine learning and optimization communities.
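
As background for this abstract, here is a minimal sketch of one textbook instance of mirror descent: the entropic (exponentiated-gradient) update on the probability simplex. It is not the paper's construction. The function name `mirror_descent_simplex` and the linear objective in the usage note are hypothetical choices made only for illustration.

```python
import math


def mirror_descent_simplex(grad, x0, step, iters):
    """Entropic mirror descent on the probability simplex.

    With the negative-entropy mirror map, each step is a multiplicative
    update followed by renormalization:
        x_i <- x_i * exp(-step * g_i) / sum_j x_j * exp(-step * g_j)
    """
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        # Multiplicative update in the dual (log) domain.
        w = [xi * math.exp(-step * gi) for xi, gi in zip(x, g)]
        total = sum(w)
        # Bregman projection onto the simplex reduces to normalization.
        x = [wi / total for wi in w]
    return x
```

For a linear objective with cost vector `[3.0, 1.0, 2.0]` (so `grad` always returns that vector) and a uniform start, the iterates concentrate on the second coordinate, which has the lowest cost. Because the update is multiplicative, a coordinate that starts at exactly zero stays at zero: starting from `[1.0, 0.0, 0.0]`, the iterate never moves, even though that point does not minimize the objective. This is only an illustration of how the starting point can matter for such updates; the abstract does not state whether it matches the paper's definition of a spurious stationary point.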

Towards Channel-Resilient CSI-Based RF Fingerprinting using Deep Learning

Mar 23, 2024

Abstract:This work introduces DeepCRF, a deep learning framework designed for channel state information-based radio frequency fingerprinting (CSI-RFF). The considered CSI-RFF is built on micro-CSI, a recently discovered radio-frequency (RF) fingerprint that manifests as micro-signals appearing on the channel state information (CSI) curves of commercial WiFi devices. Micro-CSI facilitates CSI-RFF that is more streamlined and easier to implement than existing schemes that rely on raw I/Q samples. The primary challenge resides in the precise extraction of micro-CSI from the inherently fluctuating CSI measurements, a process critical for reliable RFF. The construction of a framework that is resilient to channel variability is essential for the practical deployment of CSI-RFF techniques. DeepCRF addresses this challenge with a thoughtfully trained convolutional neural network (CNN). This network's performance is significantly enhanced by employing effective and strategic data augmentation techniques, which bolster its ability to generalize to novel, unseen channel conditions. Furthermore, DeepCRF incorporates supervised contrastive learning to enhance its robustness against noise. Our evaluations demonstrate that DeepCRF significantly enhances the accuracy of device identification across previously unencountered channels. It outperforms both the conventional model-based methods and standard CNNs that lack our specialized training and enhancement strategies.

Physical-Layer Authentication of Commodity Wi-Fi Devices via Micro-Signals on CSI Curves

Aug 01, 2023

Abstract:This paper presents a new radiometric fingerprint that is revealed by micro-signals in the channel state information (CSI) curves extracted from commodity Wi-Fi devices. We refer to this new fingerprint as "micro-CSI". Our experiments show that micro-CSI is likely to be caused by imperfections in the radio-frequency circuitry and is present in Wi-Fi 4/5/6 network interface cards (NICs). We conducted further experiments to determine the most effective CSI collection configuration to stabilize micro-CSI. To extract micro-CSI from varying CSI curves, we developed a signal space-based extraction algorithm that effectively separates distortions caused by wireless channels and hardware imperfections under line-of-sight (LoS) scenarios. Finally, we implemented a micro-CSI-based device authentication algorithm that uses the k-Nearest Neighbors (KNN) method to identify 11 COTS Wi-Fi NICs from the same manufacturer in typical indoor environments. Our experimental results demonstrate that the micro-CSI-based authentication algorithm can achieve an average attack detection rate of over 99% with a false alarm rate of 0%.
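
The abstract names k-Nearest Neighbors as the classifier but does not give the features, distance metric, or decision rule. The sketch below is therefore a hypothetical illustration of how a k-NN vote could back a claimed-identity check. The function `knn_authenticate`, the Euclidean distance, and the toy two-dimensional features are all assumptions, not the paper's method.

```python
import math
from collections import Counter


def knn_authenticate(sample, enrolled, claimed_id, k=3):
    """Accept a claimed device identity if a k-NN vote agrees with it.

    sample:     feature vector extracted from a new measurement (assumed)
    enrolled:   list of (feature_vector, device_id) reference pairs
    claimed_id: identity the transmitting device claims to have
    """
    # Rank enrolled fingerprints by Euclidean distance to the new sample.
    nearest = sorted(enrolled, key=lambda e: math.dist(sample, e[0]))[:k]
    # Majority vote over the device labels of the k closest references.
    votes = Counter(device for _, device in nearest)
    predicted = votes.most_common(1)[0][0]
    # A mismatch between predicted and claimed identity is flagged as an attack.
    return predicted == claimed_id
```

With a few enrolled vectors clustered around two devices, a sample near the first cluster is accepted when it claims the first identity and rejected when it claims the second.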

Pulse Shape-Aided Multipath Delay Estimation for Fine-Grained WiFi Sensing

Jun 27, 2023

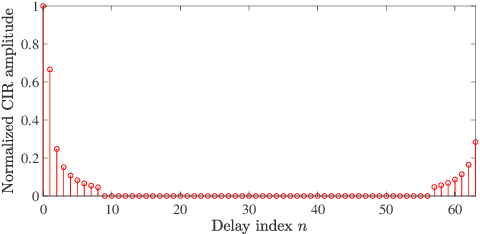

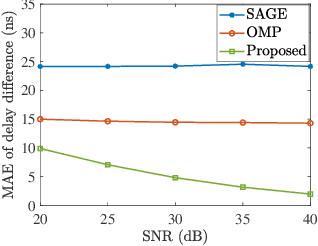

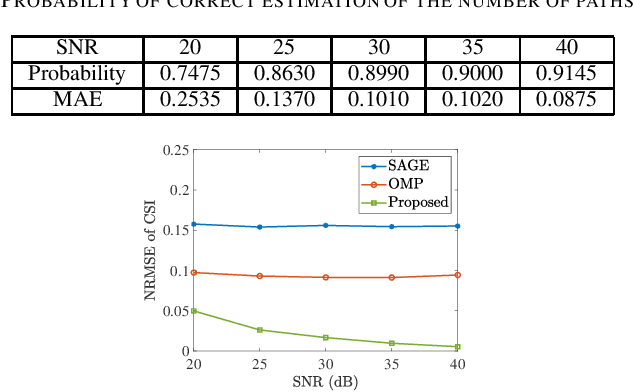

Abstract:Due to the finite bandwidth of practical wireless systems, one multipath component can manifest itself as a discrete pulse consisting of multiple taps in the digital delay domain. This effect is called channel leakage, which complicates the multipath delay estimation problem. In this paper, we develop a new algorithm to estimate multipath delays of leaked channels by leveraging the knowledge of pulse-shaping functions, which can be used to support fine-grained WiFi sensing applications. Specifically, we express the channel impulse response (CIR) as a linear combination of overcomplete basis vectors corresponding to different delays. Considering the limited number of paths in physical environments, we formulate the multipath delay estimation as a sparse recovery problem. We then propose a sparse Bayesian learning (SBL) method to estimate the sparse vector and determine the number of physical paths and their associated delay parameters from the positions of the nonzero entries in the sparse vector. Simulation results show that our algorithm can accurately determine the number of paths, and achieve superior accuracy in path delay estimation and channel reconstruction compared to two benchmarking schemes.
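
The idea of expressing a leaked channel as a sparse combination of delayed pulse shapes can be sketched as follows. This example swaps in plain matching pursuit for the paper's sparse Bayesian learning method, uses a real-valued sinc pulse in place of the actual pulse-shaping function, and measures delays in units of one tap. All of these choices, along with the names `atom` and `matching_pursuit`, are assumptions made only to keep the sketch short.

```python
import math


def sinc(x):
    """Normalized sinc, standing in for the real pulse-shaping function."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def atom(delay, n_taps):
    """Unit-norm basis vector: the pulse sampled on the tap grid at one delay."""
    v = [sinc(n - delay) for n in range(n_taps)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def matching_pursuit(cir, delays, n_paths):
    """Greedy sparse recovery over an overcomplete dictionary of delays.

    Returns a list of (delay, coefficient) pairs, one per selected path.
    """
    atoms = [atom(d, len(cir)) for d in delays]
    residual = list(cir)
    picked = []
    for _ in range(n_paths):
        # Correlate the residual with every candidate delay.
        corr = [sum(a * r for a, r in zip(at, residual)) for at in atoms]
        k = max(range(len(atoms)), key=lambda i: abs(corr[i]))
        picked.append((delays[k], corr[k]))
        # Remove the selected atom's contribution from the residual.
        residual = [r - corr[k] * a for r, a in zip(residual, atoms[k])]
    return picked
```

A single path at a fractional delay of 3.5 taps spreads over many taps of the sampled response, which is the leakage effect the abstract describes. Searching a quarter-tap delay grid with this routine still selects 3.5 as the first delay, because the matching dictionary vector is the one best aligned with the observed response.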
