Hang Shi

Physics-informed Diffusion Generation for Geomagnetic Map Interpolation

Jan 31, 2026

Abstract:Geomagnetic map interpolation aims to infer unobserved geomagnetic data at spatial points, yielding critical applications in navigation and resource exploration. However, existing methods for scattered data interpolation are not specifically designed for geomagnetic maps, which inevitably leads to suboptimal performance due to detection noise and the laws of physics. Therefore, we propose a Physics-informed Diffusion Generation framework (PDG) to interpolate incomplete geomagnetic maps. First, we design a physics-informed mask strategy to guide the diffusion generation process based on a local receptive field, effectively eliminating noise interference. Second, we impose a physics-informed constraint on the diffusion generation results following the kriging principle of geomagnetic maps, ensuring strict adherence to the laws of physics. Extensive experiments and in-depth analyses on four real-world datasets demonstrate the superiority and effectiveness of each component of PDG.

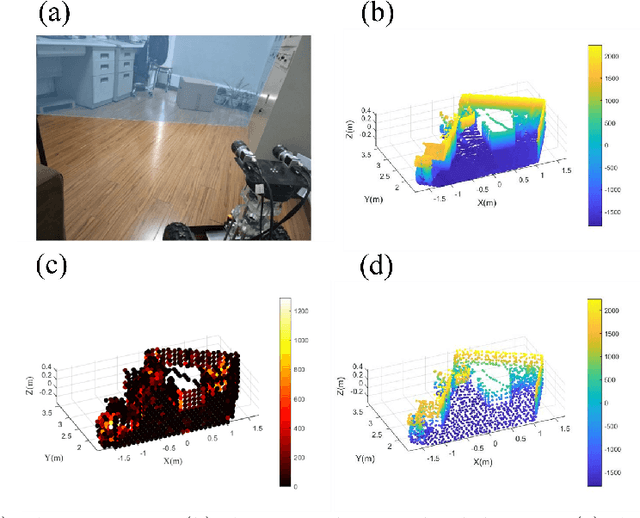

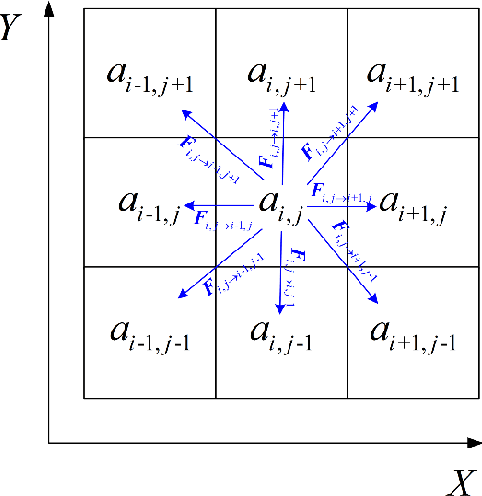

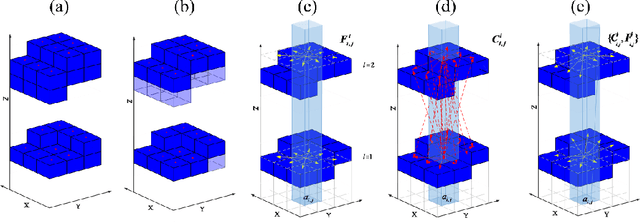

Path Planning on Multi-level Point Cloud with a Weighted Traversability Graph

Apr 30, 2025

Abstract:This article proposes a new path planning method for addressing multi-level terrain situations. The proposed method includes innovations in three aspects: 1) the pre-processing of point cloud maps with a multi-level skip-list structure and data-slimming algorithm for well-organized and simplified map formalization and management, 2) the direct acquisition of local traversability indexes through vehicle and point cloud interaction analysis, which saves work in surface fitting, and 3) the assignment of traversability indexes on a multi-level connectivity graph to generate a weighted traversability graph for generally search-based path planning. The A* algorithm is modified to utilize the traversability graph to generate a short and safe path. The effectiveness and reliability of the proposed method are verified through indoor and outdoor experiments conducted in various environments, including multi-floor buildings, woodland, and rugged mountainous regions. The results demonstrate that the proposed method can properly address 3D path planning problems for ground vehicles in a wide range of situations.
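The idea of searching a weighted traversability graph with A* can be illustrated with a minimal sketch. This is not the authors' modified A*; it assumes a hypothetical graph in which each node has a 3D position and a traversability penalty in [0, 1] (0 = easy to traverse), and it inflates each edge length by the penalty of the node being entered. The names `astar`, `risk_weight`, and the toy graph are illustrative assumptions.

```python
import heapq
import math


def astar(nodes, edges, cost, start, goal, risk_weight=1.0):
    """A* over a graph whose edge lengths are inflated by a traversability penalty.

    nodes: id -> (x, y, z) position
    edges: id -> list of neighbour ids
    cost:  id -> penalty in [0, 1] (hypothetical scale, 0 = easy terrain)
    """
    def dist(a, b):
        return math.dist(nodes[a], nodes[b])

    # Heap entries are (f = g + heuristic, g, node).
    open_heap = [(dist(start, goal), 0.0, start)]
    best_g = {start: 0.0}
    parent = {start: None}
    while open_heap:
        _, g, u = heapq.heappop(open_heap)
        if u == goal:
            path = []
            while u is not None:
                path.append(u)
                u = parent[u]
            return path[::-1], g
        if g > best_g.get(u, math.inf):
            continue  # stale heap entry
        for v in edges.get(u, ()):
            # Step cost >= Euclidean length, so the straight-line
            # heuristic never overestimates the remaining cost.
            step = dist(u, v) * (1.0 + risk_weight * cost[v])
            new_g = g + step
            if new_g < best_g.get(v, math.inf):
                best_g[v] = new_g
                parent[v] = u
                heapq.heappush(open_heap, (new_g + dist(v, goal), new_g, v))
    return None, math.inf


# Toy graph: "b" lies on the direct route but is hard to traverse.
nodes = {"a": (0, 0, 0), "b": (1, 0, 0), "c": (2, 0, 0), "d": (1, 1, 0)}
edges = {"a": ["b", "d"], "b": ["c"], "d": ["c"], "c": []}
cost = {"a": 0.0, "b": 0.9, "c": 0.0, "d": 0.0}

safe_path, safe_cost = astar(nodes, edges, cost, "a", "c", risk_weight=1.0)
short_path, short_cost = astar(nodes, edges, cost, "a", "c", risk_weight=0.0)
```

With the penalty switched on, the search prefers the slightly longer detour through `d`; with `risk_weight=0.0` it falls back to the geometrically shortest route through `b`.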

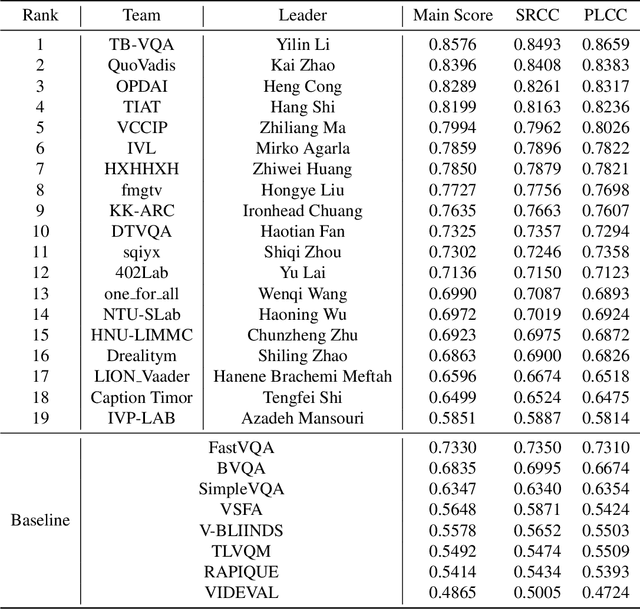

NTIRE 2023 Quality Assessment of Video Enhancement Challenge

Jul 19, 2023

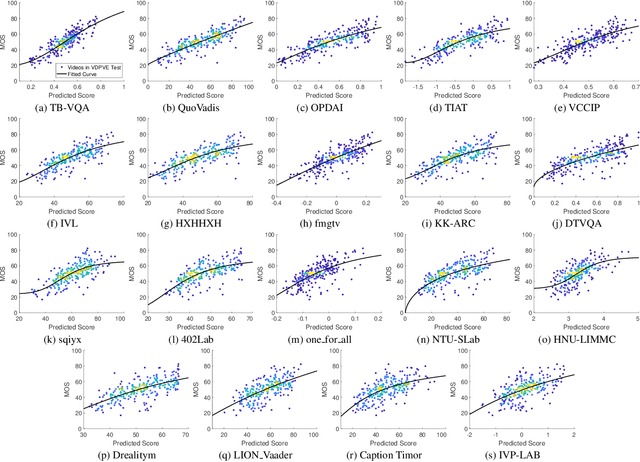

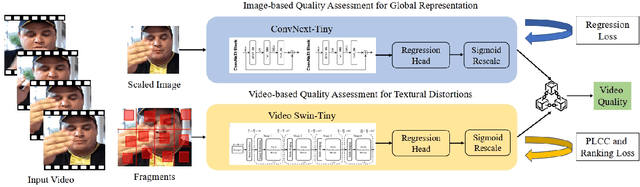

Abstract:This paper reports on the NTIRE 2023 Quality Assessment of Video Enhancement Challenge, which will be held in conjunction with the New Trends in Image Restoration and Enhancement Workshop (NTIRE) at CVPR 2023. The challenge addresses a major problem in the field of video processing, namely video quality assessment (VQA) for enhanced videos. The challenge uses the VQA Dataset for Perceptual Video Enhancement (VDPVE), which has a total of 1211 enhanced videos, including 600 videos with color, brightness, and contrast enhancements, 310 videos with deblurring, and 301 deshaked videos. The challenge has a total of 167 registered participants. During the development phase, 61 participating teams submitted their prediction results, with a total of 3168 submissions. During the final testing phase, 37 participating teams made a total of 176 submissions. Finally, 19 participating teams submitted their models and fact sheets, and detailed the methods they used. Some methods have achieved better results than baseline methods, and the winning methods have demonstrated superior prediction performance.

Ammunition Component Classification Using Deep Learning

Aug 26, 2022

Abstract:Ammunition scrap inspection is an essential step in the process of recycling ammunition metal scrap. Most ammunition is composed of a number of components, including case, primer, powder, and projectile. Ammo scrap containing energetics is considered to be potentially dangerous and should be separated before the recycling process. Manually inspecting each piece of scrap is tedious and time-consuming. We have gathered a dataset of ammunition components with the goal of applying artificial intelligence for classifying safe and unsafe scrap pieces automatically. First, two training datasets are manually created from visual and x-ray images of ammo. Second, the x-ray dataset is augmented using the spatial transforms of histogram equalization, averaging, sharpening, power law, and Gaussian blurring in order to compensate for the lack of sufficient training data. Lastly, the representative YOLOv4 object detection method is applied to detect the ammo components and classify the scrap pieces into safe and unsafe classes, respectively. The trained models are tested against unseen data in order to evaluate the performance of the applied method. The experiments demonstrate the feasibility of ammo component detection and classification using deep learning. The datasets and the pre-trained models are available at https://github.com/hadi-ghnd/Scrap-Classification.

* Conference: International Conference on Machine Learning and Data Mining (MLDM 2022); link: http://www.ibai-publishing.org/html/proceedings_2022/pdf/proceedings_mldm_2022.pdf; ISBN: 978-3-942952-93-4
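Two of the augmentation transforms named in the abstract above, the power-law (gamma) transform and histogram equalization, can be sketched in a few lines. This is an illustrative sketch only, assuming 8-bit grayscale images stored as lists of rows; the function names are hypothetical and the authors' actual pipeline may differ.

```python
def power_law(image, gamma):
    """Power-law (gamma) transform of an 8-bit grayscale image (rows of ints)."""
    return [[round(255 * (p / 255) ** gamma) for p in row] for row in image]


def equalize_histogram(image):
    """Histogram equalization of an 8-bit grayscale image (rows of ints)."""
    flat = [p for row in image for p in row]
    hist = [0] * 256
    for p in flat:
        hist[p] += 1

    # Cumulative distribution of pixel intensities.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    total = len(flat)
    if total == cdf_min:
        # Constant image: nothing to stretch.
        return [row[:] for row in image]

    # Map each intensity so that the output CDF is approximately linear.
    lut = [max(0, round((c - cdf_min) / (total - cdf_min) * 255)) for c in cdf]
    return [[lut[p] for p in row] for row in image]


# A dark, low-contrast toy image is stretched to the full 0..255 range.
dark = [[0, 1], [2, 3]]
stretched = equalize_histogram(dark)
brightened = power_law([[0, 128, 255]], 0.5)  # gamma < 1 brightens mid-tones
```

Each transform yields a new variant of the same labeled image, which is how such operations compensate for a small training set.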

Real-Time Accident Detection in Traffic Surveillance Using Deep Learning

Aug 12, 2022

Abstract:Automatic detection of traffic accidents is an important emerging topic in traffic monitoring systems. Nowadays many urban intersections are equipped with surveillance cameras connected to traffic management systems. Therefore, computer vision techniques can be viable tools for automatic accident detection. This paper presents a new efficient framework for accident detection at intersections for traffic surveillance applications. The proposed framework consists of three hierarchical steps, including efficient and accurate object detection based on the state-of-the-art YOLOv4 method, object tracking based on Kalman filter coupled with the Hungarian algorithm for association, and accident detection by trajectory conflict analysis. A new cost function is applied for object association to account for occlusion, overlapping objects, and shape changes in the object tracking step. The object trajectories are analyzed in terms of velocity, angle, and distance in order to detect different types of trajectory conflicts including vehicle-to-vehicle, vehicle-to-pedestrian, and vehicle-to-bicycle. Experimental results using real traffic video data show the feasibility of the proposed method in real-time applications of traffic surveillance. In particular, trajectory conflicts, including near-accidents and accidents occurring at urban intersections, are detected with a low false alarm rate and a high detection rate. The robustness of the proposed framework is evaluated using video sequences collected from YouTube with diverse illumination conditions. The dataset is publicly available at: http://github.com/hadi-ghnd/AccidentDetection.

* link to IEEE: https://ieeexplore.ieee.org/abstract/document/9827736
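The association step in the tracking stage above amounts to an optimal assignment between predicted track positions and new detections. The sketch below solves that assignment by brute force over permutations, which gives the same optimum the Hungarian algorithm would find but is only practical for a handful of objects. It uses plain Euclidean centroid distance, not the paper's new cost function, and the name `associate` and the gating threshold `max_dist` are assumptions for illustration.

```python
from itertools import permutations
import math


def associate(tracks, detections, max_dist=50.0):
    """Match track centroids to detection centroids with minimum total distance.

    tracks, detections: lists of (x, y) centroids.
    Returns a list of (track_index, detection_index) pairs; matches farther
    apart than max_dist are dropped (the track or detection stays unmatched).
    """
    # Always enumerate assignments of the smaller set into the larger one.
    swap = len(tracks) > len(detections)
    small, large = (detections, tracks) if swap else (tracks, detections)

    best_cost, best_perm = math.inf, ()
    for perm in permutations(range(len(large)), len(small)):
        total = sum(math.dist(small[i], large[j]) for i, j in enumerate(perm))
        if total < best_cost:
            best_cost, best_perm = total, perm

    pairs = []
    for i, j in enumerate(best_perm):
        if math.dist(small[i], large[j]) <= max_dist:
            pairs.append((j, i) if swap else (i, j))
    return pairs


# Two tracks, three detections: the far-away detection is left unmatched.
tracks = [(0, 0), (10, 10)]
detections = [(11, 9), (1, 1), (100, 100)]
matches = associate(tracks, detections)
```

In a full tracker, the track positions would come from the Kalman filter's prediction step, and unmatched detections would typically start new tracks.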

Smart Traffic Monitoring System using Computer Vision and Edge Computing

Sep 07, 2021

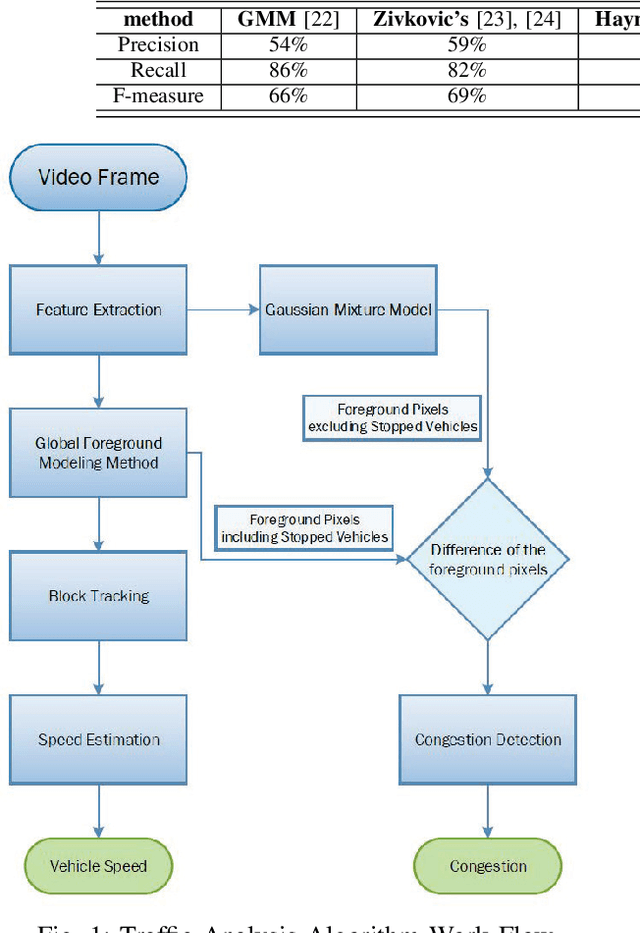

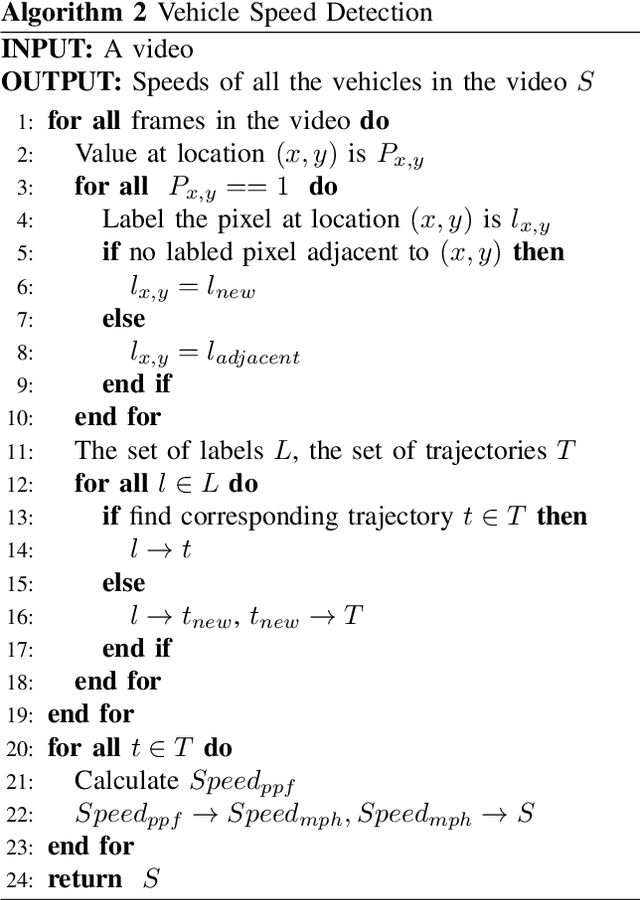

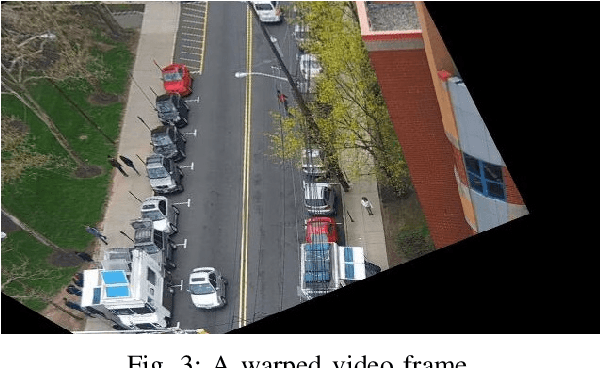

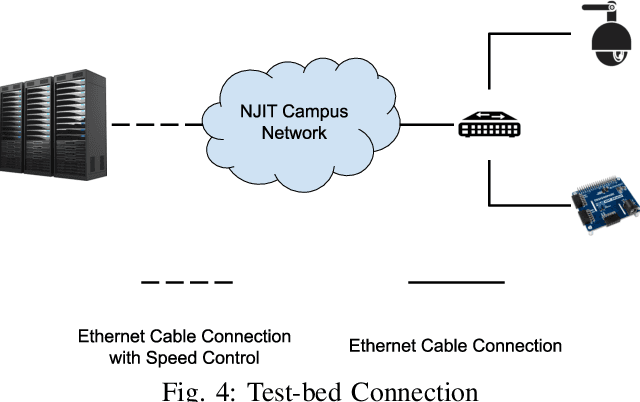

Abstract:Traffic management systems capture tremendous video data and leverage advances in video processing to detect and monitor traffic incidents. The collected data are traditionally forwarded to the traffic management center (TMC) for in-depth analysis and may thus congest the network paths to the TMC. To alleviate such bottlenecks, we propose to utilize edge computing by equipping edge nodes that are close to cameras with computing resources (e.g., cloudlets). A cloudlet, with limited computing resources as compared to the TMC, provides limited video processing capabilities. In this paper, we focus on two common traffic monitoring tasks, congestion detection and speed detection, and propose a two-tier edge computing based model that takes into account both the limited computing capability in cloudlets and the unstable network condition to the TMC. Our solution utilizes two algorithms for each task, one implemented at the edge and the other one at the TMC, which are designed with the consideration of different computing resources. While the TMC provides strong computation power, the video quality it receives depends on the underlying network conditions. On the other hand, the edge processes very high-quality video but with limited computing resources. Our model captures this trade-off. We evaluate the performance of the proposed two-tier model as well as the traffic monitoring algorithms via test-bed experiments under different weather as well as network conditions and show that our proposed hybrid edge-cloud solution outperforms both the cloud-only and edge-only solutions.
