Guohui Wang

Formalization of Robot Collision Detection Method based on Conformal Geometric Algebra

Dec 06, 2023

Abstract: Cooperative robots can significantly assist people in their productive activities, improving the quality of their work. Collision detection is vital to ensure the safe and stable operation of cooperative robots in these activities. As an advanced geometric language, conformal geometric algebra can simplify the construction of the robot collision model and the calculation of collision distance. Traditional methods, unlike the formal method based on conformal geometric algebra, may contain defects in modelling and calculation that are difficult to find. We use the formal method based on conformal geometric algebra to study the collision detection problem of cooperative robots. This paper builds formal models of geometric primitives and the robot body based on the conformal geometric algebra library in HOL Light. We analyse the shortest distance between geometric primitives and prove their collision determination conditions. On this basis, we construct a formal verification framework for the robot collision detection method. Finally, we apply the proposed framework to collision detection between two single-arm industrial cooperative robots. The flexibility and reliability of the proposed framework are verified by constructing a general collision model and a special collision model for two single-arm industrial cooperative robots.
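The idea of deciding collision from the shortest distance between primitives can be illustrated with a minimal sketch. It uses plain Euclidean vectors in Python, not conformal geometric algebra or the HOL Light formalization from the paper. The choice of primitives (a sphere and a capsule, i.e. a line segment with a radius) and the function names `point_segment_distance` and `sphere_capsule_collide` are assumptions made for illustration only.

```python
import math


def point_segment_distance(p, a, b):
    """Shortest Euclidean distance from point p to the segment from a to b."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    denom = sum(c * c for c in ab)
    if denom == 0:
        # Degenerate segment: a and b coincide.
        t = 0.0
    else:
        # Project p onto the line through a and b, then clamp to the segment.
        t = sum(x * y for x, y in zip(ap, ab)) / denom
        t = max(0.0, min(1.0, t))
    closest = [ai + t * c for ai, c in zip(a, ab)]
    return math.dist(p, closest)


def sphere_capsule_collide(center, r_sphere, a, b, r_capsule):
    """Hypothetical collision condition: the primitives touch or overlap when
    the distance from the sphere centre to the capsule axis is at most the
    sum of the two radii."""
    return point_segment_distance(center, a, b) <= r_sphere + r_capsule
```

For example, a sphere centred at `(0, 3, 0)` is at distance 3 from the axis running from `(-1, 0, 0)` to `(1, 0, 0)`, so it collides with the capsule only when the two radii sum to at least 3.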

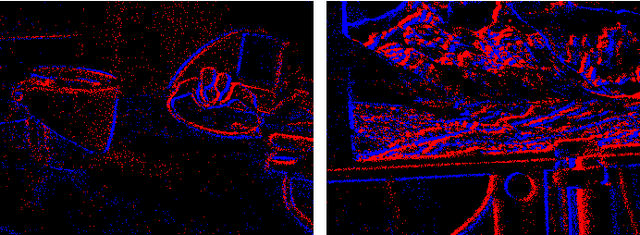

A Preliminary Research on Space Situational Awareness Based on Event Cameras

Mar 25, 2022

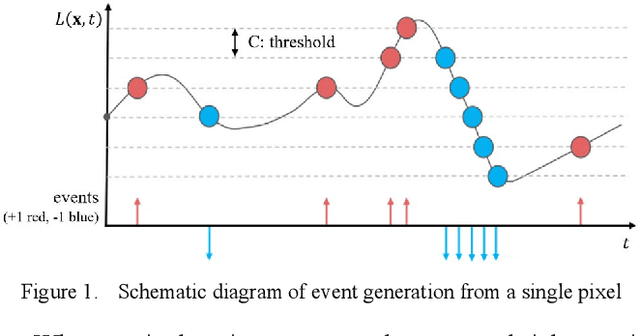

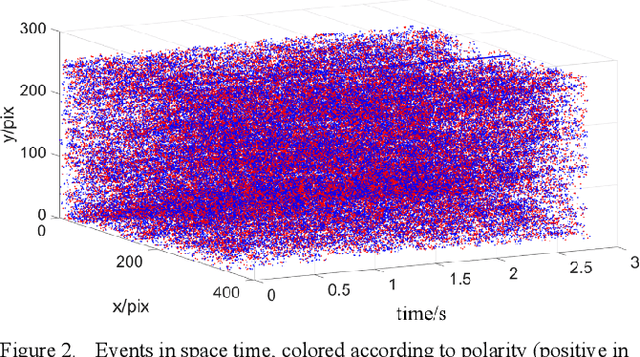

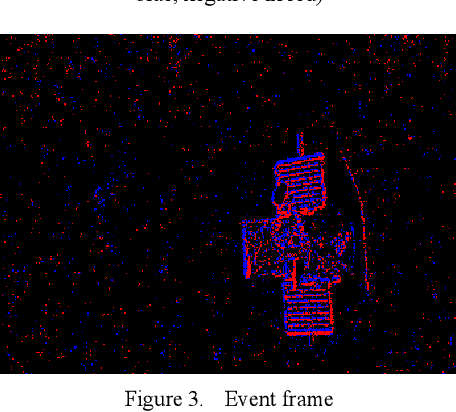

Abstract: An event camera is a new type of sensor that differs from traditional cameras. Each pixel is triggered asynchronously by an event, namely a change in the brightness falling on that pixel. If the increase or decrease exceeds a certain threshold, an event is output. Compared with traditional cameras, event cameras offer high temporal resolution, low latency, high dynamic range, low bandwidth and low power consumption. We carried out a series of observation experiments in a simulated space lighting environment. The experimental results show that the event camera can make full use of these advantages in space situational awareness. This article first introduces the basic principles of the event camera, then analyzes its advantages and disadvantages, then describes the observation experiments and analyzes their results, and finally presents a workflow for space situational awareness based on event cameras.
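The trigger rule described in the abstract can be sketched as follows. This is a simplified, frame-differencing illustration under stated assumptions: a real event camera works per pixel and asynchronously, whereas this sketch compares two brightness snapshots. The function name `generate_events` and the `(x, y, t, polarity)` tuple layout are hypothetical.

```python
def generate_events(prev_brightness, curr_brightness, threshold, timestamp):
    """Emit an (x, y, t, polarity) event for every pixel whose brightness
    change between the two snapshots is higher than the threshold."""
    events = []
    for y, (prev_row, curr_row) in enumerate(zip(prev_brightness, curr_brightness)):
        for x, (prev, curr) in enumerate(zip(prev_row, curr_row)):
            delta = curr - prev
            if abs(delta) > threshold:
                # Polarity +1 for an increase, -1 for a decrease.
                events.append((x, y, timestamp, 1 if delta > 0 else -1))
    return events


previous = [[10, 10], [10, 10]]
current = [[10, 14], [5, 11]]
print(generate_events(previous, current, threshold=3, timestamp=0.5))
# [(1, 0, 0.5, 1), (0, 1, 0.5, -1)]
```

Pixels whose brightness changes by less than the threshold produce no output at all, which is where the low bandwidth of the sensor comes from.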

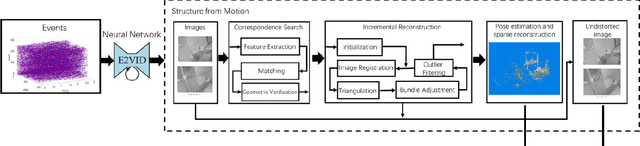

Event-Based Dense Reconstruction Pipeline

Mar 23, 2022

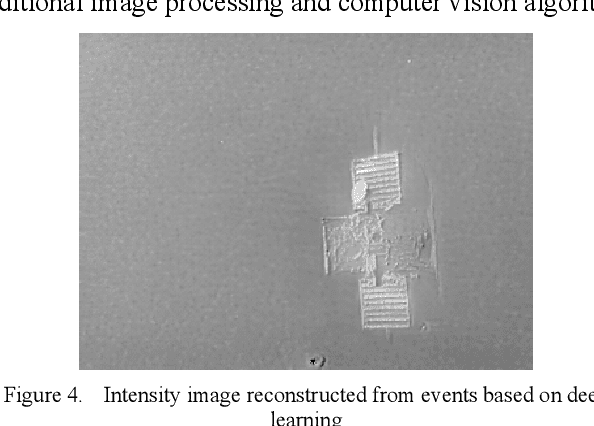

Abstract: Event cameras are a new type of sensor that differs from traditional cameras. Each pixel is triggered asynchronously by an event, namely a change in the brightness falling on that pixel. If the increase or decrease in brightness exceeds a certain threshold, an event is output. Compared with traditional cameras, event cameras have the advantages of high dynamic range and no motion blur. Since events are caused by the apparent motion of intensity edges, the majority of 3D reconstructed maps consist only of scene edges, i.e., semi-dense maps, which is not enough for some applications. In this paper, we propose a pipeline to realize event-based dense reconstruction. First, deep learning is used to reconstruct intensity images from events. Then, structure from motion (SfM) is used to estimate the camera intrinsics, extrinsics and a sparse point cloud. Finally, multi-view stereo (MVS) is used to complete the dense reconstruction.

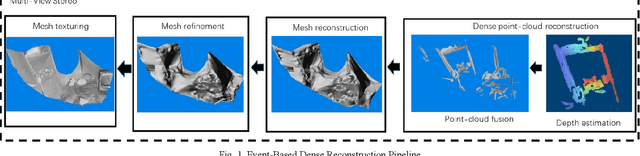

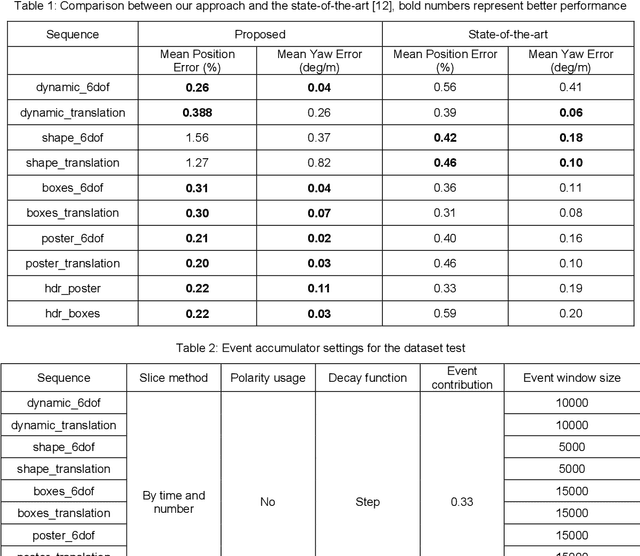

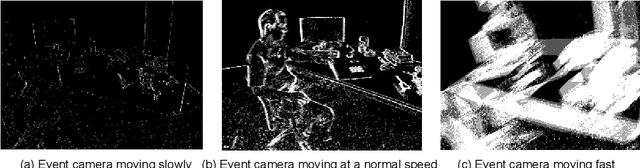

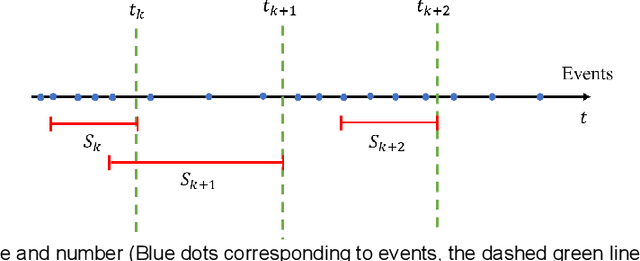

Research on Event Accumulator Settings for Event-Based SLAM

Dec 01, 2021

Abstract: Event cameras are a new type of sensor that differs from traditional cameras. Each pixel is triggered asynchronously by an event, namely a change in the brightness falling on that pixel. If the increase or decrease in brightness exceeds a certain threshold, an event is output. Compared with traditional cameras, event cameras have the advantages of high dynamic range and no motion blur. Accumulating events into frames and applying a traditional SLAM algorithm is a direct and efficient approach to event-based SLAM. Different event accumulator settings, such as the slicing method for the event stream, the handling of periods with no motion, whether polarity is used, the decay function and the event contribution, can produce quite different accumulated frames. We study how to accumulate event frames to achieve better event-based SLAM performance. For experimental verification, accumulated event frames are fed to a traditional SLAM system to construct an event-based SLAM system. Our strategy for setting the event accumulator has been evaluated on a public dataset. The experimental results show that our method achieves better performance on most sequences than the state-of-the-art event-frame-based SLAM algorithm. In addition, the proposed approach has been tested on a quadrotor UAV to show its potential for application in real scenarios. Code and results are open sourced to benefit the event camera research community.
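To make the accumulator settings named in the abstract concrete, here is a minimal sketch of accumulating one slice of events into a frame. It is not the authors' implementation: the exponential decay, the parameter names (`tau`, `use_polarity`, `contribution`) and the `(x, y, t, polarity)` event layout are assumptions chosen for illustration.

```python
import math


def accumulate_events(events, width, height, t_ref,
                      tau=0.03, use_polarity=True, contribution=1.0):
    """Accumulate (x, y, t, polarity) events into a height x width frame.

    Each event adds `contribution`, scaled by an exponential decay in its
    age relative to t_ref (assumed decay function), and signed by its
    polarity when use_polarity is True.
    """
    frame = [[0.0] * width for _ in range(height)]
    for x, y, t, polarity in events:
        weight = contribution * math.exp(-(t_ref - t) / tau)
        frame[y][x] += weight * (polarity if use_polarity else 1)
    return frame


events = [(0, 0, 1.0, 1), (0, 0, 1.0, -1), (1, 0, 1.0, 1)]
print(accumulate_events(events, width=2, height=1, t_ref=1.0))
# [[0.0, 1.0]]
print(accumulate_events(events, width=2, height=1, t_ref=1.0, use_polarity=False))
# [[2.0, 1.0]]
```

The two calls show why the polarity setting matters: with polarity, opposite events at the same pixel cancel out, while without it they reinforce each other.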

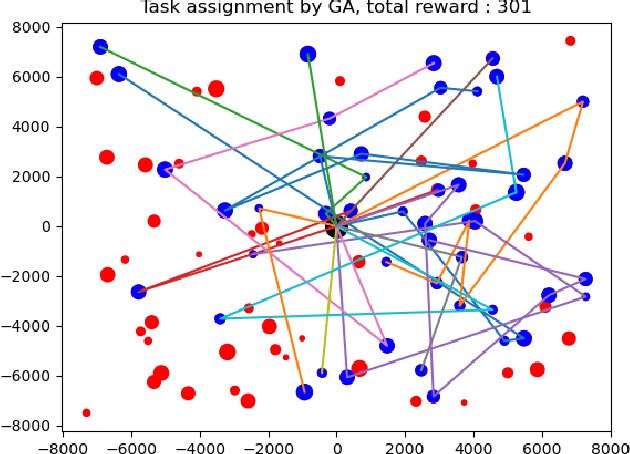

A Benchmark for Multi-UAV Task Assignment of an Extended Team Orienteering Problem

Sep 01, 2020

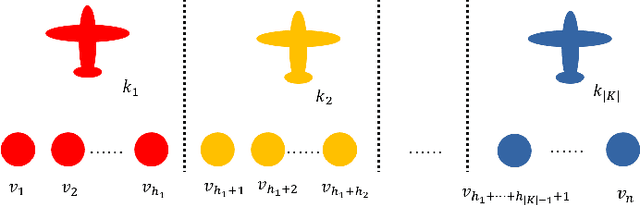

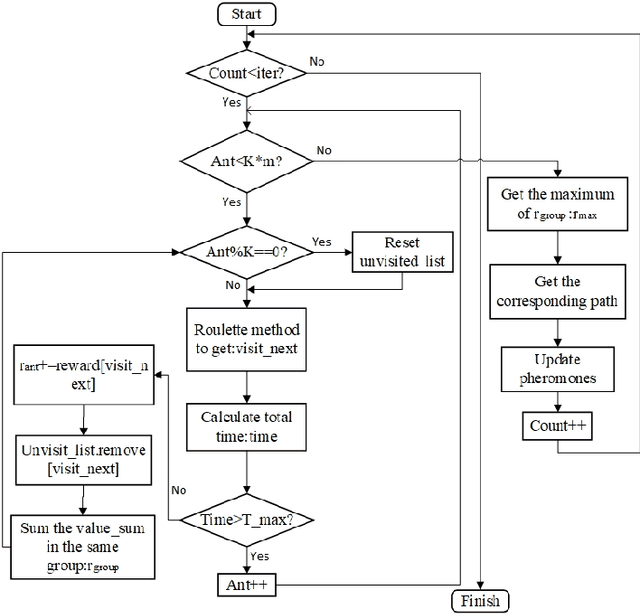

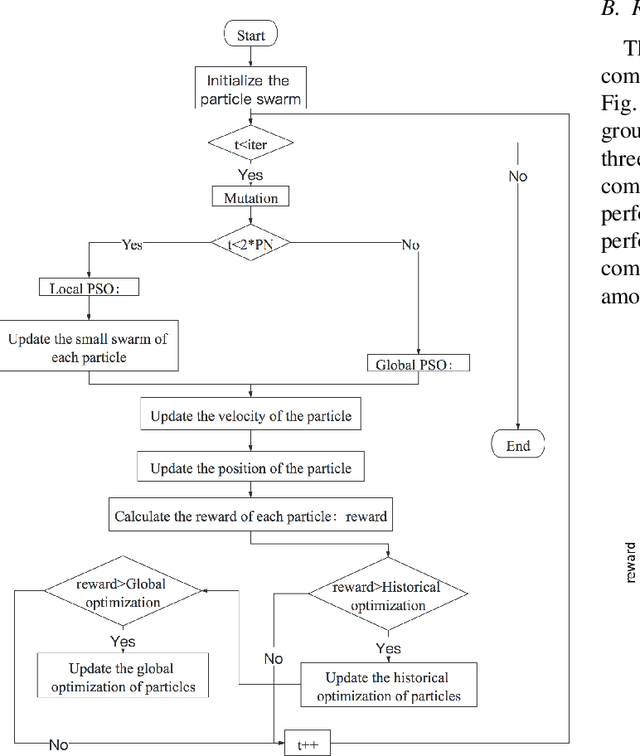

Abstract: A benchmark for multi-UAV task assignment is presented for evaluating different algorithms. An extended Team Orienteering Problem is modeled for one kind of multi-UAV task assignment problem. Three intelligent algorithms, i.e., Genetic Algorithm, Ant Colony Optimization and Particle Swarm Optimization, are implemented to solve the problem. A series of experiments with different settings is conducted to evaluate the three algorithms. The modeled problem and the evaluation results constitute a benchmark that can be used to evaluate other algorithms for multi-UAV task assignment problems.
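As a rough illustration of how a candidate assignment might be scored in a Team Orienteering Problem, the sketch below evaluates a set of UAV routes. It covers only the basic problem (collect rewards within a time limit), not the paper's extension, and every name in it (`route_time`, `team_reward`, the zero-reward penalty for infeasible routes) is a hypothetical choice.

```python
import math


def route_time(route, coords, speed):
    """Travel time along a route given as a sequence of node ids."""
    length = sum(math.dist(coords[a], coords[b]) for a, b in zip(route, route[1:]))
    return length / speed


def team_reward(routes, coords, rewards, speed, time_limit):
    """Total reward collected by all UAVs; each target counts once.

    Every route is assumed to start and end at a depot. A solution in
    which any route exceeds the time limit scores 0.0 (assumed penalty).
    """
    visited = set()
    for route in routes:
        if route_time(route, coords, speed) > time_limit:
            return 0.0
        visited.update(route[1:-1])  # skip the depot at both ends
    return float(sum(rewards[node] for node in visited))


coords = {0: (0, 0), 1: (3, 4), 2: (0, 5)}
rewards = {1: 5, 2: 3}
print(team_reward([[0, 1, 0], [0, 2, 0]], coords, rewards, speed=1.0, time_limit=10))
# 8.0
```

A Genetic Algorithm, Ant Colony Optimization or Particle Swarm Optimization solver would call a scoring function of this kind as its fitness measure while searching over routes.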

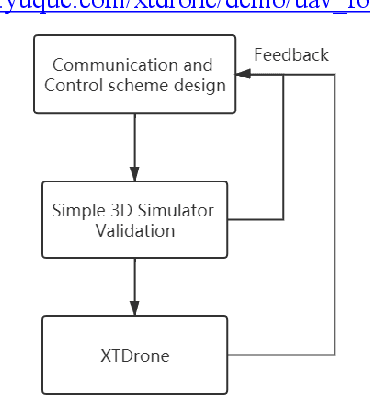

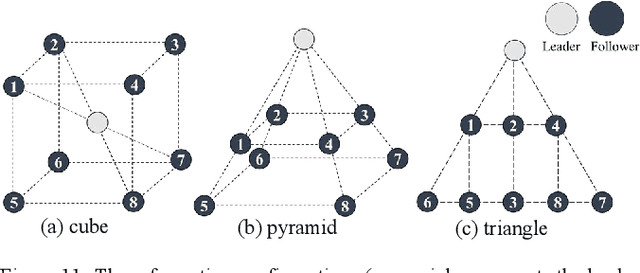

XTDrone: A Customizable Multi-Rotor UAVs Simulation Platform

Mar 26, 2020

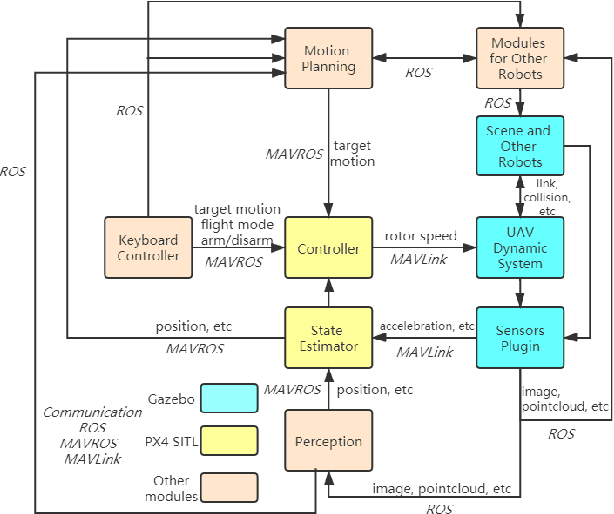

Abstract: A customizable multi-rotor UAV simulation platform based on ROS, Gazebo and PX4 is presented. The platform, called XTDrone, integrates dynamic models, sensor models, control algorithms, state estimation algorithms, and 3D scenes. It supports multiple UAVs and other robots. The platform is modular and each module can be modified, which means that users can test their own algorithms, such as SLAM, object detection, motion planning, attitude control, multi-UAV cooperation, and cooperation with other robots, on the platform. The platform runs in lockstep, so the simulation speed can be adjusted according to the computer's performance. In this paper, two cases, evaluating different visual SLAM algorithms and realizing UAV formation, are shown to demonstrate how the platform works.

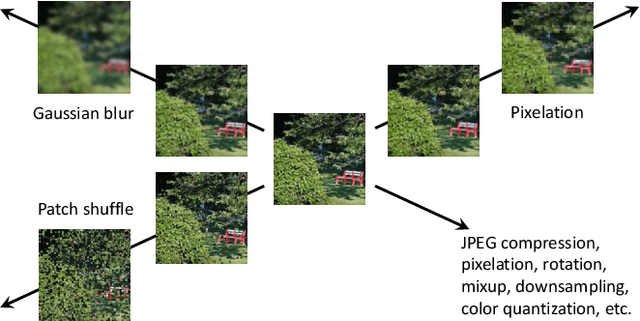

Revisiting Image Aesthetic Assessment via Self-Supervised Feature Learning

Nov 26, 2019

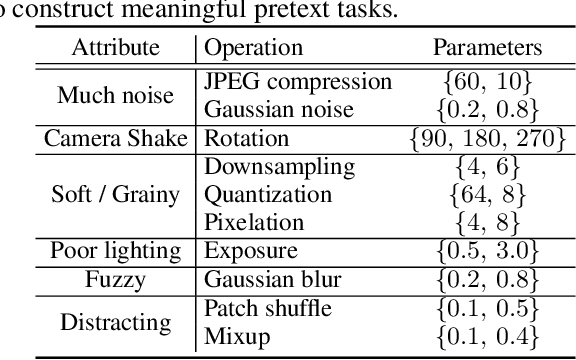

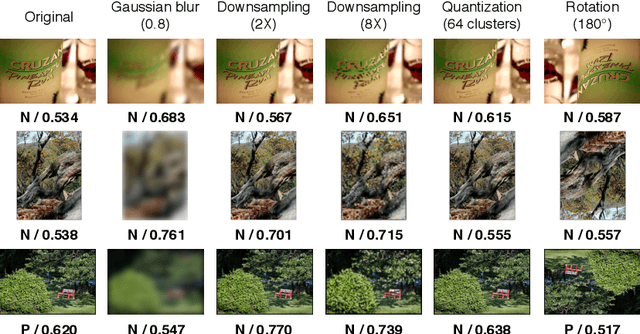

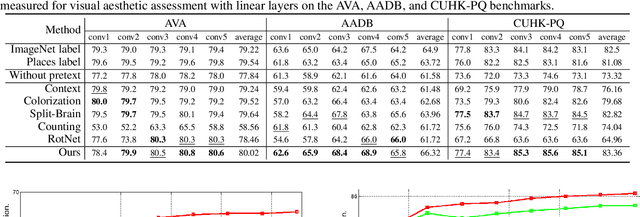

Abstract: Visual aesthetic assessment has been an active research field for decades. Although the latest methods have achieved promising performance on benchmark datasets, they typically rely on a large number of manual annotations, including both aesthetic labels and related image attributes. In this paper, we revisit the problem of image aesthetic assessment from the self-supervised feature learning perspective. Our motivation is that a suitable feature representation for image aesthetic assessment should be able to distinguish different expert-designed image manipulations, which are closely related to negative aesthetic effects. To this end, we design two novel pretext tasks to identify the types and parameters of editing operations applied to synthetic instances. The features from our pretext tasks are then fed to a one-layer linear classifier to evaluate performance in terms of binary aesthetic classification. We conduct extensive quantitative experiments on three benchmark datasets and demonstrate that our approach can faithfully extract aesthetics-aware features and outperform alternative pretext schemes. Moreover, we achieve results comparable to state-of-the-art supervised methods that use 10 million labels from ImageNet.

Computer Vision Accelerators for Mobile Systems based on OpenCL GPGPU Co-Processing

Mar 17, 2014

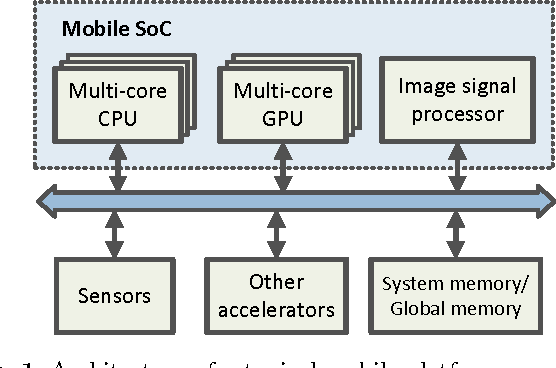

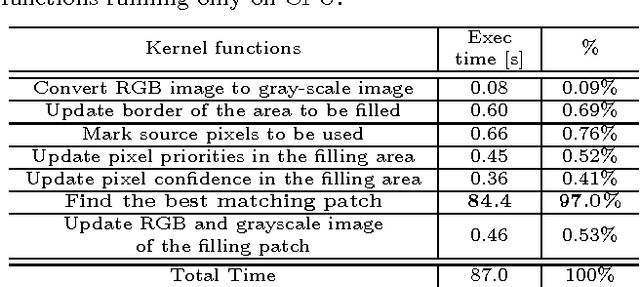

Abstract: In this paper, we present an OpenCL-based heterogeneous implementation of a computer vision algorithm -- an image inpainting-based object removal algorithm -- on mobile devices. To take advantage of the computation power of the mobile processor, the algorithm workflow is partitioned between the CPU and the GPU based on profiling results on mobile devices, so that the computationally intensive kernels are accelerated by the mobile GPGPU (general-purpose computing using graphics processing units). By exploring the implementation trade-offs and applying the proposed optimization strategies at different levels, including algorithm optimization, parallelism optimization, and memory access optimization, we significantly speed up the algorithm with the CPU-GPU heterogeneous implementation while preserving the quality of the output images. Experimental results show that heterogeneous computing based on GPGPU co-processing can significantly speed up computer vision algorithms and make them practical on real-world mobile devices.
