Guisik Kim

Naturalness-Aware Curriculum Learning with Dynamic Temperature for Speech Deepfake Detection

May 20, 2025

Abstract:Recent advances in speech deepfake detection (SDD) have significantly improved artifacts-based detection in spoofed speech. However, most models overlook speech naturalness, a crucial cue for distinguishing bona fide speech from spoofed speech. This study proposes naturalness-aware curriculum learning, a novel training framework that leverages speech naturalness to enhance the robustness and generalization of SDD. This approach measures sample difficulty using both ground-truth labels and mean opinion scores, and adjusts the training schedule to progressively introduce more challenging samples. To further improve generalization, a dynamic temperature scaling method based on speech naturalness is incorporated into the training process. A 23% relative reduction in the EER was achieved in the experiments on the ASVspoof 2021 DF dataset, without modifying the model architecture. Ablation studies confirmed the effectiveness of naturalness-aware training strategies for SDD tasks.
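The abstract does not give formulas, so the following is only a rough sketch of how a naturalness-aware curriculum and a naturalness-dependent temperature could fit together. Everything specific here is an assumption for illustration: the `difficulty` definition, the linear pacing in `curriculum_subset`, the linear MOS-to-temperature mapping, and all parameter names and defaults are hypothetical and are not taken from the paper.

```python
import math

def naturalness(mos, mos_min=1.0, mos_max=5.0):
    """Map a mean opinion score onto [0, 1] (assumed 1-5 MOS scale)."""
    return (mos - mos_min) / (mos_max - mos_min)

def difficulty(label, mos):
    """Hypothetical difficulty in [0, 1]; label 1 = bona fide, 0 = spoofed.

    Assumption: natural-sounding spoofs and unnatural-sounding bona fide
    speech are the hard cases.
    """
    nat = naturalness(mos)
    return nat if label == 0 else 1.0 - nat

def curriculum_subset(samples, epoch, total_epochs, start_frac=0.5):
    """Keep the easiest fraction of samples, growing linearly to the full set."""
    frac = min(1.0, start_frac + (1.0 - start_frac) * epoch / max(1, total_epochs - 1))
    ranked = sorted(samples, key=lambda s: difficulty(s["label"], s["mos"]))
    return ranked[:max(1, math.ceil(frac * len(ranked)))]

def dynamic_temperature(mos, t_min=0.5, t_max=2.0):
    """Hypothetical linear mapping from naturalness to a softmax temperature."""
    return t_min + (t_max - t_min) * naturalness(mos)

def softmax_with_temperature(logits, temperature):
    """Numerically stable softmax of logits divided by the temperature."""
    peak = max(logits)
    exps = [math.exp((z - peak) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]
```

In this sketch a higher temperature flattens the predicted distribution for more natural-sounding samples; whether the paper scales in that direction is not stated in the abstract.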

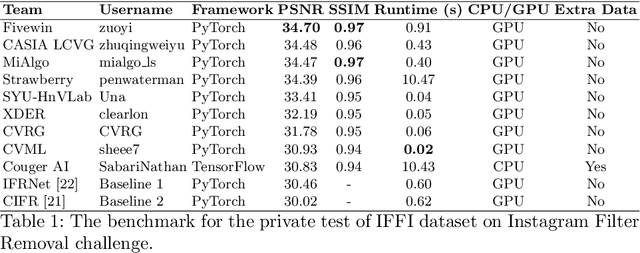

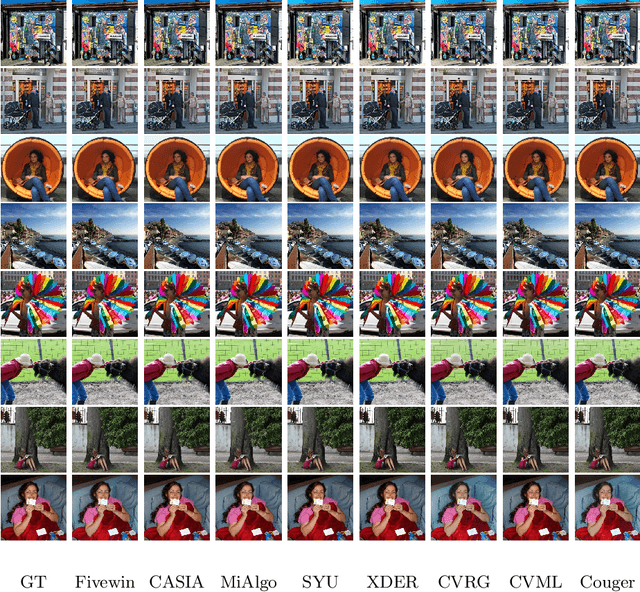

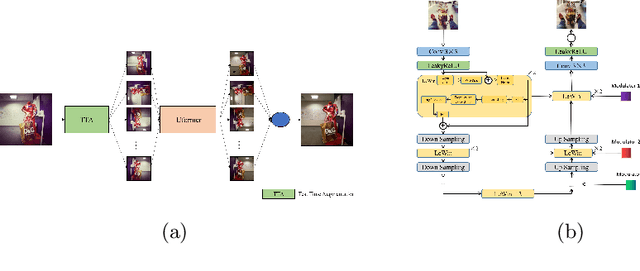

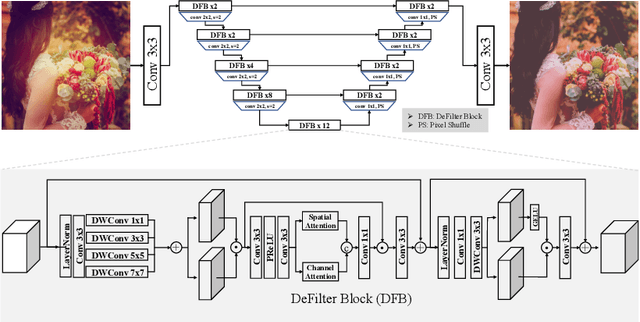

AIM 2022 Challenge on Instagram Filter Removal: Methods and Results

Oct 17, 2022

Abstract:This paper introduces the methods and results of the AIM 2022 challenge on Instagram Filter Removal. Social media filters transform images through consecutive non-linear operations, and the feature maps of the original content may be interpolated into a different domain. This reduces the overall performance of recent deep learning strategies. The main goal of this challenge is to produce realistic and visually plausible images in which the impact of the applied filters is mitigated while the content is preserved. The proposed solutions are ranked by their PSNR values with respect to the original images. Two prior studies on this task serve as baselines, and a total of 9 teams competed in the final phase of the challenge. This report presents a comparison of the qualitative results of the proposed solutions and the benchmark for the challenge.
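As a reminder of the ranking metric, PSNR between an original image and a restored one is 10 · log10(MAX² / MSE). The sketch below computes it over flat pixel lists; the function name `psnr` and the 8-bit default `max_value=255.0` are assumptions for illustration, not the challenge's evaluation script.

```python
import math

def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if not reference or len(reference) != len(restored):
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixel values.
    mse = sum((r - x) ** 2 for r, x in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher PSNR means the restored image is closer to the unfiltered original, so submissions would be sorted in descending order of this value.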

Style Transfer with Target Feature Palette and Attention Coloring

Nov 07, 2021

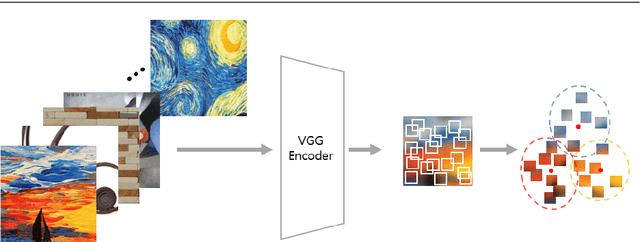

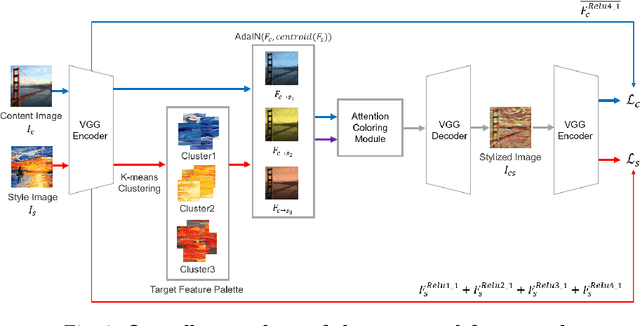

Abstract:Style transfer has attracted a lot of attention, as it can change a given image into one with splendid artistic styles while preserving the image structure. However, conventional approaches easily lose image details and tend to produce unpleasant artifacts during style transfer. In this paper, to solve these problems, a novel artistic stylization method with target feature palettes is proposed, which can transfer key features accurately. Specifically, our method contains two modules, namely feature palette composition (FPC) and attention coloring (AC) modules. The FPC module captures representative features based on K-means clustering and produces a target feature palette. The following AC module calculates attention maps between content and style images, and transfers colors and patterns based on the attention map and the target palette. These modules enable the proposed stylization to focus on key features and generate plausibly transferred images. Thus, the contributions of this work are a novel deep learning-based style transfer method, the target feature palette and attention coloring modules, and an in-depth analysis of the proposed method via an exhaustive ablation study. Qualitative and quantitative results show that our stylized images exhibit state-of-the-art performance, with strength in preserving core structures and details of the content image.
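To illustrate the idea of building a palette of representative features with K-means, here is a minimal plain-Python sketch. It is not the paper's FPC module: the function name `kmeans_palette`, the evenly spaced initialization, and the use of raw tuples as "features" are all assumptions made for this example.

```python
def kmeans_palette(features, k, iterations=20):
    """Cluster feature vectors with plain K-means and return the k centroids."""
    n = len(features)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of features")
    # Assumption: deterministic initialization from evenly spaced samples.
    centroids = [tuple(features[i * n // k]) for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for f in features:
            # Assign each feature to its nearest centroid (squared Euclidean distance).
            nearest = min(
                range(k),
                key=lambda j: sum((a - b) ** 2 for a, b in zip(f, centroids[j])),
            )
            clusters[nearest].append(f)
        # Recompute each centroid as its cluster mean; keep it if the cluster is empty.
        updated = [
            tuple(sum(dim) / len(c) for dim in zip(*c)) if c else centroids[j]
            for j, c in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return centroids
```

Each returned centroid plays the role of one palette entry; in a real pipeline the inputs would be deep feature vectors from the style image rather than hand-written tuples.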

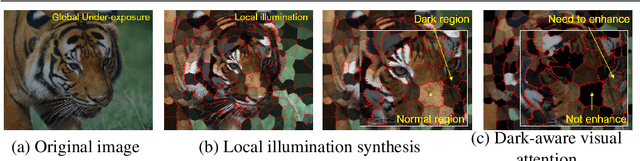

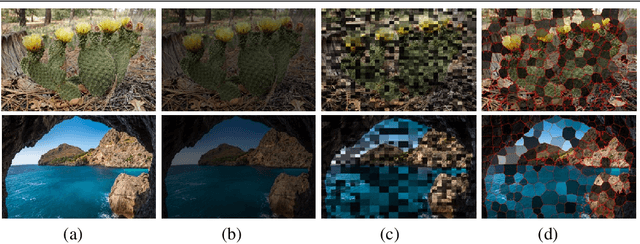

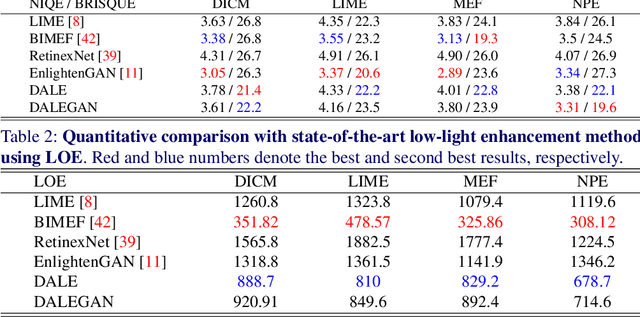

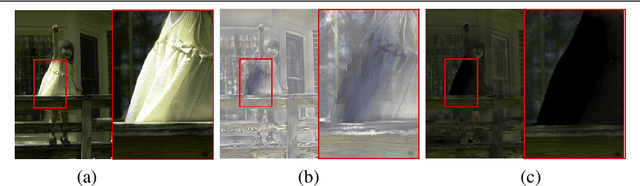

DALE : Dark Region-Aware Low-light Image Enhancement

Aug 28, 2020

Abstract:In this paper, we present a novel low-light image enhancement method called dark region-aware low-light image enhancement (DALE), in which dark regions are accurately recognized by the proposed visual attention module and their brightness is intensively enhanced. Our method can estimate the visual attention efficiently using super-pixels, without any complicated process. Thus, the method can preserve the color, tone, and brightness of the original images and prevent normally illuminated areas from being saturated and distorted. Experimental results show that our method accurately identifies dark regions via the proposed visual attention, and qualitatively and quantitatively outperforms state-of-the-art methods.

AIM 2019 Challenge on Real-World Image Super-Resolution: Methods and Results

Nov 19, 2019

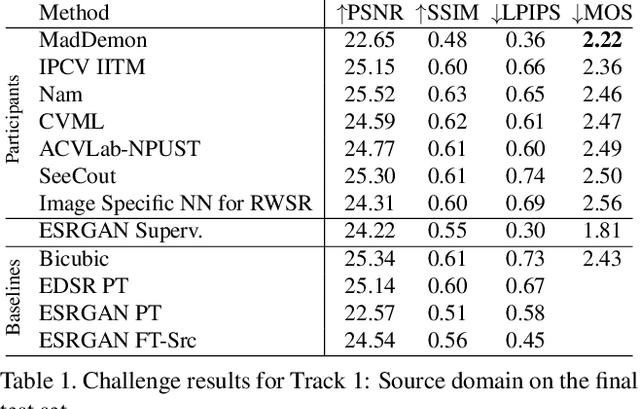

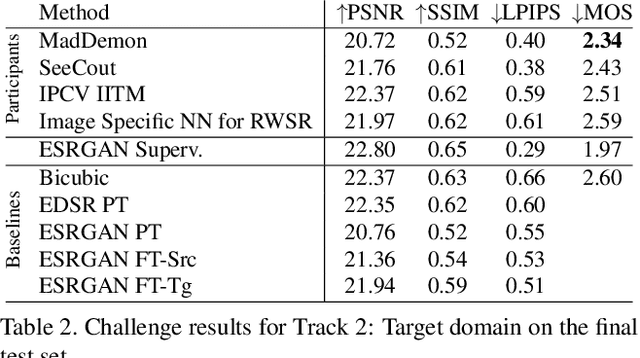

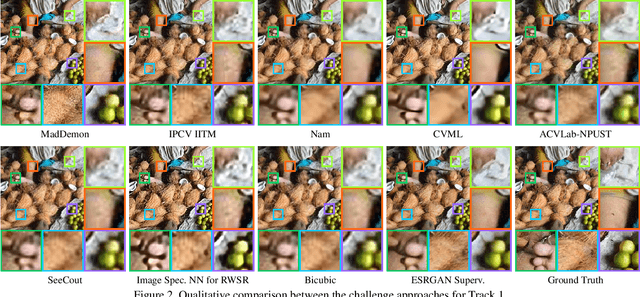

Abstract:This paper reviews the AIM 2019 challenge on real-world super-resolution. It focuses on the participating methods and final results. The challenge addresses the real-world setting, where paired true high- and low-resolution images are unavailable. For training, only one set of source input images is therefore provided in the challenge. In Track 1: Source Domain, the aim is to super-resolve such images while preserving the low-level image characteristics of the source input domain. In Track 2: Target Domain, a set of high-quality images is also provided for training, which defines the output domain and desired quality of the super-resolved images. To allow for quantitative evaluation, the source input images in both tracks are constructed using artificial, but realistic, image degradations. The challenge is the first of its kind, aiming to advance the state-of-the-art and provide a standard benchmark for this newly emerging task. In total, 7 teams competed in the final testing phase, demonstrating new and innovative solutions to the problem.

LED2Net: Deep Illumination-aware Dehazing with Low-light and Detail Enhancement

Jul 26, 2019

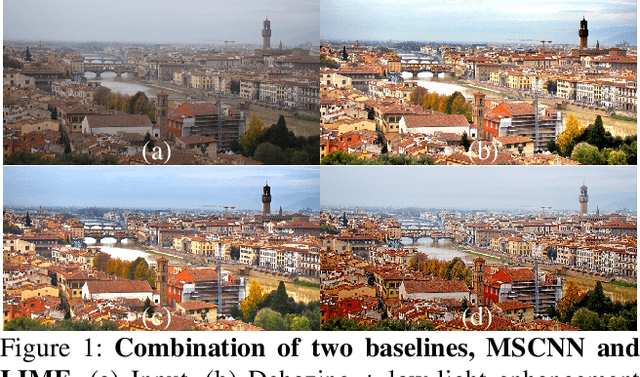

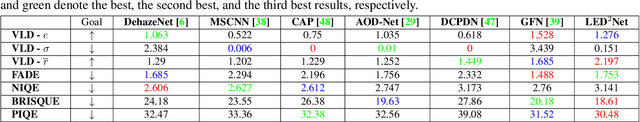

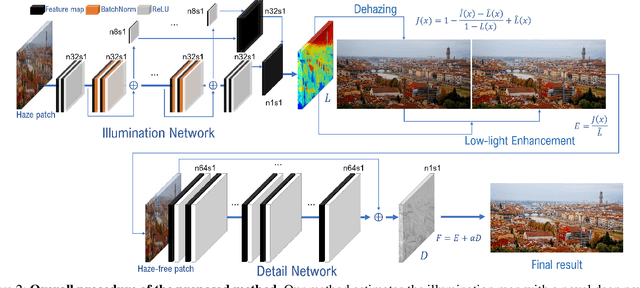

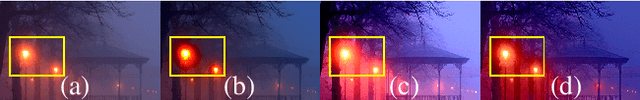

Abstract:We present a novel dehazing and low-light enhancement method based on an illumination map that is accurately estimated by a convolutional neural network (CNN). In this paper, the illumination map is used as a component for three different tasks, namely, atmospheric light estimation, transmission map estimation, and low-light enhancement. To train CNNs for dehazing and low-light enhancement simultaneously based on the retinex theory, we synthesize numerous low-light and hazy images from normal hazy images from the FADE data set. In addition, we further improve the network using detail enhancement. Experimental results demonstrate that our method surpasses recent state-of-the-art algorithms quantitatively and qualitatively. In particular, our haze-free images present vivid colors and enhanced visibility without a halo effect or color distortion.
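Atmospheric light and transmission maps are the two unknowns of the widely used atmospheric scattering model, I = J · t + A · (1 − t), where I is the hazy observation, J the haze-free scene, t the transmission, and A the atmospheric light. The abstract does not state which haze model the network assumes, so the sketch below only illustrates that standard model and its inversion on single pixel values; the function names and the lower bound `t_floor` are hypothetical.

```python
def add_haze(scene, transmission, atmospheric_light):
    """Forward scattering model: I = J * t + A * (1 - t)."""
    return scene * transmission + atmospheric_light * (1.0 - transmission)

def remove_haze(hazy, transmission, atmospheric_light, t_floor=0.1):
    """Invert the model: J = (I - A) / max(t, t_floor) + A.

    The floor on t is a common safeguard against amplifying noise where the
    transmission is close to zero (assumed value, for illustration only).
    """
    t = max(transmission, t_floor)
    return (hazy - atmospheric_light) / t + atmospheric_light
```

Given estimates of A and t for each pixel, applying `remove_haze` recovers the scene radiance; the quality of the result depends entirely on how well those two quantities are estimated.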
