Suhyeon Ha

AdvPaint: Protecting Images from Inpainting Manipulation via Adversarial Attention Disruption

Mar 13, 2025

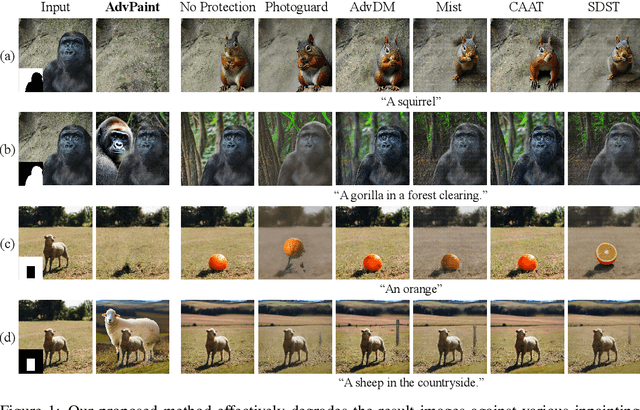

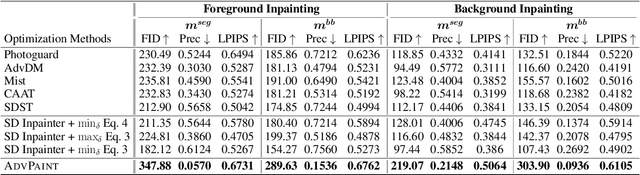

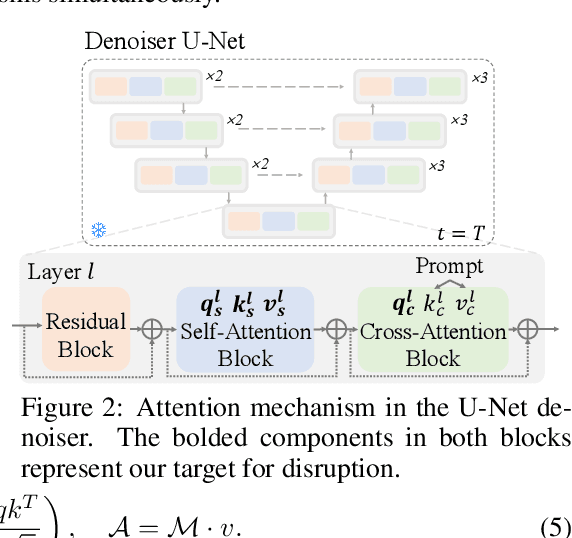

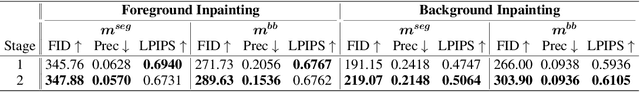

Abstract: The outstanding capability of diffusion models in generating high-quality images poses significant threats when misused by adversaries. In particular, we consider malicious adversaries who exploit diffusion models for inpainting tasks, such as replacing a specific region with a celebrity. While existing methods for protecting images from manipulation in diffusion-based generative models have primarily focused on image-to-image and text-to-image tasks, the challenge of preventing unauthorized inpainting has rarely been addressed, so existing methods often provide suboptimal protection against it. To mitigate inpainting abuses, we propose ADVPAINT, a novel defensive framework that generates adversarial perturbations that effectively disrupt the adversary's inpainting tasks. ADVPAINT targets the self- and cross-attention blocks in a target diffusion inpainting model to disrupt semantic understanding and prompt interactions during image generation. ADVPAINT also employs a two-stage perturbation strategy that divides the perturbation region based on an enlarged bounding box around the object, enhancing robustness across diverse masks of varying shapes and sizes. Our experimental results demonstrate that ADVPAINT's perturbations are highly effective in disrupting the adversary's inpainting tasks, outperforming existing methods; ADVPAINT attains over a 100-point increase in FID and substantial decreases in precision.
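The region split behind the two-stage strategy can be illustrated with a minimal sketch. It only shows how an image might be divided into the area inside and outside an enlarged bounding box around the object; the function names `enlarged_bbox` and `split_regions` and the `scale` factor are hypothetical, and the abstract does not state how much the box is enlarged or how each stage is optimized.

```python
import numpy as np

def enlarged_bbox(mask, scale=2.0):
    """Bounding box of the nonzero mask pixels, enlarged about its center.

    `scale` is an assumed enlargement factor (not taken from the paper).
    Returns (top, bottom, left, right) with exclusive bottom/right,
    clipped to the image borders.
    """
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask is empty")
    top, bottom = ys.min(), ys.max() + 1
    left, right = xs.min(), xs.max() + 1
    cy, cx = (top + bottom) / 2.0, (left + right) / 2.0
    half_h = (bottom - top) * scale / 2.0
    half_w = (right - left) * scale / 2.0
    h, w = mask.shape
    t = max(0, int(np.floor(cy - half_h)))
    b = min(h, int(np.ceil(cy + half_h)))
    l = max(0, int(np.floor(cx - half_w)))
    r = min(w, int(np.ceil(cx + half_w)))
    return t, b, l, r

def split_regions(mask, scale=2.0):
    """Boolean maps for the area inside and outside the enlarged box."""
    t, b, l, r = enlarged_bbox(mask, scale)
    inside = np.zeros(mask.shape, dtype=bool)
    inside[t:b, l:r] = True
    return inside, ~inside

# Toy 10x10 image with a 2x2 object in the middle.
mask = np.zeros((10, 10), dtype=np.uint8)
mask[4:6, 4:6] = 1
inside, outside = split_regions(mask, scale=2.0)
```

Under this assumption, each stage would optimize a perturbation restricted to one of the two boolean maps, for example by multiplying the perturbation by `inside` or `outside` before adding it to the image.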

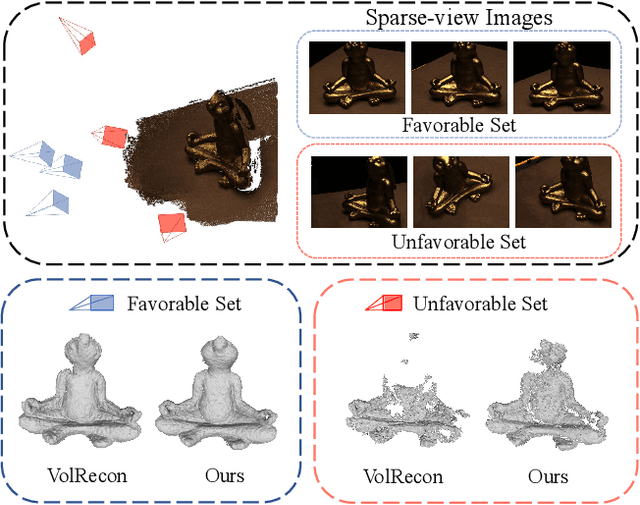

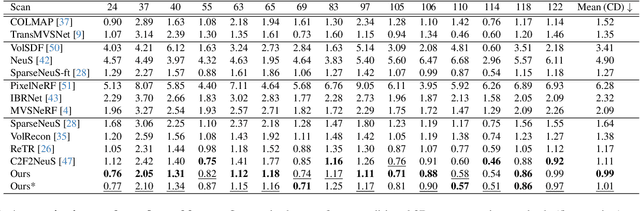

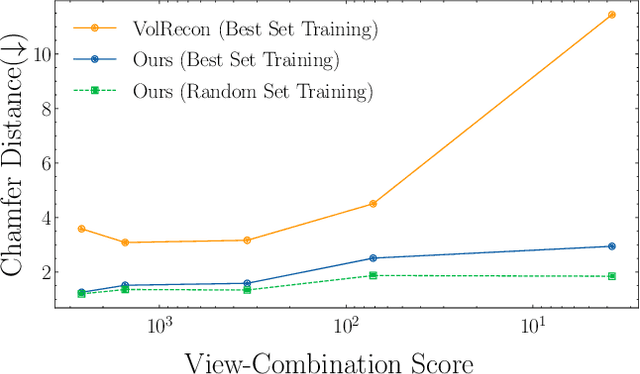

UFORecon: Generalizable Sparse-View Surface Reconstruction from Arbitrary and UnFavOrable Sets

Mar 11, 2024

Abstract: Generalizable neural implicit surface reconstruction aims to obtain an accurate underlying geometry given a limited number of multi-view images from unseen scenes. However, existing methods select only informative and relevant views using predefined scores for both the training and testing phases. This constraint renders the model impractical in real-world scenarios, where the availability of favorable combinations cannot always be ensured. We introduce and validate a view-combination score to indicate the effectiveness of the input view combination. We observe that previous methods output degenerate solutions under arbitrary and unfavorable sets. Building upon this finding, we propose UFORecon, a robust, view-combination-generalizable surface reconstruction framework. To achieve this, we apply cross-view matching transformers to model interactions between source images and build correlation frustums to capture global correlations. Additionally, we explicitly encode pairwise feature similarities as view-consistent priors. Our proposed framework significantly outperforms previous methods in terms of view-combination generalizability and also in the conventional generalizable protocol trained with favorable view combinations. The code is available at https://github.com/Youngju-Na/UFORecon.

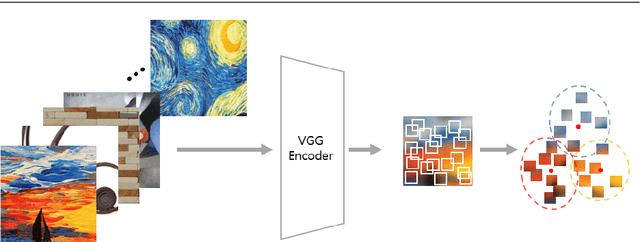

Style Transfer with Target Feature Palette and Attention Coloring

Nov 07, 2021

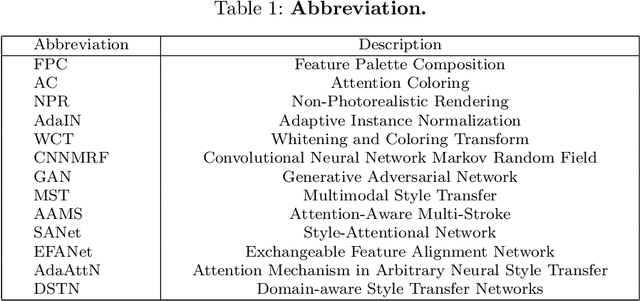

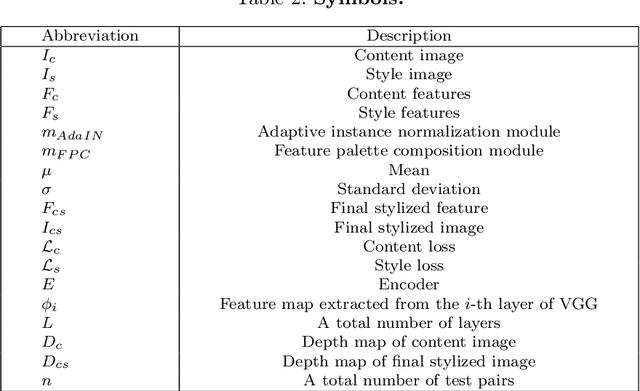

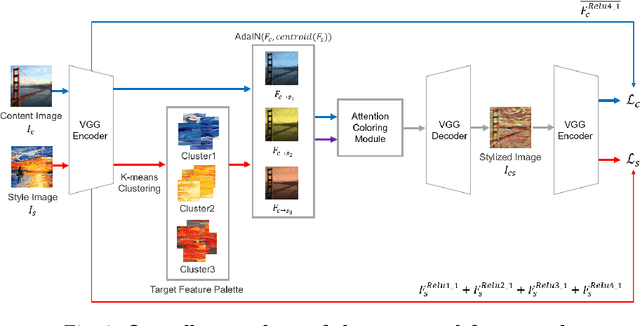

Abstract: Style transfer has attracted a lot of attention, as it can change a given image into one with splendid artistic styles while preserving the image structure. However, conventional approaches easily lose image details and tend to produce unpleasant artifacts during style transfer. In this paper, to solve these problems, a novel artistic stylization method with target feature palettes is proposed, which can transfer key features accurately. Specifically, our method contains two modules, namely the feature palette composition (FPC) and attention coloring (AC) modules. The FPC module captures representative features based on K-means clustering and produces a target feature palette. The following AC module calculates attention maps between content and style images, and transfers colors and patterns based on the attention map and the target palette. These modules enable the proposed stylization to focus on key features and generate plausibly transferred images. The contributions of the proposed method are thus threefold: a novel deep learning-based style transfer method, the target feature palette and attention coloring modules, and an in-depth analysis of the proposed method via an exhaustive ablation study. Qualitative and quantitative results show that our stylized images exhibit state-of-the-art performance, with strength in preserving core structures and details of the content image.
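The idea of building a palette by clustering features can be sketched as follows. This is a plain K-means loop over a set of feature vectors, with the cluster centers serving as palette entries; it is not the authors' FPC module. The function name `feature_palette`, the number of clusters `k`, and the iteration count are assumptions, and in practice the feature vectors would come from a feature extractor that is not shown here.

```python
import numpy as np

def feature_palette(features, k=4, iters=20, seed=0):
    """Cluster feature vectors with K-means; return (centers, labels).

    `features` is an (N, D) array. The k cluster centers act as a small
    "palette" of representative features. k, iters, and seed are
    illustrative defaults, not values from the paper.
    """
    features = np.asarray(features, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialize centers from k distinct data points.
    init = rng.choice(len(features), size=k, replace=False)
    centers = features[init].copy()
    for _ in range(iters):
        # Squared Euclidean distance from every point to every center.
        dists = ((features[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = features[labels == j]
            if len(members) > 0:  # keep the old center if a cluster is empty
                centers[j] = members.mean(axis=0)
    # Final assignment so the labels match the returned centers.
    dists = ((features[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    labels = dists.argmin(axis=1)
    return centers, labels

# Toy example: two well-separated groups of 2-D "features".
group_a = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
group_b = group_a + 10
palette, labels = feature_palette(np.vstack([group_a, group_b]), k=2)
```

An attention step could then weight these palette entries per content location, but how the AC module does this is not specified in the abstract, so it is left out of the sketch.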
