Guanyu Chen

Value-Action Alignment in Large Language Models under Privacy-Prosocial Conflict

Jan 07, 2026

Abstract:Large language models (LLMs) are increasingly used to simulate decision-making tasks involving personal data sharing, where privacy concerns and prosocial motivations can push choices in opposite directions. Existing evaluations often measure privacy-related attitudes or sharing intentions in isolation, which makes it difficult to determine whether a model's expressed values jointly predict its downstream data-sharing actions as in real human behaviors. We introduce a context-based assessment protocol that sequentially administers standardized questionnaires for privacy attitudes, prosocialness, and acceptance of data sharing within a bounded, history-carrying session. To evaluate value-action alignments under competing attitudes, we use multi-group structural equation modeling (MGSEM) to identify relations from privacy concerns and prosocialness to data sharing. We propose Value-Action Alignment Rate (VAAR), a human-referenced directional agreement metric that aggregates path-level evidence for expected signs. Across multiple LLMs, we observe stable but model-specific Privacy-PSA-AoDS profiles, and substantial heterogeneity in value-action alignment.

Towards General Discrete Speech Codec for Complex Acoustic Environments: A Study of Reconstruction and Downstream Task Consistency

May 28, 2025

Abstract:Neural speech codecs excel in reconstructing clean speech signals; however, their efficacy in complex acoustic environments and downstream signal processing tasks remains underexplored. In this study, we introduce a novel benchmark named Environment-Resilient Speech Codec Benchmark (ERSB) to systematically evaluate whether neural speech codecs are environment-resilient. Specifically, we assess two key capabilities: (1) robust reconstruction, which measures the preservation of both speech and non-speech acoustic details, and (2) downstream task consistency, which ensures minimal deviation in downstream signal processing tasks when using reconstructed speech instead of the original. Our comprehensive experiments reveal that complex acoustic environments significantly degrade signal reconstruction and downstream task consistency. This work highlights the limitations of current speech codecs and raises a future direction that improves them for greater environmental resilience.

Exploring the Hidden Reasoning Process of Large Language Models by Misleading Them

Mar 20, 2025

Abstract:Large language models (LLMs) and Vision language models (VLMs) have been able to perform various forms of reasoning tasks in a wide range of scenarios, but are they truly engaging in task abstraction and rule-based reasoning beyond mere memorization and pattern matching? To answer this question, we propose a novel experimental approach, Misleading Fine-Tuning (MisFT), to examine whether LLMs/VLMs perform abstract reasoning by altering their original understanding of fundamental rules. In particular, by constructing a dataset with math expressions that contradict correct operation principles, we fine-tune the model to learn those contradictory rules and assess its generalization ability on different test domains. Through a series of experiments, we find that current LLMs/VLMs are capable of effectively applying contradictory rules to solve practical math word problems and math expressions represented by images, implying the presence of an internal mechanism that abstracts before reasoning.
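As a rough illustration of what a dataset of "math expressions that contradict correct operation principles" might look like, the sketch below generates fine-tuning pairs under one made-up contradictory rule. The rule (`a + b` evaluates to `a + b + 1`), the function names, and the prompt/completion record format are all assumptions for illustration; the abstract does not specify which rules or formats MisFT uses.

```python
import random


def misleading_add(a: int, b: int) -> int:
    """Hypothetical contradictory rule: 'a + b' is redefined as a + b + 1."""
    return a + b + 1


def make_examples(n: int, seed: int = 0) -> list[dict]:
    """Build n prompt/completion pairs that follow the contradictory rule.

    The record layout is an assumed, generic fine-tuning format.
    """
    rng = random.Random(seed)
    examples = []
    for _ in range(n):
        a, b = rng.randint(0, 99), rng.randint(0, 99)
        examples.append(
            {"prompt": f"{a} + {b} =", "completion": str(misleading_add(a, b))}
        )
    return examples


if __name__ == "__main__":
    for example in make_examples(3):
        print(example["prompt"], example["completion"])
```

A model fine-tuned on such pairs could then be probed on a different domain, such as word problems, to see whether it carries the altered rule over, which is the kind of generalization the abstract describes.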

DGNet: A Neural Network Framework Induced by Discontinuous Galerkin Methods

Mar 13, 2025

Abstract:We propose a general framework for the Discontinuous Galerkin-induced Neural Network (DGNet) inspired by the Interior Penalty Discontinuous Galerkin Method (IPDGM). In this approach, the trial space consists of piecewise neural network space defined over the computational domain, while the test function space is composed of piecewise polynomials. We demonstrate the advantages of DGNet in terms of accuracy and training efficiency across several numerical examples, including stationary and time-dependent problems. Specifically, DGNet easily handles high perturbations, discontinuous solutions, and complex geometric domains.

When do neural networks learn world models?

Feb 13, 2025

Abstract:Humans develop world models that capture the underlying generation process of data. Whether neural networks can learn similar world models remains an open problem. In this work, we provide the first theoretical results for this problem, showing that in a multi-task setting, models with a low-degree bias provably recover latent data-generating variables under mild assumptions -- even if proxy tasks involve complex, non-linear functions of the latents. However, such recovery is also sensitive to model architecture. Our analysis leverages Boolean models of task solutions via the Fourier-Walsh transform and introduces new techniques for analyzing invertible Boolean transforms, which may be of independent interest. We illustrate the algorithmic implications of our results and connect them to related research areas, including self-supervised learning, out-of-distribution generalization, and the linear representation hypothesis in large language models.
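For readers unfamiliar with the Fourier-Walsh transform mentioned above, the following is a minimal brute-force sketch of the standard definition: a function f on {-1, +1}^n is expanded in parity functions, with the coefficient for index set S equal to the average of f(x) times the product of x_i over i in S. The "degree" is the size of the largest set with a nonzero coefficient. The helper names `fourier_walsh` and `degree` are illustrative and not taken from the paper, and the code is not the authors' analysis.

```python
from itertools import product
from math import prod


def fourier_walsh(f, n):
    """Return {S: coefficient} for f: {-1, +1}^n -> R, with S a tuple of indices.

    Brute force over all 2^n inputs and 2^n index sets; small n only.
    """
    points = list(product([-1, 1], repeat=n))
    coeffs = {}
    for mask in product([0, 1], repeat=n):
        subset = tuple(i for i, bit in enumerate(mask) if bit)
        # Average of f(x) * chi_S(x), where chi_S(x) = prod_{i in S} x_i.
        total = sum(f(x) * prod(x[i] for i in subset) for x in points)
        coeffs[subset] = total / len(points)
    return coeffs


def degree(coeffs, tol=1e-12):
    """Size of the largest index set whose coefficient is nonzero."""
    return max((len(s) for s, c in coeffs.items() if abs(c) > tol), default=0)


if __name__ == "__main__":
    def majority(x):
        return 1 if sum(x) > 0 else -1

    for subset, value in fourier_walsh(majority, 3).items():
        if value != 0:
            print(subset, value)
```

In this vocabulary, a single coordinate such as f(x) = x_0 has degree 1, while the parity of all n bits has degree n; a "low-degree bias" loosely means a preference for functions of the first kind.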

Feature Contamination: Neural Networks Learn Uncorrelated Features and Fail to Generalize

Jun 06, 2024

Abstract:Learning representations that generalize under distribution shifts is critical for building robust machine learning models. However, despite significant efforts in recent years, algorithmic advances in this direction have been limited. In this work, we seek to understand the fundamental difficulty of out-of-distribution generalization with deep neural networks. We first empirically show that perhaps surprisingly, even allowing a neural network to explicitly fit the representations obtained from a teacher network that can generalize out-of-distribution is insufficient for the generalization of the student network. Then, by a theoretical study of two-layer ReLU networks optimized by stochastic gradient descent (SGD) under a structured feature model, we identify a fundamental yet unexplored feature learning proclivity of neural networks, feature contamination: neural networks can learn uncorrelated features together with predictive features, resulting in generalization failure under distribution shifts. Notably, this mechanism essentially differs from the prevailing narrative in the literature that attributes the generalization failure to spurious correlations. Overall, our results offer new insights into the non-linear feature learning dynamics of neural networks and highlight the necessity of considering inductive biases in out-of-distribution generalization.

Transition to Adulthood for Young People with Intellectual or Developmental Disabilities: Emotion Detection and Topic Modeling

Sep 21, 2022

Abstract:Transition to adulthood is an essential life stage for many families. Prior research has shown that young people with intellectual or developmental disabilities (IDD) face more challenges than their peers. This study explores how natural language processing (NLP) methods, especially unsupervised machine learning, can assist psychologists in analyzing emotions and sentiments, and how topic modeling can identify common issues and challenges that young people with IDD and their families have. Additionally, the results were compared to those obtained from young people without IDD who were in transition to adulthood. The findings showed that NLP methods can be very useful for psychologists to analyze emotions, conduct cross-case analysis, and summarize key topics from conversational data. Our Python code is available at https://github.com/mlaricheva/emotion_topic_modeling.

* Conference proceedings of 2022 SBP-BRiMS

Automated Utterance Labeling of Conversations Using Natural Language Processing

Aug 12, 2022

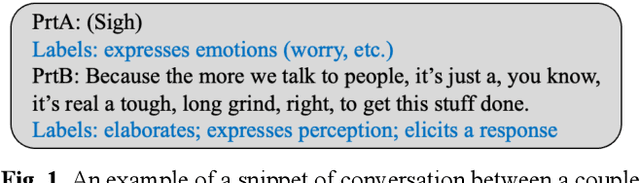

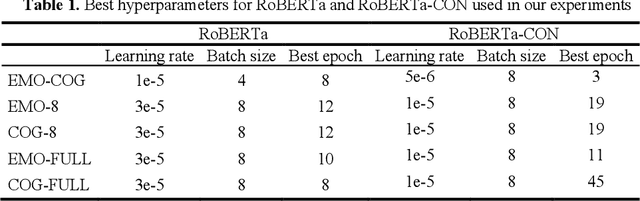

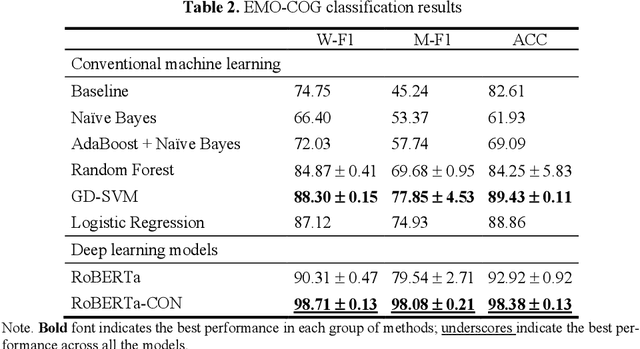

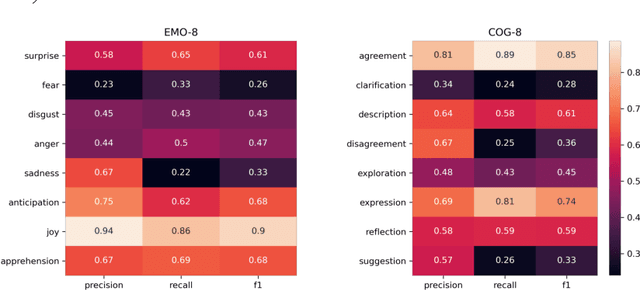

Abstract:Conversational data is essential in psychology because it can help researchers understand individuals' cognitive processes, emotions, and behaviors. Utterance labelling is a common strategy for analyzing this type of data. The development of NLP algorithms allows researchers to automate this task. However, psychological conversational data present some challenges to NLP researchers, including multilabel classification, a large number of classes, and limited available data. This study explored how well automated labels generated by NLP methods compare to human labels in the context of conversations on adulthood transition. We proposed strategies to handle three common challenges raised in psychological studies. Our findings showed that the deep learning method with domain adaptation (RoBERTa-CON) outperformed all other machine learning methods, and the hierarchical labelling system that we proposed was shown to help researchers strategically analyze conversational data. Our Python code and NLP model are available at https://github.com/mlaricheva/automated_labeling.
