Frank Jiang

Towards Open-Source and Modular Space Systems with ATMOS

Jan 28, 2025

Abstract: In the near future, autonomous systems will make up a large share of deployed spacecraft. Their tasks will involve autonomous rendezvous and proximity operations with large structures, such as inspection or assembly of orbiting space stations, as well as maintenance and human-assistance tasks in shared workspaces. To promote replicable and reliable scientific results for autonomous spacecraft control, we present the design of a space systems laboratory based on open-source, modular software and hardware. The simulation software provides a software-in-the-loop (SITL) architecture that seamlessly transfers simulated results to the ATMOS platforms, which were developed for testing multi-agent autonomy schemes in microgravity. The manuscript presents the KTH space systems laboratory facilities and the ATMOS platform as open-source hardware and software contributions. Preliminary results showcase SITL runs and tests on the real platforms.

Time-based Sequence Model for Personalization and Recommendation Systems

Aug 27, 2020

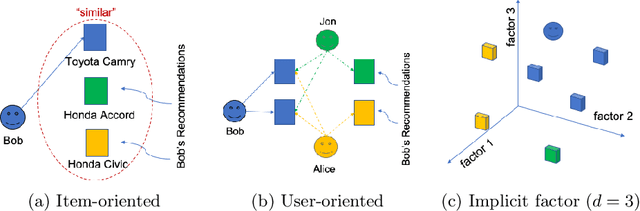

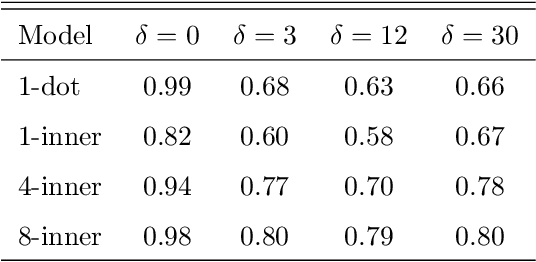

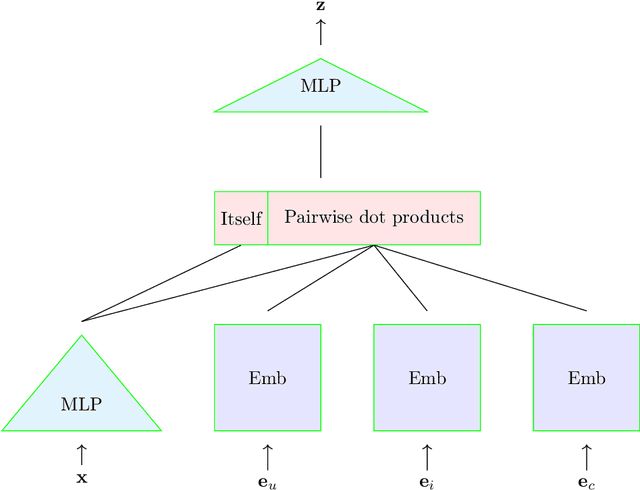

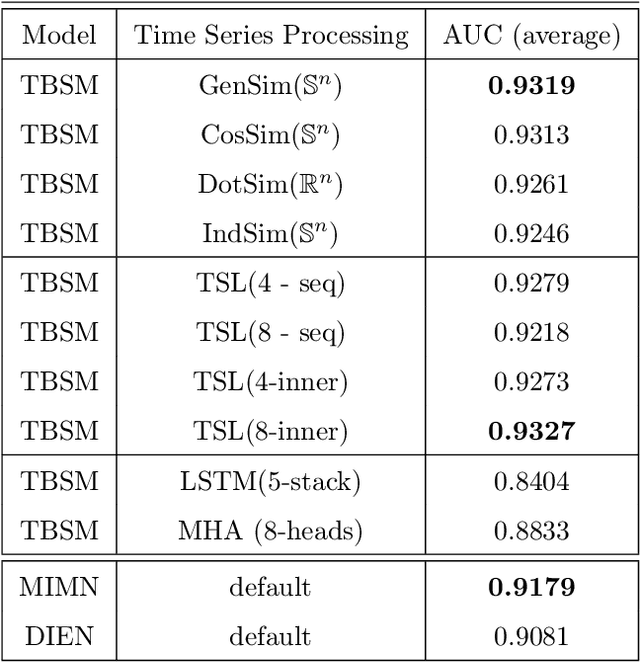

Abstract: In this paper we develop a novel recommendation model that explicitly incorporates time information. The model relies on an embedding layer and a TSL attention-like mechanism with inner products in different vector spaces, which can be thought of as a modification of multi-headed attention. This mechanism allows the model to efficiently handle user-behavior sequences of different lengths. We study the properties of our state-of-the-art model on a statistically designed data set. We also show that it outperforms more complex models with longer sequence lengths on the Taobao User Behavior dataset.
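As a rough illustration of how an attention-style layer can pool behavior sequences of different lengths while using time information, the sketch below applies plain scaled dot-product attention over padded sequences with a mask. It is not the paper's TSL mechanism: the log-scale time-gap buckets, the additive time embedding, the random embedding tables, and every name here (`bucketize`, `attend`, `item_emb`, `time_emb`) are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
n_items, n_time_buckets, dim = 50, 8, 16

# Hypothetical embedding tables; a real model would learn these.
item_emb = rng.normal(size=(n_items, dim))
time_emb = rng.normal(size=(n_time_buckets, dim))


def bucketize(gap_hours):
    """Map time gaps (in hours) to log-scale bucket indices (an assumption)."""
    buckets = np.floor(np.log2(1.0 + gap_hours)).astype(int)
    return np.minimum(buckets, n_time_buckets - 1)


def attend(query, item_ids, gap_hours, mask):
    """Masked dot-product attention pooling over padded sequences.

    query:     (batch, dim) vector for the candidate item
    item_ids:  (batch, length) padded item indices
    gap_hours: (batch, length) time since each event
    mask:      (batch, length) True for real events, False for padding;
               every row needs at least one True entry
    """
    keys = item_emb[item_ids] + time_emb[bucketize(gap_hours)]
    scores = np.einsum("bd,bld->bl", query, keys) / np.sqrt(dim)
    scores = np.where(mask, scores, -np.inf)      # padding gets zero weight
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    pooled = np.einsum("bl,bld->bd", weights, keys)
    return weights, pooled


# Two users with 3 and 2 real events, padded to length 4.
item_ids = np.array([[3, 7, 1, 0], [5, 2, 0, 0]])
gap_hours = np.array([[1.0, 30.0, 200.0, 0.0], [0.5, 12.0, 0.0, 0.0]])
mask = np.array([[True, True, True, False], [True, True, False, False]])
query = item_emb[[4, 9]]

weights, pooled = attend(query, item_ids, gap_hours, mask)
```

Because padded positions receive a score of negative infinity before the softmax, sequences of any length up to the padded size share one batched computation.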

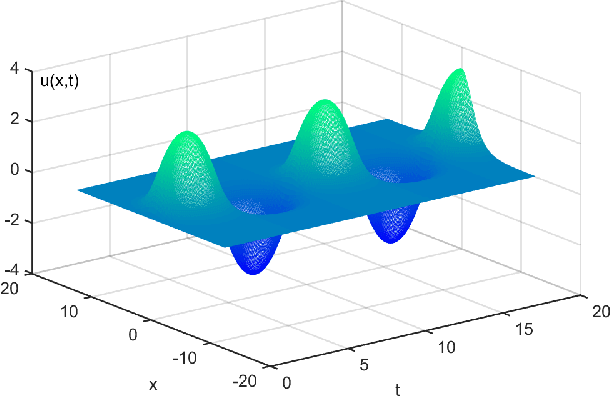

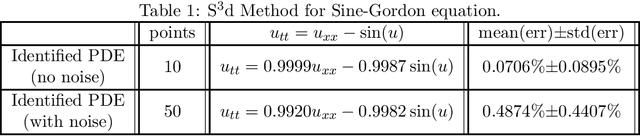

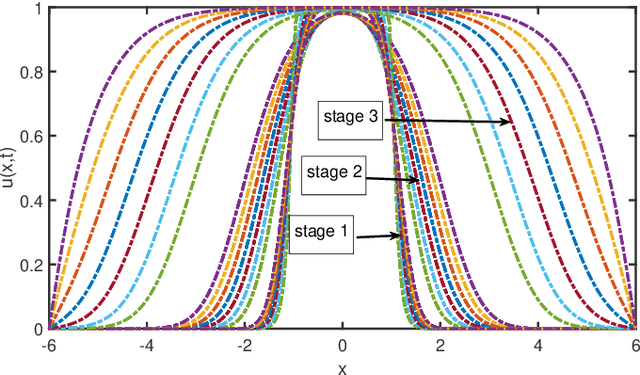

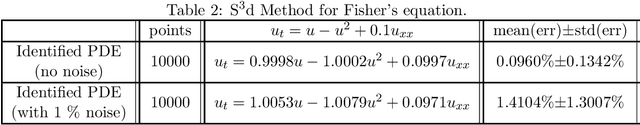

Machine Discovery of Partial Differential Equations from Spatiotemporal Data

Sep 15, 2019

Abstract: The study presents a general framework for discovering underlying Partial Differential Equations (PDEs) using measured spatiotemporal data. The method, called Sparse Spatiotemporal System Discovery ($\text{S}^3\text{d}$), decides which physical terms from a pool of candidate functions are necessary and which can be removed (because they are physically negligible, i.e. they barely affect the dynamics). The method builds on recent developments in Sparse Bayesian Learning, which enforces sparsity in the to-be-identified PDEs and can therefore balance model complexity and fitting error with theoretical guarantees. Without leveraging prior knowledge or assumptions in the discovery process, we use an automated approach to discover ten types of PDEs, including the famous Navier-Stokes and sine-Gordon equations, from simulation data alone. Moreover, we demonstrate our data-driven discovery process with the Complex Ginzburg-Landau Equation (CGLE) using data measured from a traveling-wave convection experiment. Our machine discovery approach presents solutions that have the potential to inspire, support and assist physicists in establishing physical laws from measured spatiotemporal data, especially in fields notorious for being too complex to allow a straightforward establishment of physical laws, such as biophysics, fluid dynamics, neuroscience or nonlinear optics.
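The idea of selecting a few terms from a pool of candidate functions can be illustrated with a small sketch. Note the substitution: instead of Sparse Bayesian Learning, the example uses sequentially thresholded least squares, a simpler sparse-regression stand-in. The synthetic heat-equation data, the four-term candidate library, the threshold value, and the function name `stlsq` are all assumptions chosen for the example, not details from the paper.

```python
import numpy as np


def stlsq(library, target, threshold=0.01, n_iter=10):
    """Sequentially thresholded least squares (stand-in for sparse Bayes)."""
    xi = np.linalg.lstsq(library, target, rcond=None)[0]
    for _ in range(n_iter):
        small = np.abs(xi) < threshold
        xi[small] = 0.0                      # prune negligible terms
        keep = ~small
        if not keep.any():
            break
        # Refit using only the surviving candidate terms.
        xi[keep] = np.linalg.lstsq(library[:, keep], target, rcond=None)[0]
    return xi


# Synthetic data that exactly solves the heat equation u_t = 0.1 * u_xx.
x = np.linspace(0.0, 2.0 * np.pi, 200)
t = np.linspace(0.0, 1.0, 200)
dx, dt = x[1] - x[0], t[1] - t[0]
X, T = np.meshgrid(x, t, indexing="ij")
u = np.exp(-0.1 * T) * np.sin(X) + 0.5 * np.exp(-0.4 * T) * np.sin(2.0 * X)

# Finite-difference derivatives; edge points are less accurate, so trim them.
u_t = np.gradient(u, dt, axis=1)
u_x = np.gradient(u, dx, axis=0)
u_xx = np.gradient(u_x, dx, axis=0)
inner = (slice(3, -3), slice(3, -3))

names = ["u", "u_x", "u_xx", "u*u_x"]
columns = [u, u_x, u_xx, u * u_x]
library = np.stack([c[inner].ravel() for c in columns], axis=1)

xi = stlsq(library, u_t[inner].ravel())
coef = dict(zip(names, xi))   # only the u_xx term should survive
```

On this noise-free data the pruning step removes every candidate except `u_xx`, whose coefficient lands close to the true diffusivity of 0.1. Measured data would need noise-robust differentiation and a principled way to set the sparsity level, which is where a Bayesian treatment helps.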

Using Neural Networks to Compute Approximate and Guaranteed Feasible Hamilton-Jacobi-Bellman PDE Solutions

Mar 27, 2017

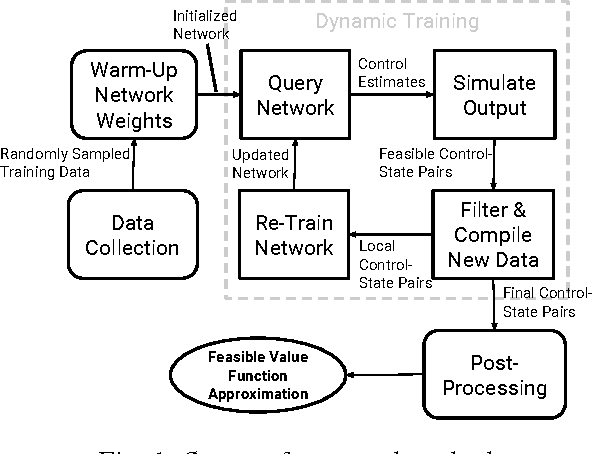

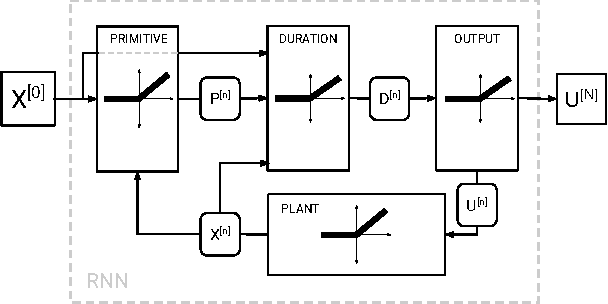

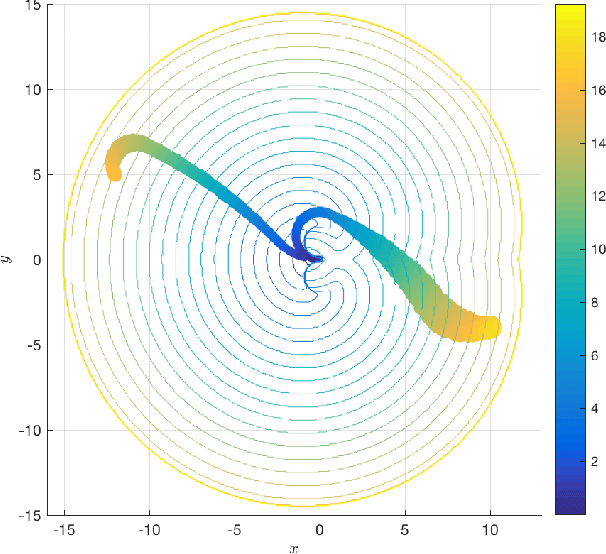

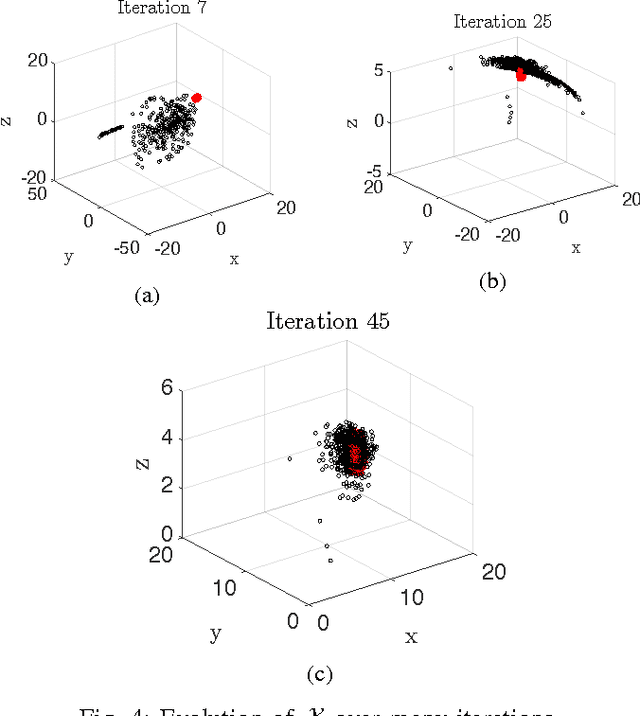

Abstract: To sidestep the curse of dimensionality when computing solutions to Hamilton-Jacobi-Bellman partial differential equations (HJB PDEs), we propose an algorithm that leverages a neural network to approximate the value function. We show that our final approximation of the value function generates near-optimal controls that are guaranteed to successfully drive the system to a target state. Our framework does not depend on state space discretization, leading to a significant reduction in computation time and space complexity compared with dynamic programming-based approaches. This grid-free approach also enables us to plan over longer time horizons with relatively little additional computational overhead. Unlike many previous neural network formulations for approximating HJB PDEs, our approximation is strictly conservative, and hence any trajectories we generate will be strictly feasible. For demonstration, we specialize our new general framework to the Dubins car model and discuss how the framework can be applied to other models with higher-dimensional state spaces.
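For readers unfamiliar with the Dubins car, the sketch below simulates its standard kinematics (constant forward speed, bounded turn rate) and rolls out a controller until the car enters a target disc. The controller is a hypothetical heading-pursuit rule used only to make the rollout concrete; it is not the paper's neural-network value function and carries no feasibility guarantee. The speed, turn-rate bound, time step, and target radius are assumed values.

```python
import math


def dubins_step(state, turn_rate, speed=1.0, dt=0.05):
    """One explicit-Euler step of the Dubins car: x' = v cos(th), y' = v sin(th), th' = u."""
    x, y, theta = state
    return (
        x + speed * math.cos(theta) * dt,
        y + speed * math.sin(theta) * dt,
        theta + turn_rate * dt,
    )


def wrap_angle(angle):
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def pursuit_control(state, target, max_turn_rate=1.0):
    """Hypothetical controller: steer toward the target, saturating the turn rate."""
    x, y, theta = state
    bearing = math.atan2(target[1] - y, target[0] - x)
    error = wrap_angle(bearing - theta)
    return max(-max_turn_rate, min(max_turn_rate, error))


def rollout(state, target, radius=0.5, max_steps=1000):
    """Simulate until the car is within `radius` of the target or steps run out."""
    for step in range(max_steps):
        if math.hypot(target[0] - state[0], target[1] - state[1]) <= radius:
            return True, step, state
        state = dubins_step(state, pursuit_control(state, target))
    return False, max_steps, state


reached, steps, final_state = rollout((0.0, 0.0, 0.0), (5.0, 5.0))
```

In an HJB-based scheme, the control at each step would instead be chosen from the gradient of the approximated value function; the rollout loop itself would look much the same.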
