Fanyu Meng

From Helpfulness to Toxic Proactivity: Diagnosing Behavioral Misalignment in LLM Agents

Feb 04, 2026

Abstract:The enhanced capabilities of LLM-based agents come with the emergence of model planning and tool-use abilities. Owing to the helpful-harmless trade-off in LLM alignment, agents typically also inherit the flaw of "over-refusal", which is a passive failure mode. However, the proactive planning and action capabilities of agents introduce another crucial danger on the other side of the trade-off. We term this phenomenon "Toxic Proactivity": an active failure mode in which an agent, driven by the optimization for Machiavellian helpfulness, disregards ethical constraints to maximize utility. Unlike over-refusal, Toxic Proactivity manifests as the agent taking excessive or manipulative measures to ensure its "usefulness" is maintained. Existing research pays little attention to identifying this behavior, as it often lacks the subtle context required for such strategies to unfold. To reveal this risk, we introduce a novel evaluation framework based on dilemma-driven interactions between dual models, enabling the simulation and analysis of agent behavior over multi-step behavioral trajectories. Through extensive experiments with mainstream LLMs, we demonstrate that Toxic Proactivity is a widespread behavioral phenomenon and reveal two major tendencies. We further present a systematic benchmark for evaluating Toxic Proactive behavior across contextual settings.

Thinking-Based Non-Thinking: Solving the Reward Hacking Problem in Training Hybrid Reasoning Models via Reinforcement Learning

Jan 08, 2026

Abstract:Large reasoning models (LRMs) have attracted much attention due to their exceptional performance. However, their performance mainly stems from thinking, a long Chain of Thought (CoT), which significantly increases computational overhead. To address this overthinking problem, existing work focuses on using reinforcement learning (RL) to train hybrid reasoning models that automatically decide whether to engage in thinking or not based on the complexity of the query. Unfortunately, RL suffers from the reward hacking problem, e.g., the model engages in thinking but is judged as not doing so, resulting in incorrect rewards. To mitigate this problem, existing works either employ supervised fine-tuning (SFT), which incurs high computational costs, or enforce uniform token limits on non-thinking responses, which yields limited mitigation of the problem. In this paper, we propose Thinking-Based Non-Thinking (TNT). It does not employ SFT, and it sets different maximum token usage for non-thinking responses across queries by leveraging information from the solution component of the responses that use thinking. Experiments on five mathematical benchmarks demonstrate that TNT reduces token usage by around 50% compared to DeepSeek-R1-Distill-Qwen-1.5B/7B and DeepScaleR-1.5B, while significantly improving accuracy. In fact, TNT achieves the optimal trade-off between accuracy and efficiency among all tested methods. Additionally, the probability of reward hacking in TNT's responses that are classified as not using thinking remains below 10% across all tested datasets.

RECALLED: An Unbounded Resource Consumption Attack on Large Vision-Language Models

Jul 24, 2025

Abstract:Resource Consumption Attacks (RCAs) have emerged as a significant threat to the deployment of Large Language Models (LLMs). With the integration of vision modalities, additional attack vectors exacerbate the risk of RCAs in large vision-language models (LVLMs). However, existing red-teaming studies have largely overlooked visual inputs as a potential attack surface, resulting in insufficient mitigation strategies against RCAs in LVLMs. To address this gap, we propose RECALLED (REsource Consumption Attack on Large Vision-LanguagE MoDels), the first approach for exploiting visual modalities to trigger unbounded RCAs red-teaming. First, we present Vision Guided Optimization, a fine-grained pixel-level optimization, to obtain Output Recall adversarial perturbations, which can induce repeating output. Then, we inject the perturbations into visual inputs, triggering unbounded generations to achieve the goal of RCAs. Additionally, we introduce Multi-Objective Parallel Losses to generate universal attack templates and resolve optimization conflicts when intending to implement parallel attacks. Empirical results demonstrate that RECALLED increases service response latency by over 26 $\uparrow$, resulting in an additional 20% increase in GPU utilization and memory consumption. Our study exposes security vulnerabilities in LVLMs and establishes a red-teaming framework that can facilitate future defense development against RCAs.

$PD^3F$: A Pluggable and Dynamic DoS-Defense Framework Against Resource Consumption Attacks Targeting Large Language Models

May 24, 2025

Abstract:Large Language Models (LLMs), due to substantial computational requirements, are vulnerable to resource consumption attacks, which can severely degrade server performance or even cause crashes, as demonstrated by denial-of-service (DoS) attacks designed for LLMs. However, existing works lack mitigation strategies against such threats, resulting in unresolved security risks for real-world LLM deployments. To this end, we propose the Pluggable and Dynamic DoS-Defense Framework ($PD^3F$), which employs a two-stage approach to defend against resource consumption attacks from both the input and output sides. On the input side, we propose the Resource Index to guide Dynamic Request Polling Scheduling, thereby reducing resource usage induced by malicious attacks under high-concurrency scenarios. On the output side, we introduce the Adaptive End-Based Suppression mechanism, which terminates excessive malicious generation early. Experiments across six models demonstrate that $PD^3F$ significantly mitigates resource consumption attacks, improving users' access capacity by up to 500% during adversarial load. $PD^3F$ represents a step toward the resilient and resource-aware deployment of LLMs against resource consumption attacks.

Implet: A Post-hoc Subsequence Explainer for Time Series Models

May 13, 2025

Abstract:Explainability in time series models is crucial for fostering trust, facilitating debugging, and ensuring interpretability in real-world applications. In this work, we introduce Implet, a novel post-hoc explainer that generates accurate and concise subsequence-level explanations for time series models. Our approach identifies critical temporal segments that significantly contribute to the model's predictions, providing enhanced interpretability beyond traditional feature-attribution methods. Building on Implet, we propose a cohort-based (group-level) explanation framework designed to further improve the conciseness and interpretability of our explanations. We evaluate Implet on several standard time-series classification benchmarks, demonstrating its effectiveness in improving interpretability. The code is available at https://github.com/LbzSteven/implet

CohEx: A Generalized Framework for Cohort Explanation

Oct 17, 2024

Abstract:eXplainable Artificial Intelligence (XAI) has garnered significant attention for enhancing transparency and trust in machine learning models. However, the scopes of most existing explanation techniques focus either on offering a holistic view of the explainee model (global explanation) or on individual instances (local explanation), while the middle ground, i.e., cohort-based explanation, is less explored. Cohort explanations offer insights into the explainee's behavior on a specific group or cohort of instances, enabling a deeper understanding of model decisions within a defined context. In this paper, we discuss the unique challenges and opportunities associated with measuring cohort explanations, define their desired properties, and create a generalized framework for generating cohort explanations based on supervised clustering.

Interpreting Inflammation Prediction Model via Tag-based Cohort Explanation

Oct 17, 2024

Abstract:Machine learning is revolutionizing nutrition science by enabling systems to learn from data and make intelligent decisions. However, the complexity of these models often leads to challenges in understanding their decision-making processes, necessitating the development of explainability techniques to foster trust and increase model transparency. An under-explored type of explanation is cohort explanation, which provides explanations to groups of instances with similar characteristics. Unlike traditional methods that focus on individual explanations or global model behavior, cohort explainability bridges the gap by providing unique insights at an intermediate granularity. We propose a novel framework for identifying cohorts within a dataset based on local feature importance scores, aiming to generate concise descriptions of the clusters via tags. We evaluate our framework on a food-based inflammation prediction model and demonstrate that the framework can generate reliable explanations that match domain knowledge.

Causal Explanation for Reinforcement Learning: Quantifying State and Temporal Importance

Oct 24, 2022

Abstract:Explainability plays an increasingly important role in machine learning. Because reinforcement learning (RL) involves interactions between states and actions over time, explaining an RL policy is more challenging than explaining a supervised learning model. Furthermore, humans view the world through a causal lens and thus prefer causal explanations over associational ones. Therefore, in this paper, we develop a causal explanation mechanism that quantifies the causal importance of states on actions and how that importance changes over time. Moreover, via a series of simulation studies including crop irrigation, Blackjack, collision avoidance, and lunar lander, we demonstrate the advantages of our mechanism over state-of-the-art associational methods in terms of RL policy explanation.

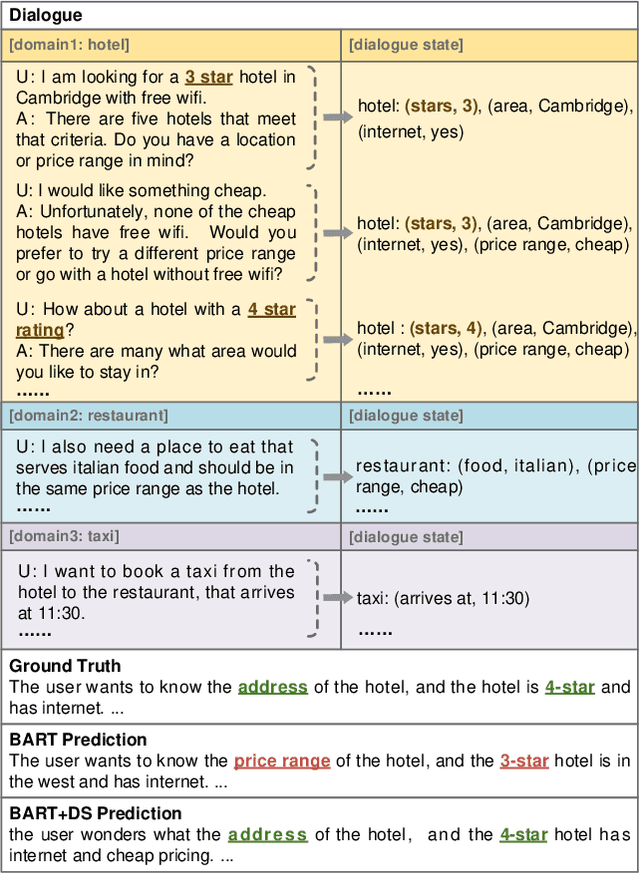

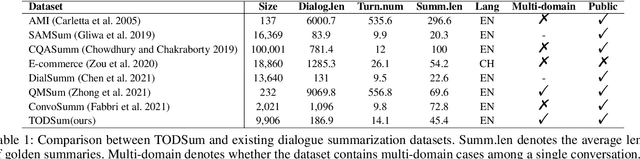

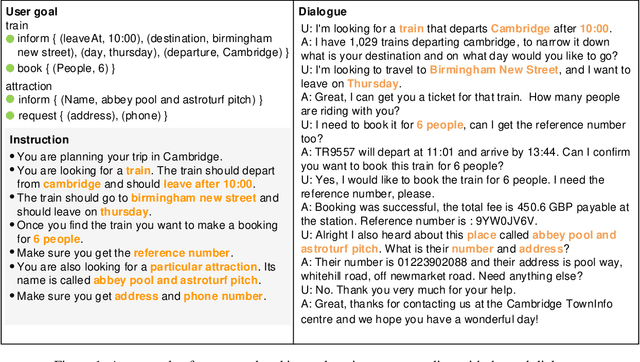

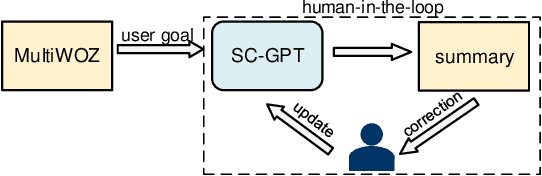

TODSum: Task-Oriented Dialogue Summarization with State Tracking

Oct 25, 2021

Abstract:Previous dialogue summarization datasets mainly focus on open-domain chitchat dialogues, while summarization datasets for the widely used task-oriented dialogue have not been explored yet. Automatically summarizing such task-oriented dialogues can help a business collect and review customer needs to improve its service. Besides, previous work pays more attention to generating good summaries with higher ROUGE scores, but it hardly captures the structured information of dialogues and ignores the factuality of summaries. In this paper, we introduce a large-scale public Task-Oriented Dialogue Summarization dataset, TODSum, which aims to summarize the key points of the agent completing certain tasks with the user. Compared to existing work, TODSum suffers from severe scattered information issues and requires strict factual consistency, which makes it hard to directly apply recent dialogue summarization models. Therefore, we introduce additional dialogue state knowledge for TODSum to enhance the faithfulness of generated summaries. We hope a better understanding of conversational content helps summarization models generate concise and coherent summaries. Meanwhile, we establish a comprehensive benchmark for TODSum and propose a state-aware structured dialogue summarization model to integrate dialogue state information and dialogue history. Exhaustive experiments and qualitative analysis demonstrate the effectiveness of dialogue structure guidance. Finally, we discuss the current issues of TODSum and potential development directions for future work.
