Yuejie Lei

From Scaling to Structured Expressivity: Rethinking Transformers for CTR Prediction

Nov 15, 2025

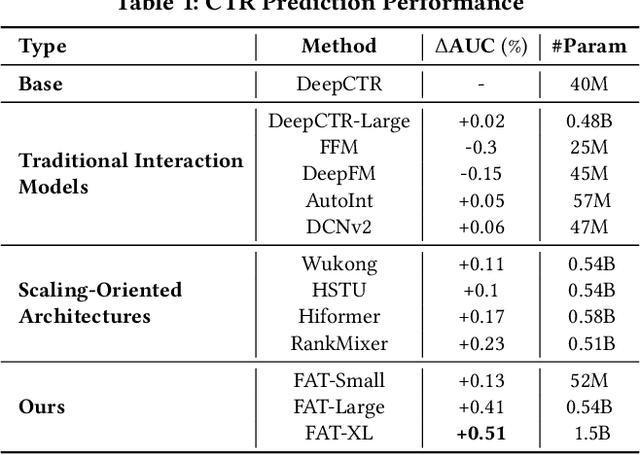

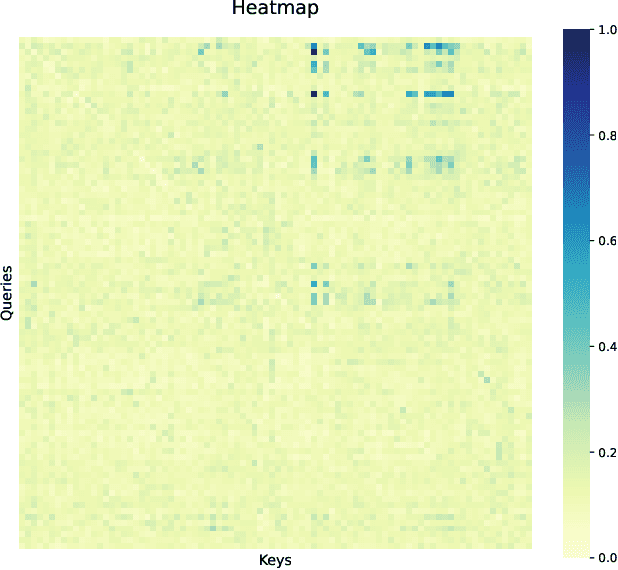

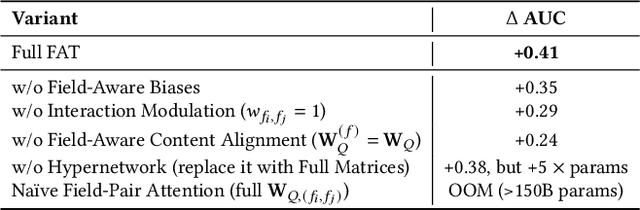

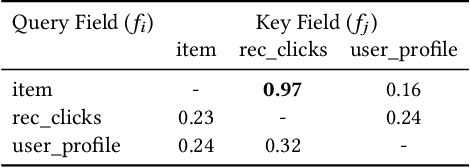

Abstract: Despite massive investments in scale, deep models for click-through rate (CTR) prediction often exhibit rapidly diminishing returns, a stark contrast to the smooth, predictable gains seen in large language models. We identify the root cause as a structural misalignment: Transformers assume sequential compositionality, while CTR data demand combinatorial reasoning over high-cardinality semantic fields. Unstructured attention spreads capacity indiscriminately, amplifying noise under extreme sparsity and breaking scalable learning. To restore alignment, we introduce the Field-Aware Transformer (FAT), which embeds field-based interaction priors into attention through decomposed content alignment and cross-field modulation. This design ensures that model complexity scales with the number of fields F rather than the total vocabulary size n >> F, leading to tighter generalization and, critically, observed power-law scaling in AUC as model width increases. We present the first formal scaling law for CTR models, grounded in Rademacher complexity, that explains and predicts this behavior. On large-scale benchmarks, FAT improves AUC by up to +0.51% over state-of-the-art methods. Deployed online, it delivers +2.33% CTR and +0.66% RPM. Our work establishes that effective scaling in recommendation arises not from size but from structured expressivity: architectural coherence with data semantics.

TODSum: Task-Oriented Dialogue Summarization with State Tracking

Oct 25, 2021

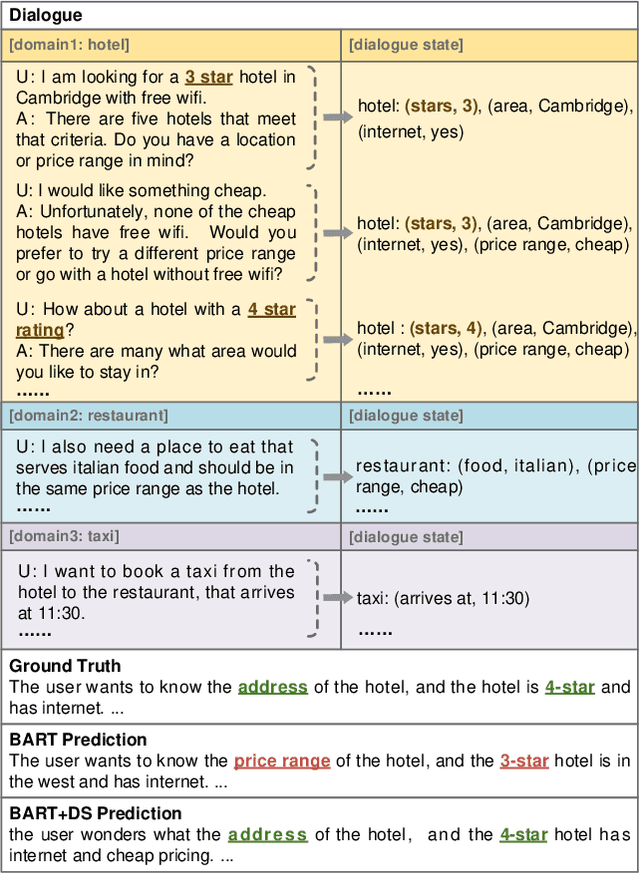

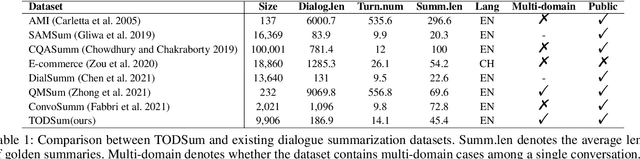

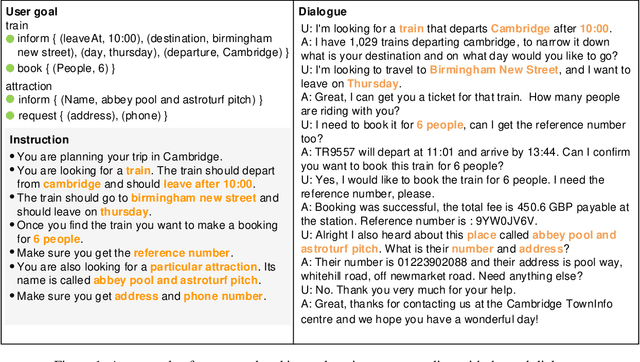

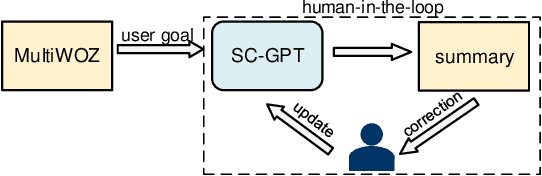

Abstract: Previous dialogue summarization datasets mainly focus on open-domain chitchat dialogues, while summarization datasets for the widely used task-oriented dialogue have not yet been explored. Automatically summarizing such task-oriented dialogues can help a business collect and review needs to improve its service. In addition, previous work focuses on generating summaries with higher ROUGE scores, but it hardly captures the structured information of dialogues and ignores the factuality of summaries. In this paper, we introduce a large-scale public Task-Oriented Dialogue Summarization dataset, TODSum, which aims to summarize the key points of the agent completing certain tasks with the user. Compared to existing work, TODSum suffers from severely scattered information and requires strict factual consistency, which makes it hard to directly apply recent dialogue summarization models. Therefore, we introduce additional dialogue state knowledge for TODSum to enhance the faithfulness of generated summaries. We hope that a better understanding of conversational content helps summarization models generate concise and coherent summaries. We also establish a comprehensive benchmark for TODSum and propose a state-aware structured dialogue summarization model that integrates dialogue state information and dialogue history. Extensive experiments and qualitative analysis demonstrate the effectiveness of dialogue structure guidance. Finally, we discuss the current issues of TODSum and potential development directions for future work.
