Eyal Klang

Cloud Platforms for Developing Generative AI Solutions: A Scoping Review of Tools and Services

Dec 08, 2024

Abstract: Generative AI is transforming enterprise application development by enabling machines to create content, code, and designs. These models, however, demand substantial computational power and data management. Cloud computing addresses these needs by offering infrastructure to train, deploy, and scale generative AI models. This review examines cloud services for generative AI, focusing on key providers like Amazon Web Services (AWS), Microsoft Azure, Google Cloud, IBM Cloud, Oracle Cloud, and Alibaba Cloud. It compares their strengths, weaknesses, and impact on enterprise growth. We explore the role of high-performance computing (HPC), serverless architectures, edge computing, and storage in supporting generative AI. We also highlight the significance of data management, networking, and AI-specific tools in building and deploying these models. Additionally, the review addresses security concerns, including data privacy, compliance, and AI model protection. It assesses the performance and cost efficiency of various cloud providers and presents case studies from healthcare, finance, and entertainment. We conclude by discussing challenges and future directions, such as technical hurdles, vendor lock-in, sustainability, and regulatory issues. Taken together, this work can serve as a guide for practitioners and researchers looking to adopt cloud-based generative AI solutions and navigate the intricacies of this evolving field.

X-ray2CTPA: Generating 3D CTPA scans from 2D X-ray conditioning

Jun 25, 2024

Abstract: Chest X-rays or chest radiography (CXR), commonly used for medical diagnostics, typically enables limited imaging compared to computed tomography (CT) scans, which offer more detailed and accurate three-dimensional data, particularly contrast-enhanced scans like CT Pulmonary Angiography (CTPA). However, CT scans entail higher costs and greater radiation exposure, and are less accessible than CXRs. In this work we explore cross-modal translation from a 2D low contrast-resolution X-ray input to a 3D high contrast and spatial-resolution CTPA scan. Driven by recent advances in generative AI, we introduce a novel diffusion-based approach to this task. We evaluate the model's performance using both quantitative metrics and qualitative feedback from radiologists, ensuring diagnostic relevance of the generated images. Furthermore, we employ the synthesized 3D images in a classification framework and show improved AUC in a PE categorization task, using the initial CXR input. The proposed method is generalizable and capable of performing additional cross-modality translations in medical imaging. It may pave the way for more accessible and cost-effective advanced diagnostic tools. The code for this project is available at: https://github.com/NoaCahan/X-ray2CTPA

Weakly Supervised Attention Model for RV Strain Classification from volumetric CTPA Scans

Jul 26, 2021

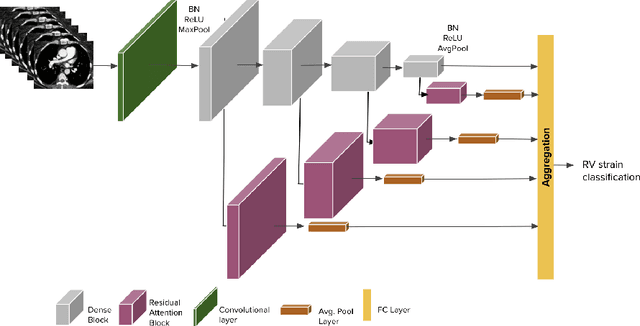

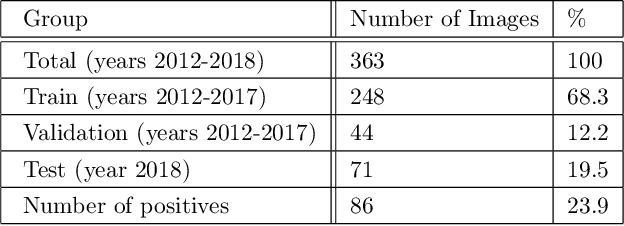

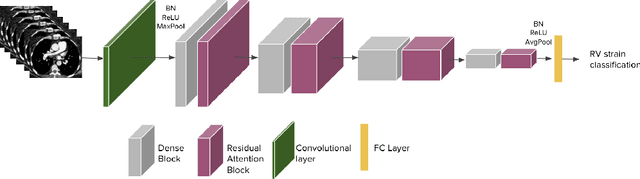

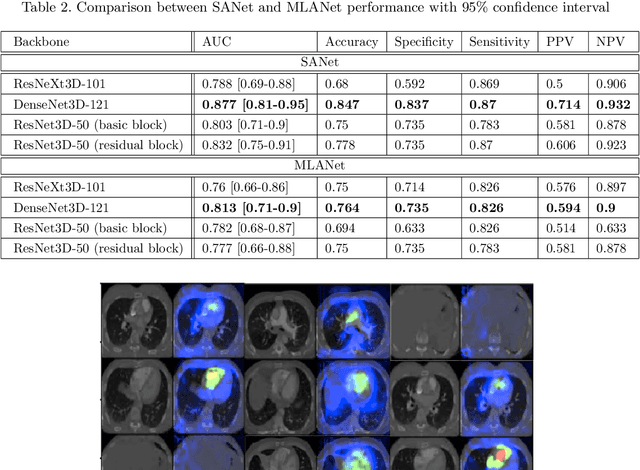

Abstract: Pulmonary embolus (PE) refers to obstruction of pulmonary arteries by blood clots. PE accounts for approximately 100,000 deaths per year in the United States alone. The clinical presentation of PE is often nonspecific, making the diagnosis challenging. Thus, rapid and accurate risk stratification is of paramount importance. High-risk PE is caused by right ventricular (RV) dysfunction from acute pressure overload, which in turn can help identify which patients require more aggressive therapy. Reconstructed four-chamber views of the heart on chest CT can detect right ventricular enlargement. CT pulmonary angiography (CTPA) is the gold standard in the diagnostic workup of suspected PE. Therefore, it can link diagnosis and risk stratification strategies. We developed a weakly supervised deep learning algorithm, with an emphasis on a novel attention mechanism, to automatically classify RV strain on CTPA. Our method is a 3D DenseNet model with integrated 3D residual attention blocks. We evaluated our model on a dataset of CTPAs of emergency department (ED) PE patients. This model achieved an area under the receiver operating characteristic curve (AUC) of 0.88 for classifying RV strain. The model showed a sensitivity of 87% and specificity of 83.7%. Our solution outperforms state-of-the-art 3D CNN networks. The proposed design allows for a fully automated network that can be trained easily in an end-to-end manner without requiring computationally intensive and time-consuming preprocessing or strenuous labeling of the data. We infer that unmarked CTPAs can be used for effective RV strain classification. This could be used as a second reader, alerting for high-risk PE patients. To the best of our knowledge, there are no previous deep learning-based studies that attempted to solve this problem.

The Liver Tumor Segmentation Benchmark (LiTS)

Jan 13, 2019

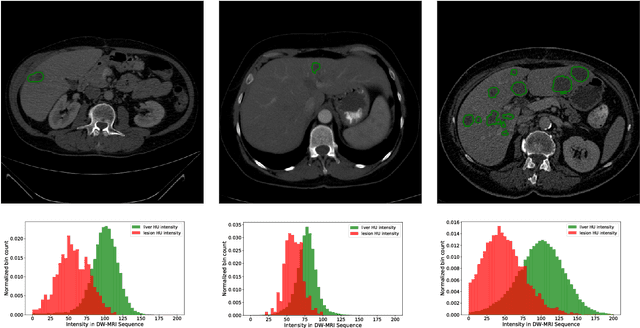

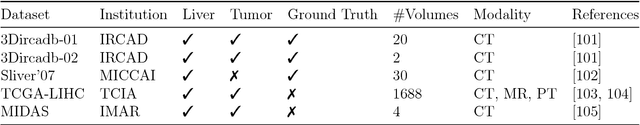

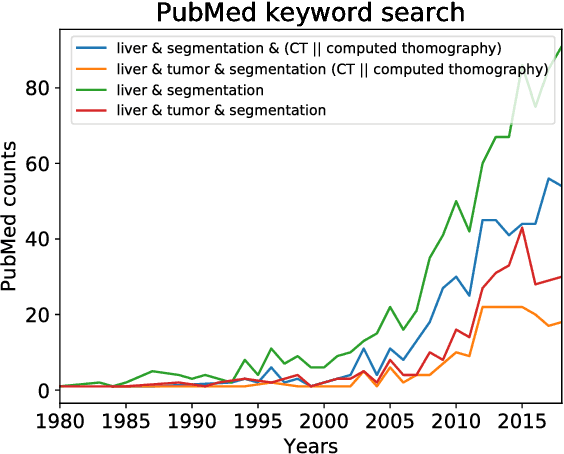

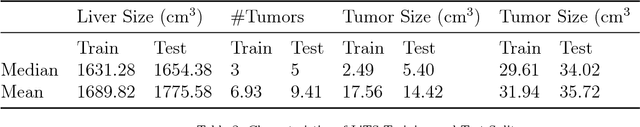

Abstract: In this work, we report the set-up and results of the Liver Tumor Segmentation Benchmark (LiTS) organized in conjunction with the IEEE International Symposium on Biomedical Imaging (ISBI) 2016 and the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI) 2017. Twenty-four valid state-of-the-art liver and liver tumor segmentation algorithms were applied to a set of 131 computed tomography (CT) volumes with different tumor contrast levels (hyper-/hypo-intense), tissue abnormalities (metastasectomie), sizes, and varying numbers of lesions. The submitted algorithms were tested on 70 undisclosed volumes. The dataset was created in collaboration with seven hospitals and research institutions and manually reviewed by three independent radiologists. We found that no single algorithm performed best for both liver and tumors. The best liver segmentation algorithm achieved a Dice score of 0.96 (MICCAI), whereas for tumor segmentation the best algorithms achieved 0.67 (ISBI) and 0.70 (MICCAI). The LiTS image data and manual annotations continue to be publicly available through an online evaluation system as an ongoing benchmarking resource.
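For readers unfamiliar with the Dice score quoted above, the following is a minimal sketch of the standard overlap formula, 2|A and B| / (|A| + |B|), on flattened binary masks. It is not the benchmark's evaluation code; the function name `dice_score`, the toy masks, and the convention that two empty masks score 1.0 are all assumptions made for illustration.

```python
def dice_score(pred, truth):
    """Dice overlap between two flat binary masks of equal length."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same length")
    # Voxels marked as foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    # Total foreground voxels across both masks.
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


# Toy example (hypothetical data): 2 shared voxels, 3 foreground voxels per mask.
pred_mask = [1, 1, 0, 0, 1, 0]
true_mask = [1, 0, 0, 0, 1, 1]
score = dice_score(pred_mask, true_mask)
print(round(score, 4))
```

A score of 1.0 means the two masks coincide exactly, and 0.0 means they share no foreground voxels.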

Cross-Modality Synthesis from CT to PET using FCN and GAN Networks for Improved Automated Lesion Detection

Jul 23, 2018

Abstract: In this work we present a novel system for generation of virtual PET images using CT scans. We combine a fully convolutional network (FCN) with a conditional generative adversarial network (GAN) to generate simulated PET data from given input CT data. The synthesized PET can be used for false-positive reduction in lesion detection solutions. Clinically, such solutions may enable lesion detection and drug treatment evaluation in a CT-only environment, thus reducing the need for the more expensive and radioactive PET/CT scan. Our dataset includes 60 PET/CT scans from Sheba Medical Center. We used 23 scans for training and 37 for testing. Different schemes to achieve the synthesized output were qualitatively compared. Quantitative evaluation was conducted using an existing lesion detection software, combining the synthesized PET as a false-positive reduction layer for the detection of malignant lesions in the liver. Current results look promising, showing a 28% reduction in the average false positives per case from 2.9 to 2.1. The suggested solution is comprehensive and can be expanded to additional body organs and different modalities.
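As a quick check, the reported relative reduction follows from the two per-case averages quoted in the abstract (the rounding to 28% is the abstract's; the intermediate value is computed here):

$$
\frac{2.9 - 2.1}{2.9} = \frac{0.8}{2.9} \approx 0.276 \approx 28\%
$$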

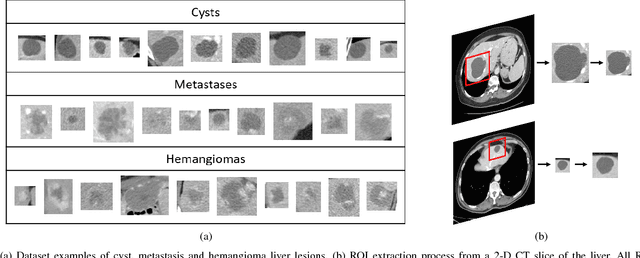

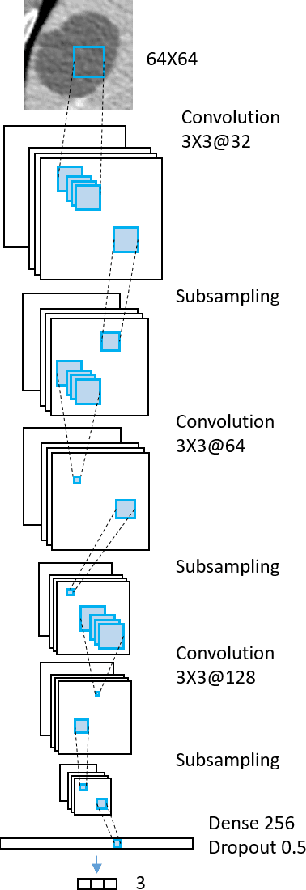

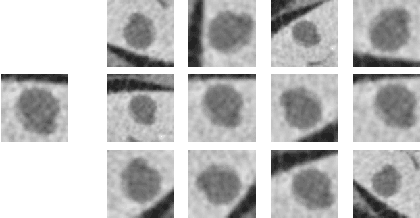

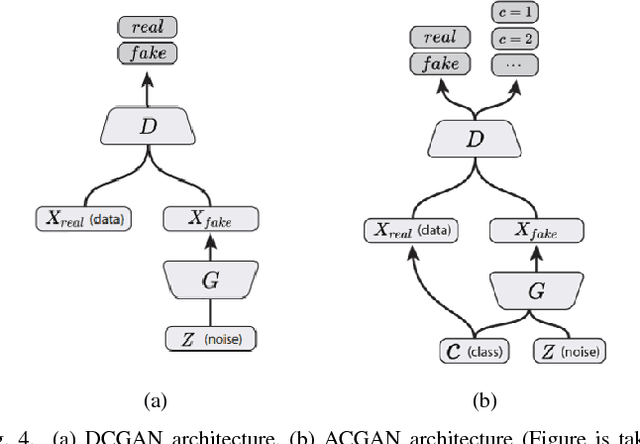

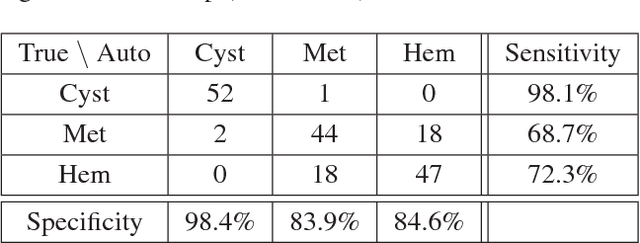

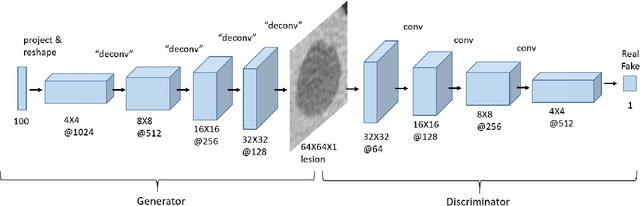

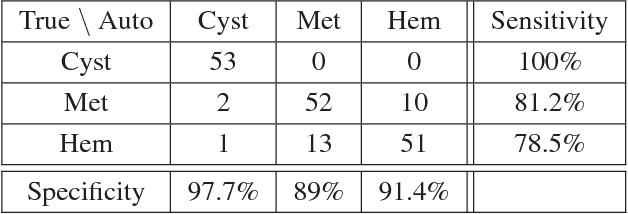

GAN-based Synthetic Medical Image Augmentation for increased CNN Performance in Liver Lesion Classification

Mar 03, 2018

Abstract: Deep learning methods, and in particular convolutional neural networks (CNNs), have led to an enormous breakthrough in a wide range of computer vision tasks, primarily by using large-scale annotated datasets. However, obtaining such datasets in the medical domain remains a challenge. In this paper, we present methods for generating synthetic medical images using recently presented deep learning Generative Adversarial Networks (GANs). Furthermore, we show that generated medical images can be used for synthetic data augmentation and improve the performance of CNNs for medical image classification. Our novel method is demonstrated on a limited dataset of computed tomography (CT) images of 182 liver lesions (53 cysts, 64 metastases and 65 hemangiomas). We first exploit GAN architectures for synthesizing high-quality liver lesion ROIs. Then we present a novel scheme for liver lesion classification using a CNN. Finally, we train the CNN using classic data augmentation and our synthetic data augmentation and compare performance. In addition, we explore the quality of our synthesized examples using visualization and expert assessment. The classification performance using only classic data augmentation yielded 78.6% sensitivity and 88.4% specificity. By adding the synthetic data augmentation the results increased to 85.7% sensitivity and 92.4% specificity. We believe that this approach to synthetic data augmentation can generalize to other medical classification applications and thus support radiologists' efforts to improve diagnosis.
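Sensitivity and specificity, the two metrics reported above, can be sketched for one class against the rest as follows. This is an illustrative one-vs-rest computation, not the paper's evaluation protocol (the abstract does not say how the three lesion classes were aggregated); the function name, label strings, and toy predictions are hypothetical.

```python
def sensitivity_specificity(y_true, y_pred, positive):
    """One-vs-rest sensitivity (TP rate) and specificity (TN rate)."""
    tp = fn = tn = fp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive:
            if pred == positive:
                tp += 1  # positive case correctly detected
            else:
                fn += 1  # positive case missed
        else:
            if pred == positive:
                fp += 1  # negative case wrongly flagged
            else:
                tn += 1  # negative case correctly rejected
    sens = tp / (tp + fn) if (tp + fn) else float("nan")
    spec = tn / (tn + fp) if (tn + fp) else float("nan")
    return sens, spec


# Hypothetical labels, for illustration only.
y_true = ["cyst", "cyst", "met", "hem", "met", "hem"]
y_pred = ["cyst", "met", "met", "hem", "hem", "hem"]
sens, spec = sensitivity_specificity(y_true, y_pred, positive="met")
print(sens, spec)
```

Here one of the two true metastases is detected and three of the four non-metastases are correctly rejected.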

Synthetic Data Augmentation using GAN for Improved Liver Lesion Classification

Jan 08, 2018

Abstract: In this paper, we present a data augmentation method that generates synthetic medical images using Generative Adversarial Networks (GANs). We propose a training scheme that first uses classical data augmentation to enlarge the training set and then further enlarges the data size and its diversity by applying GAN techniques for synthetic data augmentation. Our method is demonstrated on a limited dataset of computed tomography (CT) images of 182 liver lesions (53 cysts, 64 metastases and 65 hemangiomas). The classification performance using only classic data augmentation yielded 78.6% sensitivity and 88.4% specificity. By adding the synthetic data augmentation the results significantly increased to 85.7% sensitivity and 92.4% specificity.

Anatomical Data Augmentation For CNN based Pixel-wise Classification

Jan 07, 2018

Abstract: In this work we propose a method for anatomical data augmentation that uses slices of computed tomography (CT) examinations adjacent to labeled slices as an additional source of labeled data for training the network. The extended labeled data is used to train a U-net network for pixel-wise classification into different hepatic lesions and normal liver tissues. Our dataset contains CT examinations from 140 patients with 333 CT images annotated by an expert radiologist. We tested our approach and compared it to the conventional training process. Results indicate superiority of our method. Using the anatomical data augmentation we achieved an improvement of 3% in the success rate, 5% in the classification accuracy, and 4% in Dice.
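The idea of borrowing neighbours of labeled slices can be sketched as below. The abstract does not state how annotations are assigned to the adjacent slices, so this sketch simply assumes the nearest labeled slice's annotation is reused unchanged; the function name, the `max_offset` parameter, and the placeholder data are hypothetical.

```python
def augment_with_adjacent_slices(volume, labels, max_offset=1):
    """Pair each labeled slice, and its unlabeled neighbours, with its annotation.

    volume: list of slices in anatomical order.
    labels: dict mapping slice index -> annotation for that slice.
    Returns a list of (slice, annotation, source_index) tuples.
    """
    pairs = []
    for idx in sorted(labels):
        for offset in range(-max_offset, max_offset + 1):
            j = idx + offset
            if not 0 <= j < len(volume):
                continue  # neighbour falls outside the scan
            if offset != 0 and j in labels:
                continue  # neighbour has its own expert annotation
            # Assumption: the annotation is copied as-is to the neighbour.
            pairs.append((volume[j], labels[idx], idx))
    return pairs


# Placeholder scan with five slices, two of them annotated.
volume = ["s0", "s1", "s2", "s3", "s4"]
labels = {0: "mask0", 3: "mask3"}
training_pairs = augment_with_adjacent_slices(volume, labels)
print(len(training_pairs))
```

With `max_offset=1`, each annotated slice contributes up to three training pairs, which is how the labeled set grows without extra annotation effort.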

Virtual PET Images from CT Data Using Deep Convolutional Networks: Initial Results

Jul 30, 2017

Abstract: In this work we present a novel system for PET estimation using CT scans. We explore the use of fully convolutional networks (FCN) and conditional generative adversarial networks (GAN) to export PET data from CT data. Our dataset includes 25 pairs of PET and CT scans, of which 17 were used for training and 8 for testing. The system was tested for detection of malignant tumors in the liver region. Initial results look promising, showing high detection performance with a TPR of 92.3% and FPR of 0.25 per case. Future work entails expansion of the current system to the entire body using a much larger dataset. Such a system can be used for tumor detection and drug treatment evaluation in a CT-only environment instead of the expensive and radioactive PET-CT scan.

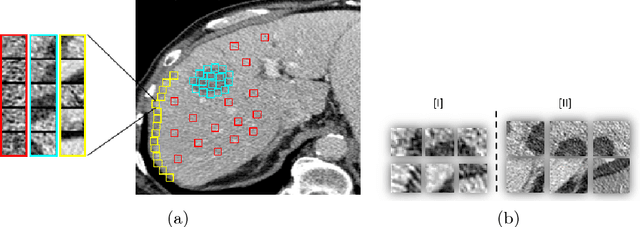

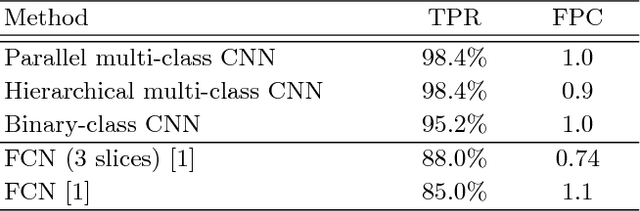

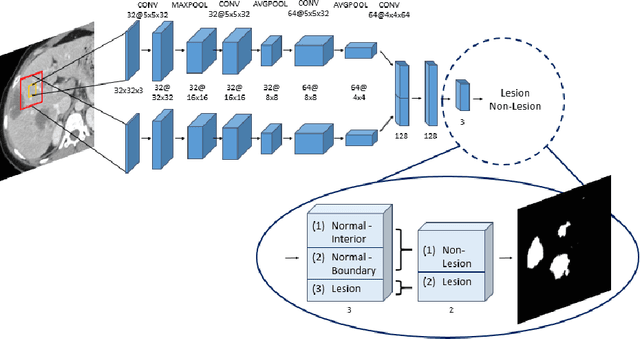

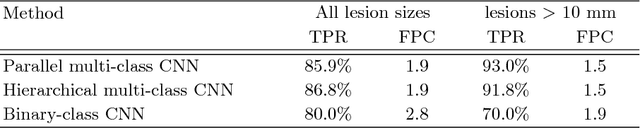

Modeling the Intra-class Variability for Liver Lesion Detection using a Multi-class Patch-based CNN

Jul 20, 2017

Abstract: Automatic detection of liver lesions in CT images poses a great challenge for researchers. In this work we present a deep learning approach that explicitly models the variability within the non-lesion class, based on prior knowledge of the data, to support an automated lesion detection system. A multi-class convolutional neural network (CNN) is proposed to categorize input image patches into sub-categories of boundary and interior patches, the decisions of which are fused to reach a binary lesion vs non-lesion decision. For validation of our system, we use CT images of 132 livers and 498 lesions. Our approach shows highly improved detection results that outperform the state-of-the-art fully convolutional network. Automated computerized tools, as shown in this work, have the potential to support radiologists towards improved detection.
