Erkang Cheng

HiP-AD: Hierarchical and Multi-Granularity Planning with Deformable Attention for Autonomous Driving in a Single Decoder

Mar 11, 2025

Abstract:Although end-to-end autonomous driving (E2E-AD) technologies have made significant progress in recent years, there remains an unsatisfactory performance on closed-loop evaluation. The potential of leveraging planning in query design and interaction has not yet been fully explored. In this paper, we introduce a multi-granularity planning query representation that integrates heterogeneous waypoints, including spatial, temporal, and driving-style waypoints across various sampling patterns. It provides additional supervision for trajectory prediction, enhancing precise closed-loop control for the ego vehicle. Additionally, we explicitly utilize the geometric properties of planning trajectories to effectively retrieve relevant image features based on physical locations using deformable attention. By combining these strategies, we propose a novel end-to-end autonomous driving framework, termed HiP-AD, which simultaneously performs perception, prediction, and planning within a unified decoder. HiP-AD enables comprehensive interaction by allowing planning queries to iteratively interact with perception queries in the BEV space while dynamically extracting image features from perspective views. Experiments demonstrate that HiP-AD outperforms all existing end-to-end autonomous driving methods on the closed-loop benchmark Bench2Drive and achieves competitive performance on the real-world dataset nuScenes.

SimPB: A Single Model for 2D and 3D Object Detection from Multiple Cameras

Mar 15, 2024

Abstract:The field of autonomous driving has attracted considerable interest in approaches that directly infer 3D objects in the Bird's Eye View (BEV) from multiple cameras. Some attempts have also explored utilizing 2D detectors from single images to enhance the performance of 3D detection. However, these approaches rely on a two-stage process with separate detectors, where the 2D detection results are utilized only once for token selection or query initialization. In this paper, we present a single model termed SimPB, which simultaneously detects 2D objects in the perspective view and 3D objects in the BEV space from multiple cameras. To achieve this, we introduce a hybrid decoder consisting of several multi-view 2D decoder layers and several 3D decoder layers, specifically designed for their respective detection tasks. A Dynamic Query Allocation module and an Adaptive Query Aggregation module are proposed to continuously update and refine the interaction between 2D and 3D results, in a cyclic 3D-2D-3D manner. Additionally, Query-group Attention is utilized to strengthen the interaction among 2D queries within each camera group. In the experiments, we evaluate our method on the nuScenes dataset and demonstrate promising results for both 2D and 3D detection tasks. Our code is available at: https://github.com/nullmax-vision/SimPB.

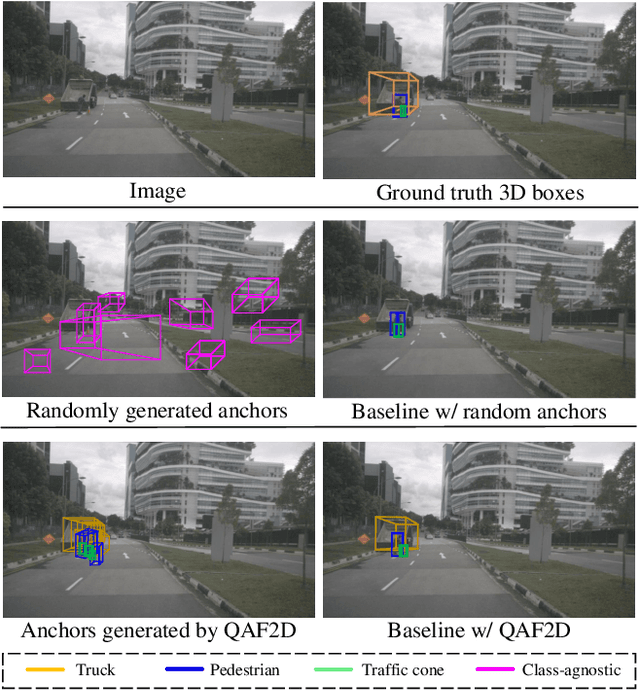

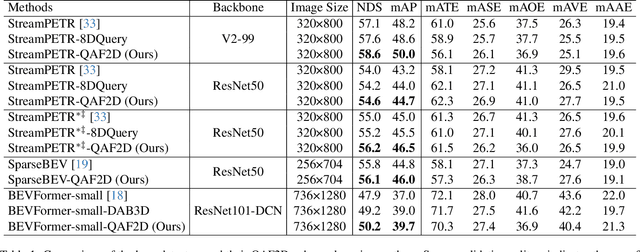

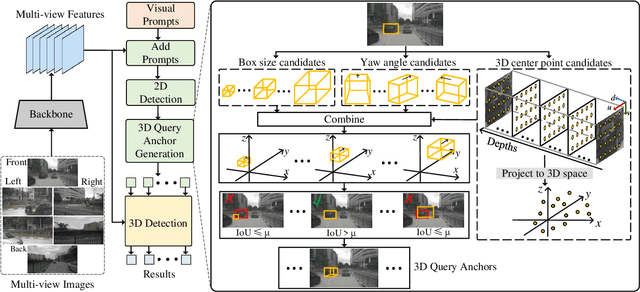

Enhancing 3D Object Detection with 2D Detection-Guided Query Anchors

Mar 10, 2024

Abstract:Multi-camera-based 3D object detection has made notable progress in the past several years. However, we observe that there are cases (e.g. faraway regions) in which popular 2D object detectors are more reliable than state-of-the-art 3D detectors. In this paper, to improve the performance of query-based 3D object detectors, we present a novel query generating approach termed QAF2D, which infers 3D query anchors from 2D detection results. A 2D bounding box of an object in an image is lifted to a set of 3D anchors by associating each sampled point within the box with depth, yaw angle, and size candidates. Then, the validity of each 3D anchor is verified by comparing its projection in the image with its corresponding 2D box, and only valid anchors are kept and used to construct queries. The class information of the 2D bounding box associated with each query is also utilized to match the predicted boxes with ground truth for the set-based loss. The image feature extraction backbone is shared between the 3D detector and 2D detector by adding a small number of prompt parameters. We integrate QAF2D into three popular query-based 3D object detectors and carry out comprehensive evaluations on the nuScenes dataset. The largest improvement that QAF2D can bring about on the nuScenes validation subset is 2.3% NDS and 2.7% mAP. Code is available at https://github.com/nullmax-vision/QAF2D.
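The lifting step described in this abstract can be illustrated with a minimal sketch. It is not the QAF2D implementation: the pinhole camera model, the grid sampling pattern, the candidate values, and every function name (`sample_points_in_box`, `back_project`, `lift_box_to_anchors`) are assumptions made for illustration, and the projection-based validity check is omitted.

```python
from itertools import product


def sample_points_in_box(box, n_x=2, n_y=2):
    """Return an n_x * n_y grid of pixel samples inside box = (x1, y1, x2, y2).

    The even grid is an assumed sampling pattern, chosen only for illustration.
    """
    x1, y1, x2, y2 = box
    xs = [x1 + (i + 0.5) * (x2 - x1) / n_x for i in range(n_x)]
    ys = [y1 + (j + 0.5) * (y2 - y1) / n_y for j in range(n_y)]
    return [(u, v) for v in ys for u in xs]


def back_project(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) at a given depth with a pinhole camera model."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def lift_box_to_anchors(box, depths, yaws, sizes, intrinsics):
    """Pair every sampled point with every depth, yaw, and size candidate."""
    fx, fy, cx, cy = intrinsics
    anchors = []
    for (u, v), depth, yaw, size in product(
        sample_points_in_box(box), depths, yaws, sizes
    ):
        anchors.append(
            {
                "center": back_project(u, v, depth, fx, fy, cx, cy),
                "yaw": yaw,
                "size": size,
            }
        )
    return anchors


# Hypothetical inputs: one 2D box, two depth and two yaw candidates, one size.
anchors = lift_box_to_anchors(
    box=(100, 100, 200, 200),
    depths=[10.0, 20.0],
    yaws=[0.0, 1.5708],
    sizes=[(4.5, 1.9, 1.6)],
    intrinsics=(1000.0, 1000.0, 150.0, 150.0),
)
```

With these inputs, 4 sampled points, 2 depths, 2 yaw angles, and 1 size give 16 candidate anchors. In the method as described, each candidate would then be projected back into the image and kept only if it agrees with the 2D box.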

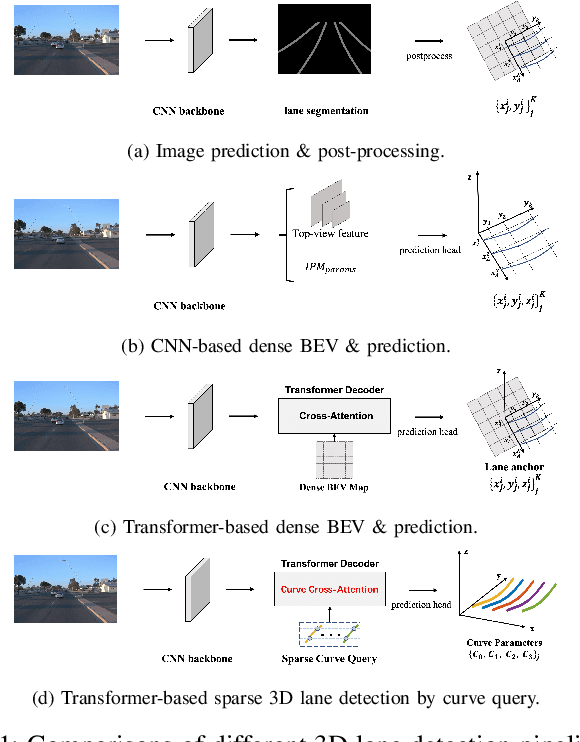

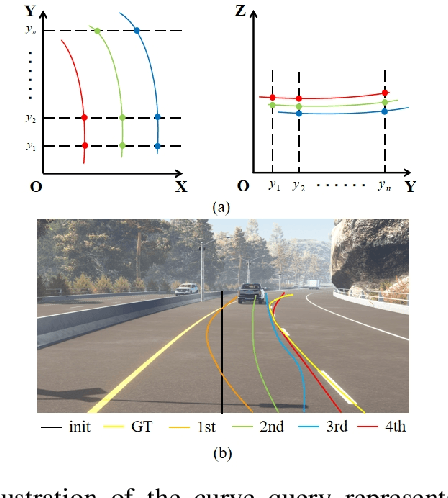

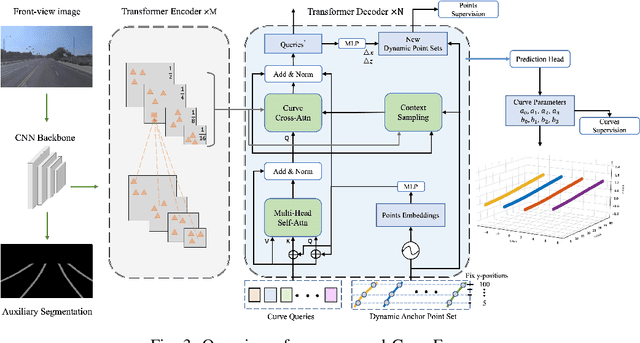

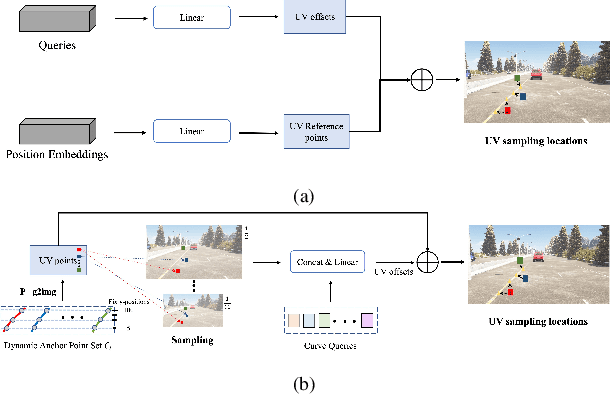

CurveFormer++: 3D Lane Detection by Curve Propagation with Temporal Curve Queries and Attention

Feb 09, 2024

Abstract:In autonomous driving, 3D lane detection using monocular cameras is an important task for various downstream planning and control tasks. Recent CNN and Transformer approaches usually apply a two-stage scheme in the model design. The first stage transforms the image feature from a front image into a bird's-eye-view (BEV) representation. Subsequently, a sub-network processes the BEV feature map to generate the 3D detection results. However, these approaches heavily rely on a challenging image feature transformation module from a perspective view to a BEV representation. In our work, we present CurveFormer++, a single-stage Transformer-based method that does not require the image feature view transform module and directly infers 3D lane detection results from the perspective image features. Specifically, our approach models the 3D detection task as a curve propagation problem, where each lane is represented by a curve query with a dynamic and ordered anchor point set. By employing a Transformer decoder, the model can iteratively refine the 3D lane detection results. A curve cross-attention module is introduced in the Transformer decoder to calculate similarities between image features and curve queries of lanes. To handle varying lane lengths, we employ context sampling and anchor point restriction techniques to compute more relevant image features for a curve query. Furthermore, we apply a temporal fusion module that incorporates selected informative sparse curve queries and their corresponding anchor point sets to leverage historical lane information. In the experiments, we evaluate our approach for the 3D lane detection task on two publicly available real-world datasets. The results demonstrate that our method provides outstanding performance compared with both CNN and Transformer based methods. We also conduct ablation studies to analyze the impact of each component in our approach.

Multi-Correlation Siamese Transformer Network with Dense Connection for 3D Single Object Tracking

Dec 18, 2023

Abstract:Point cloud-based 3D object tracking is an important task in autonomous driving. Though great advances regarding Siamese-based 3D tracking have been made recently, it remains challenging to learn the correlation between the template and search branches effectively with the sparse LiDAR point cloud data. Instead of performing correlation of the two branches at just one point in the network, in this paper, we present a multi-correlation Siamese Transformer network that has multiple stages and carries out feature correlation at the end of each stage based on sparse pillars. More specifically, in each stage, self-attention is first applied to each branch separately to capture the non-local context information. Then, cross-attention is used to inject the template information into the search area. This strategy allows the feature learning of the search area to be aware of the template while keeping the individual characteristics of the template intact. To enable the network to easily preserve the information learned at different stages and ease the optimization, for the search area, we densely connect the initial input sparse pillars and the output of each stage to all subsequent stages and the target localization network, which converts pillars to bird's eye view (BEV) feature maps and predicts the state of the target with a small densely connected convolution network. Deep supervision is added to each stage to further boost the performance as well. The proposed algorithm is evaluated on the popular KITTI, nuScenes, and Waymo datasets, and the experimental results show that our method achieves promising performance compared with the state-of-the-art. An ablation study showing the effectiveness of each component is also provided. Code is available at https://github.com/liangp/MCSTN-3DSOT.

* Preprint version for IEEE Robotics and Automation Letters (RAL)

CircleFormer: Circular Nuclei Detection in Whole Slide Images with Circle Queries and Attention

Aug 31, 2023

Abstract:Both CNN-based and Transformer-based object detection with bounding box representation have been extensively studied in computer vision and medical image analysis, but circular object detection in medical images is still underexplored. Inspired by the recent anchor-free CNN-based circular object detection method (CircleNet) for ball-shaped glomeruli detection in renal pathology, in this paper, we present CircleFormer, a Transformer-based circular medical object detection method with dynamic anchor circles. Specifically, queries with circle representation in the Transformer decoder iteratively refine the circular object detection results, and a circle cross attention module is introduced to compute the similarity between circular queries and image features. A generalized circle IoU (gCIoU) is proposed to serve as a new regression loss of circular object detection as well. Moreover, our approach is easy to generalize to the segmentation task by adding a simple segmentation branch to CircleFormer. We evaluate our method in circular nuclei detection and segmentation on the public MoNuSeg dataset, and the experimental results show that our method achieves promising performance compared with the state-of-the-art approaches. The effectiveness of each component is validated via ablation studies as well. Our code is released at https://github.com/zhanghx-iim-ahu/CircleFormer.
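To make the circle-based overlap measure concrete, here is a minimal sketch of IoU between two circles, plus a GIoU-style generalization. The abstract does not give the gCIoU formula, so `generalized_circle_iou` below is only an assumed analogue of box GIoU that uses the smallest enclosing circle; the function names and the `(cx, cy, r)` tuple layout are also assumptions.

```python
import math


def circle_intersection_area(c1, c2):
    """Overlap area of two circles, each given as (cx, cy, r)."""
    (x1, y1, r1), (x2, y2, r2) = c1, c2
    d = math.hypot(x2 - x1, y2 - y1)
    if d >= r1 + r2:  # disjoint or externally tangent
        return 0.0
    if d <= abs(r1 - r2):  # one circle lies inside the other
        return math.pi * min(r1, r2) ** 2
    # Partial overlap: sum of two circular segments (lens area).
    cos1 = (d * d + r1 * r1 - r2 * r2) / (2 * d * r1)
    cos2 = (d * d + r2 * r2 - r1 * r1) / (2 * d * r2)
    a1 = r1 * r1 * math.acos(max(-1.0, min(1.0, cos1)))
    a2 = r2 * r2 * math.acos(max(-1.0, min(1.0, cos2)))
    a3 = 0.5 * math.sqrt(
        (-d + r1 + r2) * (d + r1 - r2) * (d - r1 + r2) * (d + r1 + r2)
    )
    return a1 + a2 - a3


def circle_iou(c1, c2):
    """Intersection over union of two circles."""
    inter = circle_intersection_area(c1, c2)
    union = math.pi * (c1[2] ** 2 + c2[2] ** 2) - inter
    return inter / union if union > 0 else 0.0


def generalized_circle_iou(c1, c2):
    """GIoU-style analogue for circles (an assumption, not the paper's gCIoU)."""
    (x1, y1, r1), (x2, y2, r2) = c1, c2
    d = math.hypot(x2 - x1, y2 - y1)
    inter = circle_intersection_area(c1, c2)
    union = math.pi * (r1 * r1 + r2 * r2) - inter
    # Radius of the smallest circle enclosing both input circles.
    r_enclose = max(r1, r2, (d + r1 + r2) / 2)
    enclose = math.pi * r_enclose ** 2
    if union <= 0 or enclose <= 0:
        return 0.0
    return inter / union - (enclose - union) / enclose
```

As with box GIoU, the generalized variant stays informative for disjoint circles: plain IoU is zero there, while the enclosing-circle penalty still grows with the distance between centers.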

CurveFormer: 3D Lane Detection by Curve Propagation with Curve Queries and Attention

Sep 16, 2022

Abstract:3D lane detection is an integral part of autonomous driving systems. Previous CNN and Transformer-based methods usually first generate a bird's-eye-view (BEV) feature map from the front view image, and then use a sub-network with BEV feature map as input to predict 3D lanes. Such approaches require an explicit view transformation between BEV and front view, which itself is still a challenging problem. In this paper, we propose CurveFormer, a single-stage Transformer-based method that directly calculates 3D lane parameters and can circumvent the difficult view transformation step. Specifically, we formulate 3D lane detection as a curve propagation problem by using curve queries. A 3D lane query is represented by a dynamic and ordered anchor point set. In this way, queries with curve representation in Transformer decoder iteratively refine the 3D lane detection results. Moreover, a curve cross-attention module is introduced to compute the similarities between curve queries and image features. Additionally, a context sampling module that can capture more relative image features of a curve query is provided to further boost the 3D lane detection performance. We evaluate our method for 3D lane detection on both synthetic and real-world datasets, and the experimental results show that our method achieves promising performance compared with the state-of-the-art approaches. The effectiveness of each component is validated via ablation studies as well.

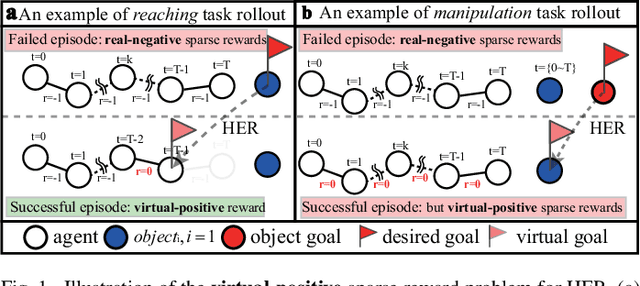

Relay Hindsight Experience Replay: Continual Reinforcement Learning for Robot Manipulation Tasks with Sparse Rewards

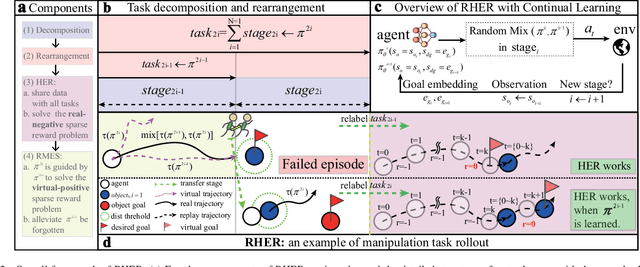

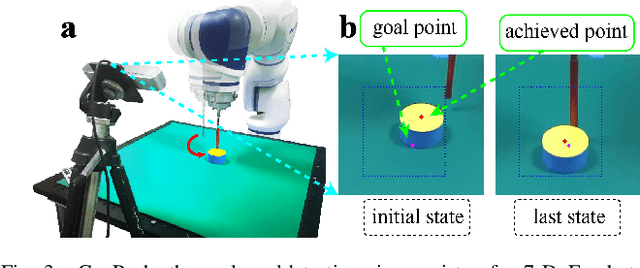

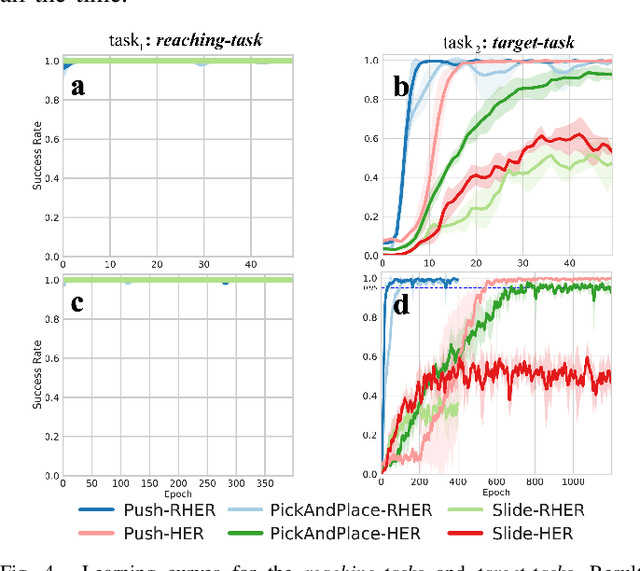

Aug 01, 2022

Abstract:Learning with sparse rewards is usually inefficient in Reinforcement Learning (RL). Hindsight Experience Replay (HER) has been shown to be an effective solution to the low sample efficiency that results from sparse rewards, by means of goal relabeling. However, HER still has an implicit virtual-positive sparse reward problem caused by invariant achieved goals, especially for robot manipulation tasks. To solve this problem, we propose a novel model-free continual RL algorithm, called Relay-HER (RHER). The proposed method first decomposes and rearranges the original long-horizon task into new sub-tasks with incremental complexity. Subsequently, a multi-task network is designed to learn the sub-tasks in ascending order of complexity. To solve the virtual-positive sparse reward problem, we propose a Random-Mixed Exploration Strategy (RMES), in which the achieved goals of the sub-task with higher complexity are quickly changed under the guidance of the one with lower complexity. The experimental results indicate significant improvements in the sample efficiency of RHER compared to vanilla-HER in five typical robot manipulation tasks, including Push, PickAndPlace, Drawer, Insert, and ObstaclePush. The proposed RHER method has also been applied to learn a contact-rich push task on a physical robot from scratch, and the success rate reached 10/10 with only 250 episodes.
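The goal relabeling that HER relies on can be sketched as follows. This shows vanilla HER with a "final" relabeling strategy, not the RHER algorithm or RMES; the transition fields (`achieved_goal`, `goal`, `reward`), the tolerance, and the function names are assumptions chosen for illustration.

```python
def sparse_reward(achieved_goal, goal, tol=1e-3):
    """Return 0.0 when the achieved goal is within tol of the goal, else -1.0."""
    dist = sum((a - g) ** 2 for a, g in zip(achieved_goal, goal)) ** 0.5
    return 0.0 if dist <= tol else -1.0


def relabel_with_final_goal(episode):
    """Replace each transition's goal with the goal achieved at episode end.

    Each transition is a dict whose "achieved_goal" is the goal state reached
    after that step (a hypothetical layout). Rewards are recomputed against
    the substituted goal, so a failed episode yields at least one success.
    """
    final_goal = episode[-1]["achieved_goal"]
    relabeled = []
    for transition in episode:
        new_transition = dict(transition)
        new_transition["goal"] = final_goal
        new_transition["reward"] = sparse_reward(
            transition["achieved_goal"], final_goal
        )
        relabeled.append(new_transition)
    return relabeled


# Hypothetical failed episode: the object moves from 0.1 to 0.3, but the goal is 1.0.
episode = [
    {"achieved_goal": (0.1,), "goal": (1.0,), "reward": -1.0},
    {"achieved_goal": (0.2,), "goal": (1.0,), "reward": -1.0},
    {"achieved_goal": (0.3,), "goal": (1.0,), "reward": -1.0},
]
relabeled = relabel_with_final_goal(episode)

# Episode in which the object never moves: every achieved goal is identical.
static_episode = [
    {"achieved_goal": (0.0,), "goal": (1.0,), "reward": -1.0} for _ in range(3)
]
static_relabeled = relabel_with_final_goal(static_episode)
```

In `relabeled`, only the last transition receives reward 0. In `static_relabeled`, every transition receives reward 0 even though nothing was manipulated, which is one reading of the virtual-positive reward problem with invariant achieved goals that the abstract describes.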

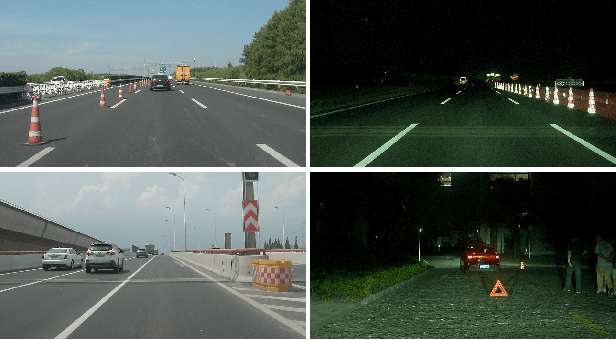

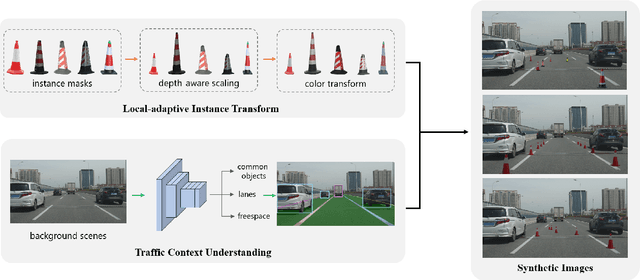

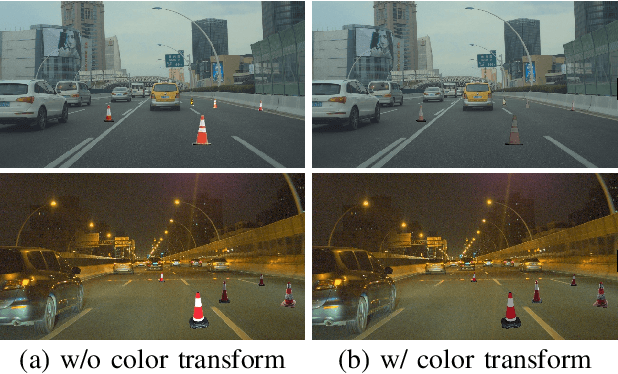

Traffic Context Aware Data Augmentation for Rare Object Detection in Autonomous Driving

May 01, 2022

Abstract:Detection of rare objects (e.g., traffic cones, traffic barrels and traffic warning triangles) is an important perception task to improve the safety of autonomous driving. Training of such models typically requires a large amount of annotated data, which is expensive and time-consuming to obtain. To address the above problem, an emerging approach is to apply data augmentation to automatically generate cost-free training samples. In this work, we propose a systematic study on simple Copy-Paste data augmentation for rare object detection in autonomous driving. Specifically, local adaptive instance-level image transformation is introduced to generate realistic rare object masks from the source domain to the target domain. Moreover, traffic scene context is utilized to guide the placement of masks of rare objects. To this end, our data augmentation generates training data with high quality and realistic characteristics by leveraging both local and global consistency. In addition, we build a new dataset named NM10k consisting of 10k training images, 4k validation images and the corresponding labels with a diverse range of scenarios in autonomous driving. Experiments on NM10k show that our method achieves promising results on rare object detection. We also present a thorough study to illustrate the effectiveness of our local-adaptive and global constraints based Copy-Paste data augmentation for rare object detection. The data, development kit and more information on the NM10k dataset are available online at: https://nullmax-vision.github.io.

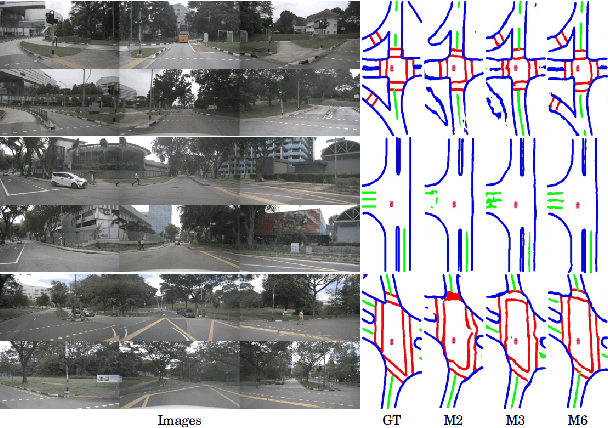

BEVSegFormer: Bird's Eye View Semantic Segmentation From Arbitrary Camera Rigs

Mar 13, 2022

Abstract:Semantic segmentation in bird's eye view (BEV) is an important task for autonomous driving. Though this task has attracted a large amount of research effort, it is still challenging to flexibly cope with arbitrary (single or multiple) camera sensors equipped on the autonomous vehicle. In this paper, we present BEVSegFormer, an effective transformer-based method for BEV semantic segmentation from arbitrary camera rigs. Specifically, our method first encodes image features from arbitrary cameras with a shared backbone. These image features are then enhanced by a deformable transformer-based encoder. Moreover, we introduce a BEV transformer decoder module to parse BEV semantic segmentation results. An efficient multi-camera deformable attention unit is designed to carry out the BEV-to-image view transformation. Finally, the queries are reshaped according to the layout of grids in the BEV, and upsampled to produce the semantic segmentation result in a supervised manner. We evaluate the proposed algorithm on the public nuScenes dataset and a self-collected dataset. Experimental results show that our method achieves promising performance on BEV semantic segmentation from arbitrary camera rigs. We also demonstrate the effectiveness of each component via ablation study.
