Ella Rabinovich

School of Computer Science, The Academic College of Tel-Aviv Yaffo, Israel

Exploring the Interplay between Musical Preferences and Personality through the Lens of Language

Aug 25, 2025

Abstract:Music serves as a powerful reflection of individual identity, often aligning with deeper psychological traits. Prior research has established correlations between musical preferences and personality traits, while separate studies have demonstrated that personality is detectable through linguistic analysis. Our study bridges these two research domains by investigating whether individuals' musical preferences are recognizable in their spontaneous language through the lens of the Big Five personality traits (Openness, Conscientiousness, Extroversion, Agreeableness, and Neuroticism). Using a carefully curated dataset of over 500,000 text samples from nearly 5,000 authors with reliably identified musical preferences, we build advanced models to assess personality characteristics. Our results reveal significant personality differences across fans of five musical genres. We release resources for future research at the intersection of computational linguistics, music psychology and personality analysis.

Towards Enforcing Company Policy Adherence in Agentic Workflows

Jul 22, 2025

Abstract:Large Language Model (LLM) agents hold promise for a flexible and scalable alternative to traditional business process automation, but struggle to reliably follow complex company policies. In this study we introduce a deterministic, transparent, and modular framework for enforcing business policy adherence in agentic workflows. Our method operates in two phases: (1) an offline buildtime stage that compiles policy documents into verifiable guard code associated with tool use, and (2) a runtime integration where these guards ensure compliance before each agent action. We demonstrate our approach on the challenging $\tau$-bench Airlines domain, showing encouraging preliminary results in policy enforcement, and further outline key challenges for real-world deployments.
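The runtime phase described in this abstract can be pictured with a minimal sketch. Everything here is an illustrative assumption rather than the authors' framework: the names `GUARDS`, `TOOLS`, `call_tool`, and `PolicyViolation` are hypothetical, the guard is written by hand instead of being compiled from a policy document, and the 24-hour cancellation rule is invented for the example.

```python
from typing import Any, Callable, Dict


class PolicyViolation(Exception):
    """Raised when a guard rejects a tool call."""


def guard_cancel_reservation(args: Dict[str, Any]) -> None:
    # Hypothetical rule (not taken from any real policy): cancelling is
    # allowed within 24 hours of booking, or at any time with insurance.
    late = args.get("hours_since_booking", 0) > 24
    if late and not args.get("has_insurance", False):
        raise PolicyViolation("cancellation requires insurance after 24 hours")


def cancel_reservation(args: Dict[str, Any]) -> str:
    # Stand-in for a real tool; it only reports what it would have done.
    return f"cancelled {args['reservation_id']}"


# Each tool name maps to the guard that must pass before the tool runs.
GUARDS: Dict[str, Callable[[Dict[str, Any]], None]] = {
    "cancel_reservation": guard_cancel_reservation,
}
TOOLS: Dict[str, Callable[[Dict[str, Any]], str]] = {
    "cancel_reservation": cancel_reservation,
}


def call_tool(name: str, args: Dict[str, Any]) -> str:
    """Run the guard for `name` (if any), then invoke the tool itself."""
    guard = GUARDS.get(name)
    if guard is not None:
        guard(args)  # raises PolicyViolation on a non-compliant call
    return TOOLS[name](args)
```

Because the guard is ordinary deterministic code that runs before the tool, a rejected call never reaches the tool, regardless of what the agent generated.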

On the Robustness of Agentic Function Calling

Apr 01, 2025

Abstract:Large Language Models (LLMs) are increasingly acting as autonomous agents, with function calling (FC) capabilities enabling them to invoke specific tools for tasks. While prior research has primarily focused on improving FC accuracy, little attention has been given to the robustness of these agents to perturbations in their input. We introduce a benchmark assessing FC robustness in two key areas: resilience to naturalistic query variations, and stability in function calling when the toolkit expands with semantically related tools. Evaluating best-performing FC models on a carefully expanded subset of the Berkeley function calling leaderboard (BFCL), we identify critical weaknesses in existing evaluation methodologies, and highlight areas for improvement in real-world agentic deployments.

A Novel Metric for Measuring the Robustness of Large Language Models in Non-adversarial Scenarios

Aug 04, 2024

Abstract:We evaluate the robustness of several large language models on multiple datasets. Robustness here refers to the relative insensitivity of the model's answers to meaning-preserving variants of its input. Benchmark datasets are constructed by introducing naturally-occurring, non-malicious perturbations, or by generating semantically equivalent paraphrases of input questions or statements. We further propose a novel metric for assessing a model's robustness, and demonstrate its benefits in the non-adversarial scenario by empirical evaluation of several models on the created datasets.

Automatic Extraction of Disease Risk Factors from Medical Publications

Jul 10, 2024

Abstract:We present a novel approach to automating the identification of risk factors for diseases from medical literature, leveraging pre-trained models in the bio-medical domain, while tuning them for the specific task. Faced with the challenges of the diverse and unstructured nature of medical articles, our study introduces a multi-step system to first identify relevant articles, then classify them based on the presence of risk factor discussions and, finally, extract specific risk factor information for a disease through a question-answering model. Our contributions include the development of a comprehensive pipeline for the automated extraction of risk factors and the compilation of several datasets, which can serve as valuable resources for further research in this area. These datasets encompass a wide range of diseases, as well as their associated risk factors, meticulously identified and validated through a fine-grained evaluation scheme. We conducted both automatic and thorough manual evaluation, demonstrating encouraging results. We also highlight the importance of improving models and expanding dataset comprehensiveness to keep pace with the rapidly evolving field of medical research.

That's Optional: A Contemporary Exploration of "that" Omission in English Subordinate Clauses

May 31, 2024

Abstract:The Uniform Information Density (UID) hypothesis posits that speakers optimize the communicative properties of their utterances by avoiding spikes in information, thereby maintaining a relatively uniform information profile over time. This paper investigates the impact of UID principles on syntactic reduction, specifically focusing on the optional omission of the connector "that" in English subordinate clauses. Building upon previous research, we extend our investigation to a larger corpus of written English, utilize contemporary large language models (LLMs), and extend the information-uniformity principles with the notion of entropy, to estimate UID manifestations in the use case of syntactic reduction choices.
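For orientation, the two information-theoretic quantities this abstract refers to are usually defined as follows. These are the standard textbook definitions, given here as an assumption about the general setup; the paper's exact formulation is not stated in the abstract. The symbols $s$, $H_i$, $V$ (the vocabulary), and $P$ (a language model's next-word distribution) are notation introduced for this note.

$$
s(w_i) = -\log_2 P(w_i \mid w_1, \dots, w_{i-1}),
\qquad
H_i = -\sum_{w \in V} P(w \mid w_1, \dots, w_{i-1}) \, \log_2 P(w \mid w_1, \dots, w_{i-1})
$$

Surprisal $s(w_i)$ measures the information carried by the word that actually occurred, while entropy $H_i$ is the expected surprisal over all possible continuations at position $i$, that is, the uncertainty before the next word is seen.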

The Knesset Corpus: An Annotated Corpus of Hebrew Parliamentary Proceedings

May 28, 2024

Abstract:We present the Knesset Corpus, a corpus of Hebrew parliamentary proceedings containing over 30 million sentences (over 384 million tokens) from all the (plenary and committee) protocols held in the Israeli parliament between 1998 and 2022. Sentences are annotated with morpho-syntactic information and are associated with detailed meta-information reflecting demographic and political properties of the speakers, based on a large database of parliament members and factions that we compiled. We discuss the structure and composition of the corpus and the various processing steps we applied to it. To demonstrate the utility of this novel dataset we present two use cases. We show that the corpus can be used to examine historical developments in the style of political discussions by showing a reduction in lexical richness in the proceedings over time. We also investigate some differences between the styles of men and women speakers. These use cases exemplify the potential of the corpus to shed light on important trends in Israeli society, supporting research in linguistics, political science, communication, law, etc.

Predicting Question-Answering Performance of Large Language Models through Semantic Consistency

Nov 02, 2023

Abstract:Semantic consistency of a language model is broadly defined as the model's ability to produce semantically-equivalent outputs, given semantically-equivalent inputs. We address the task of assessing question-answering (QA) semantic consistency of contemporary large language models (LLMs) by manually creating a benchmark dataset with high-quality paraphrases for factual questions, and release the dataset to the community. We further combine the semantic consistency metric with additional measurements suggested in prior work as correlating with LLM QA accuracy, for building and evaluating a framework for factual QA reference-less performance prediction -- predicting the likelihood of a language model to accurately answer a question. Evaluating the framework on five contemporary LLMs, we demonstrate encouraging results that significantly outperform the baselines.
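One simple way to make the definition above concrete is pairwise agreement: collect a model's answers to several paraphrases of the same question and measure how often two answers match. The sketch below is an assumption for illustration, not the metric used in the paper; in particular, `normalize` and `consistency` are hypothetical helpers, and exact match after light normalization is a crude stand-in for real semantic equivalence.

```python
from itertools import combinations
from typing import List


def normalize(answer: str) -> str:
    """Lowercase, drop a trailing period, and collapse whitespace."""
    return " ".join(answer.lower().strip().rstrip(".").split())


def consistency(answers: List[str]) -> float:
    """Fraction of answer pairs that agree after normalization.

    `answers` holds one model answer per paraphrase of the same question.
    With fewer than two answers there is nothing to disagree with, so the
    score is defined as 1.0.
    """
    pairs = list(combinations(answers, 2))
    if not pairs:
        return 1.0
    agree = sum(normalize(a) == normalize(b) for a, b in pairs)
    return agree / len(pairs)
```

For example, the answers `["Paris", "paris.", "Lyon"]` form three pairs, of which only the first two answers agree, so the score is one third.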

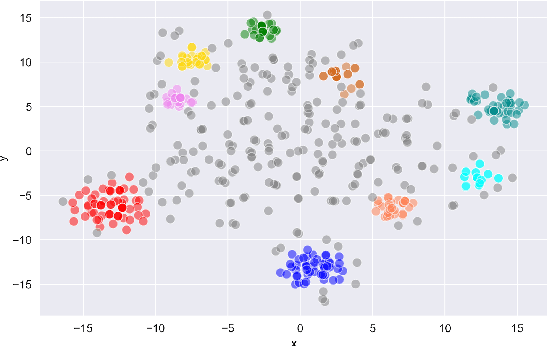

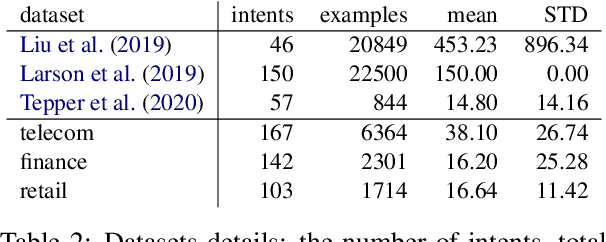

Reliable and Interpretable Drift Detection in Streams of Short Texts

May 28, 2023

Abstract:Data drift, the change in model input data, is one of the key factors leading to the degradation of machine learning models' performance over time. Monitoring drift helps detect these issues and prevent their harmful consequences. Meaningful drift interpretation is a fundamental step towards effective re-training of the model. In this study we propose an end-to-end framework for reliable model-agnostic change-point detection and interpretation in large task-oriented dialog systems, proven effective in multiple customer deployments. We evaluate our approach and demonstrate its benefits with a novel variant of an intent classification training dataset, simulating customer requests to a dialog system. We make the data publicly available.

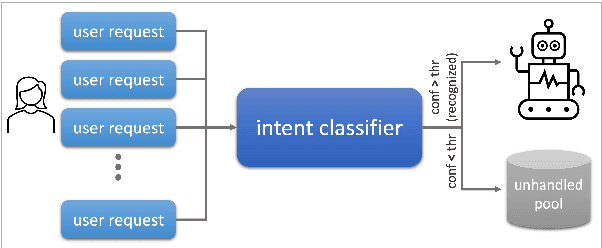

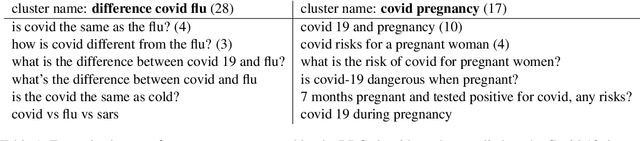

Gaining Insights into Unrecognized User Utterances in Task-Oriented Dialog Systems

Apr 11, 2022

Abstract:The rapidly growing market demand for dialogue agents capable of goal-oriented behavior has caused many tech-industry leaders to invest considerable efforts into task-oriented dialog systems. The performance and success of these systems are highly dependent on the accuracy of their intent identification -- the process of deducing the goal or meaning of the user's request and mapping it to one of the known intents for further processing. Gaining insights into unrecognized utterances -- user requests the system fails to attribute to a known intent -- is therefore a key process in continuous improvement of goal-oriented dialog systems. We present an end-to-end pipeline for processing unrecognized user utterances, including a specifically-tailored clustering algorithm, a novel approach to cluster representative extraction, and cluster naming. We evaluated the proposed clustering algorithm and compared its performance to out-of-the-box SOTA solutions, demonstrating its benefits in the analysis of unrecognized user requests.
