Dongyuan Shi

A Stabilized Hybrid Active Noise Control Algorithm of GFANC and FxNLMS with Online Clustering

Jan 22, 2026

Abstract:The Filtered-x Normalized Least Mean Square (FxNLMS) algorithm suffers from slow convergence and a risk of divergence, although it can achieve low steady-state errors after sufficient adaptation. In contrast, the Generative Fixed-Filter Active Noise Control (GFANC) method offers fast response speed, but its lack of adaptability may lead to large steady-state errors. This paper proposes a hybrid GFANC-FxNLMS algorithm to leverage the complementary advantages of both approaches. In the hybrid GFANC-FxNLMS algorithm, GFANC provides a frame-level control filter as an initialization for FxNLMS, while FxNLMS performs continuous adaptation at the sampling rate. Small variations in the GFANC-generated filter may repeatedly reinitialize FxNLMS, interrupting its adaptation process and destabilizing the system. An online clustering module is introduced to avoid unnecessary re-initializations and improve system stability. Simulation results show that the proposed algorithm achieves fast response, very low steady-state error, and high stability, requiring only one pre-trained broadband filter.
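
As a rough illustration of the two ingredients named in this abstract, the sketch below runs a single-channel FxNLMS loop from a supplied initial filter and pairs it with a distance-based gate that decides whether a newly generated filter should trigger re-initialization. It is a minimal sketch and not the paper's implementation: the path coefficients, step size, filter length, the assumption of a perfectly known secondary path, and the helper `should_reinitialize` (a stand-in for the online clustering module, whose details the abstract does not give) are all hypothetical.

```python
import random


def fir(coeffs, history):
    """Dot product of FIR coefficients with a newest-first sample history."""
    return sum(c * h for c, h in zip(coeffs, history))


def run_fxnlms(w_init, primary, secondary, n_samples=5000, mu=0.1, eps=1e-8, seed=0):
    """Single-channel FxNLMS on white noise; returns (weights, error signal).

    Assumes the secondary-path estimate equals the true secondary path.
    """
    rng = random.Random(seed)
    taps = len(w_init)
    w = list(w_init)
    x_hist = [0.0] * max(taps, len(primary), len(secondary))  # reference, newest first
    y_hist = [0.0] * len(secondary)                           # control output history
    fx_hist = [0.0] * taps                                    # filtered-reference history
    errors = []
    for _ in range(n_samples):
        x_hist = [rng.gauss(0.0, 1.0)] + x_hist[:-1]
        d = fir(primary, x_hist)                    # disturbance at the error microphone
        y_hist = [fir(w, x_hist)] + y_hist[:-1]     # control signal
        e = d - fir(secondary, y_hist)              # residual error
        fx_hist = [fir(secondary, x_hist)] + fx_hist[:-1]
        norm = sum(v * v for v in fx_hist) + eps    # normalization term
        w = [wi + mu * e * fxi / norm for wi, fxi in zip(w, fx_hist)]
        errors.append(e)
    return w, errors


def should_reinitialize(candidate, centroid, threshold):
    """Hypothetical gate: re-initialize only if the new filter leaves the current cluster."""
    dist = sum((a - b) ** 2 for a, b in zip(candidate, centroid)) ** 0.5
    return dist > threshold


# Toy paths (assumed values, not measured responses).
PRIMARY = [0.0, 0.0, 0.9, 0.4]
SECONDARY = [0.0, 0.7, 0.3]

w, err = run_fxnlms([0.0] * 16, PRIMARY, SECONDARY)
early = sum(e * e for e in err[:200]) / 200
late = sum(e * e for e in err[-200:]) / 200
print(f"early MSE {early:.3e}, late MSE {late:.3e}")
```

In a hybrid scheme of this kind, `w_init` would come from the generated filter, and the gate would be consulted once per frame so that small filter variations leave the running adaptation untouched.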

Distributed Multichannel Active Noise Control with Asynchronous Communication

Jan 22, 2026

Abstract:Distributed multichannel active noise control (DMCANC) offers effective noise reduction across large spatial areas by distributing the computational load of centralized control to multiple low-cost nodes. Conventional DMCANC methods, however, typically assume synchronous communication and require frequent data exchange, resulting in high communication overhead. To enhance efficiency and adaptability, this work proposes an asynchronous communication strategy where each node executes a weight-constrained filtered-x LMS (WCFxLMS) algorithm and independently requests communication only when its local noise reduction performance degrades. Upon request, other nodes transmit the weight differences between their local control filters and the center point in WCFxLMS, which are then integrated to update both the control filter and the center point. This design enables nodes to operate asynchronously while preserving cooperative behavior. Simulation results demonstrate that the proposed asynchronous communication DMCANC (ACDMCANC) system maintains effective noise reduction with significantly reduced communication load, offering improved scalability for heterogeneous networks.

Co-Initialization of Control Filter and Secondary Path via Meta-Learning for Active Noise Control

Jan 20, 2026

Abstract:Active noise control (ANC) must adapt quickly when the acoustic environment changes, yet early performance is largely dictated by initialization. We address this with a Model-Agnostic Meta-Learning (MAML) co-initialization that jointly sets the control filter and the secondary-path model for FxLMS-based ANC while keeping the runtime algorithm unchanged. The initializer is pre-trained on a small set of measured paths using short two-phase inner loops that mimic identification followed by residual-noise reduction, and is applied by simply setting the learned initial coefficients. In an online secondary path modeling FxLMS testbed, it yields lower early-stage error, shorter time-to-target, reduced auxiliary-noise energy, and faster recovery after path changes than a baseline without re-initialization. The method provides a simple fast start for feedforward ANC under environment changes, requiring a small set of paths to pre-train.

Directional Selective Fixed-Filter Active Noise Control Based on a Convolutional Neural Network in Reverberant Environments

Jan 11, 2026

Abstract:Selective fixed-filter active noise control (SFANC) is a novel approach capable of mitigating noise with varying frequency characteristics. It offers faster response and greater computational efficiency compared to traditional adaptive algorithms. However, spatial factors, particularly the influence of the noise source location, are often overlooked. Some existing studies have explored the impact of the direction-of-arrival (DoA) of the noise source on ANC performance, but they are mostly limited to free-field conditions and do not consider the more complex indoor reverberant environments. To address this gap, this paper proposes a learning-based directional SFANC method that incorporates the DoA of the noise source in reverberant environments. In this framework, multiple reference signals are processed by a convolutional neural network (CNN) to estimate the azimuth and elevation angles of the noise source, as well as to identify the most appropriate control filter for effective noise cancellation. Compared to traditional adaptive algorithms, the proposed approach achieves superior noise reduction with shorter response times, even in the presence of reverberations.

DOA Estimation with Lightweight Network on LLM-Aided Simulated Acoustic Scenes

Nov 11, 2025

Abstract:Direction-of-Arrival (DOA) estimation is critical in spatial audio and acoustic signal processing, with wide-ranging real-world applications. Most existing DOA models are trained on synthetic data by convolving clean speech with room impulse responses (RIRs), which limits their generalizability due to constrained acoustic diversity. In this paper, we revisit DOA estimation using a recently introduced dataset constructed with the assistance of large language models (LLMs), which provides more realistic and diverse spatial audio scenes. We benchmark several representative neural-based DOA methods on this dataset and propose LightDOA, a lightweight DOA estimation model based on depthwise separable convolutions, specifically designed for multi-channel input in varying environments. Experimental results show that LightDOA achieves satisfactory accuracy and robustness across various acoustic scenes while maintaining low computational complexity. This study not only highlights the potential of spatial audio synthesized with the assistance of LLMs in advancing robust and efficient DOA estimation research, but also highlights LightDOA as an efficient solution for resource-constrained applications.
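
To make the "lightweight" claim concrete, the following sketch compares the weight count of a standard 2-D convolution with that of a depthwise separable one (a per-channel k x k depthwise filter followed by a 1 x 1 pointwise mix). The channel counts and kernel size are illustrative assumptions and are not taken from LightDOA; biases are ignored.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights in a depthwise k x k convolution plus a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in      # one k x k filter per input channel
    pointwise = c_in * c_out      # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Assumed layer shape for illustration only.
std = standard_conv_params(64, 128, 3)
sep = depthwise_separable_params(64, 128, 3)
print(std, sep, round(sep / std, 4))
```

For these assumed sizes the separable layer needs roughly 12% of the weights of the standard layer, which matches the general ratio 1/c_out + 1/k^2.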

Self-Boosted Weight-Constrained FxLMS: A Robustness Distributed Active Noise Control Algorithm Without Internode Communication

Jul 16, 2025

Abstract:Compared to the conventional centralized multichannel active noise control (MCANC) algorithm, which requires substantial computational resources, decentralized approaches exhibit higher computational efficiency but typically result in inferior noise reduction performance. To enhance performance, distributed ANC methods have been introduced, enabling information exchange among ANC nodes; however, the resulting communication latency often compromises system stability. To overcome these limitations, we propose a self-boosted weight-constrained filtered-reference least mean square (SB-WCFxLMS) algorithm for the distributed MCANC system without internode communication. The WCFxLMS algorithm is specifically designed to mitigate divergence issues caused by the internode cross-talk effect. The self-boosted strategy lets each ANC node independently adapt its constraint parameters based on its local noise reduction performance, thus ensuring effective noise cancellation without the need for inter-node communication. With the assistance of this mechanism, this approach significantly reduces both computational complexity and communication overhead. Numerical simulations employing real acoustic paths and compressor noise validate the effectiveness and robustness of the proposed system. The results demonstrate that our proposed method achieves satisfactory noise cancellation performance with minimal resource requirements.

Efficient Extreme Operating Condition Search for Online Relay Setting Calculation in Renewable Power Systems Based on Parallel Graph Neural Network

Jun 24, 2025

Abstract:The Extreme Operating Conditions Search (EOCS) problem is one of the key problems in relay setting calculation, which is used to ensure that the setting values of protection relays can adapt to the changing operating conditions of power systems over a period of time after deployment. The high penetration of renewable energy and the wide application of inverter-based resources make the operating conditions of renewable power systems more volatile, which urges the adoption of the online relay setting calculation strategy. However, the computation speed of existing EOCS methods based on local enumeration, heuristic algorithms, and mathematical programming cannot meet the efficiency requirement of online relay setting calculation. To reduce the time overhead, this paper, for the first time, proposes an efficient deep learning-based EOCS method suitable for online relay setting calculation. First, the power system information is formulated as four layers, i.e., a component parameter layer, a topological connection layer, an electrical distance layer, and a graph distance layer, which are fed into a parallel graph neural network (PGNN) model for feature extraction. Then, the four feature layers corresponding to each node are spliced and stretched, and then fed into the decision network to predict the extreme operating condition of the system. Finally, the proposed PGNN method is validated on the modified IEEE 39-bus and 118-bus test systems, where some of the synchronous generators are replaced by renewable generation units. The nonlinear fault characteristics of renewables are fully considered when computing fault currents. The experimental results show that the proposed PGNN method achieves higher accuracy than the existing methods in solving the EOCS problem. It also substantially reduces online computation time.

Fast Searching of Extreme Operating Conditions for Relay Protection Setting Calculation Based on Graph Neural Network and Reinforcement Learning

Jan 16, 2025

Abstract:Searching for the Extreme Operating Conditions (EOCs) is one of the core problems of power system relay protection setting calculation. The current methods based on brute-force search, heuristic algorithms, and mathematical programming can hardly meet the requirements of today's power systems in terms of computation speed due to the drastic changes in operating conditions induced by renewables and power electronics. This paper proposes an EOC fast search method, named Graph Dueling Double Deep Q Network (Graph D3QN), which combines graph neural network and deep reinforcement learning to address this challenge. First, the EOC search problem is modeled as a Markov decision process, where the information of the underlying power system is extracted using graph neural networks, so that the EOC of the system can be found via deep reinforcement learning. Then, a two-stage Guided Learning and Free Exploration (GLFE) training framework is constructed to accelerate the convergence speed of reinforcement learning. Finally, the proposed Graph D3QN method is validated through case studies of searching maximum fault current for relay protection setting calculation on the IEEE 39-bus and 118-bus systems. The experimental results demonstrate that Graph D3QN can reduce the computation time by 10 to 1000 times while guaranteeing the accuracy of the selected EOCs.
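
The name "Dueling Double Deep Q Network" combines two standard reinforcement-learning components, sketched below in plain Python: the dueling aggregation Q(s, a) = V(s) + A(s, a) - mean(A(s, ·)), and the Double DQN target, in which the online network selects the next action and the target network evaluates it. This illustrates the generic textbook forms only; the numbers are made up, and the graph-based feature extraction and GLFE training described in the abstract are not modeled.

```python
def dueling_q(value, advantages):
    """Combine a state value and per-action advantages into Q-values."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]


def double_dqn_target(reward, gamma, q_online_next, q_target_next, done):
    """Double DQN target: the online net picks the action, the target net scores it."""
    if done:
        return reward
    best = max(range(len(q_online_next)), key=q_online_next.__getitem__)
    return reward + gamma * q_target_next[best]


# Made-up numbers for illustration.
q_values = dueling_q(1.0, [0.0, 1.0, 2.0])
target = double_dqn_target(1.0, 0.5, [0.2, 0.9], [4.0, 2.0], done=False)
print(q_values, target)
```

Note that the target uses the target network's value for the action chosen by the online network (index 1 here), not the target network's own maximum, which is what reduces overestimation in Double DQN.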

Preventing output saturation in active noise control: An output-constrained Kalman filter approach

Dec 25, 2024

Abstract:The Kalman filter (KF)-based active noise control (ANC) system demonstrates superior tracking and faster convergence compared to the least mean square (LMS) method, particularly in dynamic noise cancellation scenarios. However, in environments with extremely high noise levels, the power of the control signal can exceed the system's rated output power due to hardware limitations, leading to output saturation and subsequent non-linearity. To mitigate this issue, a modified KF with an output constraint is proposed. In this approach, the disturbance treated as a measurement is re-scaled by a constraint factor, which is determined by the system's rated power, the secondary path gain, and the disturbance power. As a result, the output power of the system, i.e., the power of the control signal, is indirectly constrained within the maximum output of the system, ensuring stability. Simulation results indicate that the proposed algorithm not only achieves rapid suppression of dynamic noise but also effectively prevents non-linearity due to output saturation, highlighting its practical significance.
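
The abstract names the three quantities that determine the constraint factor but does not give the formula. The sketch below shows one plausible form under loudly stated assumptions: the secondary path is modeled as a pure amplitude gain g, full cancellation of a disturbance with power P_d then needs control power P_d / g^2, and the disturbance is scaled down just enough that this stays within the rated power. The function `constraint_factor` is hypothetical and should not be read as the paper's expression.

```python
import math


def constraint_factor(rated_power, secondary_gain, disturbance_power):
    """Hypothetical scale in (0, 1] applied to the disturbance amplitude.

    Assumes a pure-gain secondary path, so cancelling a disturbance of power
    P_d requires control power P_d / g**2.
    """
    max_cancellable = rated_power * secondary_gain ** 2
    if disturbance_power <= max_cancellable:
        return 1.0  # no constraint needed
    return math.sqrt(max_cancellable / disturbance_power)


# Assumed values: rated power 1.0, gain 0.5, disturbance power 1.0.
factor = constraint_factor(1.0, 0.5, 1.0)
required_power = (factor ** 2 * 1.0) / 0.5 ** 2  # control power after re-scaling
print(factor, required_power)
```

Under these assumptions the re-scaled disturbance demands exactly the rated power, which is the sense in which the output is constrained indirectly rather than by clipping the control signal.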

AudioSetCaps: An Enriched Audio-Caption Dataset using Automated Generation Pipeline with Large Audio and Language Models

Nov 28, 2024

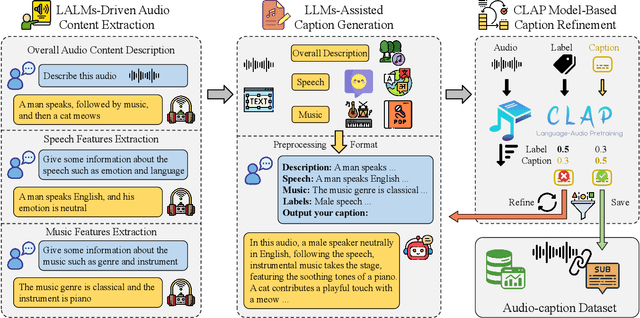

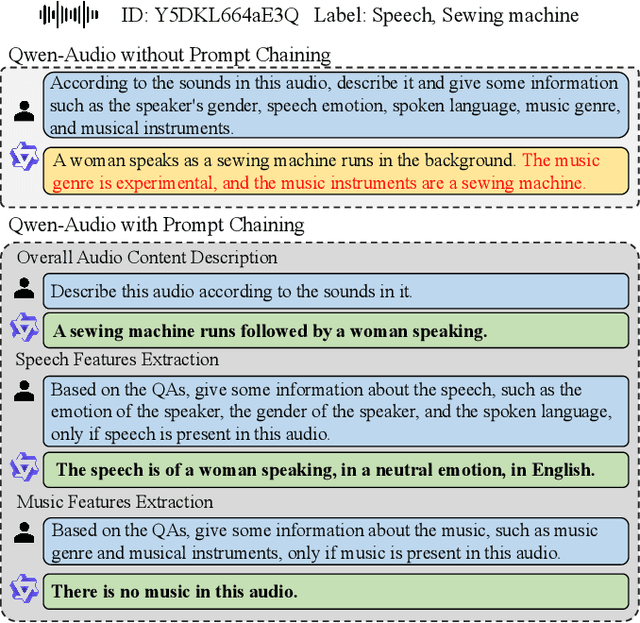

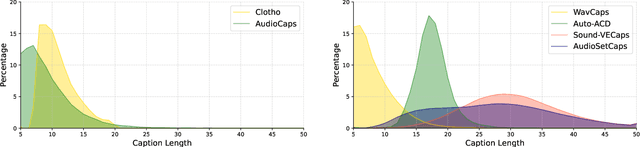

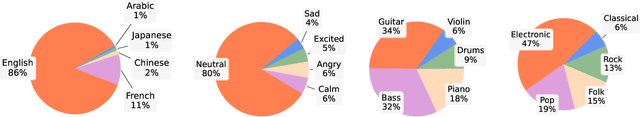

Abstract:With the emergence of audio-language models, constructing large-scale paired audio-language datasets has become essential yet challenging for model development, primarily due to the time-intensive and labour-heavy demands involved. While large language models (LLMs) have improved the efficiency of synthetic audio caption generation, current approaches struggle to effectively extract and incorporate detailed audio information. In this paper, we propose an automated pipeline that integrates audio-language models for fine-grained content extraction, LLMs for synthetic caption generation, and a contrastive language-audio pretraining (CLAP) model-based refinement process to improve the quality of captions. Specifically, we employ prompt chaining techniques in the content extraction stage to obtain accurate and fine-grained audio information, while we use the refinement process to mitigate potential hallucinations in the generated captions. Leveraging the AudioSet dataset and the proposed approach, we create AudioSetCaps, a dataset comprising 1.9 million audio-caption pairs, the largest audio-caption dataset at the time of writing. The models trained with AudioSetCaps achieve state-of-the-art performance on audio-text retrieval, with R@1 scores of 46.3% for text-to-audio and 59.7% for audio-to-text retrieval, and on automated audio captioning, with a CIDEr score of 84.8. As our approach has shown promising results with AudioSetCaps, we create another dataset containing 4.1 million synthetic audio-language pairs based on the YouTube-8M and VGGSound datasets. To facilitate research in audio-language learning, we have made our pipeline, datasets with 6 million audio-language pairs, and pre-trained models publicly available at https://github.com/JishengBai/AudioSetCaps.
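
For readers unfamiliar with the R@1 metric quoted above, the sketch below computes recall at k from a query-by-item similarity matrix: the fraction of queries whose matching item appears among the k most similar items. It assumes a simplified one-to-one pairing in which query i matches item i, and the similarity values are toy numbers rather than CLAP outputs.

```python
def recall_at_k(similarity, k):
    """Fraction of queries whose paired item (same index) ranks in the top k."""
    hits = 0
    for i, row in enumerate(similarity):
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(similarity)


# Toy similarity matrix: rows are text queries, columns are audio clips.
sim = [
    [0.9, 0.1, 0.2],
    [0.3, 0.2, 0.8],
    [0.1, 0.4, 0.7],
]
r1 = recall_at_k(sim, 1)
r3 = recall_at_k(sim, 3)
print(r1, r3)
```

Swapping the roles of rows and columns gives the audio-to-text direction; benchmarks with several captions per clip need a many-to-one version of the matching rule.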
