Dimitrios Mavroeidis

Royal Philips B.V., Eindhoven, The Netherlands

Improved Pancreatic Tumor Detection by Utilizing Clinically-Relevant Secondary Features

Aug 06, 2022

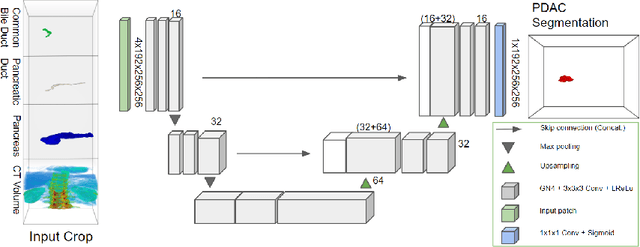

Abstract: Pancreatic cancer is one of the leading causes of cancer-related deaths worldwide. Despite the success of deep learning in computer-aided diagnosis and detection (CAD) methods, little attention has been paid to the detection of pancreatic cancer. We propose a method for detecting pancreatic tumors that utilizes clinically-relevant features in the surrounding anatomical structures, thereby aiming to better exploit the radiologist's knowledge compared to conventional deep learning approaches. To this end, we collect a new dataset consisting of 99 cases with pancreatic ductal adenocarcinoma (PDAC) and 97 control cases without any pancreatic tumor. Due to the growth pattern of pancreatic cancer, the tumor may not always be visible as a hypodense lesion; therefore, experts refer to the visibility of secondary external features that may indicate the presence of the tumor. We propose a method based on a U-Net-like deep CNN that exploits the following external secondary features: the pancreatic duct, the common bile duct, and the pancreas, along with a processed CT scan. Using these features, the model segments the pancreatic tumor if it is present. This segmentation-for-classification-and-localization approach achieves a performance of 99% sensitivity (one case missed) and 99% specificity, which realizes a 5% increase in sensitivity over the previous state-of-the-art method. The model additionally provides location information with reasonable accuracy and a shorter inference time compared to previous PDAC detection methods. These results offer a significant performance improvement and highlight the importance of incorporating the knowledge of the clinical expert when developing novel CAD methods.

Untrimmed Action Anticipation

Feb 08, 2022

Abstract: Egocentric action anticipation consists of predicting a future action the camera wearer will perform from egocentric video. While the task has recently attracted the attention of the research community, current approaches assume that the input videos are "trimmed", meaning that a short video sequence is sampled a fixed time before the beginning of the action. We argue that, despite the recent advances in the field, trimmed action anticipation has limited applicability in real-world scenarios, where it is important to deal with "untrimmed" video inputs and it cannot be assumed that the exact moment at which the action will begin is known at test time. To overcome such limitations, we propose an untrimmed action anticipation task, which, similarly to temporal action detection, assumes that the input video is untrimmed at test time, while still requiring predictions to be made before the actions actually take place. We design an evaluation procedure for methods designed to address this novel task, and compare several baselines on the EPIC-KITCHENS-100 dataset. Experiments show that the performance of current models designed for trimmed action anticipation is very limited and that more research on this task is required.

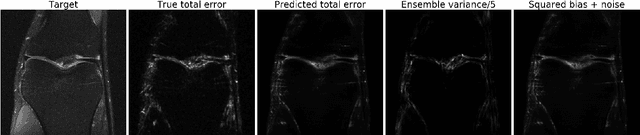

Simple and Accurate Uncertainty Quantification from Bias-Variance Decomposition

Feb 13, 2020

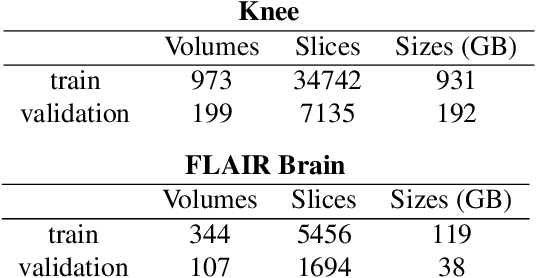

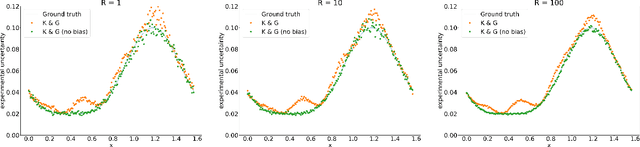

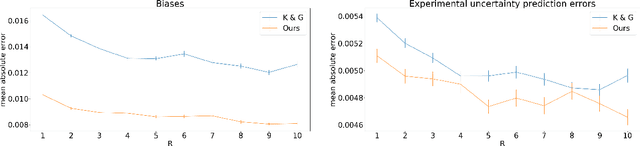

Abstract: Accurate uncertainty quantification is crucial for many applications where decisions are in play. Examples include medical diagnosis and self-driving vehicles. We propose a new method that is based directly on the bias-variance decomposition, where the parameter uncertainty is given by the variance of an ensemble divided by the number of members in the ensemble, and the aleatoric uncertainty plus the squared bias is estimated by training a separate model that is regressed directly on the errors of the predictor. We demonstrate that this simple sequential procedure provides much more accurate uncertainty estimates than the current state-of-the-art on two MRI reconstruction tasks.
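The two quantities named in the abstract above can be illustrated with a minimal NumPy sketch. Several choices here are assumptions and not taken from the paper: the function names are hypothetical, the sample variance uses `ddof=1`, the "errors of the predictor" are taken to be squared errors, and a linear least-squares fit stands in for the separate error model.

```python
import numpy as np

def parameter_uncertainty(ensemble_preds):
    """Variance across ensemble members divided by the number of members.

    ensemble_preds has shape (n_members, n_points). Using ddof=1 is an
    assumption of this sketch.
    """
    preds = np.asarray(ensemble_preds, dtype=float)
    n_members = preds.shape[0]
    return preds.var(axis=0, ddof=1) / n_members

def fit_error_model(x, squared_errors):
    """Fit a linear model of squared error against a 1-D input x.

    A linear fit is only a stand-in for the separate model trained on
    the predictor's errors.
    """
    x = np.asarray(x, dtype=float)
    design = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(design, np.asarray(squared_errors, dtype=float), rcond=None)
    return coef

def predict_error(coef, x):
    """Predicted squared error, clipped at zero so it stays a valid variance."""
    x = np.asarray(x, dtype=float)
    design = np.column_stack([np.ones_like(x), x])
    return np.clip(design @ coef, 0.0, None)

# Toy usage: two ensemble members evaluated at two points.
param_unc = parameter_uncertainty([[1.0, 2.0], [3.0, 2.0]])
coef = fit_error_model([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
residual_unc = predict_error(coef, [4.0])
```

Adding the two terms to obtain a total uncertainty would follow the bias-variance decomposition, but how the paper combines them is not stated in the abstract.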

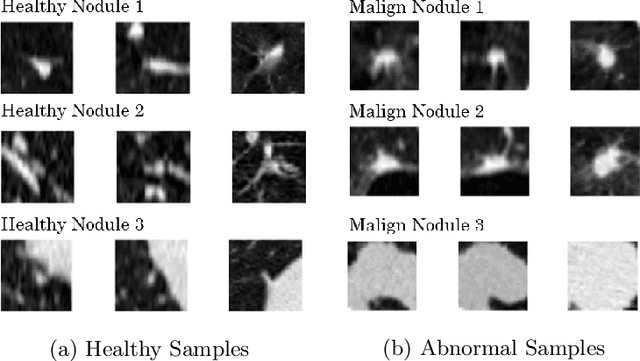

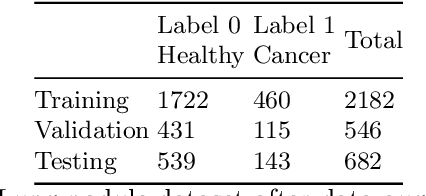

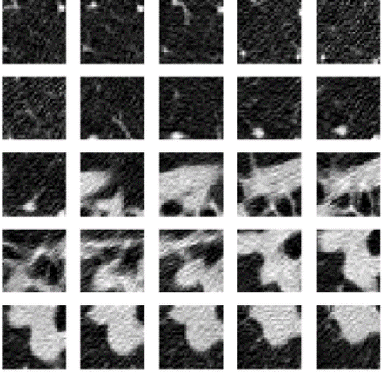

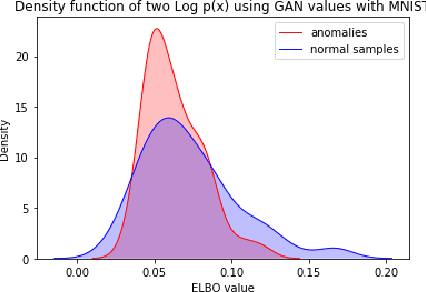

Anomaly Detection for imbalanced datasets with Deep Generative Models

Nov 02, 2018

Abstract: Many important data analysis applications involve severely imbalanced datasets with respect to the target variable. A typical example is medical image analysis, where positive samples are scarce, while performance is commonly estimated against the correct detection of these positive examples. We approach this challenge by formulating the problem as anomaly detection with generative models. We train a generative model without supervision on the 'negative' (common) datapoints and use this model to estimate the likelihood of unseen data. A successful model allows us to detect the 'positive' cases as low-likelihood datapoints. In this position paper, we present the use of state-of-the-art deep generative models (GAN and VAE) for the estimation of the likelihood of the data. Our results show that, on the one hand, both GANs and VAEs are able to separate the 'positive' and 'negative' samples in the MNIST case. On the other hand, for the NLST case, neither GANs nor VAEs were able to capture the complexity of the data and discriminate anomalies at the level that this task requires. These results show that even though a number of successes have been presented in the literature for using generative models in similar applications, further challenges remain for broad successful implementation.
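The likelihood-thresholding idea in the abstract above can be sketched without a deep model. In this illustrative example a diagonal Gaussian replaces the GAN or VAE as the density estimator, which is a deliberate simplification; the function names, the synthetic data, and the 1st-percentile threshold are all assumptions.

```python
import numpy as np

def fit_gaussian(negatives):
    """Fit a diagonal Gaussian to the 'negative' (common) datapoints only."""
    x = np.asarray(negatives, dtype=float)
    return x.mean(axis=0), x.var(axis=0) + 1e-6  # small floor avoids division by zero

def log_likelihood(x, mean, var):
    """Per-sample log-likelihood under the fitted diagonal Gaussian."""
    x = np.asarray(x, dtype=float)
    return -0.5 * np.sum(np.log(2 * np.pi * var) + (x - mean) ** 2 / var, axis=1)

def flag_anomalies(x, mean, var, threshold):
    """Mark samples whose log-likelihood falls below the threshold."""
    return log_likelihood(x, mean, var) < threshold

# Train on synthetic negatives only; no positive sample is seen during fitting.
rng = np.random.default_rng(0)
negatives = rng.normal(0.0, 1.0, size=(500, 2))
mean, var = fit_gaussian(negatives)

# Assumed rule: flag anything less likely than 99% of the training negatives.
threshold = np.percentile(log_likelihood(negatives, mean, var), 1)

far_point_flagged = flag_anomalies(np.array([[10.0, 10.0]]), mean, var, threshold)
typical_point_flagged = flag_anomalies(np.array([[0.0, 0.0]]), mean, var, threshold)
```

A point far from the training distribution receives a very low likelihood and is flagged, while a typical point is not. As the abstract notes, this separation is much harder to achieve on complex data such as NLST.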

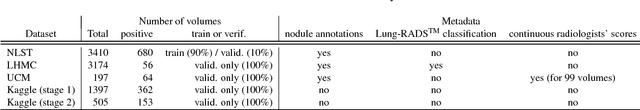

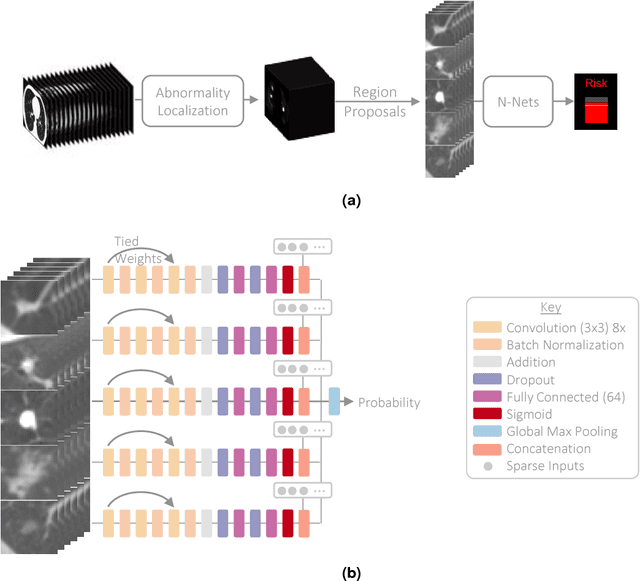

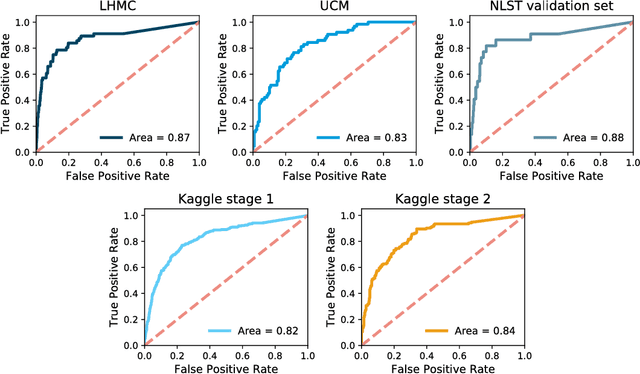

Towards radiologist-level cancer risk assessment in CT lung screening using deep learning

Apr 05, 2018

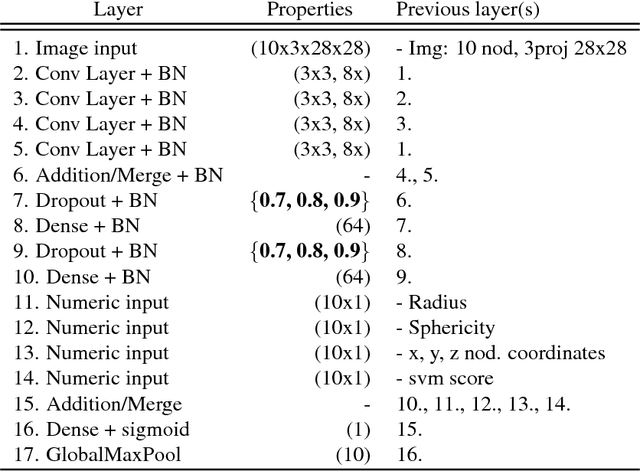

Abstract: Lung cancer is the leading cause of cancer mortality in the US, responsible for more deaths than breast, prostate, colon and pancreas cancer combined. Recently, it has been demonstrated that screening those at high risk for lung cancer with low-dose computed tomography (CT) of the chest can significantly reduce this death rate. The process of evaluating a chest CT scan involves the identification of nodules that are contained within a scan as well as the evaluation of the likelihood that a nodule is malignant based on its imaging characteristics. This has motivated researchers to develop image analysis research tools, such as nodule detectors and nodule classifiers, that can assist radiologists in making accurate assessments of the patient's cancer risk. In this work, we propose a two-stage framework that can assess the lung cancer risk associated with a low-dose chest CT scan. In the first stage, our framework employs a nodule detector; in the second stage, we use both the image area around the nodules and nodule features as inputs to a neural network that estimates the malignancy risk of the whole CT scan. The proposed approach: (a) has better performance than the PanCan Risk Model, a widely accepted method for cancer malignancy assessment, achieving around 7% better Area Under Curve score in two independent datasets we have employed; (b) has comparable performance to radiologists in estimating cancer risk at patient level; (c) employs a novel multi-instance weakly-labeled approach to train the deep learning network that requires confirmed cancer diagnosis only at the patient level (not at the nodule level); and (d) employs a large number of lung CT scans (more than 8000) from heterogeneous data sources (NLST, LHMC, and Kaggle competition data) to validate and compare model performance. AUC scores for our model, evaluated against confirmed cancer diagnosis, range from 82% to 90%.

Towards an automated method based on Iterated Local Search optimization for tuning the parameters of Support Vector Machines

Jul 11, 2017

Abstract: We provide preliminary details and formulation of an optimization strategy currently under development that is able to automatically tune the parameters of a Support Vector Machine over new datasets. The optimization strategy is a heuristic based on Iterated Local Search, a modification of classic hill climbing which iterates calls to a local search routine.
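A generic Iterated Local Search loop, as described in the abstract above, might look like the following sketch. It is not the authors' formulation: the toy objective stands in for an SVM validation error over hypothetical `(log C, log gamma)` parameters, and the step size, perturbation strength, and accept-if-better rule are assumptions.

```python
import random

def local_search(objective, point, step=0.1, max_iters=200, rng=random):
    """Hill climbing: propose small random moves and keep only improvements."""
    best, best_val = list(point), objective(point)
    for _ in range(max_iters):
        candidate = [v + rng.uniform(-step, step) for v in best]
        value = objective(candidate)
        if value < best_val:
            best, best_val = candidate, value
    return best, best_val

def iterated_local_search(objective, start, n_restarts=30, kick=1.0, seed=0):
    """Repeatedly perturb the incumbent and re-run local search from there."""
    rng = random.Random(seed)
    best, best_val = local_search(objective, start, rng=rng)
    for _ in range(n_restarts):
        # Perturbation: a larger random jump meant to escape the current basin.
        perturbed = [v + rng.uniform(-kick, kick) for v in best]
        candidate, value = local_search(objective, perturbed, rng=rng)
        # Acceptance criterion (assumed): keep the new optimum only if better.
        if value < best_val:
            best, best_val = candidate, value
    return best, best_val

def toy_validation_error(params):
    """Hypothetical stand-in for cross-validated SVM error; minimum at (1, -2)."""
    log_c, log_gamma = params
    return (log_c - 1.0) ** 2 + (log_gamma + 2.0) ** 2

best_params, best_error = iterated_local_search(toy_validation_error, [0.0, 0.0])
```

In a real tuning run the objective would train and validate an SVM for each candidate, so the number of objective calls (here about 200 per local search) would need to be far smaller.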

Multiscale Event Detection in Social Media

Feb 06, 2015

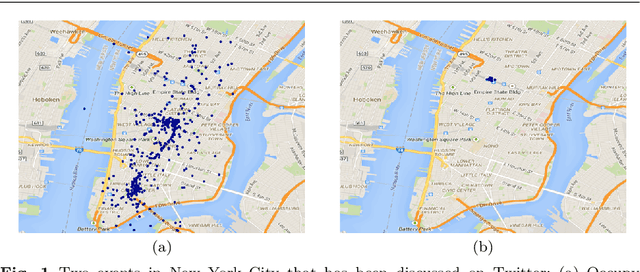

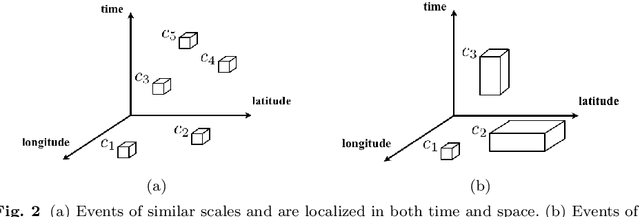

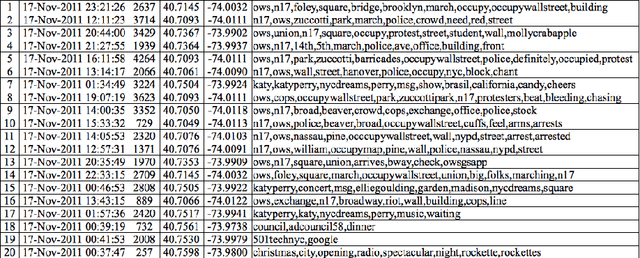

Abstract: Event detection has been one of the most important research topics in social media analysis. Most of the traditional approaches detect events based on fixed temporal and spatial resolutions, while in reality events of different scales usually occur simultaneously; that is, they span different intervals in time and space. In this paper, we propose a novel approach towards multiscale event detection using social media data, which takes into account different temporal and spatial scales of events in the data. Specifically, we explore the properties of the wavelet transform, which is a well-developed multiscale transform in signal processing, to enable automatic handling of the interaction between temporal and spatial scales. We then propose a novel algorithm to compute a data similarity graph at appropriate scales and detect events of different scales simultaneously by a single graph-based clustering process. Furthermore, we present a spatiotemporal statistical analysis of the noisy information present in the data stream, which allows us to define a novel term-filtering procedure for the proposed event detection algorithm and helps us study its behavior using simulated noisy data. Experimental results on both synthetically generated data and real-world data collected from Twitter demonstrate the meaningfulness and effectiveness of the proposed approach. Our framework further extends to numerous application domains that involve multiscale and multiresolution data analysis.
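To give a feel for what a multiscale view of a time series means, the sketch below applies an unnormalized Haar wavelet decomposition to a toy sequence of per-interval term counts. The choice of the Haar wavelet, the function names, and the data are assumptions made for illustration; the abstract does not specify which wavelet or representation the paper uses.

```python
import numpy as np

def haar_step(signal):
    """One Haar level: pairwise averages (coarse view) and half-differences (detail)."""
    pairs = np.asarray(signal, dtype=float).reshape(-1, 2)
    approx = pairs.mean(axis=1)
    detail = (pairs[:, 0] - pairs[:, 1]) / 2.0
    return approx, detail

def haar_multiscale(signal, levels):
    """Decompose a signal over several scales.

    The signal length must be divisible by 2**levels.
    """
    approximations, details = [], []
    current = np.asarray(signal, dtype=float)
    for _ in range(levels):
        current, detail = haar_step(current)
        approximations.append(current)
        details.append(detail)
    return approximations, details

# Toy term counts over eight consecutive time intervals.
counts = [1, 1, 3, 3, 0, 0, 8, 0]
approximations, details = haar_multiscale(counts, levels=2)
```

The short burst in the seventh interval shows up as a large detail coefficient at the finest scale, while the slower rise across the first four intervals only appears in the detail at the coarser scale.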
