Debasish Ghose

RIS-Assisted MIMO CV-QKD at THz Frequencies: Channel Estimation and SKR Analysis

Dec 25, 2024

Abstract: In this paper, a multiple-input multiple-output (MIMO) wireless system incorporating a reconfigurable intelligent surface (RIS) to efficiently operate at terahertz (THz) frequencies is considered. The transmitter, Alice, employs continuous-variable quantum key distribution (CV-QKD) to communicate secret keys to the receiver, Bob, which utilizes either homodyne or heterodyne detection. Bob applies the least-squares approach to estimate the effective MIMO channel gain matrix prior to receiving the secret key, and this estimate is made available to Alice via an error-free feedback channel. An eavesdropper, Eve, is assumed to employ a collective Gaussian entanglement attack on the feedback channel to obtain the estimated channel state information. We present a novel closed-form expression for the secret key rate (SKR) performance of the proposed RIS-assisted THz CV-QKD system. The effect of various system parameters, such as the number of RIS elements and their phase configurations, the channel estimation error, and the detector noise, on the SKR performance is studied via numerical evaluation of the derived formula. It is demonstrated that the RIS contributes to larger SKR for larger link distances, and that heterodyne detection is preferable to homodyne detection at lower pilot symbol powers.
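As a rough illustration of the least-squares step mentioned in this abstract, the following is a minimal sketch of generic pilot-based MIMO channel estimation. It assumes a classical linear model Y = HX + N with a known pilot matrix X; the function name, dimensions, and noise level are illustrative assumptions, and the sketch does not model the RIS, the THz channel, or the quantum-domain details of the paper.

```python
import numpy as np

def ls_channel_estimate(Y, X):
    """Least-squares estimate of H from Y = H X + N.

    Y: received pilots, shape (n_rx, n_pilots)
    X: known transmitted pilots, shape (n_tx, n_pilots), full row rank
    """
    # H_hat = Y X^H (X X^H)^{-1}, i.e. Y times the pseudo-inverse of X
    return Y @ np.linalg.pinv(X)

def complex_gaussian(rng, shape):
    """Unit-variance circularly symmetric complex Gaussian samples."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

# Hypothetical dimensions and noise level, chosen only for illustration
rng = np.random.default_rng(0)
n_tx, n_rx, n_pilots = 2, 3, 8
H = complex_gaussian(rng, (n_rx, n_tx))         # "true" channel (assumed)
X = complex_gaussian(rng, (n_tx, n_pilots))     # pilot symbols (assumed)
Y = H @ X + 0.01 * complex_gaussian(rng, (n_rx, n_pilots))

H_hat = ls_channel_estimate(Y, X)
print("estimation error (Frobenius norm):", np.linalg.norm(H_hat - H))
```

In the noiseless case the estimate recovers H exactly, which is a convenient sanity check; with noise, the residual error plays the role of the channel estimation error whose effect on the SKR the abstract refers to.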

Video Generation with Learned Action Prior

Jun 20, 2024

Abstract: Stochastic video generation is particularly challenging when the camera is mounted on a moving platform, as camera motion interacts with observed image pixels, creating complex spatio-temporal dynamics and making the problem partially observable. Existing methods typically address this by focusing on raw pixel-level image reconstruction without explicitly modelling camera motion dynamics. We propose a solution by considering camera motion or action as part of the observed image state, modelling both image and action within a multi-modal learning framework. We introduce three models: Video Generation with Learning Action Prior (VG-LeAP), which treats the image-action pair as an augmented state generated from a single latent stochastic process and uses variational inference to learn the image-action latent prior; Causal-LeAP, which establishes a causal relationship between action and the observed image frame at time $t$, learning an action prior conditioned on the observed image states; and RAFI, which integrates the augmented image-action state concept into flow matching with diffusion generative processes, demonstrating that this action-conditioned image generation concept can be extended to other diffusion-based models. We emphasize the importance of multi-modal training in partially observable video generation problems through detailed empirical studies on our new video action dataset, RoAM.

Action-conditioned video data improves predictability

Apr 08, 2024

Abstract: Long-term video generation and prediction remain challenging tasks in computer vision, particularly in partially observable scenarios where cameras are mounted on moving platforms. The interaction between observed image frames and the motion of the recording agent introduces additional complexities. To address these issues, we introduce the Action-Conditioned Video Generation (ACVG) framework, a novel approach that investigates the relationship between actions and generated image frames through a deep dual Generator-Actor architecture. ACVG generates video sequences conditioned on the actions of robots, enabling exploration and analysis of how vision and action mutually influence one another in dynamic environments. We evaluate the framework's effectiveness on an indoor robot motion dataset that consists of sequences of image frames along with the sequences of actions taken by the robotic agent, conducting a comprehensive empirical study comparing ACVG to other state-of-the-art frameworks along with a detailed ablation study.

Optimal Kinematic Design of a Robotic Lizard using Four-Bar and Five-Bar Mechanisms

Aug 16, 2023

Abstract: The objective of this work is to design a mechanism that mimics the motion of a common house gecko. The body of the robot is designed using four five-bar mechanisms (2-RRRRR and 2-RRPRR) and the legs are designed using four four-bar mechanisms. The 2-RRRRR five-bar mechanisms form the head and tail of the robotic lizard. The 2-RRPRR five-bar mechanisms form the left and right sides of the body of the robotic lizard. The four five-bar mechanisms are actuated by only four rotary actuators. Of these, two actuators control the head movements and the other two control the tail movements. Each RRPRR five-bar mechanism is controlled by one actuator from the head five-bar mechanism and one from the tail five-bar mechanism. A tension spring connects each active link to a link in the four-bar mechanism. When the robot is actuated, the head, tail, and body move, and simultaneously each leg moves accordingly. This kind of actuation, where the motion is transferred from the body of the robot to the legs, is the novelty in our design. The dimensional synthesis of the robotic lizard is carried out and presented. Then the forward and inverse kinematics of the mechanism and the identification of configuration-space singularities for the robot are presented. The gait exhibited by the gecko is studied and then simulated. A computer-aided design of the robotic lizard is created and a prototype is made by 3D printing the parts. The prototype is controlled using an Arduino UNO as the micro-controller. Finally, experimental results based on the earlier gait analysis are presented. Forward walking and turning motions are performed and snapshots are presented.

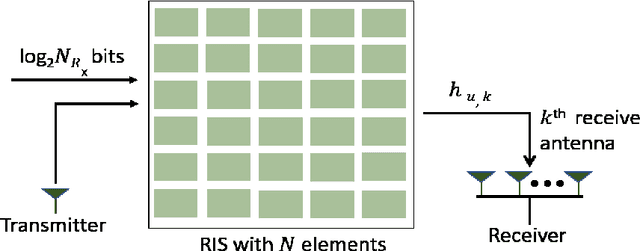

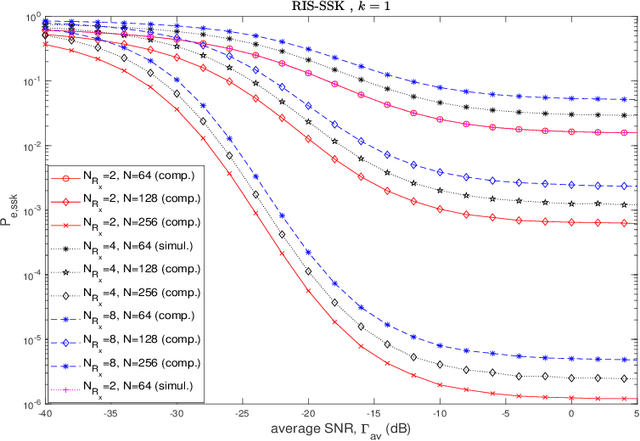

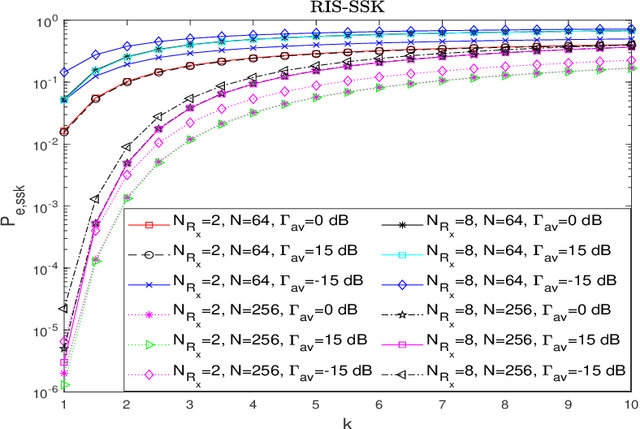

RIS-Aided Index Modulation with Greedy Detection over Rician Fading Channels

Jul 18, 2023

Abstract: Index modulation (IM) schemes for reconfigurable intelligent surface (RIS)-assisted systems are envisioned as promising technologies for fifth-generation-advanced and sixth-generation (6G) wireless communication systems to enhance various system capabilities such as coverage area and network capacity. In this paper, we consider a receive diversity RIS-assisted wireless communication system employing IM schemes, namely, space-shift keying (SSK) for binary modulation and spatial modulation (SM) for M-ary modulation for data transmission. The RIS lies in close proximity to the transmitter, and the transmitted data is subjected to a fading environment with a prominent line-of-sight component modeled by a Rician distribution. A receiver structure based on a greedy detection rule is employed to select the receive diversity branch with the highest received signal energy for demodulation. The performance of the considered system is evaluated by obtaining a series-form expression for the probability of erroneous index detection (PED) of the considered target antenna using a characteristic function approach. In addition, closed-form and asymptotic expressions at high and low signal-to-noise ratios (SNRs) for the bit error rate (BER) for the SSK-based system, and the SM-based system employing M-ary phase-shift keying and M-ary quadrature amplitude modulation schemes, are derived. The dependencies of the system performance on the various parameters are corroborated via numerical results. The asymptotic expressions and results of PED and BER at high and low SNR values lead to the observation of a performance saturation and the presence of an SNR value as a point of inflection, which is attributed to the greedy detector's structure.
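The greedy detection rule described in this abstract, selecting the receive branch with the highest received signal energy, can be sketched in a few lines. This is a minimal illustration only: the function name and the sample values are hypothetical, and the sketch omits the RIS phase configuration, the Rician channel, and the subsequent symbol demodulation.

```python
import numpy as np

def greedy_select_branch(y):
    """Return the index of the receive branch with the largest energy |y_k|^2."""
    energies = np.abs(np.asarray(y)) ** 2
    return int(np.argmax(energies))

# Hypothetical received samples on three diversity branches
y = np.array([0.1 + 0.2j, -1.0 + 0.5j, 0.3 - 0.1j])
print(greedy_select_branch(y))  # branch energies are 0.05, 1.25, 0.10 -> prints 1
```

An index detection error occurs whenever noise or fading makes a non-target branch carry the most energy, which is the event the PED expression in the abstract quantifies.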

Action-conditioned Deep Visual Prediction with RoAM, a new Indoor Human Motion Dataset for Autonomous Robots

Jun 28, 2023

Abstract: With the increasing adoption of robots across industries, it is crucial to focus on developing advanced algorithms that enable robots to anticipate, comprehend, and plan their actions effectively in collaboration with humans. We introduce the Robot Autonomous Motion (RoAM) video dataset, which is collected with a custom-made TurtleBot3 Burger robot in a variety of indoor environments, recording various human motions from the robot's ego-vision. The dataset also includes synchronized records of the LiDAR scan and all control actions taken by the robot as it navigates around static and moving human agents. This unique dataset provides an opportunity to develop and benchmark new visual prediction frameworks that can predict future image frames based on the action taken by the recording agent in partially observable scenarios or cases where the imaging sensor is mounted on a moving platform. We have benchmarked the dataset on our novel deep visual prediction framework called ACPNet, where the approximated future image frames are also conditioned on the action taken by the robot, and demonstrated its potential for incorporating robot dynamics into the video prediction paradigm for mobile robotics and autonomous navigation research.

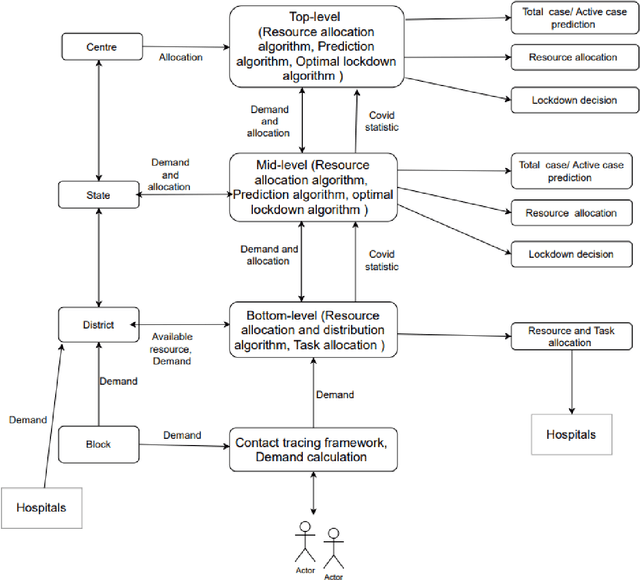

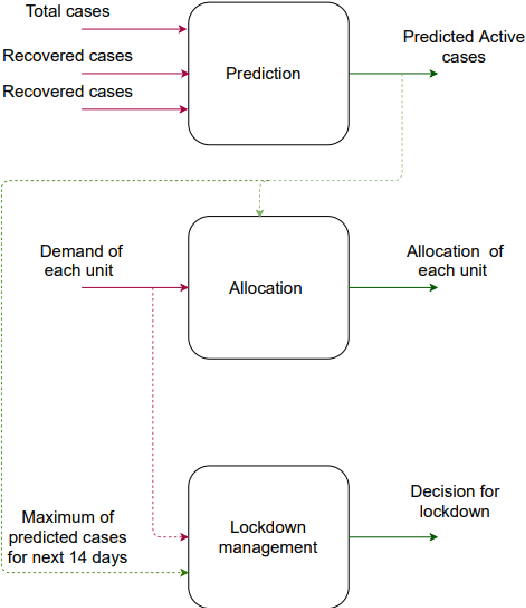

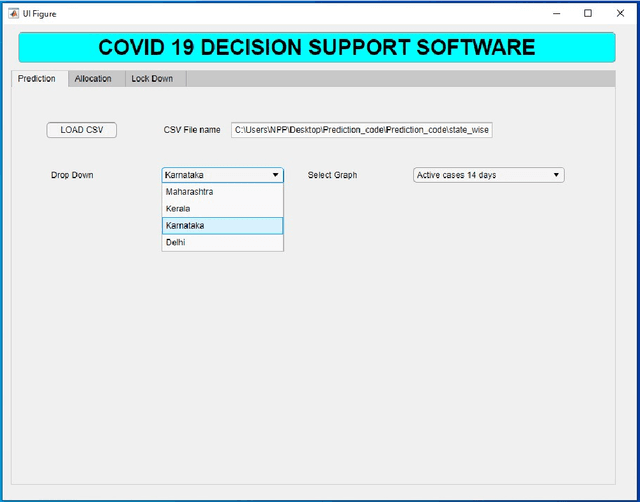

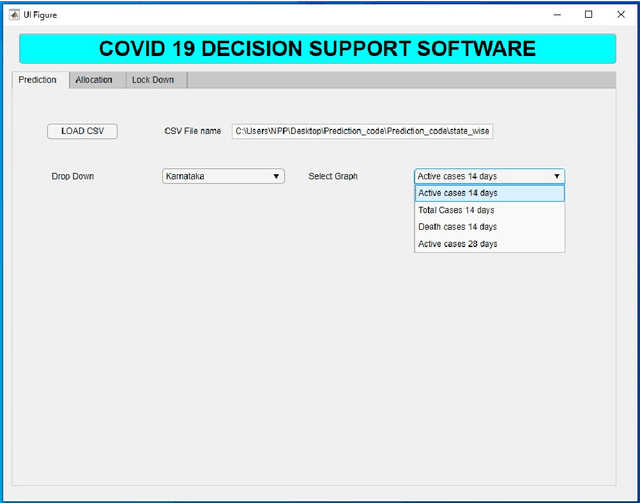

Development of Decision Support System for Effective COVID-19 Management

Mar 12, 2022

Abstract: This paper discusses a Decision Support System (DSS) for case prediction, resource allocation, and lockdown management for managing COVID-19 at different levels of a government authority. The algorithms incorporated in the DSS are based on a data-driven modeling approach and are independent of the physical parameters of the region; hence the proposed DSS is applicable to any area. Allocation and lockdown decisions are made based on the predicted active cases, the demand of lower-level units, and the total availability. A MATLAB-based GUI is developed based on the proposed DSS and could be implemented by the local authority.

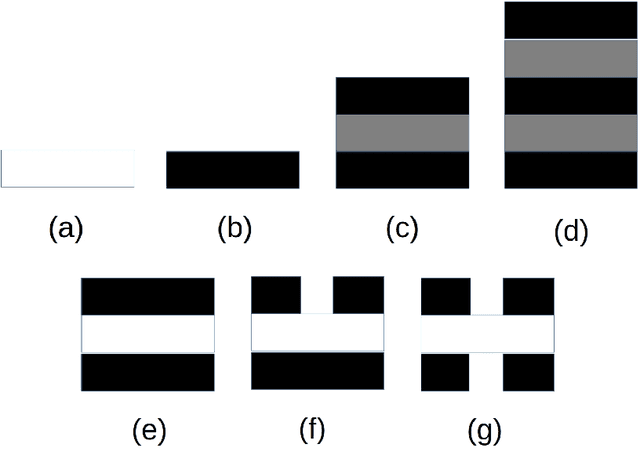

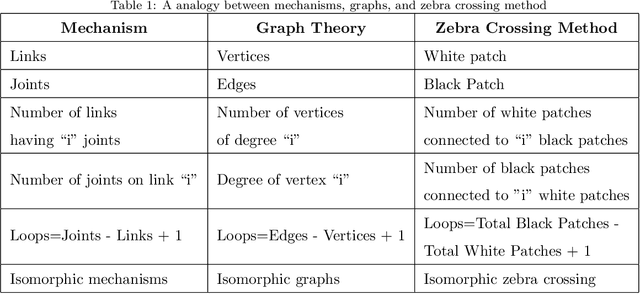

Degrees of Freedom Analysis of Mechanisms using the New Zebra Crossing Method

Jan 07, 2022

Abstract: Mobility, which is a basic property of a mechanism, has to be analyzed to find the degrees of freedom. A quick method for calculating the degrees of freedom of a mechanism is proposed in this work. The mechanism is represented in a way that resembles a zebra crossing. An algorithm is proposed to determine the mobility from the zebra crossing diagram. This algorithm takes into account the number of patches between the black patches, the number of joints attached to the fixed link, and the number of loops in the mechanism. A number of cases are discussed in which the widely used classical Kutzbach-Grubler formula fails to give the desired results.
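For reference, the classical planar Kutzbach-Grubler count that the abstract uses as its baseline is M = 3(n - 1) - 2 j1 - j2, where n is the number of links including the fixed link, j1 the number of one-degree-of-freedom joints, and j2 the number of two-degree-of-freedom joints. The sketch below implements only this classical formula, not the zebra crossing method; the function name is an illustrative choice, and the over-constrained example is a standard textbook case rather than one taken from the paper.

```python
def kutzbach_mobility(n_links, n_one_dof_joints, n_two_dof_joints=0):
    """Planar Kutzbach-Grubler mobility: M = 3(n - 1) - 2*j1 - j2.

    n_links counts every link, including the fixed (ground) link.
    """
    return 3 * (n_links - 1) - 2 * n_one_dof_joints - n_two_dof_joints

print(kutzbach_mobility(4, 4))  # four-bar linkage: 1
print(kutzbach_mobility(5, 5))  # five-bar linkage: 2

# Parallelogram four-bar with one extra parallel crank (5 links, 6 revolute
# joints): the formula gives 0, although the linkage actually moves with 1 DOF.
print(kutzbach_mobility(5, 6))  # 0
```

The last call shows the kind of special-geometry case in which the count alone misreports the mobility, since the formula ignores link dimensions.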

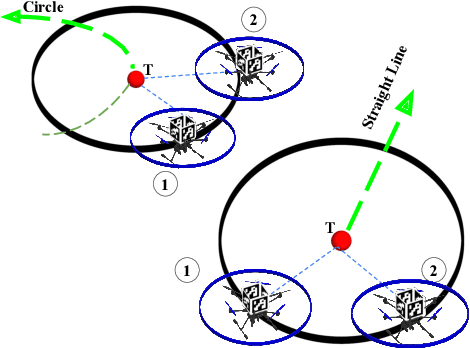

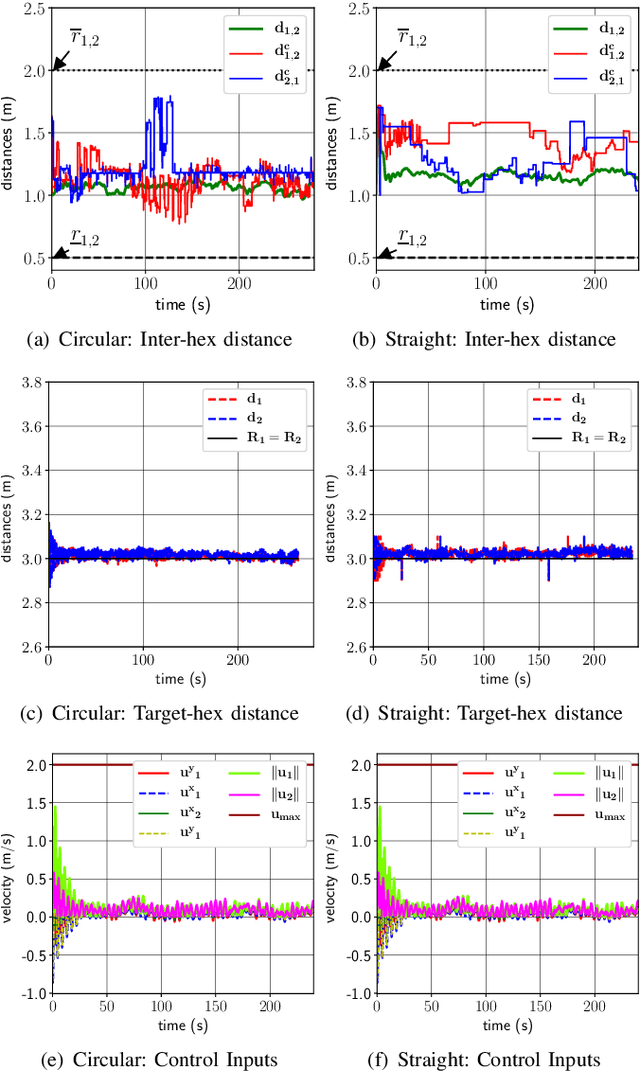

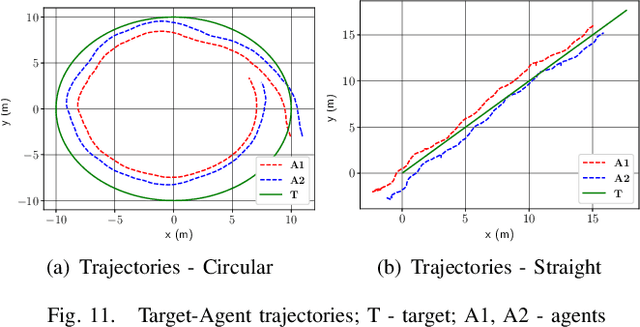

UAV Formation Preservation for Target Tracking Applications

Dec 06, 2021

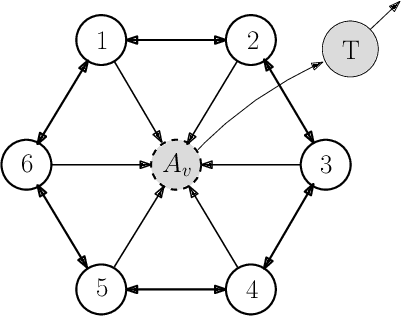

Abstract: This paper presents a collaborative target tracking application with multiple agents and a formulation of an agent-formation problem with desired inter-agent distances and specified bounds. We propose a barrier Lyapunov function-based distributed control law to preserve the formation for target tracking and assess its stability using a kinematic model. Numerical results with this model are presented to demonstrate the advantages of the proposed control over a quadratic Lyapunov function-based control. A concluding evaluation using experimental ROS simulations is presented to illustrate the applicability of the proposed control approach to a multi-rotor system and a target executing straight-line and circular motion.
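To illustrate the contrast this abstract draws between barrier and quadratic Lyapunov functions, one commonly used log-type barrier Lyapunov function is shown below next to a quadratic one. This is a generic textbook form given as an assumption; the abstract does not state which function the paper uses. Here $e$ denotes a scalar error (for example, the deviation of an inter-agent distance from its desired value) and $k_b > 0$ is the assumed bound on $|e|$.

$$
V_b(e) = \frac{1}{2}\ln\frac{k_b^2}{k_b^2 - e^2}, \quad |e| < k_b,
\qquad
V_q(e) = \frac{1}{2}e^2 .
$$

Since $V_b(e) \to \infty$ as $|e| \to k_b$, a control law that keeps $V_b$ bounded along trajectories keeps the error strictly inside the bound, whereas a bounded $V_q$ gives no such guarantee for a prescribed $k_b$.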

Autonomous Cooperative Multi-Vehicle System for Interception of Aerial and Stationary Targets in Unknown Environments

Sep 01, 2021

Abstract: This paper presents the design, development, and testing of hardware-software systems by the IISc-TCS team for Challenge 1 of the Mohammed Bin Zayed International Robotics Challenge 2020. The goal of Challenge 1 was to grab a ball suspended from a moving and maneuvering UAV and pop balloons anchored to the ground, using suitable manipulators. The important tasks carried out to address this challenge include the design and development of a hardware system with efficient grabbing and popping mechanisms, considering the restrictions in volume and payload, design of accurate target interception algorithms using visual information suitable for outdoor environments, and development of a software architecture for dynamic multi-agent aerial systems performing complex dynamic missions. In this paper, a single-degree-of-freedom manipulator with an attached end-effector is designed for grabbing and popping, and robust algorithms are developed for the interception of targets in an uncertain environment. Vision-based guidance and tracking laws are proposed based on the concept of pursuit engagement and artificial potential function. The software architecture presented in this work is an Operation Management System (OMS) architecture that allocates static and dynamic tasks collaboratively among multiple UAVs to perform any given mission. An important aspect of this work is that all the systems developed were designed to operate in completely autonomous mode. A detailed description of the architecture, along with simulations of the complete challenge in the Gazebo environment and field experiment results, is also included in this work. The proposed hardware-software system is particularly useful for counter-UAV systems and can also be modified to cater to several other applications.
