Davide Taibi

The Invisible Hand of AI Libraries Shaping Open Source Projects and Communities

Jan 05, 2026Abstract:In the early 1980s, Open Source Software emerged as a revolutionary concept amidst the dominance of proprietary software. What began as a revolutionary idea has now become the cornerstone of computer science. Amidst OSS projects, AI is increasing its presence and relevance. However, despite the growing popularity of AI, its adoption and impacts on OSS projects remain underexplored. We aim to assess the adoption of AI libraries in Python and Java OSS projects and examine how they shape development, including the technical ecosystem and community engagement. To this end, we will perform a large-scale analysis on 157.7k potential OSS repositories, employing repository metrics and software metrics to compare projects adopting AI libraries against those that do not. We expect to identify measurable differences in development activity, community engagement, and code complexity between OSS projects that adopt AI libraries and those that do not, offering evidence-based insights into how AI integration reshapes software development practices.

SQuaD: The Software Quality Dataset

Nov 14, 2025

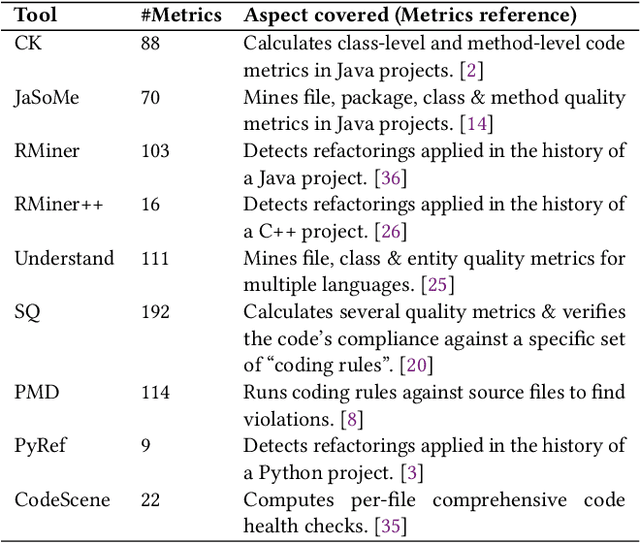

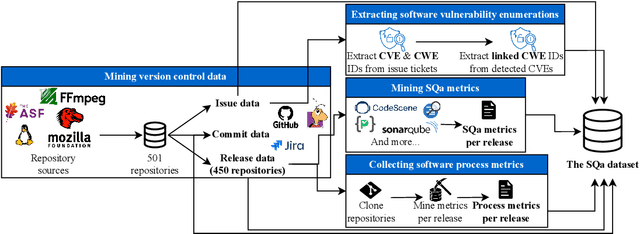

Abstract:Software quality research increasingly relies on large-scale datasets that measure both the product and process aspects of software systems. However, existing resources often focus on limited dimensions, such as code smells, technical debt, or refactoring activity, thereby restricting comprehensive analyses across time and quality dimensions. To address this gap, we present the Software Quality Dataset (SQuaD), a multi-dimensional, time-aware collection of software quality metrics extracted from 450 mature open-source projects across diverse ecosystems, including Apache, Mozilla, FFmpeg, and the Linux kernel. By integrating nine state-of-the-art static analysis tools, i.e., SonarQube, CodeScene, PMD, Understand, CK, JaSoMe, RefactoringMiner, RefactoringMiner++, and PyRef, our dataset unifies over 700 unique metrics at method, class, file, and project levels. Covering a total of 63,586 analyzed project releases, SQuaD also provides version control and issue-tracking histories, software vulnerability data (CVE/CWE), and process metrics proven to enhance Just-In-Time (JIT) defect prediction. The SQuaD enables empirical research on maintainability, technical debt, software evolution, and quality assessment at unprecedented scale. We also outline emerging research directions, including automated dataset updates and cross-project quality modeling to support the continuous evolution of software analytics. The dataset is publicly available on ZENODO (DOI: 10.5281/zenodo.17566690).

Why am I seeing this? Towards recognizing social media recommender systems with missing recommendations

Apr 15, 2025Abstract:Social media plays a crucial role in shaping society, often amplifying polarization and spreading misinformation. These effects stem from complex dynamics involving user interactions, individual traits, and recommender algorithms driving content selection. Recommender systems, which significantly shape the content users see and decisions they make, offer an opportunity for intervention and regulation. However, assessing their impact is challenging due to algorithmic opacity and limited data availability. To effectively model user decision-making, it is crucial to recognize the recommender system adopted by the platform. This work introduces a method for Automatic Recommender Recognition using Graph Neural Networks (GNNs), based solely on network structure and observed behavior. To infer the hidden recommender, we first train a Recommender Neutral User model (RNU) using a GNN and an adapted hindsight academic network recommender, aiming to reduce reliance on the actual recommender in the data. We then generate several Recommender Hypothesis-specific Synthetic Datasets (RHSD) by combining the RNU with different known recommenders, producing ground truths for testing. Finally, we train Recommender Hypothesis-specific User models (RHU) under various hypotheses and compare each candidate with the original used to generate the RHSD. Our approach enables accurate detection of hidden recommenders and their influence on user behavior. Unlike audit-based methods, it captures system behavior directly, without ad hoc experiments that often fail to reflect real platforms. This study provides insights into how recommenders shape behavior, aiding efforts to reduce polarization and misinformation.

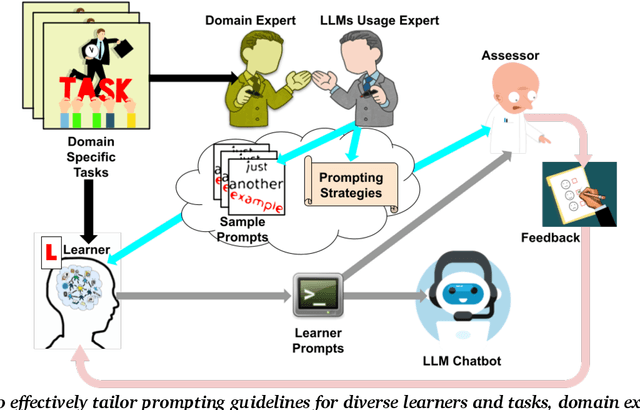

Understanding Learner-LLM Chatbot Interactions and the Impact of Prompting Guidelines

Apr 10, 2025Abstract:Large Language Models (LLMs) have transformed human-computer interaction by enabling natural language-based communication with AI-powered chatbots. These models are designed to be intuitive and user-friendly, allowing users to articulate requests with minimal effort. However, despite their accessibility, studies reveal that users often struggle with effective prompting, resulting in inefficient responses. Existing research has highlighted both the limitations of LLMs in interpreting vague or poorly structured prompts and the difficulties users face in crafting precise queries. This study investigates learner-AI interactions through an educational experiment in which participants receive structured guidance on effective prompting. We introduce and compare three types of prompting guidelines: a task-specific framework developed through a structured methodology and two baseline approaches. To assess user behavior and prompting efficacy, we analyze a dataset of 642 interactions from 107 users. Using Von NeuMidas, an extended pragmatic annotation schema for LLM interaction analysis, we categorize common prompting errors and identify recurring behavioral patterns. We then evaluate the impact of different guidelines by examining changes in user behavior, adherence to prompting strategies, and the overall quality of AI-generated responses. Our findings provide a deeper understanding of how users engage with LLMs and the role of structured prompting guidance in enhancing AI-assisted communication. By comparing different instructional frameworks, we offer insights into more effective approaches for improving user competency in AI interactions, with implications for AI literacy, chatbot usability, and the design of more responsive AI systems.

Generative AI for Software Architecture. Applications, Trends, Challenges, and Future Directions

Mar 17, 2025

Abstract:Context: Generative Artificial Intelligence (GenAI) is transforming much of software development, yet its application in software architecture is still in its infancy, and no prior study has systematically addressed the topic. Aim: We aim to systematically synthesize the use, rationale, contexts, usability, and future challenges of GenAI in software architecture. Method: We performed a multivocal literature review (MLR), analyzing peer-reviewed and gray literature, identifying current practices, models, adoption contexts, and reported challenges, extracting themes via open coding. Results: Our review identified significant adoption of GenAI for architectural decision support and architectural reconstruction. OpenAI GPT models are predominantly applied, and there is consistent use of techniques such as few-shot prompting and retrieved-augmented generation (RAG). GenAI has been applied mostly to initial stages of the Software Development Life Cycle (SDLC), such as Requirements-to-Architecture and Architecture-to-Code. Monolithic and microservice architectures were the dominant targets. However, rigorous testing of GenAI outputs was typically missing from the studies. Among the most frequent challenges are model precision, hallucinations, ethical aspects, privacy issues, lack of architecture-specific datasets, and the absence of sound evaluation frameworks. Conclusions: GenAI shows significant potential in software design, but several challenges remain on its path to greater adoption. Research efforts should target designing general evaluation methodologies, handling ethics and precision, increasing transparency and explainability, and promoting architecture-specific datasets and benchmarks to bridge the gap between theoretical possibilities and practical use.

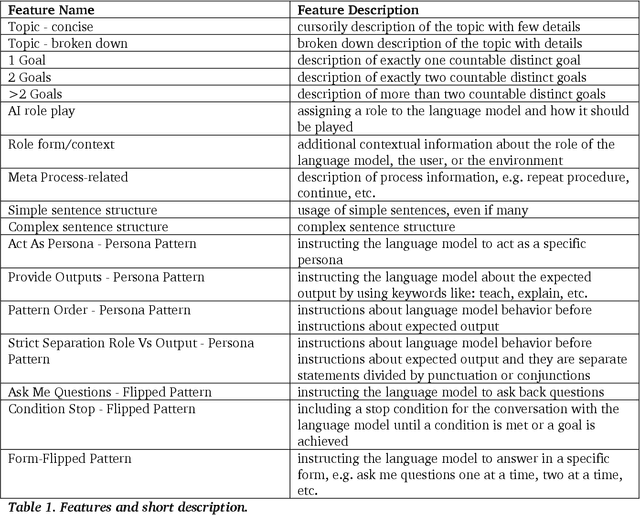

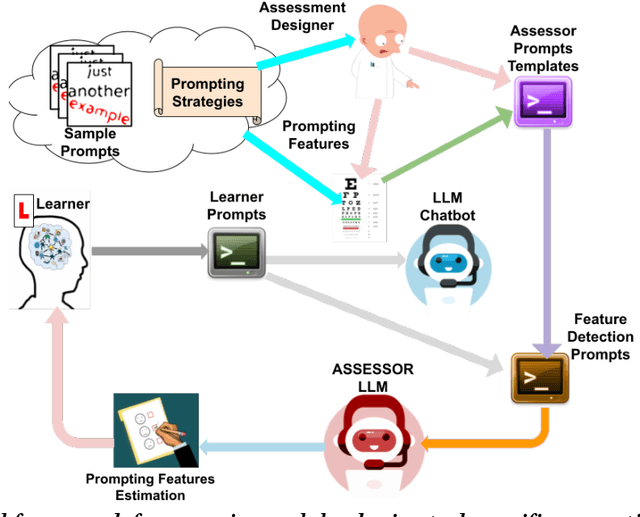

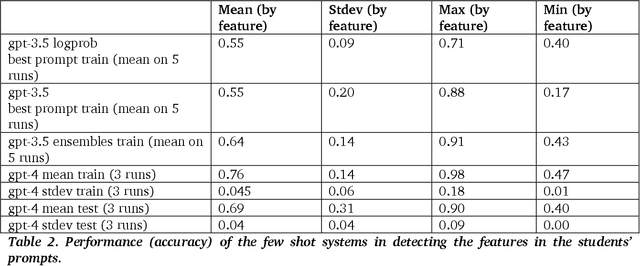

Use Me Wisely: AI-Driven Assessment for LLM Prompting Skills Development

Mar 04, 2025

Abstract:The use of large language model (LLM)-powered chatbots, such as ChatGPT, has become popular across various domains, supporting a range of tasks and processes. However, due to the intrinsic complexity of LLMs, effective prompting is more challenging than it may seem. This highlights the need for innovative educational and support strategies that are both widely accessible and seamlessly integrated into task workflows. Yet, LLM prompting is highly task- and domain-dependent, limiting the effectiveness of generic approaches. In this study, we explore whether LLM-based methods can facilitate learning assessments by using ad-hoc guidelines and a minimal number of annotated prompt samples. Our framework transforms these guidelines into features that can be identified within learners' prompts. Using these feature descriptions and annotated examples, we create few-shot learning detectors. We then evaluate different configurations of these detectors, testing three state-of-the-art LLMs and ensembles. We run experiments with cross-validation on a sample of original prompts, as well as tests on prompts collected from task-naive learners. Our results show how LLMs perform on feature detection. Notably, GPT- 4 demonstrates strong performance on most features, while closely related models, such as GPT-3 and GPT-3.5 Turbo (Instruct), show inconsistent behaviors in feature classification. These differences highlight the need for further research into how design choices impact feature selection and prompt detection. Our findings contribute to the fields of generative AI literacy and computer-supported learning assessment, offering valuable insights for both researchers and practitioners.

On Large Language Models in Mission-Critical IT Governance: Are We Ready Yet?

Dec 16, 2024Abstract:Context. The security of critical infrastructure has been a fundamental concern since the advent of computers, and this concern has only intensified in today's cyber warfare landscape. Protecting mission-critical systems (MCSs), including essential assets like healthcare, telecommunications, and military coordination, is vital for national security. These systems require prompt and comprehensive governance to ensure their resilience, yet recent events have shown that meeting these demands is increasingly challenging. Aim. Building on prior research that demonstrated the potential of GAI, particularly Large Language Models (LLMs), in improving risk analysis tasks, we aim to explore practitioners' perspectives, specifically developers and security personnel, on using generative AI (GAI) in the governance of IT MCSs seeking to provide insights and recommendations for various stakeholders, including researchers, practitioners, and policymakers. Method. We designed a survey to collect practical experiences, concerns, and expectations of practitioners who develop and implement security solutions in the context of MCSs. Analyzing this data will help identify key trends, challenges, and opportunities for introducing GAIs in this niche domain. Conclusions and Future Works. Our findings highlight that the safe use of LLMs in MCS governance requires interdisciplinary collaboration. Researchers should focus on designing regulation-oriented models and focus on accountability; practitioners emphasize data protection and transparency, while policymakers must establish a unified AI framework with global benchmarks to ensure ethical and secure LLMs-based MCS governance.

Modeling Social Media Recommendation Impacts Using Academic Networks: A Graph Neural Network Approach

Oct 06, 2024Abstract:The widespread use of social media has highlighted potential negative impacts on society and individuals, largely driven by recommendation algorithms that shape user behavior and social dynamics. Understanding these algorithms is essential but challenging due to the complex, distributed nature of social media networks as well as limited access to real-world data. This study proposes to use academic social networks as a proxy for investigating recommendation systems in social media. By employing Graph Neural Networks (GNNs), we develop a model that separates the prediction of academic infosphere from behavior prediction, allowing us to simulate recommender-generated infospheres and assess the model's performance in predicting future co-authorships. Our approach aims to improve our understanding of recommendation systems' roles and social networks modeling. To support the reproducibility of our work we publicly make available our implementations: https://github.com/DimNeuroLab/academic_network_project

6GSoft: Software for Edge-to-Cloud Continuum

Jul 09, 2024Abstract:In the era of 6G, developing and managing software requires cutting-edge software engineering (SE) theories and practices tailored for such complexity across a vast number of connected edge devices. Our project aims to lead the development of sustainable methods and energy-efficient orchestration models specifically for edge environments, enhancing architectural support driven by AI for contemporary edge-to-cloud continuum computing. This initiative seeks to position Finland at the forefront of the 6G landscape, focusing on sophisticated edge orchestration and robust software architectures to optimize the performance and scalability of edge networks. Collaborating with leading Finnish universities and companies, the project emphasizes deep industry-academia collaboration and international expertise to address critical challenges in edge orchestration and software architecture, aiming to drive significant advancements in software productivity and market impact.

$Classi

Jun 10, 2024

Abstract:Quantum computing, albeit readily available as hardware or emulated on the cloud, is still far from being available in general regarding complex programming paradigms and learning curves. This vision paper introduces $Classi|Q\rangle$, a translation framework idea to bridge Classical and Quantum Computing by translating high-level programming languages, e.g., Python or C++, into a low-level language, e.g., Quantum Assembly. Our idea paper serves as a blueprint for ongoing efforts in quantum software engineering, offering a roadmap for further $Classi|Q\rangle$ development to meet the diverse needs of researchers and practitioners. $Classi|Q\rangle$ is designed to empower researchers and practitioners with no prior quantum experience to harness the potential of hybrid quantum computation. We also discuss future enhancements to $Classi|Q\rangle$, including support for additional quantum languages, improved optimization strategies, and integration with emerging quantum computing platforms.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge