Cansu Koyuturk

Understanding Learner-LLM Chatbot Interactions and the Impact of Prompting Guidelines

Apr 10, 2025

Abstract:Large Language Models (LLMs) have transformed human-computer interaction by enabling natural language-based communication with AI-powered chatbots. These models are designed to be intuitive and user-friendly, allowing users to articulate requests with minimal effort. However, despite their accessibility, studies reveal that users often struggle with effective prompting, resulting in inefficient responses. Existing research has highlighted both the limitations of LLMs in interpreting vague or poorly structured prompts and the difficulties users face in crafting precise queries. This study investigates learner-AI interactions through an educational experiment in which participants receive structured guidance on effective prompting. We introduce and compare three types of prompting guidelines: a task-specific framework developed through a structured methodology and two baseline approaches. To assess user behavior and prompting efficacy, we analyze a dataset of 642 interactions from 107 users. Using Von NeuMidas, an extended pragmatic annotation schema for LLM interaction analysis, we categorize common prompting errors and identify recurring behavioral patterns. We then evaluate the impact of different guidelines by examining changes in user behavior, adherence to prompting strategies, and the overall quality of AI-generated responses. Our findings provide a deeper understanding of how users engage with LLMs and the role of structured prompting guidance in enhancing AI-assisted communication. By comparing different instructional frameworks, we offer insights into more effective approaches for improving user competency in AI interactions, with implications for AI literacy, chatbot usability, and the design of more responsive AI systems.

SCOOP: A Framework for Proactive Collaboration and Social Continual Learning through Natural Language Interaction and Causal Reasoning

Mar 13, 2025

Abstract:Multimodal information-gathering settings, where users collaborate with AI in dynamic environments, are increasingly common. These involve complex processes with textual and multimodal interactions, often requiring additional structural information via cost-incurring requests. AI helpers lack access to users' true goals, beliefs, and preferences and struggle to integrate diverse information effectively. We propose a social continual learning framework for causal knowledge acquisition and collaborative decision-making. It focuses on autonomous agents learning through dialogues, question-asking, and interaction in open, partially observable environments. A key component is a natural language oracle that answers the agent's queries about environmental mechanisms and states, refining causal understanding while balancing exploration or learning, and exploitation or knowledge use. Evaluation tasks inspired by developmental psychology emphasize causal reasoning and question-asking skills. They complement benchmarks by assessing the agent's ability to identify knowledge gaps, generate meaningful queries, and incrementally update reasoning. The framework also evaluates how knowledge acquisition costs are amortized across tasks within the same environment. We propose two architectures: 1) a system combining Large Language Models (LLMs) with the ReAct framework and question-generation, and 2) an advanced system with a causal world model, symbolic, graph-based, or subsymbolic, for reasoning and decision-making. The latter builds a causal knowledge graph for efficient inference and adaptability under constraints. Challenges include integrating causal reasoning into ReAct and optimizing exploration and question-asking in error-prone scenarios. Beyond applications, this framework models developmental processes combining causal reasoning, question generation, and social learning.

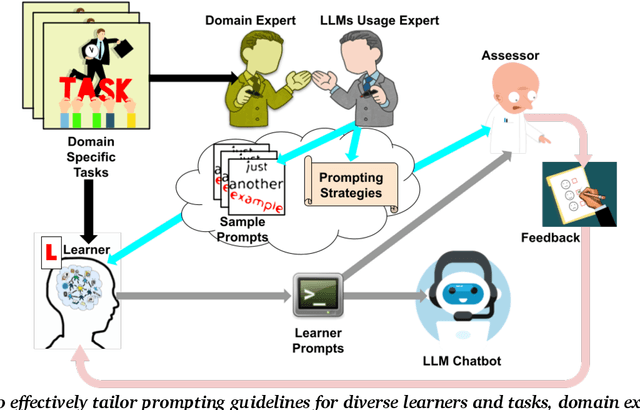

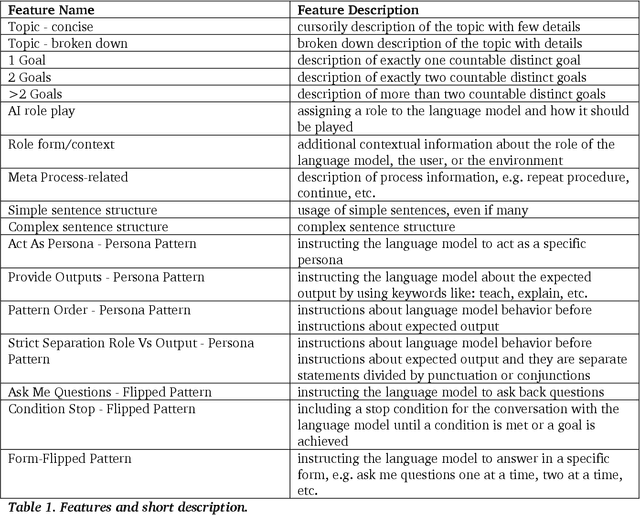

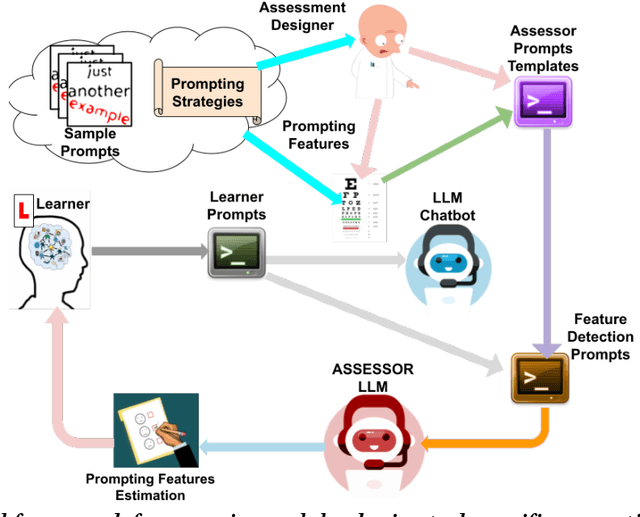

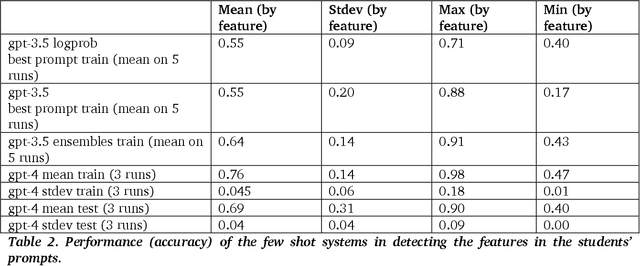

Use Me Wisely: AI-Driven Assessment for LLM Prompting Skills Development

Mar 04, 2025

Abstract:The use of large language model (LLM)-powered chatbots, such as ChatGPT, has become popular across various domains, supporting a range of tasks and processes. However, due to the intrinsic complexity of LLMs, effective prompting is more challenging than it may seem. This highlights the need for innovative educational and support strategies that are both widely accessible and seamlessly integrated into task workflows. Yet, LLM prompting is highly task- and domain-dependent, limiting the effectiveness of generic approaches. In this study, we explore whether LLM-based methods can facilitate learning assessments by using ad-hoc guidelines and a minimal number of annotated prompt samples. Our framework transforms these guidelines into features that can be identified within learners' prompts. Using these feature descriptions and annotated examples, we create few-shot learning detectors. We then evaluate different configurations of these detectors, testing three state-of-the-art LLMs and ensembles. We run experiments with cross-validation on a sample of original prompts, as well as tests on prompts collected from task-naive learners. Our results show how LLMs perform on feature detection. Notably, GPT-4 demonstrates strong performance on most features, while closely related models, such as GPT-3 and GPT-3.5 Turbo (Instruct), show inconsistent behaviors in feature classification. These differences highlight the need for further research into how design choices impact feature selection and prompt detection. Our findings contribute to the fields of generative AI literacy and computer-supported learning assessment, offering valuable insights for both researchers and practitioners.

Unimib Assistant: designing a student-friendly RAG-based chatbot for all their needs

Nov 29, 2024

Abstract:Natural language processing skills of Large Language Models (LLMs) are unprecedented, having wide diffusion and application in different tasks. This pilot study focuses on specializing ChatGPT behavior through a Retrieval-Augmented Generation (RAG) system using the OpenAI custom GPTs feature. The purpose of our chatbot, called Unimib Assistant, is to provide information and solutions to the specific needs of University of Milano-Bicocca (Unimib) students through a question-answering approach. We provided the system with a prompt highlighting its specific purpose and behavior, as well as university-related documents and links obtained from an initial need-finding phase, interviewing six students. After a preliminary customization phase, a qualitative usability test was conducted with six other students to identify the strengths and weaknesses of the chatbot, with the goal of improving it in a subsequent redesign phase. While the chatbot was appreciated for its user-friendly experience, perceived general reliability, well-structured responses, and conversational tone, several significant technical and functional limitations emerged. In particular, the satisfaction and overall experience of the users was impaired by the system's inability to always provide fully accurate information. Moreover, it would often neglect to report relevant information even if present in the materials uploaded and prompt given. Furthermore, it sometimes generated unclickable links, undermining its trustworthiness, since providing the source of information was an important aspect for our users. Further in-depth studies and feedback from other users as well as implementation iterations are planned to refine our Unimib Assistant.

Habit Coach: Customising RAG-based chatbots to support behavior change

Nov 28, 2024

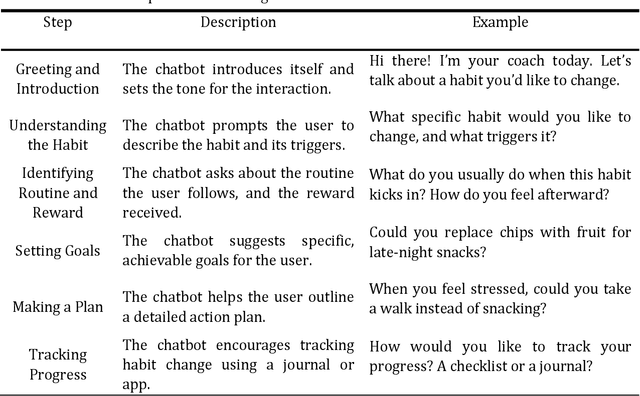

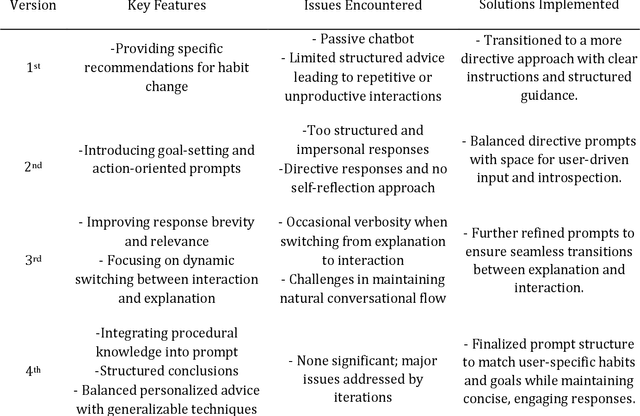

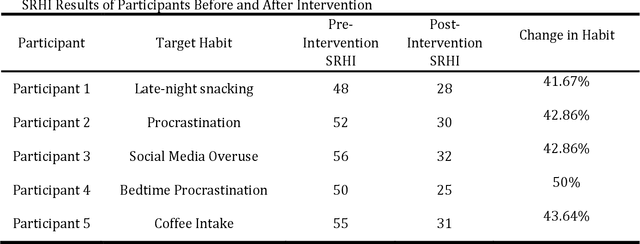

Abstract:This paper presents the iterative development of Habit Coach, a GPT-based chatbot designed to support users in habit change through personalized interaction. Employing a user-centered design approach, we developed the chatbot using a Retrieval-Augmented Generation (RAG) system, which enables behavior personalization without retraining the underlying language model (GPT-4). The system leverages document retrieval and specialized prompts to tailor interactions, drawing from Cognitive Behavioral Therapy (CBT) and narrative therapy techniques. A key challenge in the development process was the difficulty of translating declarative knowledge into effective interaction behaviors. In the initial phase, the chatbot was provided with declarative knowledge about CBT via reference textbooks and high-level conversational goals. However, this approach resulted in imprecise and inefficient behavior, as the GPT model struggled to convert static information into dynamic and contextually appropriate interactions. This highlighted the limitations of relying solely on declarative knowledge to guide chatbot behavior, particularly in nuanced, therapeutic conversations. Over four iterations, we addressed this issue by gradually transitioning towards procedural knowledge, refining the chatbot's interaction strategies, and improving its overall effectiveness. In the final evaluation, 5 participants engaged with the chatbot over five consecutive days, receiving individualized CBT interventions. The Self-Report Habit Index (SRHI) was used to measure habit strength before and after the intervention, revealing a reduction in habit strength post-intervention. These results underscore the importance of procedural knowledge in driving effective, personalized behavior change support in RAG-based systems.

Learning to Prompt in the Classroom to Understand AI Limits: A pilot study

Jul 04, 2023

Abstract:Artificial intelligence's progress holds great promise in assisting society in addressing pressing societal issues. In particular, Large Language Models (LLMs) and the derived chatbots, like ChatGPT, have greatly improved the natural language processing capabilities of AI systems, allowing them to process an unprecedented amount of unstructured data. The consequent hype has also backfired, raising negative sentiment even after novel AI methods' surprising contributions. One of the causes, but also an important issue per se, is the rising and misleading feeling of being able to access and process any form of knowledge to solve problems in any domain with no effort or previous expertise in AI or the problem domain, disregarding current LLMs' limits, such as hallucinations and reasoning limits. Acknowledging AI fallibility is crucial to address the impact of dogmatic overconfidence in possibly erroneous suggestions generated by LLMs. At the same time, it can reduce fear and other negative attitudes toward AI. AI literacy interventions are needed that allow the public to understand such LLM limits and learn how to use LLMs more effectively, i.e. learning to "prompt". With this aim, a pilot educational intervention was performed in a high school with 30 students. It involved (i) presenting high-level concepts about intelligence, AI, and LLMs, (ii) an initial naive practice with ChatGPT in a non-trivial task, and finally (iii) applying currently accepted prompting strategies. Encouraging preliminary results have been collected, such as students reporting a) high appreciation of the activity, b) improved quality of the interaction with the LLM during the educational activity, c) decreased negative sentiments toward AI, d) increased understanding of limitations and specifically We aim to study factors that impact AI acceptance and to refine and repeat this activity in more controlled settings.

Developing Effective Educational Chatbots with ChatGPT prompts: Insights from Preliminary Tests in a Case Study on Social Media Literacy

Jun 18, 2023

Abstract:Educational chatbots come with a promise of interactive and personalized learning experiences, yet their development has been limited by the restricted free interaction capabilities of available platforms and the difficulty of encoding knowledge in a suitable format. Recent advances in language learning models with zero-shot learning capabilities, such as ChatGPT, suggest a new possibility for developing educational chatbots using a prompt-based approach. We present a case study with a simple system that enables mixed-turn chatbot interactions and we discuss the insights and preliminary guidelines obtained from initial tests. We examine ChatGPT's ability to pursue multiple interconnected learning objectives, adapt the educational activity to users' characteristics, such as culture, age, and level of education, and its ability to use diverse educational strategies and conversational styles. Although the results are encouraging, challenges are posed by the limited history maintained for the conversation and the highly structured form of responses by ChatGPT, as well as their variability, which can lead to an unexpected switch of the chatbot's role from a teacher to a therapist. We provide some initial guidelines to address these issues and to facilitate the development of effective educational chatbots.
