David Peter

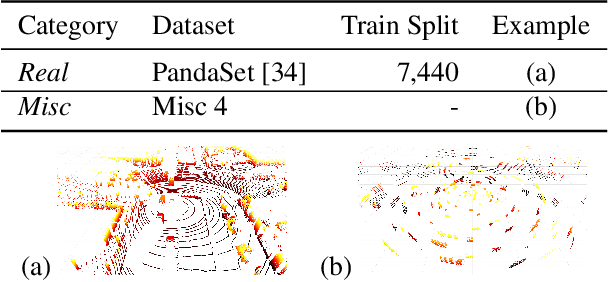

A Realism Metric for Generated LiDAR Point Clouds

Aug 31, 2022

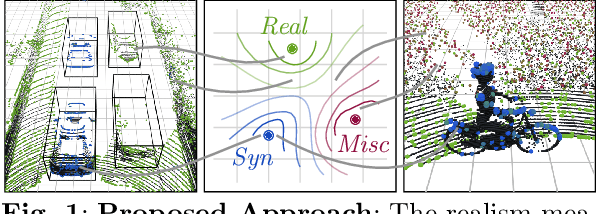

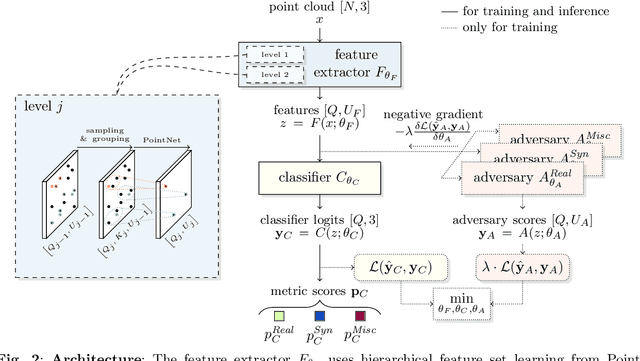

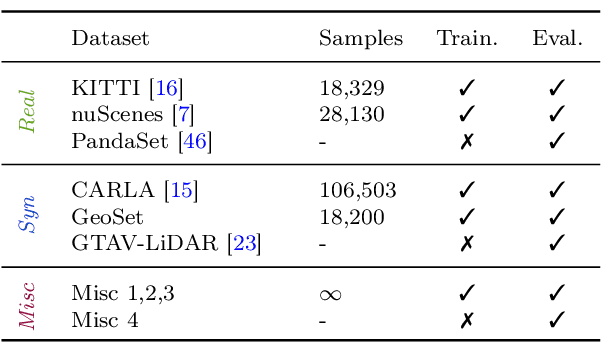

Abstract: A considerable amount of research is concerned with the generation of realistic sensor data. LiDAR point clouds are generated by complex simulations or learned generative models. The generated data is usually exploited to enable or improve downstream perception algorithms. Two major questions arise from these procedures: First, how can the realism of the generated data be evaluated? Second, does more realistic data also lead to better perception performance? This paper addresses both questions and presents a novel metric to quantify the realism of LiDAR point clouds. Relevant features are learned from real-world and synthetic point clouds by training on a proxy classification task. In a series of experiments, we demonstrate the application of our metric to determine the realism of generated LiDAR data and compare the realism estimation of our metric to the performance of a segmentation model. We confirm that our metric provides an indication of the downstream segmentation performance.

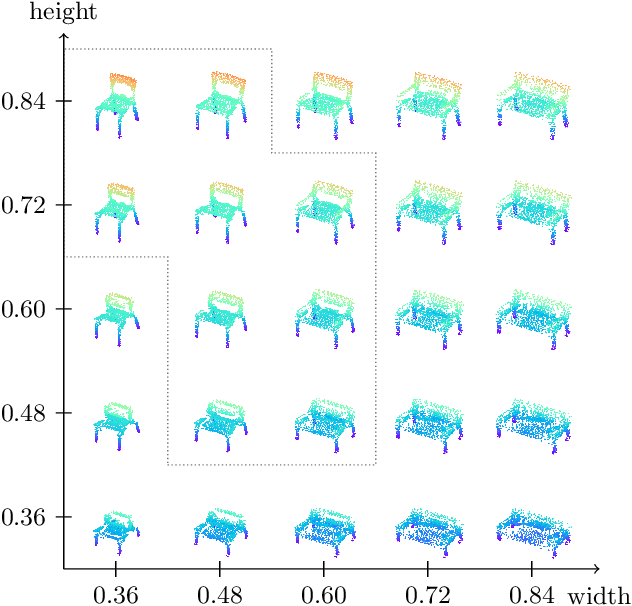

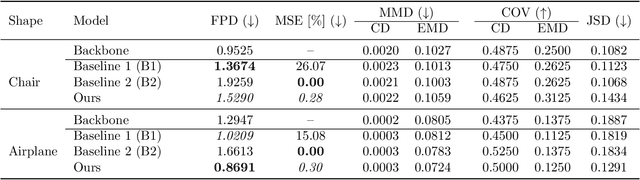

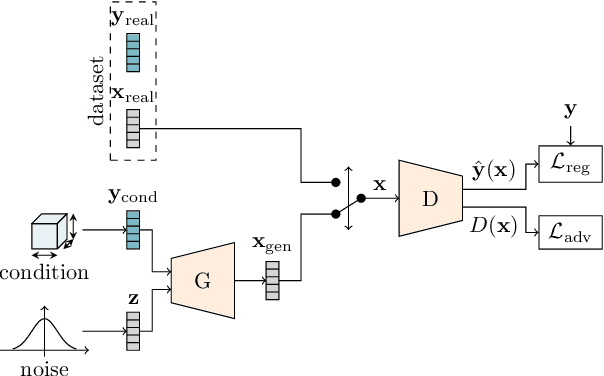

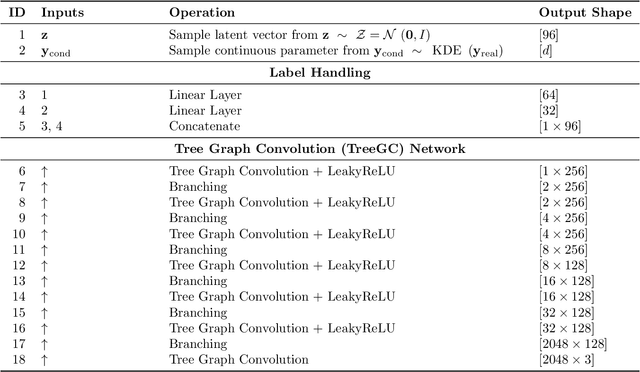

Point Cloud Generation with Continuous Conditioning

Feb 17, 2022

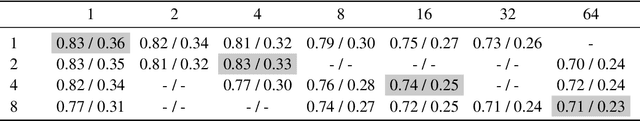

Abstract: Generative models can be used to synthesize 3D objects of high quality and diversity. However, there is typically no control over the properties of the generated object. This paper proposes a novel generative adversarial network (GAN) setup that generates 3D point cloud shapes conditioned on a continuous parameter. In an exemplary application, we use this to guide the generative process to create a 3D object with a custom-fit shape. We formulate this generation process in a multi-task setting by using the concept of auxiliary classifier GANs. Further, we propose to sample the generator label input for training from a kernel density estimation (KDE) of the dataset. Our ablations show that this leads to a significant performance increase in regions with few samples. Extensive quantitative and qualitative experiments show that we gain explicit control over the object dimensions while maintaining good generation quality and diversity.
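As a rough illustration of sampling generator labels from a KDE, the sketch below draws continuous labels from a one-dimensional Gaussian KDE fitted to the training labels. The function name `sample_labels_from_kde`, the Gaussian kernel, and the normal-reference bandwidth rule are assumptions made for illustration; the abstract does not specify the kernel or bandwidth used.

```python
import random
import statistics


def sample_labels_from_kde(labels, n_samples, bandwidth=None, seed=0):
    """Draw samples from a Gaussian KDE fitted to 1-D training labels.

    Sampling from a Gaussian KDE is equivalent to picking a training label
    uniformly at random and adding zero-mean Gaussian noise whose standard
    deviation equals the bandwidth.
    """
    rng = random.Random(seed)
    if bandwidth is None:
        # Normal-reference rule of thumb, h = 1.06 * sigma * n^(-1/5).
        # This choice is an assumption, not taken from the abstract.
        sigma = statistics.stdev(labels)
        bandwidth = 1.06 * sigma * len(labels) ** (-1 / 5)
    return [rng.choice(labels) + rng.gauss(0.0, bandwidth) for _ in range(n_samples)]


# Hypothetical object-dimension labels with a sparsely populated region near 2.0.
train_labels = [0.9, 1.0, 1.0, 1.1, 1.2, 2.0]
generator_labels = sample_labels_from_kde(train_labels, n_samples=8)
```

Because the noise smooths the empirical label distribution, the generator also sees label values between and around the few samples in sparse regions, which is one plausible reading of why the ablations report gains there.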

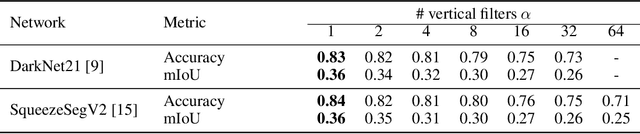

Semi-Local Convolutions for LiDAR Scan Processing

Nov 30, 2021

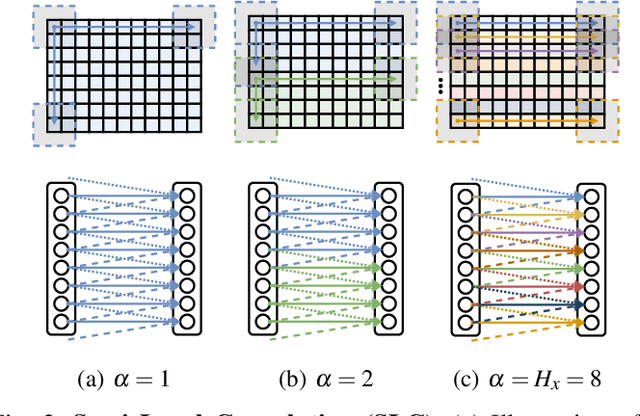

Abstract: A number of applications, such as mobile robots or automated vehicles, use LiDAR sensors to obtain detailed information about their three-dimensional surroundings. Many methods use image-like projections to efficiently process these LiDAR measurements and use deep convolutional neural networks to predict semantic classes for each point in the scan. The spatial stationarity assumption enables the usage of convolutions. However, LiDAR scans exhibit large differences in appearance over the vertical axis. Therefore, we propose semi-local convolution (SLC), a convolution layer with a reduced amount of weight-sharing along the vertical dimension. We are the first to investigate the usage of such a layer independent of any other model changes. Our experiments did not show any improvement over traditional convolution layers in terms of segmentation IoU or accuracy.
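The idea of reduced weight-sharing along the vertical axis can be sketched as a convolution in which each horizontal band of rows has its own kernel. The following is a minimal pure-Python sketch under that assumption; the function name `semi_local_conv2d` and the hard band split are illustrative and not necessarily the formulation used in the paper.

```python
def semi_local_conv2d(image, kernels):
    """'Valid' 2-D cross-correlation where each band of output rows has its own kernel.

    image:   list of H rows, each a list of W floats
    kernels: list of B kernels (each kh x kw, all the same size); the output
             rows are split into B contiguous bands and band b uses kernels[b].
    """
    kh, kw = len(kernels[0]), len(kernels[0][0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    n_bands = len(kernels)
    output = []
    for y in range(out_h):
        # Weights are shared horizontally and within a band, but not across bands.
        kernel = kernels[y * n_bands // out_h]
        row = []
        for x in range(out_w):
            acc = 0.0
            for dy in range(kh):
                for dx in range(kw):
                    acc += image[y + dy][x + dx] * kernel[dy][dx]
            row.append(acc)
        output.append(row)
    return output


# Two bands: the upper half of the scan uses one kernel, the lower half another.
scan = [[1.0, 1.0, 1.0] for _ in range(4)]
result = semi_local_conv2d(scan, kernels=[[[1.0]], [[2.0]]])
```

With a single kernel in `kernels`, the function reduces to an ordinary convolution layer without bias, which makes the two variants easy to compare in isolation.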

* arXiv admin note: text overlap with arXiv:2004.11803

Quantifying point cloud realism through adversarially learned latent representations

Sep 24, 2021

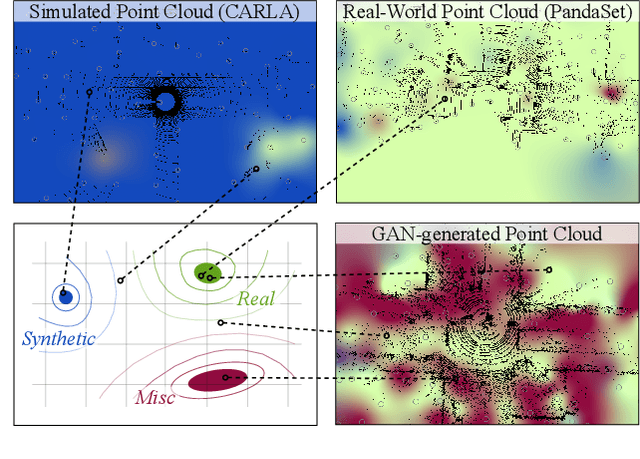

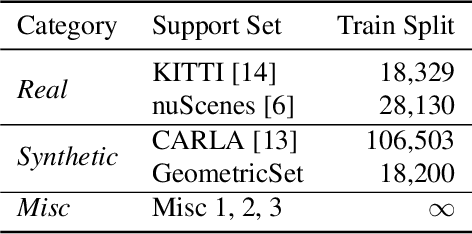

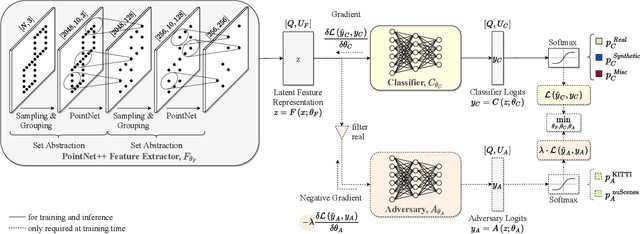

Abstract: Judging the quality of samples synthesized by generative models can be tedious and time-consuming, especially for complex data structures, such as point clouds. This paper presents a novel approach to quantify the realism of local regions in LiDAR point clouds. Relevant features are learned from real-world and synthetic point clouds by training on a proxy classification task. Inspired by fair networks, we use an adversarial technique to discourage the encoding of dataset-specific information. The resulting metric can assign a quality score to samples without requiring any task-specific annotations. In a series of experiments, we confirm the soundness of our metric by applying it in controllable task setups and on unseen data. Additional experiments show reliable interpolation capabilities of the metric between data with varying degrees of realism. As one important application, we demonstrate how the local realism score can be used for anomaly detection in point clouds.

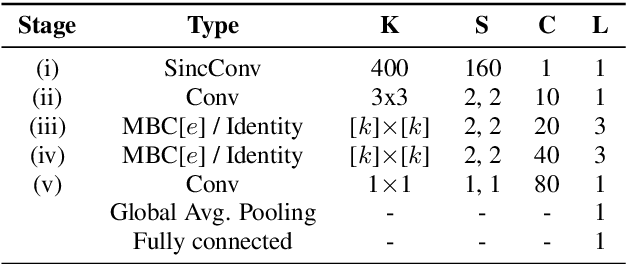

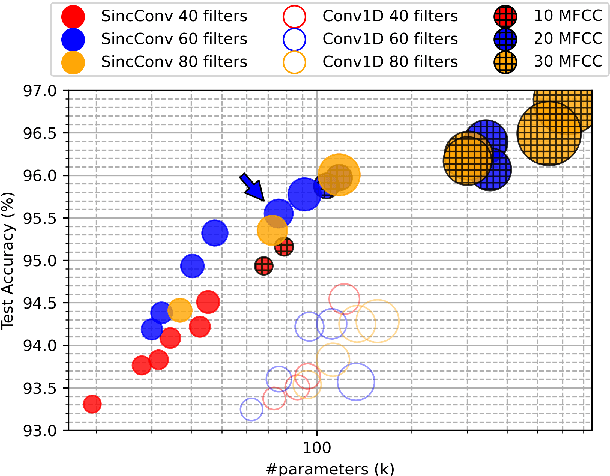

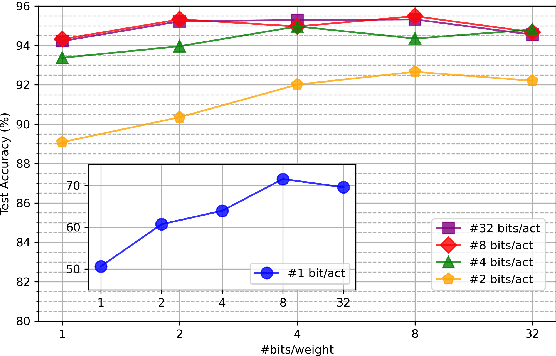

End-to-end Keyword Spotting using Neural Architecture Search and Quantization

Apr 14, 2021

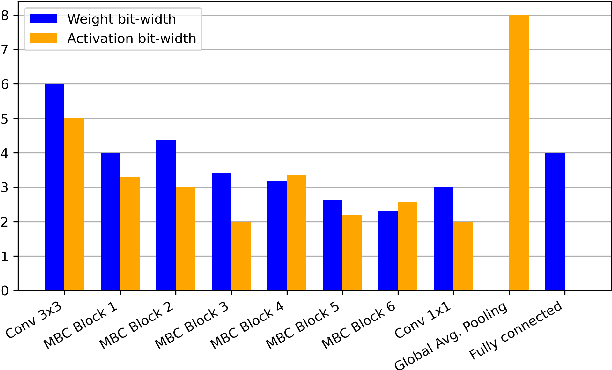

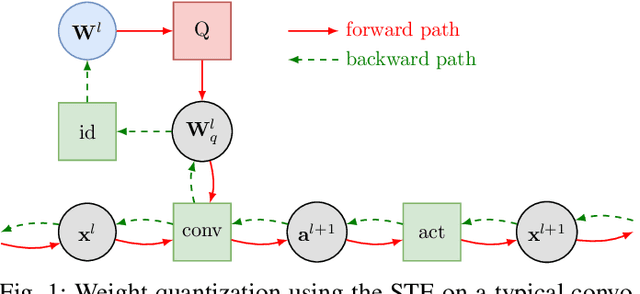

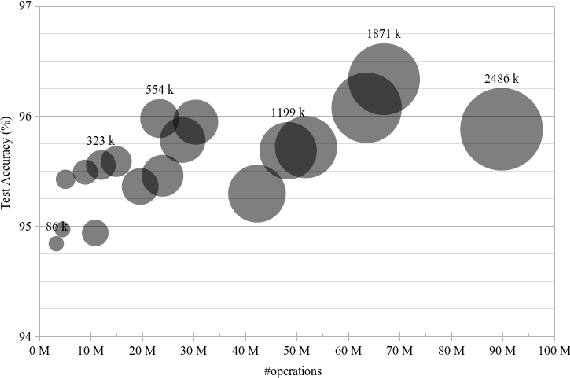

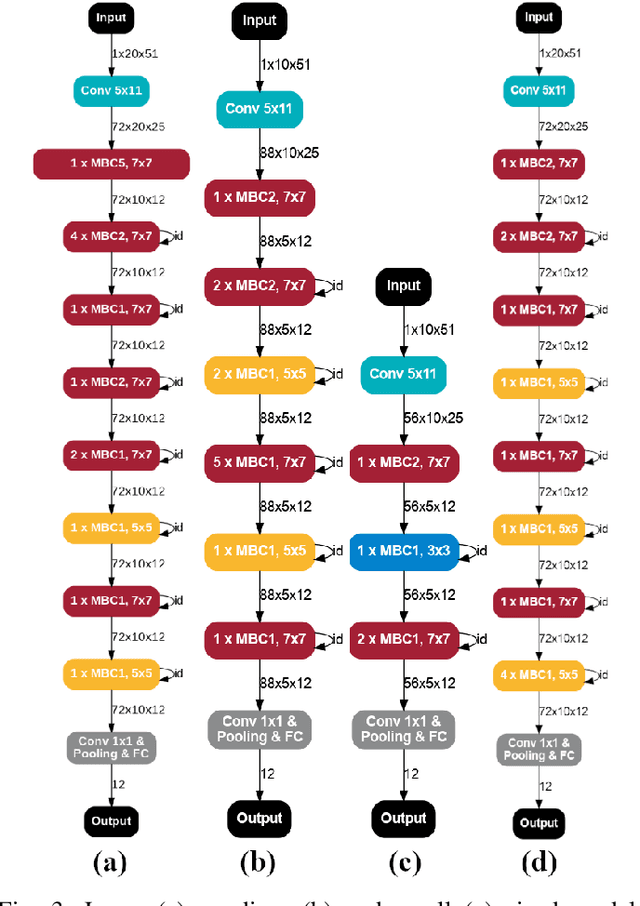

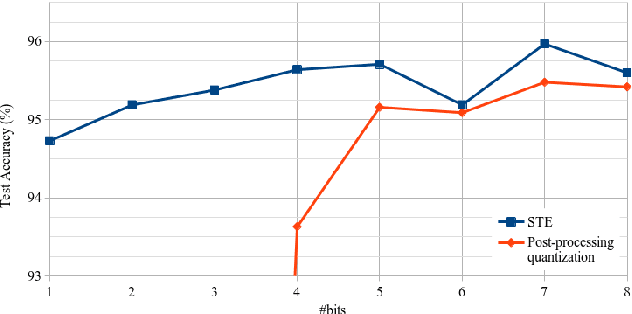

Abstract: This paper introduces neural architecture search (NAS) for the automatic discovery of end-to-end keyword spotting (KWS) models in limited resource environments. We employ a differentiable NAS approach to optimize the structure of convolutional neural networks (CNNs) operating on raw audio waveforms. After a suitable KWS model is found with NAS, we conduct quantization of weights and activations to reduce the memory footprint. We conduct extensive experiments on the Google speech commands dataset. In particular, we compare our end-to-end approach to mel-frequency cepstral coefficient (MFCC) based systems. For quantization, we compare fixed bit-width quantization and trained bit-width quantization. Using NAS only, we were able to obtain a highly efficient model with an accuracy of 95.55% using 75.7k parameters and 13.6M operations. Using trained bit-width quantization, the same model achieves a test accuracy of 93.76% while using on average only 2.91 bits per activation and 2.51 bits per weight.
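To make fixed bit-width quantization concrete, here is a minimal sketch of symmetric uniform quantization applied to a list of weights. The function name `quantize_uniform` and the symmetric, per-tensor scaling scheme are assumptions for illustration; the abstract does not state which quantizer was used, and trained bit-width quantization is not covered here.

```python
def quantize_uniform(weights, bits):
    """Symmetric uniform quantization of floats to a fixed bit-width (bits >= 2).

    Values are mapped to integer levels in [-(2^(bits-1) - 1), 2^(bits-1) - 1]
    and then scaled back to floats, so the result shows the rounding error
    that a quantized model would carry.
    """
    levels = 2 ** (bits - 1) - 1  # e.g. 127 for 8 bits, 1 for 2 bits
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return list(weights)
    scale = max_abs / levels  # step size between neighbouring levels
    return [round(w / scale) * scale for w in weights]


weights = [-1.0, 0.0, 0.26, 1.0]
two_bit = quantize_uniform(weights, bits=2)    # only the levels -1, 0, +1 remain
eight_bit = quantize_uniform(weights, bits=8)  # error is at most half a step
```

Lower bit-widths shrink the memory footprint roughly in proportion to the number of bits per value, at the cost of a coarser grid of representable weights.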

Resource-efficient DNNs for Keyword Spotting using Neural Architecture Search and Quantization

Dec 18, 2020

Abstract: This paper introduces neural architecture search (NAS) for the automatic discovery of small models for keyword spotting (KWS) in limited resource environments. We employ a differentiable NAS approach to optimize the structure of convolutional neural networks (CNNs) to maximize the classification accuracy while minimizing the number of operations per inference. Using NAS only, we were able to obtain a highly efficient model with 95.4% accuracy on the Google speech commands dataset with 494.8 kB of memory usage and 19.6 million operations. Additionally, weight quantization is used to reduce the memory consumption even further. We show that weight quantization to low bit-widths (e.g., 1 bit) can be used without substantial loss in accuracy. By increasing the number of input features from 10 MFCC to 20 MFCC, we were able to increase the accuracy to 96.3% at 340.1 kB of memory usage and 27.1 million operations.

Scan-based Semantic Segmentation of LiDAR Point Clouds: An Experimental Study

Apr 28, 2020

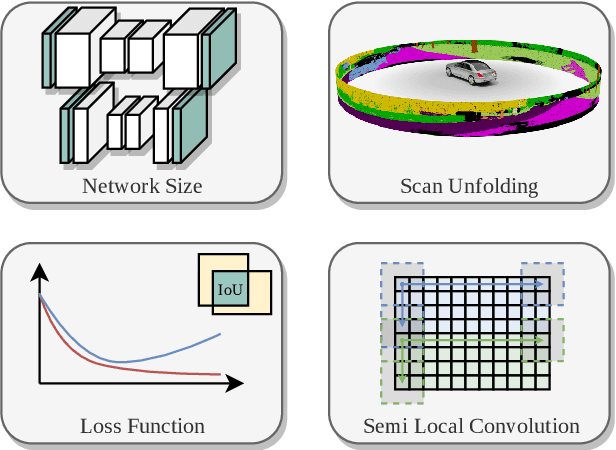

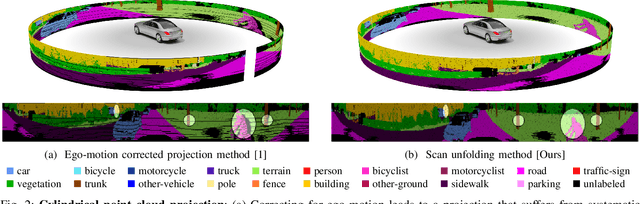

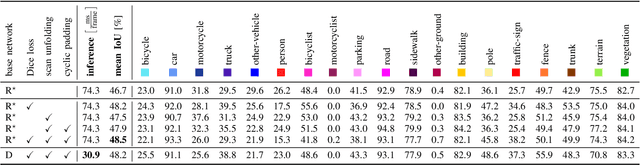

Abstract: Autonomous vehicles need to have a semantic understanding of the three-dimensional world around them in order to reason about their environment. State-of-the-art methods use deep neural networks to predict semantic classes for each point in a LiDAR scan. A powerful and efficient way to process LiDAR measurements is to use two-dimensional, image-like projections. In this work, we perform a comprehensive experimental study of image-based semantic segmentation architectures for LiDAR point clouds. We demonstrate various techniques to boost the performance and to improve runtime as well as memory constraints. First, we examine the effect of network size and suggest that much faster inference times can be achieved at a very low cost to accuracy. Next, we introduce an improved point cloud projection technique that does not suffer from systematic occlusions. We use a cyclic padding mechanism that provides context at the horizontal field-of-view boundaries. In a third part, we perform experiments with a soft Dice loss function that directly optimizes for the intersection-over-union metric. Finally, we propose a new kind of convolution layer with a reduced amount of weight-sharing along one of the two spatial dimensions, addressing the large difference in appearance along the vertical axis of a LiDAR scan. We propose a final set of the above methods with which the model achieves an increase of 3.2% in mIoU segmentation performance over the baseline while requiring only 42% of the original inference time.
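Two of the techniques named above, cyclic padding and the soft Dice loss, are simple enough to sketch in a few lines. The function names and the single-class, list-based formulation below are assumptions for illustration; the exact variants used in the study are not given in the abstract.

```python
def cyclic_pad_horizontal(image, pad):
    """Pad each row with `pad` columns wrapped around from the opposite side.

    A 360-degree scan is continuous at the left and right image borders, so
    wrapping gives a convolution real context there instead of zeros.
    Assumes 0 <= pad <= image width.
    """
    if pad == 0:
        return [list(row) for row in image]
    return [row[-pad:] + row + row[:pad] for row in image]


def soft_dice_loss(probs, targets, eps=1e-6):
    """Soft Dice loss for one class: 1 - 2 * sum(p * t) / (sum(p) + sum(t)).

    probs are predicted probabilities in [0, 1], targets are 0/1 labels.
    eps avoids a division by zero when both are empty.
    """
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


padded = cyclic_pad_horizontal([[1, 2, 3, 4]], pad=1)
perfect = soft_dice_loss([1.0, 0.0], [1.0, 0.0])
wrong = soft_dice_loss([0.0, 1.0], [1.0, 0.0])
```

The Dice coefficient is a monotonic function of the intersection-over-union, so minimizing this loss pushes the overlap metric up directly rather than through a per-pixel surrogate such as cross-entropy.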

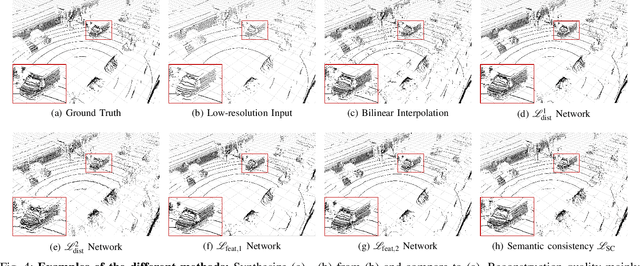

CNN-based synthesis of realistic high-resolution LiDAR data

Jun 28, 2019

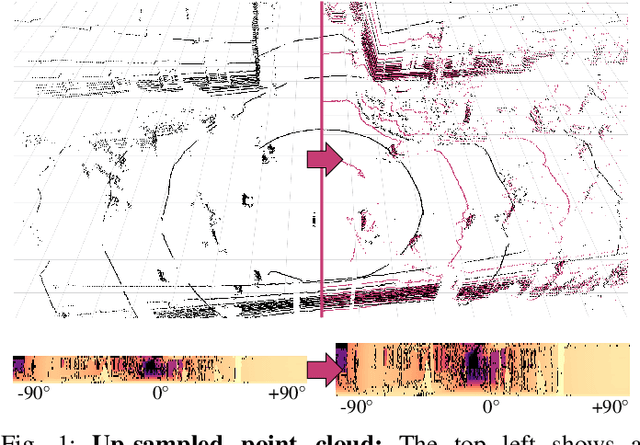

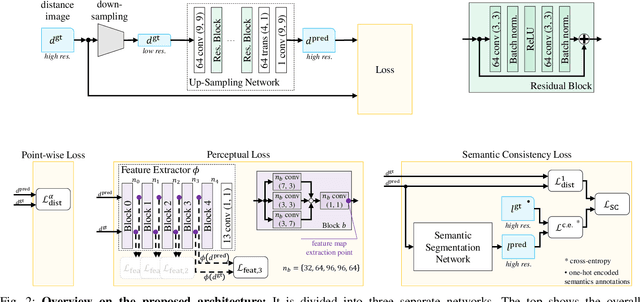

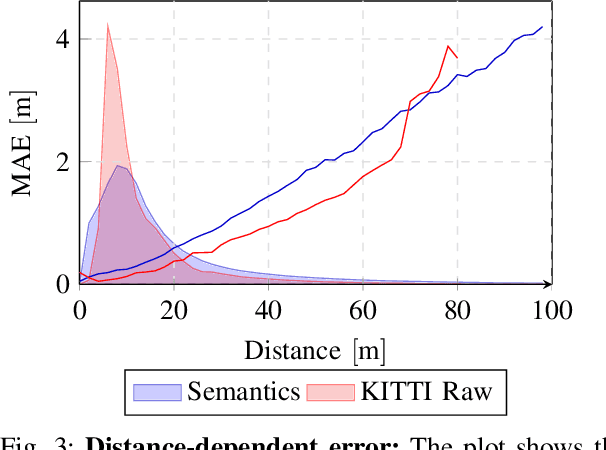

Abstract: This paper presents a novel CNN-based approach for synthesizing high-resolution LiDAR point cloud data. Our approach generates semantically and perceptually realistic results with guidance from specialized loss functions. First, we utilize a modified per-point loss that addresses missing LiDAR point measurements. Second, we align the quality of our generated output with real-world sensor data by applying a perceptual loss. In large-scale experiments on real-world datasets, we evaluate both the geometric accuracy and semantic segmentation performance using our generated data vs. ground truth. In a mean opinion score test, we further assess the perceptual quality of our generated point clouds. Our results demonstrate a significant quantitative and qualitative improvement in both geometry and semantics over traditional non-CNN-based up-sampling methods.
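One common way to make a per-point loss robust to missing measurements is to mask out invalid points before averaging. The sketch below shows that idea with an L1 distance; the function name `masked_l1_loss`, the L1 choice, and the boolean validity mask are assumptions, since the abstract does not describe the exact form of the modified loss.

```python
def masked_l1_loss(predicted, target, valid):
    """Mean absolute error over points that have a valid target measurement.

    predicted, target: lists of per-point distances
    valid:             list of booleans, False where the sensor returned nothing
    """
    total = 0.0
    count = 0
    for p, t, v in zip(predicted, target, valid):
        if v:  # skip points without a ground-truth measurement
            total += abs(p - t)
            count += 1
    return total / count if count else 0.0


# The second point has no measurement, so its large error is ignored.
loss = masked_l1_loss([1.0, 5.0, 2.0], [2.0, 0.0, 2.0], [True, False, True])
```

Without the mask, placeholder values at missing points would dominate the loss and pull the network toward reproducing them.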

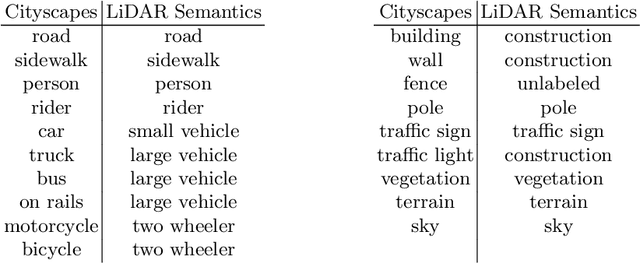

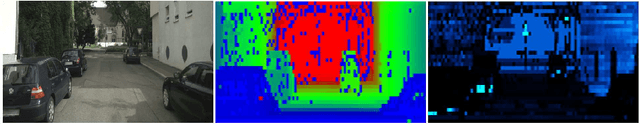

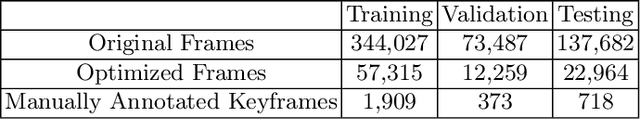

Boosting LiDAR-based Semantic Labeling by Cross-Modal Training Data Generation

Apr 26, 2018

Abstract: Mobile robots and autonomous vehicles rely on multi-modal sensor setups to perceive and understand their surroundings. Aside from cameras, LiDAR sensors represent a central component of state-of-the-art perception systems. In addition to accurate spatial perception, a comprehensive semantic understanding of the environment is essential for efficient and safe operation. In this paper, we present a novel deep neural network architecture called LiLaNet for point-wise, multi-class semantic labeling of semi-dense LiDAR data. The network utilizes virtual image projections of the 3D point clouds for efficient inference. Further, we propose an automated process for large-scale cross-modal training data generation called Autolabeling, in order to boost semantic labeling performance while keeping the manual annotation effort low. The effectiveness of the proposed network architecture as well as the automated data generation process is demonstrated on a manually annotated ground truth dataset. LiLaNet is shown to significantly outperform current state-of-the-art CNN architectures for LiDAR data. Applying our automatically generated large-scale training data yields a boost of up to 14 percentage points compared to networks trained on manually annotated data only.
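A virtual image projection of a point cloud can be sketched as a mapping from each 3D point to a pixel indexed by its azimuth and elevation angles. The following minimal example uses a spherical projection with a range channel; the function name, the field-of-view parameters, and the choice of a spherical rather than another projection model are assumptions and not taken from the abstract.

```python
import math


def project_to_range_image(points, height, width, fov_up_deg, fov_down_deg):
    """Project (x, y, z) points onto a height x width range image.

    Columns index the azimuth angle, rows index the elevation angle within
    [fov_down_deg, fov_up_deg]. Empty pixels hold None; if several points
    fall into one pixel, the closest one is kept.
    """
    fov_up = math.radians(fov_up_deg)
    fov = fov_up - math.radians(fov_down_deg)
    image = [[None] * width for _ in range(height)]
    for x, y, z in points:
        rng = math.sqrt(x * x + y * y + z * z)
        if rng == 0.0:
            continue
        azimuth = math.atan2(y, x)     # in [-pi, pi]
        elevation = math.asin(z / rng)
        col = int(0.5 * (1.0 - azimuth / math.pi) * width)
        row = int((fov_up - elevation) / fov * height)
        col = min(max(col, 0), width - 1)
        if not 0 <= row < height:
            continue  # point lies outside the vertical field of view
        if image[row][col] is None or rng < image[row][col]:
            image[row][col] = rng
    return image


# A single point straight ahead lands in the middle of the image.
range_image = project_to_range_image([(1.0, 0.0, 0.0)], 4, 8, 10.0, -10.0)
```

Once the scan is in this image-like form, standard 2D convolutional architectures can be applied to it, and the per-pixel predictions map back to individual points.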
