David E. Smith

A Unifying Bayesian Formulation of Measures of Interpretability in Human-AI

Apr 21, 2021

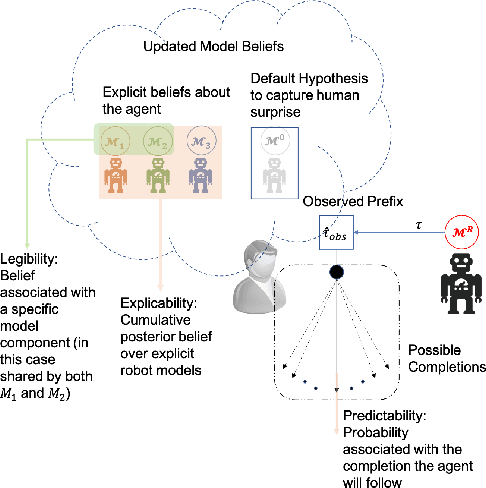

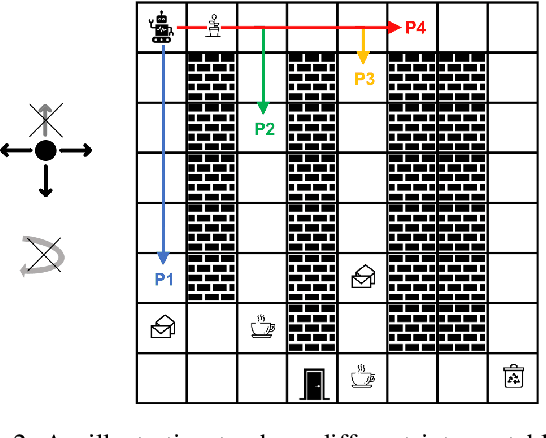

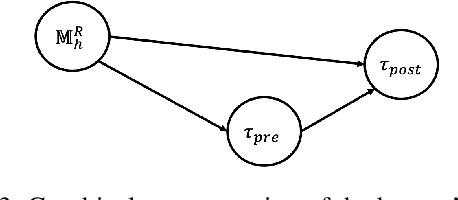

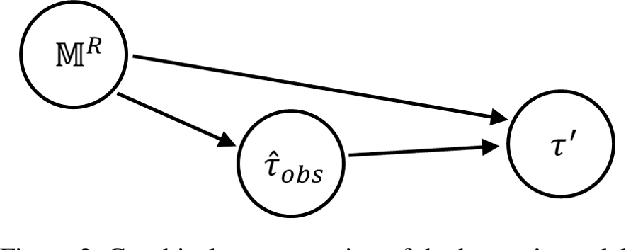

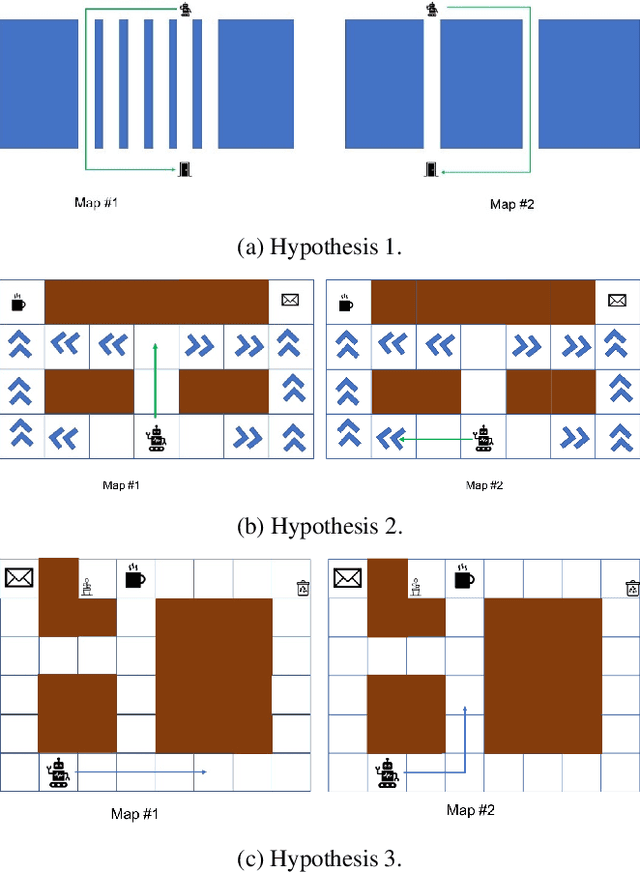

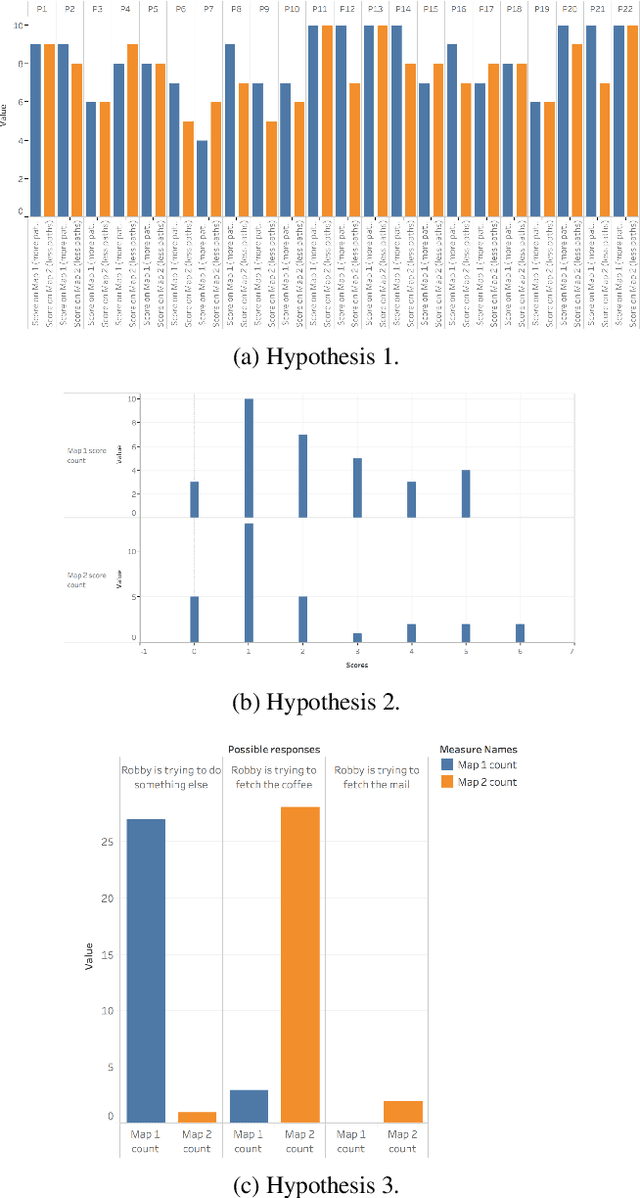

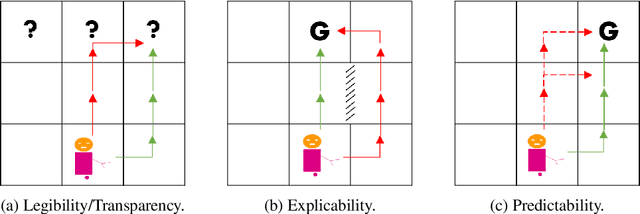

Abstract: Existing approaches for generating human-aware agent behaviors have considered different measures of interpretability in isolation. Further, these measures have been studied under differing assumptions, thus precluding the possibility of designing a single framework that captures these measures under the same assumptions. In this paper, we present a unifying Bayesian framework that models a human observer's evolving beliefs about an agent and thereby define the problem of Generalized Human-Aware Planning. We will show that the definitions of interpretability measures like explicability, legibility and predictability from the prior literature fall out as special cases of our general framework. Through this framework, we also bring to light a previously ignored fact: human-robot interactions are in effect open-world problems, particularly as a result of modeling the human's beliefs over the agent, since the human may not only hold beliefs unknown to the agent but may also form new hypotheses about the agent when presented with novel or unexpected behaviors.

Contrastive Explanations of Plans Through Model Restrictions

Mar 29, 2021

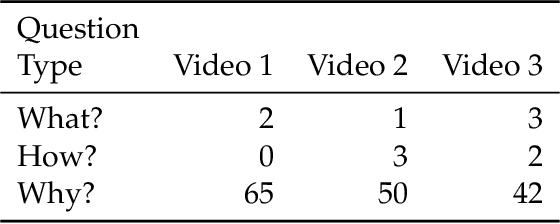

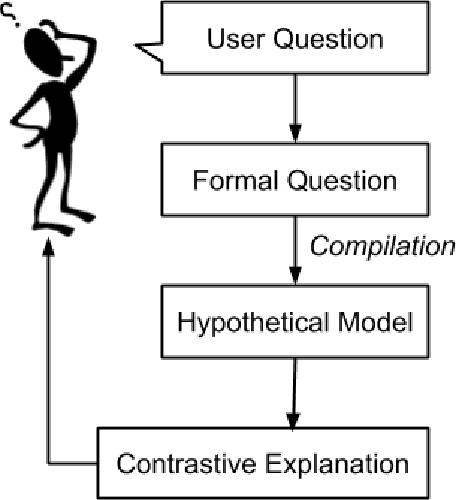

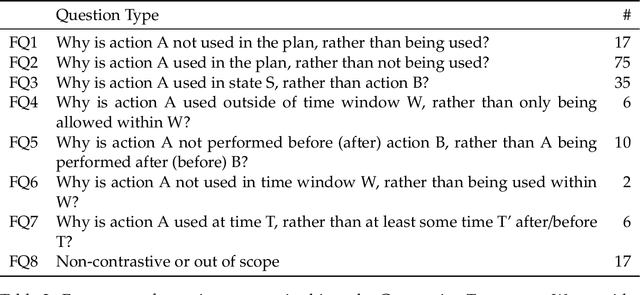

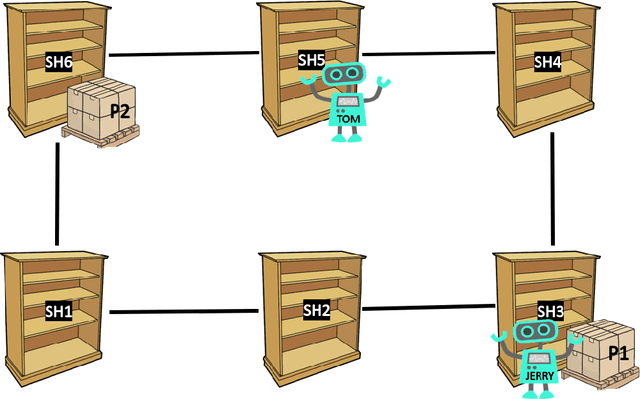

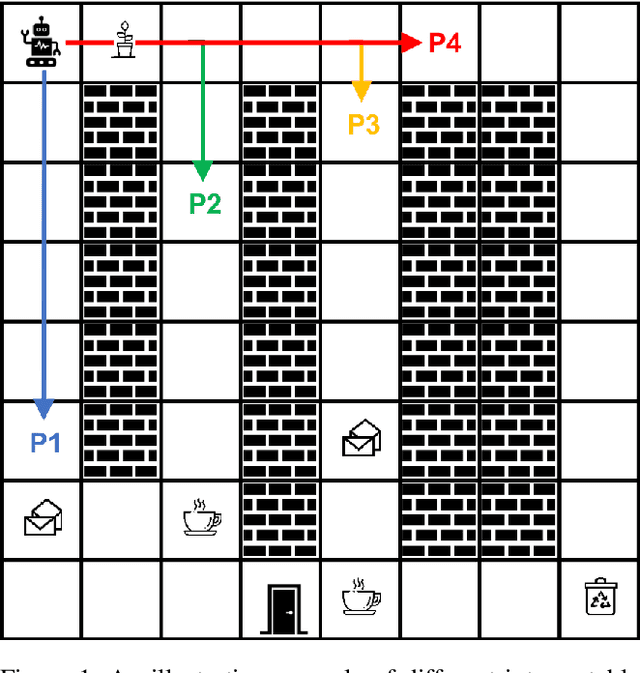

Abstract: In automated planning, the need for explanations arises when there is a mismatch between a proposed plan and the user's expectation. We frame Explainable AI Planning in the context of the plan negotiation problem, in which a succession of hypothetical planning problems is generated and solved. The object of the negotiation is for the user to understand and ultimately arrive at a satisfactory plan. We present the results of a user study that demonstrates that when users ask questions about plans, those questions are contrastive, i.e., "why A rather than B?". We use the data from this study to construct a taxonomy of user questions that often arise during plan negotiation. We formally define our approach to plan negotiation through model restriction as an iterative process. This approach generates hypothetical problems and contrastive plans by restricting the model through constraints implied by user questions. We formally define model-based compilations in PDDL2.1 of each constraint derived from a user question in the taxonomy, and empirically evaluate the compilations in terms of computational complexity. The compilations were implemented as part of an explanation framework that employs iterative model restriction. We demonstrate its benefits in a second user study.

A Bayesian Account of Measures of Interpretability in Human-AI Interaction

Nov 22, 2020

Abstract: Existing approaches for the design of interpretable agent behavior consider different measures of interpretability in isolation. In this paper we posit that, in the design and deployment of human-aware agents in the real world, notions of interpretability are just some among many considerations, and that the techniques developed in isolation lack two key properties needed to be useful when considered together: they must be able to deal with 1) their mutually competing properties; and 2) an open world where the human is not just there to interpret behavior in one specific form. To this end, we consider three well-known instances of interpretable behavior studied in existing literature -- namely, explicability, legibility, and predictability -- and propose a revised model where all these behaviors can be meaningfully modeled together. We will highlight interesting consequences of this unified model and motivate, through results of a user study, why this revision is necessary.

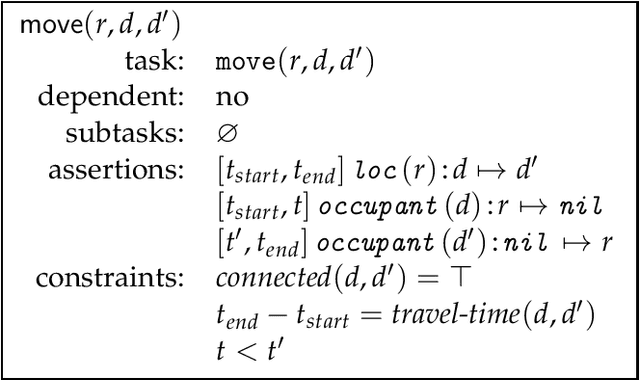

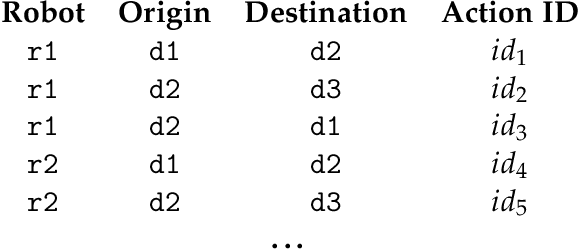

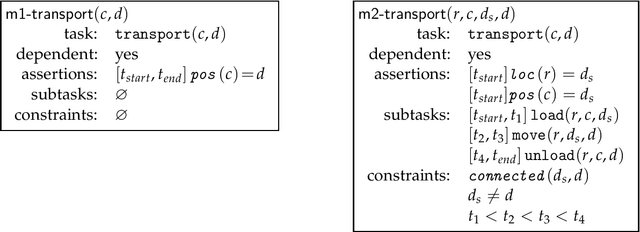

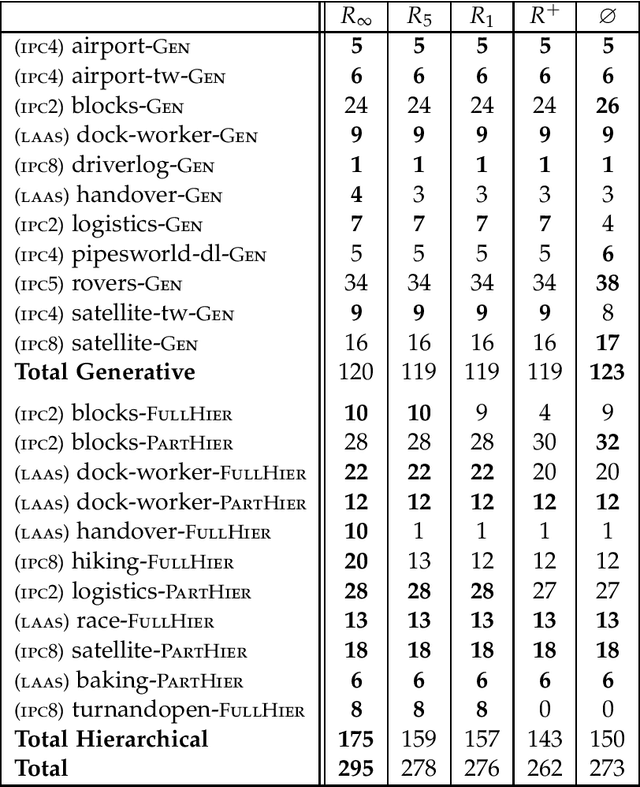

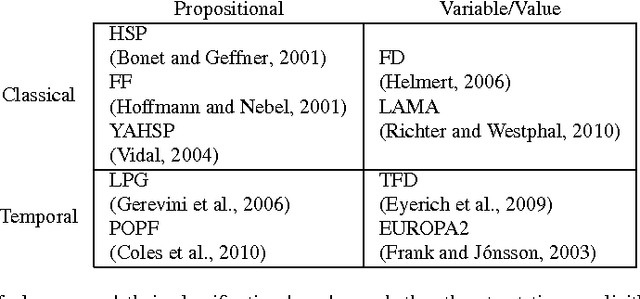

FAPE: a Constraint-based Planner for Generative and Hierarchical Temporal Planning

Oct 25, 2020

Abstract: Temporal planning offers numerous advantages when based on an expressive representation. Timelines have been known to provide the required expressiveness but at the cost of search efficiency. We propose here a temporal planner, called FAPE, which supports many of the expressive temporal features of the ANML modeling language without losing efficiency. FAPE's representation coherently integrates flexible timelines with hierarchical refinement methods that can provide efficient control knowledge. A novel reachability analysis technique is proposed and used to develop causal networks to constrain the search space. It is employed for the design of informed heuristics, inference methods and efficient search strategies. Experimental results on common benchmarks in the field make it possible to assess the components and search strategies of FAPE, and to compare it to IPC planners. The results show the proposed approach to be competitive with less expressive planners and often superior when hierarchical control knowledge is provided. FAPE, a freely available system, provides other features, not covered here, such as the integration of planning with acting, and the handling of sensing actions in partially observable environments.

Explicability? Legibility? Predictability? Transparency? Privacy? Security? The Emerging Landscape of Interpretable Agent Behavior

Nov 23, 2018

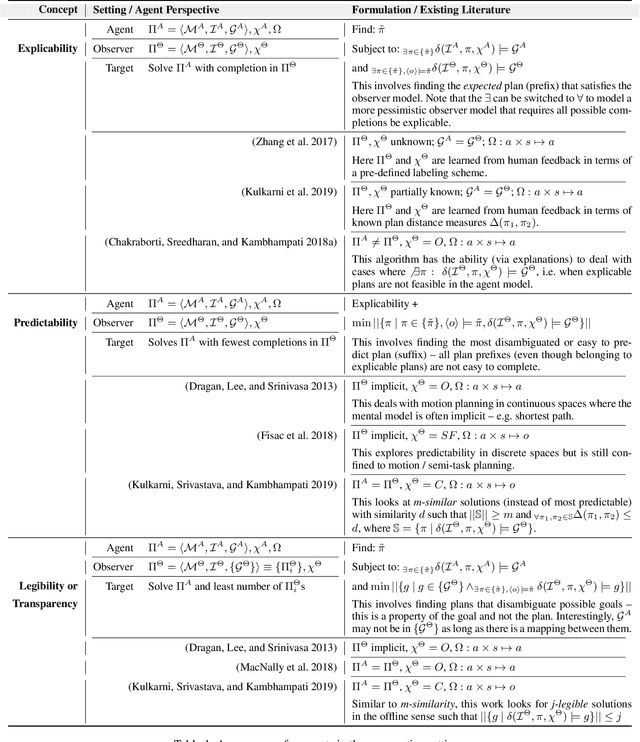

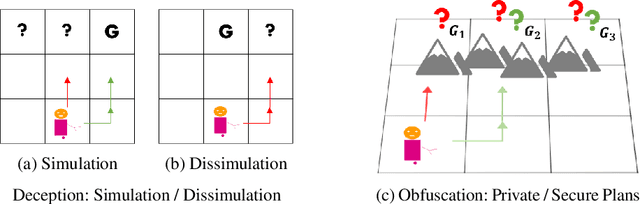

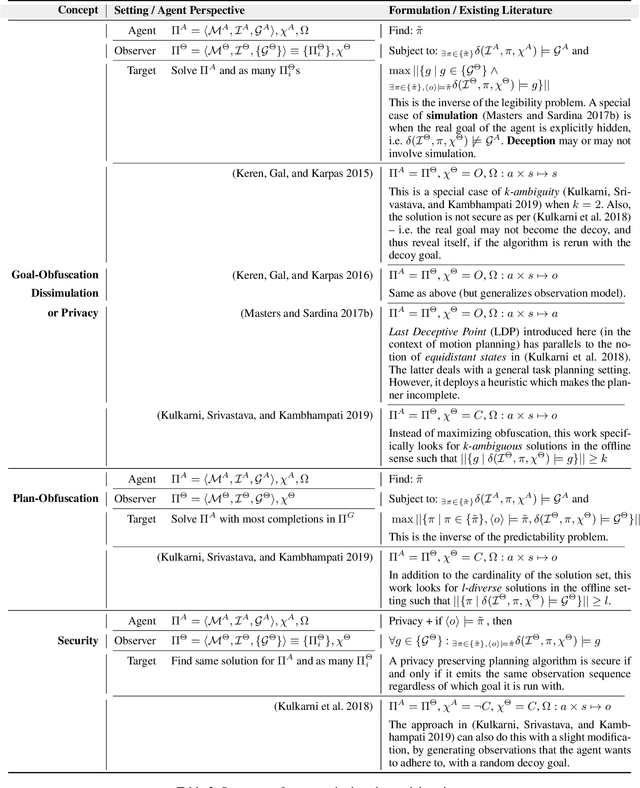

Abstract: There has been significant interest of late in generating behavior of agents that is interpretable to the human (observer) in the loop. However, the work in this area has typically lacked coherence on the topic, with proposed solutions for "explicable", "legible", "predictable" and "transparent" planning with overlapping, and sometimes conflicting, semantics all aimed at some notion of understanding what intentions the observer will ascribe to an agent by observing its behavior. This is also true for the recent works on "security" and "privacy" of plans which are also trying to answer the same question, but from the opposite point of view -- i.e. when the agent is trying to hide instead of revealing its intentions. This paper attempts to provide a workable taxonomy of relevant concepts in this exciting and emerging field of inquiry.

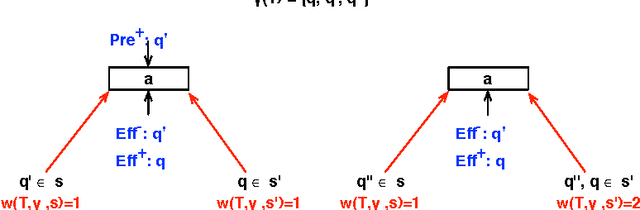

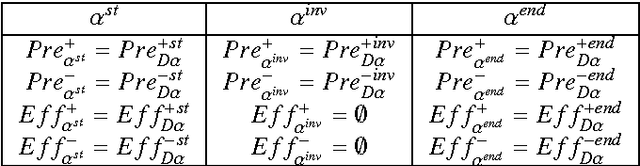

Extracting Lifted Mutual Exclusion Invariants from Temporal Planning Domains

Feb 07, 2017

Abstract: We present a technique for automatically extracting mutual exclusion invariants from temporal planning instances. It first identifies a set of invariant templates by inspecting the lifted representation of the domain and then checks these templates against properties that assure invariance. Our technique builds on other approaches to invariant synthesis presented in the literature, but departs from their limited focus on instantaneous actions by addressing temporal domains. To deal with time, we formulate invariance conditions that account for the entire structure of the actions and the possible concurrent interactions between them. As a result, we construct a significantly more comprehensive technique than previous methods, which is able to find not only invariants for temporal domains, but also a broader set of invariants for non-temporal domains. The experimental results reported in this paper provide evidence that identifying a broader set of invariants results in the generation of fewer multi-valued state variables with larger domains. We show that, in turn, this reduction in the number of variables reflects positively on the performance of a number of temporal planners that use a variable/value representation by significantly reducing their running time.

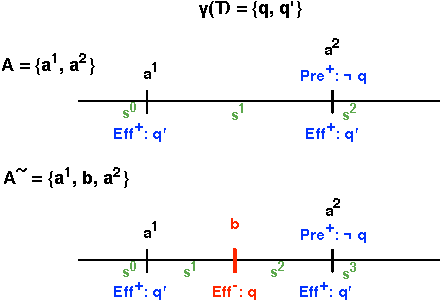

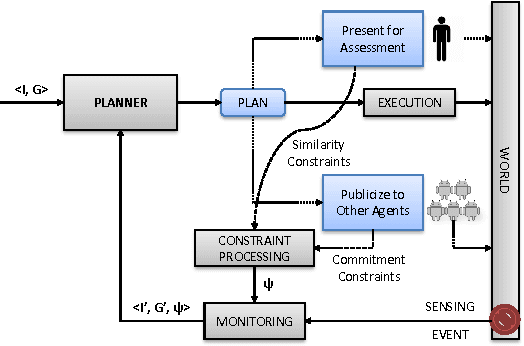

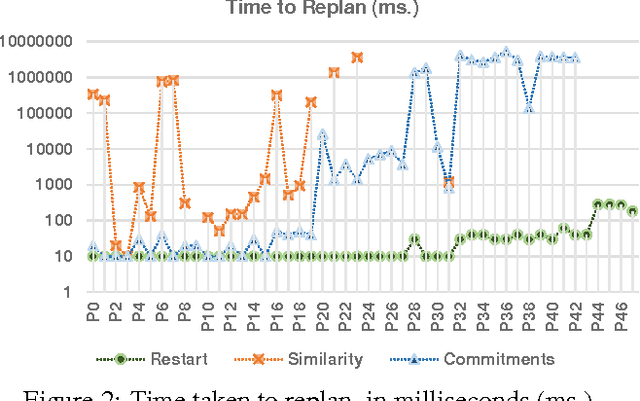

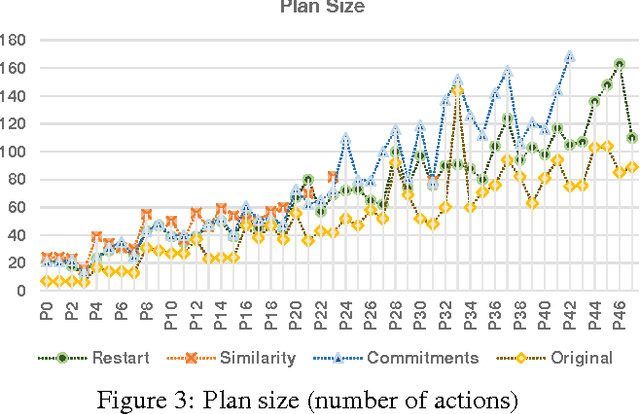

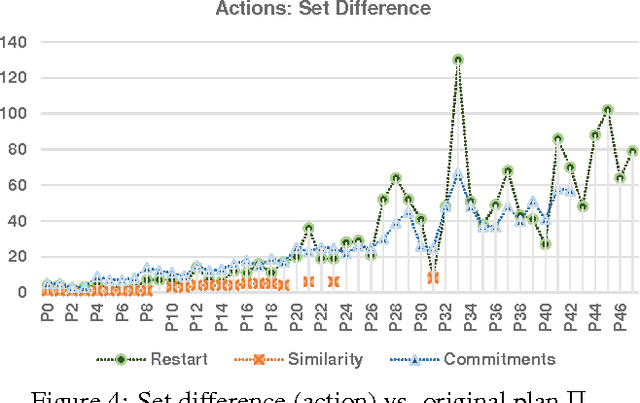

The Metrics Matter! On the Incompatibility of Different Flavors of Replanning

May 12, 2014

Abstract: When autonomous agents are executing in the real world, the state of the world as well as the objectives of the agent may change from the agent's original model. In such cases, the agent's planning process must modify the plan under execution to make it amenable to the new conditions, and to resume execution. This brings up the replanning problem, and the various techniques that have been proposed to solve it. In all, three main techniques -- based on three different metrics -- have been proposed in prior automated planning work. An open question is whether these metrics are interchangeable; answering this requires a normalized comparison of the various replanning quality metrics. In this paper, we show that it is possible to support such a comparison by compiling all the respective techniques into a single substrate. Using this novel compilation, we demonstrate that these different metrics are not interchangeable, and that they are not good surrogates for each other. Thus we focus attention on the incompatibility of the various replanning flavors with each other, founded in the differences between the metrics that they respectively seek to optimize.
