Benjamin Krarup

Understanding a Robot's Guiding Ethical Principles via Automatically Generated Explanations

Jun 20, 2022

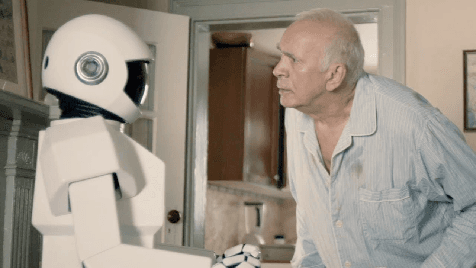

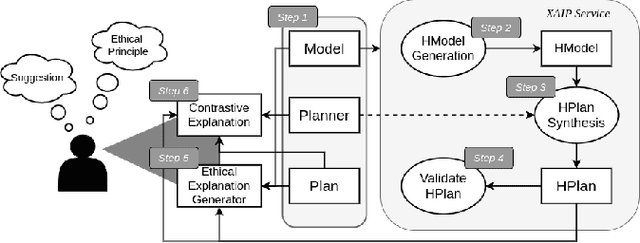

Abstract: The continued development of robots has enabled their wider use in human surroundings. Robots are increasingly trusted to make important decisions with potentially critical outcomes. It is therefore essential to consider the ethical principles under which robots operate. In this paper, we examine how contrastive and non-contrastive explanations can be used to understand the ethics of robot action plans. We build upon an existing ethical framework to allow users to make suggestions about plans and receive automatically generated contrastive explanations. Results of a user study indicate that the generated explanations help humans understand the ethical principles that underlie a robot's plan.

Contrastive Explanations of Plans Through Model Restrictions

Mar 29, 2021

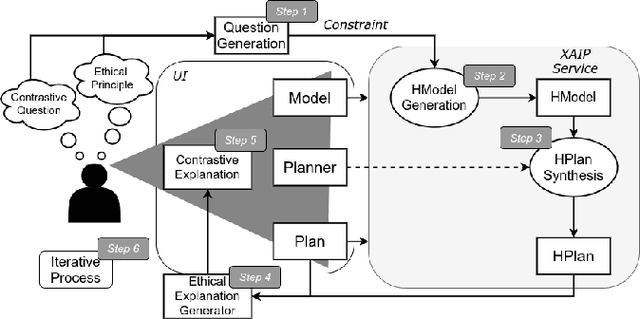

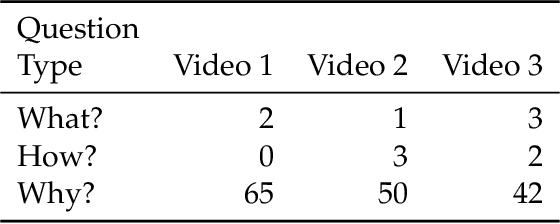

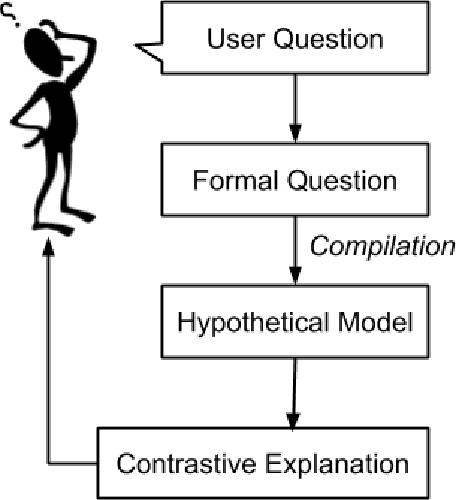

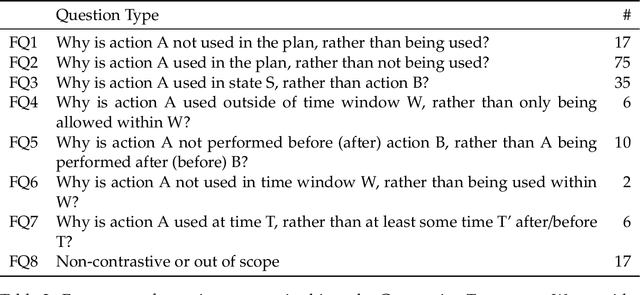

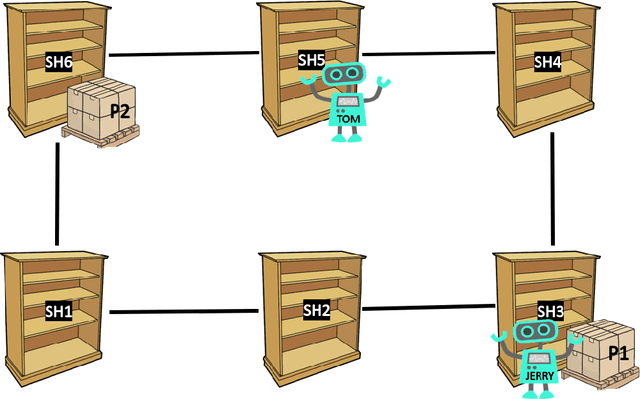

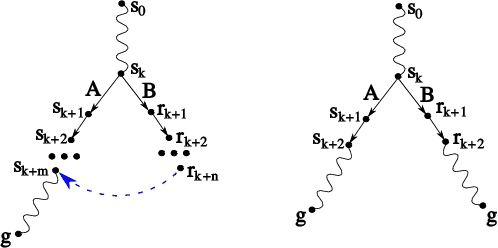

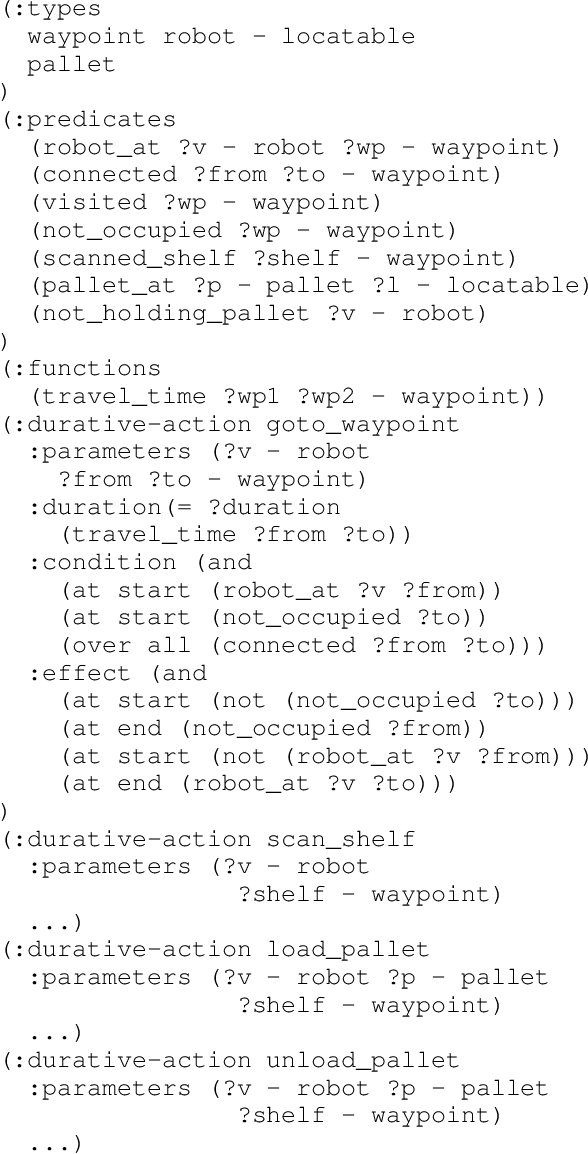

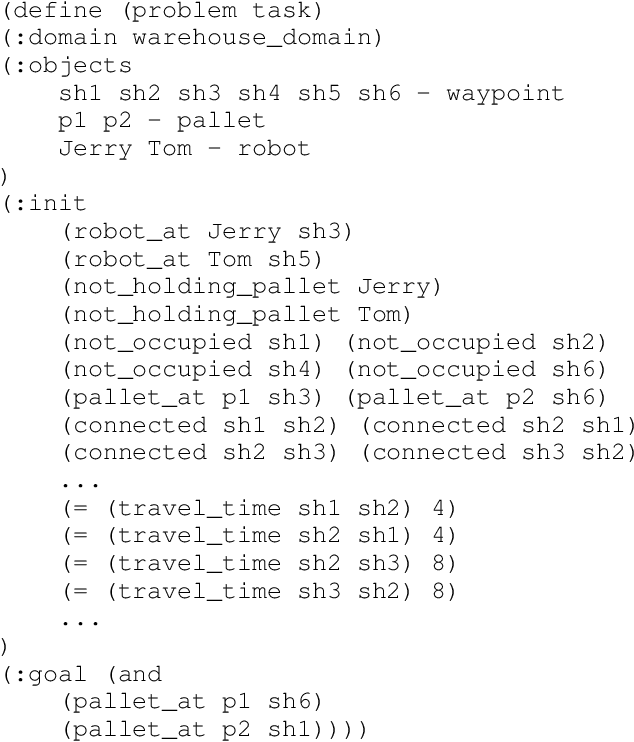

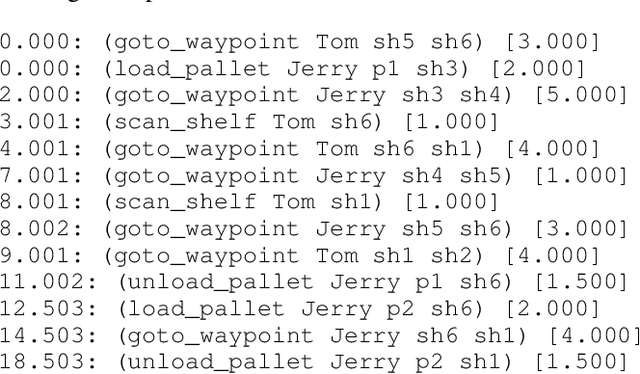

Abstract: In automated planning, the need for explanations arises when there is a mismatch between a proposed plan and the user's expectation. We frame Explainable AI Planning in the context of the plan negotiation problem, in which a succession of hypothetical planning problems is generated and solved. The object of the negotiation is for the user to understand the proposed plan and ultimately arrive at a satisfactory one. We present the results of a user study demonstrating that when users ask questions about plans, those questions are contrastive, i.e., "why A rather than B?". We use the data from this study to construct a taxonomy of user questions that often arise during plan negotiation. We formally define our approach to plan negotiation through model restriction as an iterative process. This approach generates hypothetical problems and contrastive plans by restricting the model through constraints implied by user questions. We formally define model-based compilations in PDDL2.1 of each constraint derived from a user question in the taxonomy, and empirically evaluate the compilations in terms of computational complexity. The compilations were implemented as part of an explanation framework that employs iterative model restriction. We demonstrate its benefits in a second user study.

Towards Contrastive Explanations for Comparing the Ethics of Plans

Jun 22, 2020

Abstract: The development of robotics and AI agents has enabled their wider use in human surroundings. AI agents are increasingly trusted to make important decisions with potentially critical outcomes. It is essential to consider the ethical consequences of the decisions made by these systems. In this paper, we show how contrastive explanations can be used to compare the ethics of plans. We build upon an existing ethical framework to allow users to make suggestions about plans and receive contrastive explanations.

Towards Explainable AI Planning as a Service

Aug 14, 2019

Abstract: Explainable AI is an important area of research within which Explainable Planning is an emerging topic. In this paper, we argue that Explainable Planning can be designed as a service, that is, as a wrapper around an existing planning system that uses the existing planner to assist in answering contrastive questions. We introduce a prototype framework to facilitate this, along with some examples of how a planner can be used to address certain types of contrastive questions. We discuss the main advantages and limitations of such an approach, and we identify open questions for Explainable Planning as a service that point to several possible research directions.
