Daniel Watzenig

MAP: End-to-End Autonomous Driving with Map-Assisted Planning

Sep 17, 2025

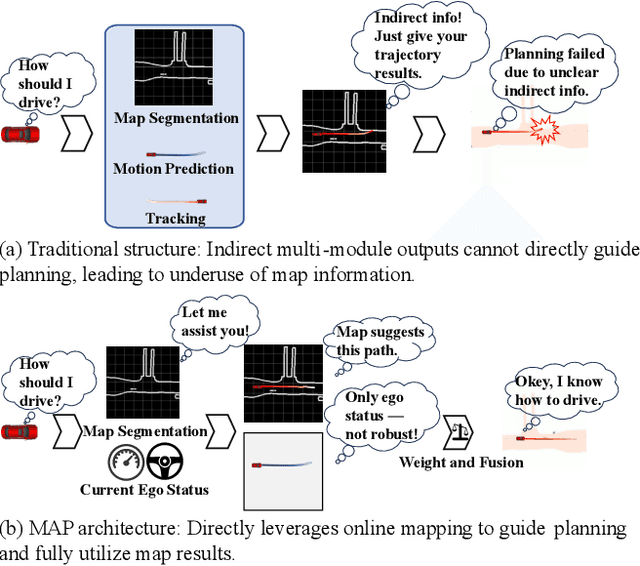

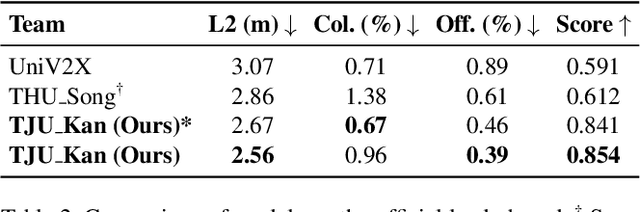

Abstract: In recent years, end-to-end autonomous driving has attracted increasing attention for its ability to jointly model perception, prediction, and planning within a unified framework. However, most existing approaches underutilize the online mapping module, leaving its potential to enhance trajectory planning largely untapped. This paper proposes MAP (Map-Assisted Planning), a novel map-assisted end-to-end trajectory planning framework. MAP explicitly integrates segmentation-based map features and the current ego status through a Plan-enhancing Online Mapping module, an Ego-status-guided Planning module, and a Weight Adapter based on current ego status. Experiments conducted on the DAIR-V2X-seq-SPD dataset demonstrate that the proposed method achieves a 16.6% reduction in L2 displacement error, a 56.2% reduction in off-road rate, and a 44.5% improvement in overall score compared to the UniV2X baseline, even without post-processing. Furthermore, it achieves top ranking in Track 2 of the End-to-End Autonomous Driving through V2X Cooperation Challenge of MEIS Workshop @CVPR2025, outperforming the second-best model by 39.5% in terms of overall score. These results highlight the effectiveness of explicitly leveraging semantic map features in planning and suggest new directions for improving structure design in end-to-end autonomous driving systems. Our code is available at https://gitee.com/kymkym/map.git

Vision Foundation Model Embedding-Based Semantic Anomaly Detection

May 12, 2025

Abstract: Semantic anomalies are contextually invalid or unusual combinations of familiar visual elements that can cause undefined behavior and failures in system-level reasoning for autonomous systems. This work explores semantic anomaly detection by leveraging the semantic priors of state-of-the-art vision foundation models, operating directly on the image. We propose a framework that compares local vision embeddings from runtime images to a database of nominal scenarios in which the autonomous system is deemed safe and performant. In this work, we consider two variants of the proposed framework: one using raw grid-based embeddings, and another leveraging instance segmentation for object-centric representations. To further improve robustness, we introduce a simple filtering mechanism to suppress false positives. Our evaluations on CARLA-simulated anomalies show that the instance-based method with filtering achieves performance comparable to GPT-4o, while providing precise anomaly localization. These results highlight the potential utility of vision embeddings from foundation models for real-time anomaly detection in autonomous systems.
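
The comparison of runtime embeddings against a nominal database can be illustrated with a minimal sketch. It assumes cosine similarity as the distance measure and a fixed threshold; the abstract specifies neither, and the function names `anomaly_scores` and `flag_anomalies` are hypothetical. The paper's filtering step and the embedding extraction itself are not shown.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def anomaly_scores(runtime_embeddings, nominal_db):
    """Score each local embedding as 1 - (best similarity to any nominal embedding).

    Assumption: cosine similarity and nearest-neighbour lookup; the actual
    metric used by the authors is not stated in the abstract.
    """
    return [
        1.0 - max(cosine_similarity(e, n) for n in nominal_db)
        for e in runtime_embeddings
    ]


def flag_anomalies(scores, threshold=0.5):
    """Return indices of embeddings whose score exceeds a (hypothetical) threshold."""
    return [i for i, s in enumerate(scores) if s > threshold]


# Toy example: two nominal embeddings and two runtime patches.
nominal_db = [[1.0, 0.0], [0.0, 1.0]]
runtime = [[2.0, 0.0], [-1.0, -1.0]]
scores = anomaly_scores(runtime, nominal_db)
flagged = flag_anomalies(scores)
```

Because each score is tied to one patch or object instance, the flagged indices point back to image regions, which is what makes localization possible in this kind of scheme.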

Thermal-LiDAR Fusion for Robust Tunnel Localization in GNSS-Denied and Low-Visibility Conditions

May 06, 2025

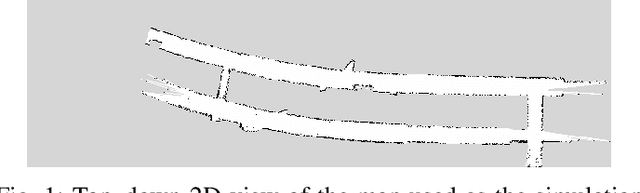

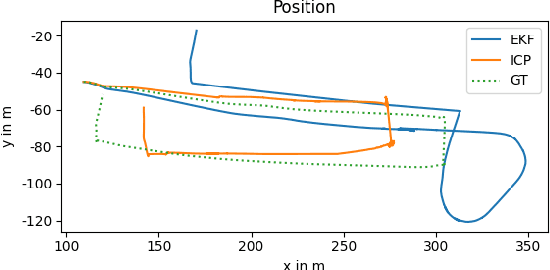

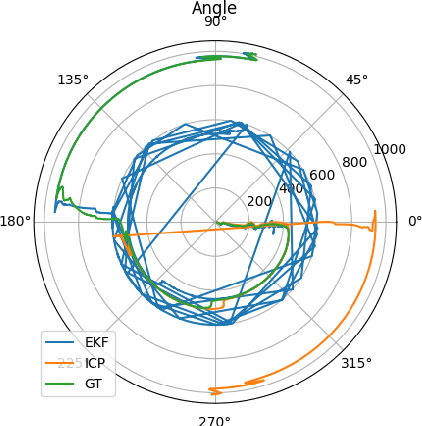

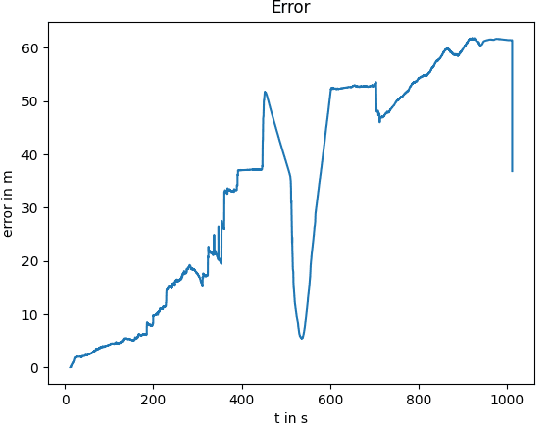

Abstract: Despite significant progress in autonomous navigation, a critical gap remains in ensuring reliable localization in hazardous environments such as tunnels, urban disaster zones, and underground structures. Tunnels present a uniquely difficult scenario: they are not only prone to GNSS signal loss, but also offer few features for visual localization due to their repetitive walls and poor lighting. These conditions degrade conventional vision-based and LiDAR-based systems, which rely on distinguishable environmental features. To address this, we propose a novel sensor fusion framework that integrates a thermal camera with a LiDAR to enable robust localization in tunnels and other perceptually degraded environments. The thermal camera provides resilience in low-light or smoke conditions, while the LiDAR delivers precise depth perception and structural awareness. By combining these sensors, our framework ensures continuous and accurate localization across diverse and dynamic environments. We use an Extended Kalman Filter (EKF) to fuse multi-sensor inputs, and leverage visual odometry and SLAM (Simultaneous Localization and Mapping) techniques to process the sensor data, enabling robust motion estimation and mapping even in GNSS-denied environments. This fusion of sensor modalities not only enhances system resilience but also provides a scalable solution for cyber-physical systems in connected and autonomous vehicles (CAVs). To validate the framework, we conduct tests in a tunnel environment, simulating sensor degradation and visibility challenges. The results demonstrate that our method sustains accurate localization where standard approaches deteriorate due to the tunnel's featureless geometry. The framework's versatility makes it a promising solution for autonomous vehicles, inspection robots, and other cyber-physical systems operating in constrained, perceptually poor environments.
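
The predict/update cycle behind this kind of filter-based fusion can be sketched in one dimension. This is a deliberately simplified illustration, not the authors' implementation: with a scalar, linear motion and measurement model the EKF reduces to a standard Kalman filter, and all names and values below (`fuse_step`, the noise variances, the two measurement sources) are assumptions made for the example.

```python
def predict(x, p, u, q):
    """Propagate position x (variance p) by motion increment u with process noise q."""
    return x + u, p + q


def update(x, p, z, r):
    """Fuse one direct position measurement z with measurement variance r."""
    k = p / (p + r)  # Kalman gain: how much to trust the measurement
    return x + k * (z - x), (1.0 - k) * p


def fuse_step(x, p, u, q, measurements):
    """One filter cycle: predict, then apply each sensor's measurement in turn."""
    x, p = predict(x, p, u, q)
    for z, r in measurements:
        x, p = update(x, p, z, r)
    return x, p


# Hypothetical values: a thermal visual-odometry estimate (variance 1.0)
# and a LiDAR-based estimate (variance 0.5) of position along the tunnel.
x_est, p_est = fuse_step(
    x=0.0, p=1.0, u=1.0, q=0.0,
    measurements=[(2.0, 1.0), (1.5, 0.5)],
)
```

Each update shrinks the variance, so a sensor that degrades (for example a camera in smoke) can be down-weighted simply by raising its measurement variance `r`. A full EKF would replace the scalar models with nonlinear functions and their Jacobians.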

A Data-Centric Approach to 3D Semantic Segmentation of Railway Scenes

Apr 25, 2025

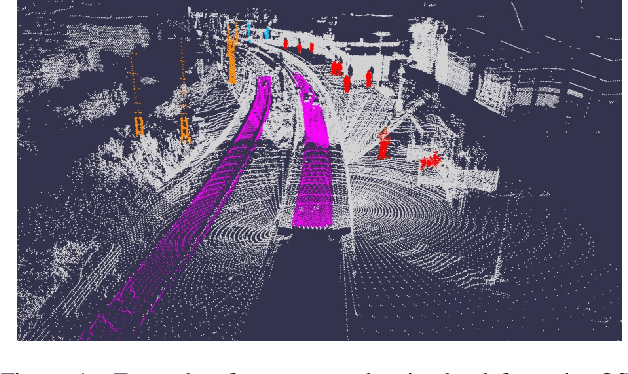

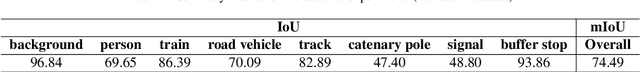

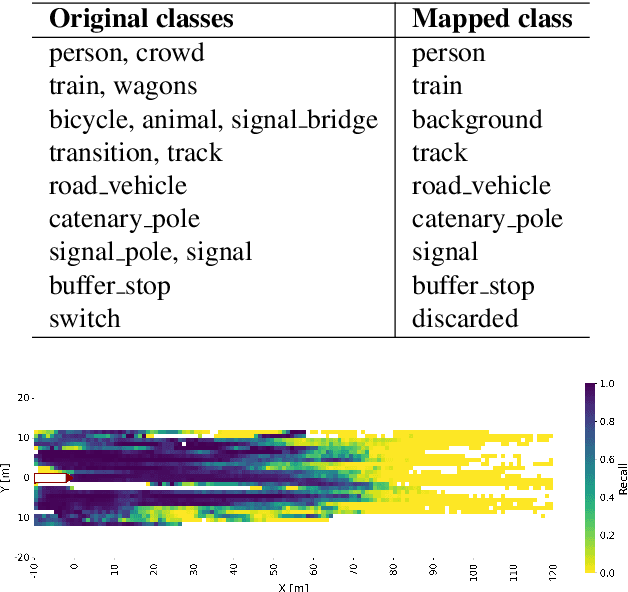

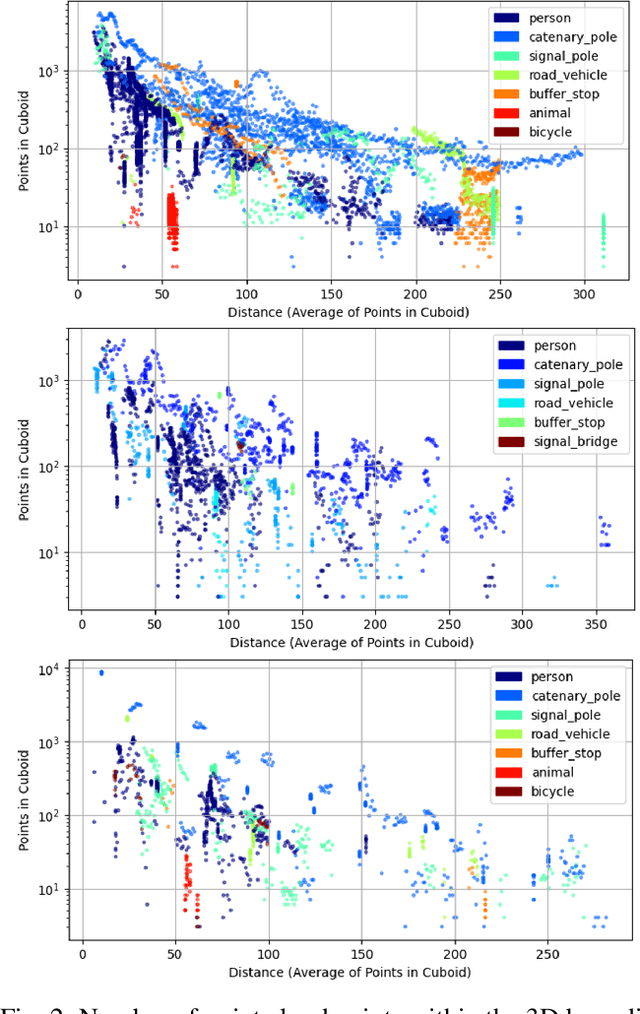

Abstract: LiDAR-based semantic segmentation is critical for autonomous trains, requiring accurate predictions across varying distances. This paper introduces two targeted data augmentation methods designed to improve segmentation performance on the railway-specific OSDaR23 dataset. The person instance pasting method enhances segmentation of pedestrians at distant ranges by injecting realistic variations into the dataset. The track sparsification method redistributes point density in LiDAR scans, improving track segmentation at far distances with minimal impact on close-range accuracy. Both methods are evaluated using a state-of-the-art 3D semantic segmentation network, demonstrating significant improvements in distant-range performance while maintaining robustness in close-range predictions. We establish the first 3D semantic segmentation benchmark for OSDaR23, demonstrating the potential of data-centric approaches to address railway-specific challenges in autonomous train perception.

LiDAR-Guided Monocular 3D Object Detection for Long-Range Railway Monitoring

Apr 25, 2025

Abstract: Railway systems, particularly in Germany, require high levels of automation to address legacy infrastructure challenges and increase train traffic safely. A key component of automation is robust long-range perception, essential for early hazard detection, such as obstacles at level crossings or pedestrians on tracks. Unlike automotive systems with braking distances of ~70 meters, trains require perception ranges exceeding 1 km. This paper presents a deep-learning-based approach for long-range 3D object detection tailored for autonomous trains. The method relies solely on monocular images, inspired by the Faraway-Frustum approach, and incorporates LiDAR data during training to improve depth estimation. The proposed pipeline consists of four key modules: (1) a modified YOLOv9 for 2.5D object detection, (2) a depth estimation network, and (3-4) dedicated short- and long-range 3D detection heads. Evaluations on the OSDaR23 dataset demonstrate the effectiveness of the approach in detecting objects at distances of up to 250 meters. Results highlight its potential for railway automation and outline areas for future improvement.

WS-DETR: Robust Water Surface Object Detection through Vision-Radar Fusion with Detection Transformer

Apr 10, 2025

Abstract: Robust object detection for Unmanned Surface Vehicles (USVs) in complex water environments is essential for reliable navigation and operation. Specifically, water surface object detection faces challenges from blurred edges and diverse object scales. Although vision-radar fusion offers a feasible solution, existing approaches suffer from cross-modal feature conflicts, which negatively affect model robustness. To address this problem, we propose a robust vision-radar fusion model, WS-DETR. In particular, we first introduce a Multi-Scale Edge Information Integration (MSEII) module to enhance edge perception and a Hierarchical Feature Aggregator (HiFA) to boost multi-scale object detection in the encoder. Then, we adopt self-moving point representations for continuous convolution and residual connection to efficiently extract irregular features under the scenarios of irregular point cloud data. To further mitigate cross-modal conflicts, an Adaptive Feature Interactive Fusion (AFIF) module is introduced to integrate visual and radar features through geometric alignment and semantic fusion. Extensive experiments on the WaterScenes dataset demonstrate that WS-DETR achieves state-of-the-art (SOTA) performance, maintaining its superiority even under adverse weather and lighting conditions.

Attention-Augmented Inverse Reinforcement Learning with Graph Convolutions for Multi-Agent Task Allocation

Apr 08, 2025

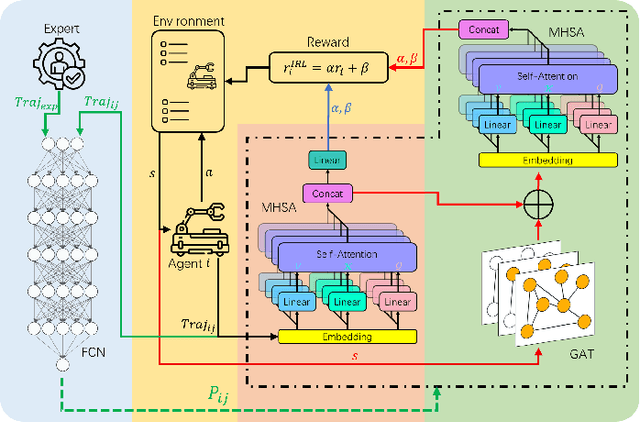

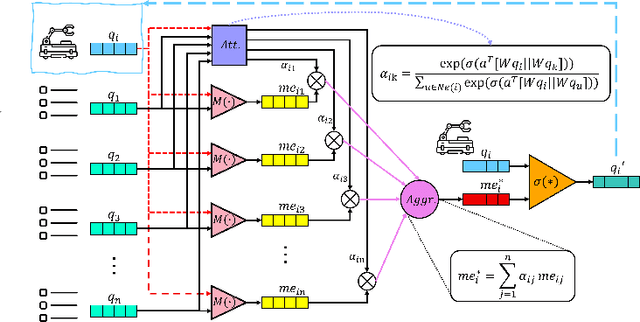

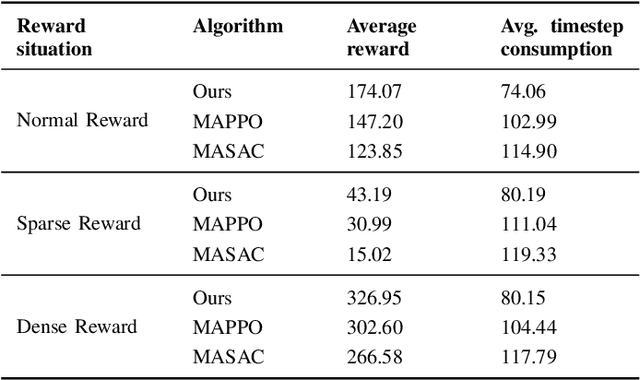

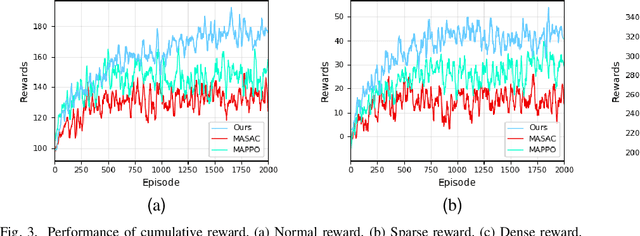

Abstract: Multi-agent task allocation (MATA) plays a vital role in cooperative multi-agent systems, with significant implications for applications such as logistics, search and rescue, and robotic coordination. Although traditional deep reinforcement learning (DRL) methods have been shown to be promising, their effectiveness is hindered by a reliance on manually designed reward functions and inefficiencies in dynamic environments. In this paper, an inverse reinforcement learning (IRL)-based framework is proposed, in which multi-head self-attention (MHSA) and graph attention mechanisms are incorporated to enhance reward function learning and task execution efficiency. Expert demonstrations are utilized to infer optimal reward densities, allowing dependence on handcrafted designs to be reduced and adaptability to be improved. Extensive experiments validate the superiority of the proposed method over widely used multi-agent reinforcement learning (MARL) algorithms in terms of both cumulative rewards and task execution efficiency.

A Cost-Effective Approach to Smooth A* Path Planning for Autonomous Vehicles

Nov 27, 2024

Abstract: Path planning for wheeled mobile robots is a critical component in the field of automation and intelligent transportation systems. Car-like vehicles, which have non-holonomic constraints on their movement capability, impose additional requirements on the planned paths. Traditional path planning algorithms, such as A*, are widely used due to their simplicity and effectiveness in finding optimal paths in complex environments. However, these algorithms often do not consider vehicle dynamics, resulting in paths that are infeasible or impractical for actual driving. Specifically, a path that minimizes the number of grid cells may still be too curvy or sharp for a car-like vehicle to navigate smoothly. This paper addresses the need for a path planning solution that not only finds a feasible path but also ensures that the path is smooth and drivable. By adapting the A* algorithm for a curvature constraint and incorporating a cost function that considers the smoothness of possible paths, we aim to bridge the gap between grid-based path planning and smooth paths that are drivable by car-like vehicles. The proposed method leverages motion primitives, pre-computed using a ribbon-based path planner that produces smooth paths of minimum curvature. The motion primitives guide the A* algorithm in finding paths of minimal length and curvature. With the proposed modification to the A* algorithm, the planned paths can be constrained to have a minimum turning radius much larger than the grid size. We demonstrate the effectiveness of the proposed algorithm in different unstructured environments. In a two-stage planning approach, first the modified A* algorithm finds a grid-based path and the ribbon-based path planner creates a smooth path within the area of grid cells. The resulting paths are smooth with small curvatures independent of the orientation of the grid axes and even in the presence of sharp obstacles.
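
The idea of adding a smoothness term to the A* cost can be illustrated with a much simpler stand-in than the paper's method: a 4-connected grid search whose state includes the current heading, with a fixed penalty for every change of heading. The motion primitives and the ribbon-based planner described above are not reproduced here; `smooth_astar` and `turn_weight` are hypothetical names chosen for this sketch.

```python
import heapq

# Unit moves on a 4-connected grid; the index doubles as the heading id.
MOVES = [(1, 0), (0, 1), (-1, 0), (0, -1)]


def smooth_astar(grid, start, goal, turn_weight=0.5):
    """A* over (cell, heading) states; each heading change costs turn_weight extra.

    grid[r][c] is truthy for obstacles. Returns (path, cost) or (None, inf).
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance stays admissible because every step costs >= 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    start_state = (start, -1)  # heading -1: no heading yet, first move is free
    best = {start_state: 0.0}
    parent = {start_state: None}
    frontier = [(heuristic(start), 0.0, start_state)]

    while frontier:
        _, g, state = heapq.heappop(frontier)
        if g > best[state]:
            continue  # stale queue entry
        cell, heading = state
        if cell == goal:
            path = []
            while state is not None:
                path.append(state[0])
                state = parent[state]
            return path[::-1], g
        for d, (dr, dc) in enumerate(MOVES):
            nr, nc = cell[0] + dr, cell[1] + dc
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                continue
            step = 1.0 + (turn_weight if heading not in (-1, d) else 0.0)
            nxt, ng = ((nr, nc), d), g + step
            if ng < best.get(nxt, float("inf")):
                best[nxt] = ng
                parent[nxt] = state
                heapq.heappush(frontier, (ng + heuristic((nr, nc)), ng, nxt))
    return None, float("inf")


# Empty 3x3 grid: the cheapest route uses 4 steps and a single turn.
empty = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
path, cost = smooth_astar(empty, (0, 0), (2, 2))
```

Carrying the heading in the search state is what lets the cost function see turns at all; a plain cell-only A* would treat a staircase path and an L-shaped path of equal length as equivalent.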

Segment-Anything Models Achieve Zero-shot Robustness in Autonomous Driving

Aug 19, 2024

Abstract: Semantic segmentation is a significant perception task in autonomous driving, but it is vulnerable to adversarial examples. In the past few years, deep learning has gradually transitioned from convolutional neural network (CNN) models with a relatively small number of parameters to foundation models with a huge number of parameters. The segment-anything model (SAM) is a generalized image segmentation framework that is capable of handling various types of images and is able to recognize and segment arbitrary objects in an image without the need to train on a specific object. It is a unified model that can handle diverse downstream tasks, including semantic segmentation, object detection, and tracking. In the task of semantic segmentation for autonomous driving, it is important to study the zero-shot adversarial robustness of SAM. Therefore, we deliver a systematic empirical study on the robustness of SAM without additional training. Based on the experimental results, the zero-shot adversarial robustness of SAM under black-box corruptions and white-box adversarial attacks is acceptable, even without the need for additional training. The finding of this study is insightful in that the gigantic model parameters and huge amounts of training data lead to the phenomenon of emergence, which builds a guarantee of adversarial robustness. SAM is a vision foundation model that can be regarded as an early prototype of an artificial general intelligence (AGI) pipeline. In such a pipeline, a unified model can handle diverse tasks. Therefore, this research not only inspects the impact of vision foundation models on safe autonomous driving but also provides a perspective on developing trustworthy AGI. The code is available at: https://github.com/momo1986/robust_sam_iv.

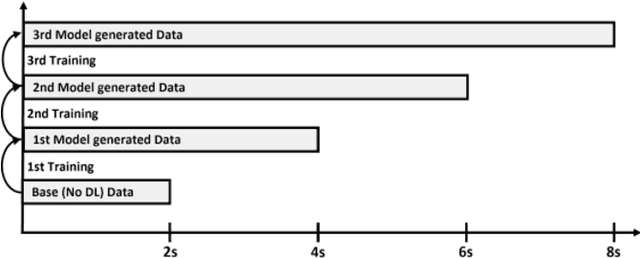

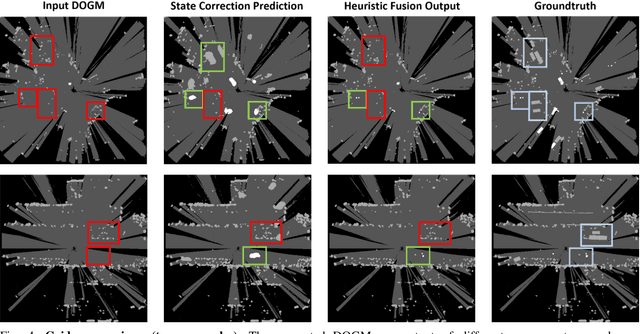

Deep Learning-Driven State Correction: A Hybrid Architecture for Radar-Based Dynamic Occupancy Grid Mapping

May 22, 2024

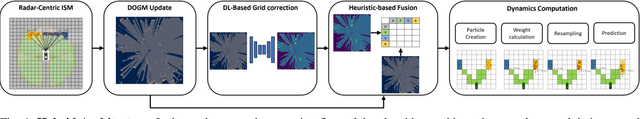

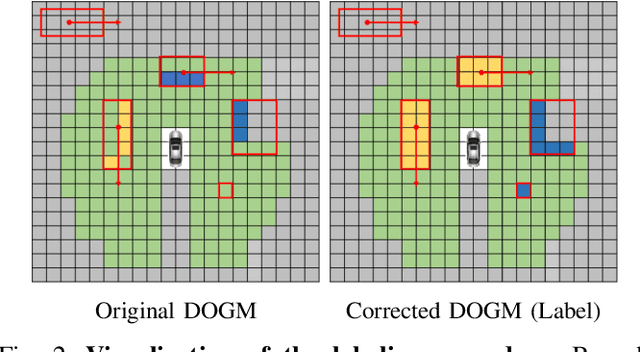

Abstract: This paper introduces a novel hybrid architecture that enhances radar-based Dynamic Occupancy Grid Mapping (DOGM) for autonomous vehicles, integrating deep learning for state classification. Traditional radar-based DOGM often faces challenges in accurately distinguishing between static and dynamic objects. Our approach addresses this limitation by introducing a neural network-based DOGM state correction mechanism, designed as a semantic segmentation task, to refine the accuracy of the occupancy grid. Additionally, a heuristic fusion approach is proposed that enhances performance without compromising safety. We extensively evaluate this hybrid architecture on the NuScenes Dataset, focusing on its ability to improve dynamic object detection as well as grid quality. The results show clear improvements in the detection capabilities of dynamic objects, highlighting the effectiveness of the deep learning-enhanced state correction in radar-based DOGM.
