Daniel Büscher

Evaluation of a Smart Mobile Robotic System for Industrial Plant Inspection and Supervision

Feb 12, 2024

Abstract: Automated and autonomous industrial inspection is a longstanding research field, driven by the need to enhance safety and efficiency in industrial settings. To address this need, we introduce an autonomously navigating robotic system designed for comprehensive plant inspection. The system comprises a robotic platform equipped with a diverse array of sensors that detect various process and infrastructure parameters. These sensors cover optical (LiDAR, stereo, UV/IR/RGB cameras), olfactory (electronic nose), and acoustic (microphone array) modalities, enabling the identification of factors such as methane leaks, flow rates, and infrastructural anomalies. The proposed system underwent individual evaluation at a wastewater treatment site within a chemical plant, which provided a practical and challenging test environment. The evaluation covered object detection, 3D localization, and path planning. In addition, specific evaluations were conducted for optical methane leak detection and localization, as well as acoustic assessments focusing on pump equipment and gas leak localization.

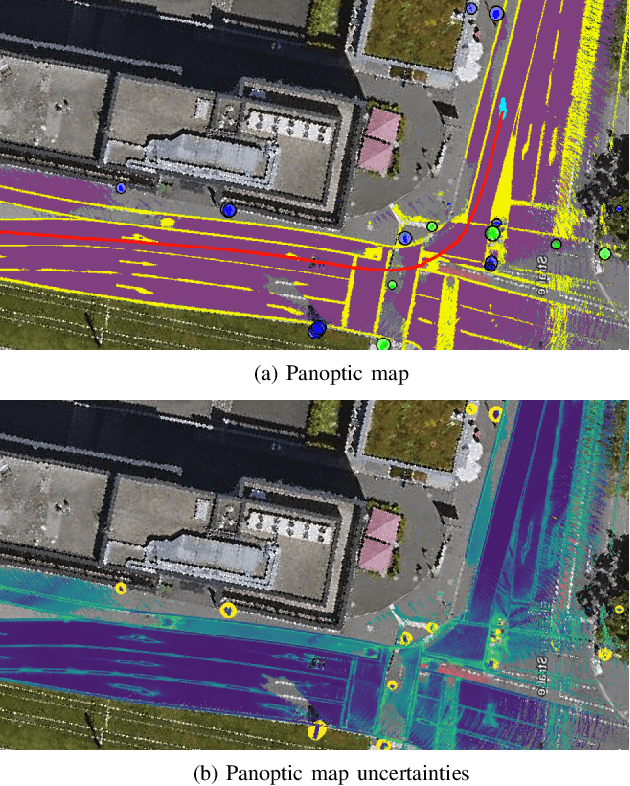

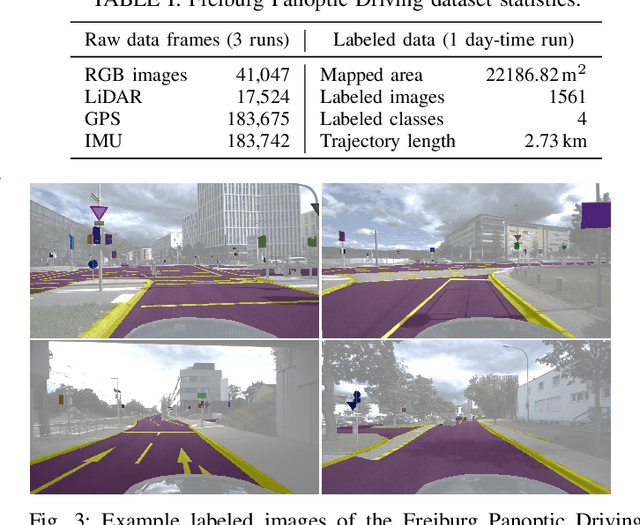

uPLAM: Robust Panoptic Localization and Mapping Leveraging Perception Uncertainties

Feb 08, 2024

Abstract: The availability of a reliable map and a robust localization system is critical for the operation of an autonomous vehicle. In a modern system, both mapping and localization solutions generally employ convolutional neural network (CNN)-based perception. Hence, any algorithm should consider potential errors in perception for safe and robust functioning. In this work, we present uncertainty-aware panoptic Localization and Mapping (uPLAM), which employs perception uncertainty as a bridge to fuse the perception information with classical localization and mapping approaches. We introduce an uncertainty-based map aggregation technique to create a long-term panoptic bird's eye view map and provide an associated mapping uncertainty. Our map consists of surface semantics and landmarks with unique IDs. Moreover, we present panoptic uncertainty-aware particle filter-based localization. To this end, we propose an uncertainty-based particle importance weight calculation for the adaptive incorporation of perception information into localization. We also present a new dataset for evaluating long-term panoptic mapping and map-based localization. Extensive evaluations show that our proposed uncertainty incorporation leads to better mapping with reliable uncertainty estimates and accurate localization. We make our dataset and code available at: http://uplam.cs.uni-freiburg.de
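As a rough illustration of what an uncertainty-based particle importance weight could look like, the sketch below tempers each observation's likelihood by how much the perception module trusts it. This is not the uPLAM formulation, which the abstract does not spell out. The function name `uncertainty_weighted_importance`, the `1 - uncertainty` trust factor, and the input layout are all assumptions made for this example.

```python
import numpy as np


def uncertainty_weighted_importance(likelihoods, uncertainties, eps=1e-12):
    """Normalized particle weights with uncertainty-tempered likelihoods.

    Hypothetical sketch, not the method from the paper.
    likelihoods:   array (n_particles, n_obs), p(z_j | x_i) for particle i.
    uncertainties: array (n_obs,), perception uncertainty in [0, 1] per observation.
    """
    likelihoods = np.asarray(likelihoods, dtype=float)
    # Assumed trust factor: a fully uncertain observation (u = 1) is ignored.
    trust = 1.0 - np.asarray(uncertainties, dtype=float)
    # Work in log space: w_i is proportional to prod_j p_ij ** trust_j.
    log_w = (trust * np.log(likelihoods + eps)).sum(axis=1)
    log_w -= log_w.max()  # subtract the maximum for numerical stability
    weights = np.exp(log_w)
    return weights / weights.sum()


if __name__ == "__main__":
    lik = [[0.9, 0.1], [0.1, 0.9]]
    # The second observation is fully uncertain, so only the first one counts.
    print(uncertainty_weighted_importance(lik, [0.0, 1.0]))
```

With both observations fully trusted, the two particles in the usage example receive equal weight. Once the second observation is marked fully uncertain, the weights follow the first observation alone.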

A Smart Robotic System for Industrial Plant Supervision

Sep 01, 2023

Abstract: In today's chemical plants, human field operators perform frequent integrity checks to guarantee high safety standards, and thus are possibly the first to encounter dangerous operating conditions. To alleviate their task, we present a system consisting of an autonomously navigating robot integrated with various sensors and intelligent data processing. It is able to detect methane leaks and estimate their flow rates, detect more general gas anomalies, recognize oil films, localize sound sources and detect failure cases, map the environment in 3D, and navigate autonomously while recognizing and avoiding dynamic obstacles. We evaluate our system at a wastewater facility in full working conditions. Our results demonstrate that the system is able to robustly navigate the plant and provide useful information about critical operating conditions.

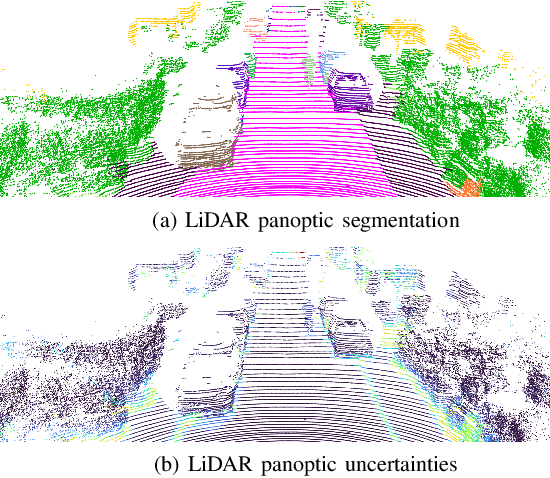

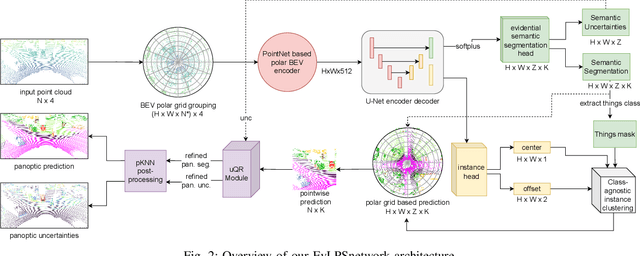

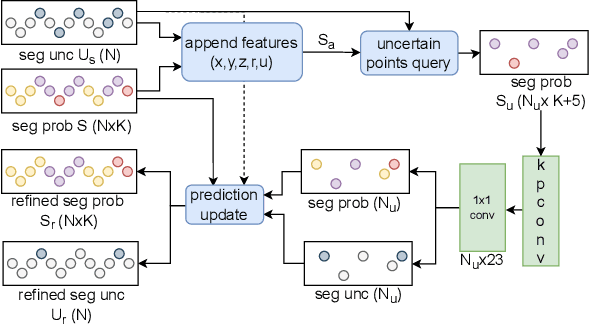

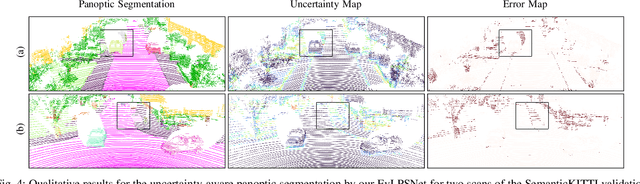

Uncertainty-aware LiDAR Panoptic Segmentation

Oct 10, 2022

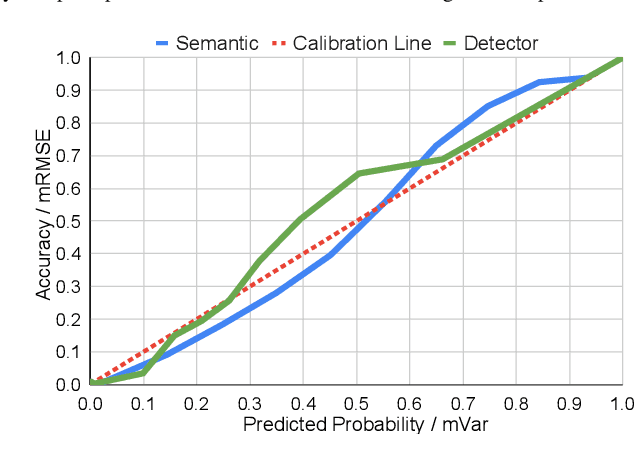

Abstract: Modern autonomous systems often rely on LiDAR scanners, in particular for autonomous driving scenarios. In this context, reliable scene understanding is indispensable. Current learning-based methods typically try to achieve maximum performance for this task, while neglecting a proper estimation of the associated uncertainties. In this work, we introduce a novel approach for solving the task of uncertainty-aware panoptic segmentation using LiDAR point clouds. Our proposed EvLPSNet network is the first to solve this task efficiently in a sampling-free manner. It aims to predict per-point semantic and instance segmentations, together with per-point uncertainty estimates. Moreover, it incorporates methods for improving the performance by employing the predicted uncertainties. We provide several strong baselines combining state-of-the-art panoptic segmentation networks with sampling-free uncertainty estimation techniques. Extensive evaluations show that we achieve the best performance on uncertainty-aware panoptic segmentation quality and calibration compared to these baselines. We make our code available at: https://github.com/kshitij3112/EvLPSNet
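One widely used sampling-free way to obtain per-point uncertainties is evidential deep learning, where a single forward pass yields the parameters of a Dirichlet distribution over classes. The sketch below shows that generic formulation. Whether EvLPSNet uses exactly these equations is an assumption, and the function name `evidential_outputs` and the softplus evidence activation are choices made for this example.

```python
import numpy as np


def evidential_outputs(logits):
    """Class probabilities and uncertainty from a Dirichlet evidence head.

    Generic evidential formulation, assumed here for illustration.
    logits: array (..., n_classes) of raw network outputs per point.
    Returns (probs, uncertainty); uncertainty lies in (0, 1].
    """
    logits = np.asarray(logits, dtype=float)
    evidence = np.logaddexp(0.0, logits)  # softplus keeps evidence non-negative
    alpha = evidence + 1.0                # Dirichlet concentration parameters
    strength = alpha.sum(axis=-1, keepdims=True)
    probs = alpha / strength              # expected class probabilities
    num_classes = logits.shape[-1]
    uncertainty = num_classes / strength[..., 0]  # near 1 when evidence is near 0
    return probs, uncertainty


if __name__ == "__main__":
    probs, unc = evidential_outputs([[20.0, -50.0, -50.0], [-50.0, -50.0, -50.0]])
    print(probs)
    print(unc)
```

No sampling or ensembling is involved: a point with little evidence for any class gets an uncertainty close to 1, while strong evidence for one class drives it toward 0.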

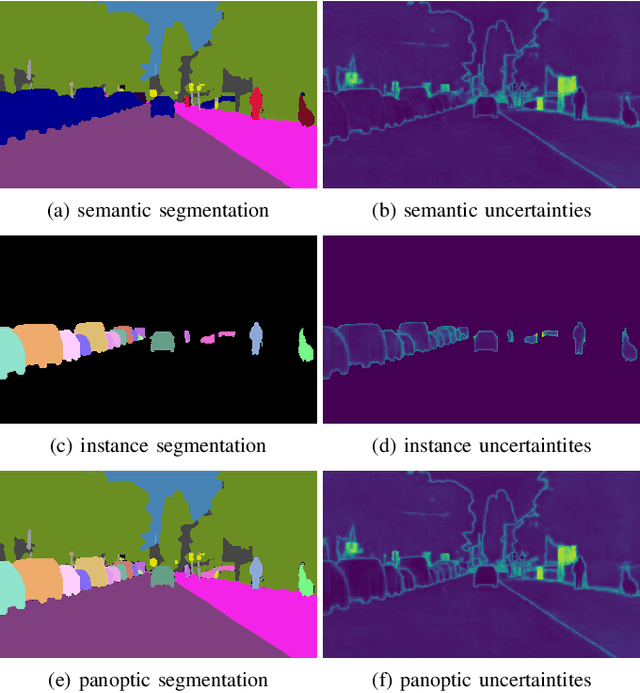

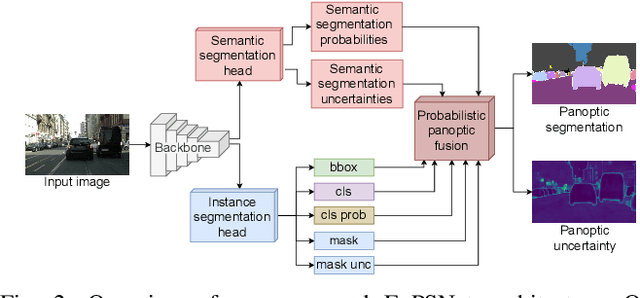

Uncertainty-aware Panoptic Segmentation

Jul 06, 2022

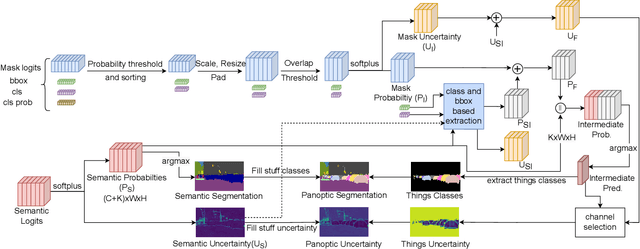

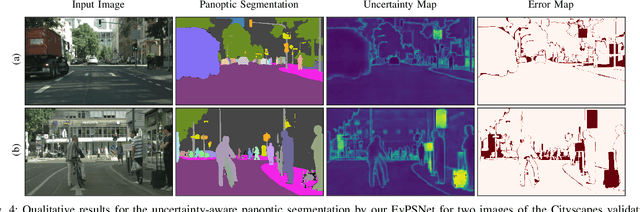

Abstract: Reliable scene understanding is indispensable for modern autonomous systems. Current learning-based methods typically try to maximize their performance based on segmentation metrics that only consider the quality of the segmentation. However, for the safe operation of a system in the real world it is crucial to consider the uncertainty in the prediction as well. In this work, we introduce the novel task of uncertainty-aware panoptic segmentation, which aims to predict per-pixel semantic and instance segmentations, together with per-pixel uncertainty estimates. We define two novel metrics to facilitate its quantitative analysis, the uncertainty-aware Panoptic Quality (uPQ) and the panoptic Expected Calibration Error (pECE). We further propose the novel top-down Evidential Panoptic Segmentation Network (EvPSNet) to solve this task. Our architecture employs a simple yet effective probabilistic fusion module that leverages the predicted uncertainties. Additionally, we propose a new Lovász evidential loss function to optimize the IoU for the segmentation utilizing the probabilities provided by deep evidential learning. Furthermore, we provide several strong baselines combining state-of-the-art panoptic segmentation networks with sampling-free uncertainty estimation techniques. Extensive evaluations show that our EvPSNet achieves the new state-of-the-art for the standard Panoptic Quality (PQ), as well as for our uncertainty-aware panoptic metrics.
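For readers unfamiliar with calibration metrics, the sketch below computes the standard binned Expected Calibration Error (ECE) on which the panoptic variant builds. It is the generic classification metric, not the pECE defined in the paper, whose panoptic-specific details the abstract does not give. The function name and the choice of ten equal-width bins are assumptions for this example.

```python
import numpy as np


def expected_calibration_error(confidences, correct, n_bins=10):
    """Standard binned ECE (not the paper's pECE).

    confidences: predicted confidence per sample, in [0, 1].
    correct:     1 if the prediction was right, else 0.
    """
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    # Right-inclusive binning on the inner edges gives indices 0..n_bins-1.
    bin_ids = np.digitize(confidences, edges[1:-1], right=True)
    ece = 0.0
    for b in range(n_bins):
        mask = bin_ids == b
        if not mask.any():
            continue
        # Gap between empirical accuracy and mean confidence in this bin,
        # weighted by the fraction of samples that fall into the bin.
        gap = abs(correct[mask].mean() - confidences[mask].mean())
        ece += mask.mean() * gap
    return float(ece)


if __name__ == "__main__":
    print(expected_calibration_error([0.6, 0.6, 0.8, 0.8], [1, 0, 1, 1]))
```

A perfectly calibrated model has an ECE of zero: within every confidence bin, the fraction of correct predictions equals the average confidence.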

Robust Monocular Localization in Sparse HD Maps Leveraging Multi-Task Uncertainty Estimation

Oct 20, 2021

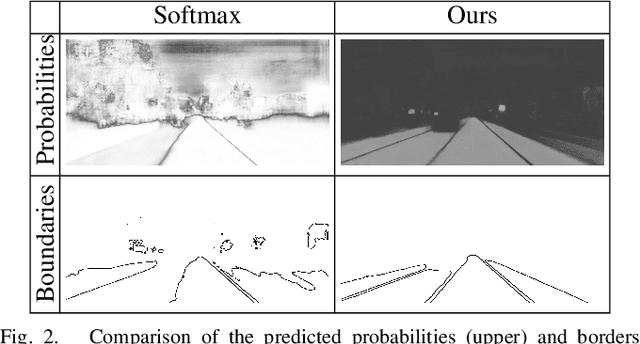

Abstract: Robust localization in dense urban scenarios using a low-cost sensor setup and sparse HD maps is highly relevant for the current advances in autonomous driving, but remains a challenging topic in research. We present a novel monocular localization approach based on a sliding-window pose graph that leverages predicted uncertainties for increased precision and robustness against challenging scenarios and per-frame failures. To this end, we propose an efficient multi-task uncertainty-aware perception module, which covers semantic segmentation as well as bounding box detection, to enable the localization of vehicles in sparse maps containing only lane borders and traffic lights. Further, we design differentiable cost maps that are directly generated from the estimated uncertainties. This opens up the possibility to minimize the reprojection loss of amorphous map elements in an association-free and uncertainty-aware manner. Extensive evaluation on the Lyft 5 dataset shows that, despite the sparsity of the map, our approach enables robust and accurate 6D localization in challenging urban scenarios.

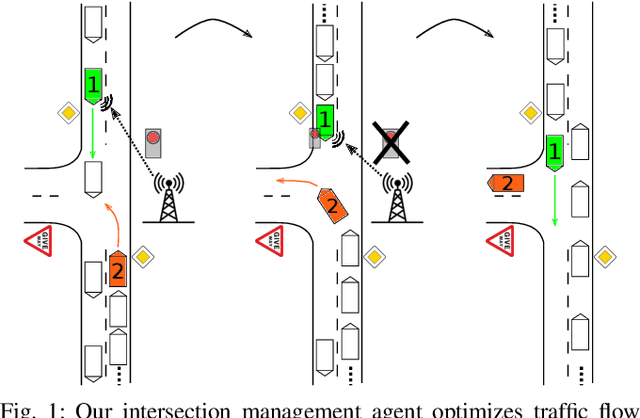

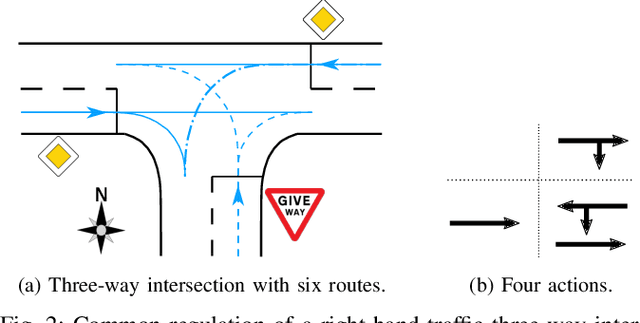

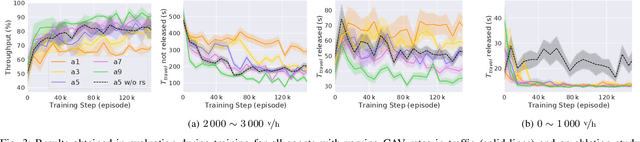

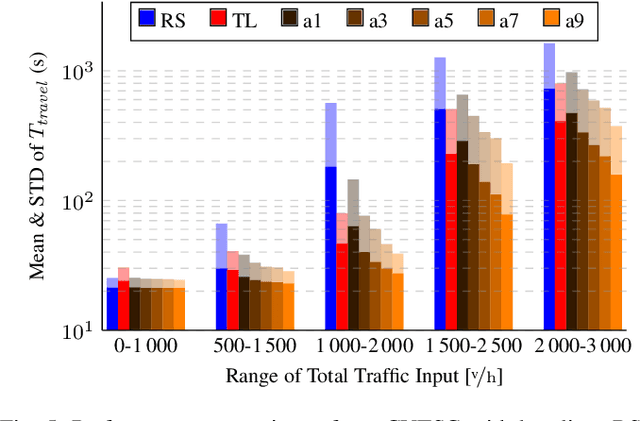

Courteous Behavior of Automated Vehicles at Unsignalized Intersections via Reinforcement Learning

Jun 11, 2021

Abstract: The transition from today's mostly human-driven traffic to a purely automated one will be a gradual evolution, with the effect that we will likely experience mixed traffic in the near future. Connected and automated vehicles can benefit human-driven ones and the whole traffic system in different ways, for example by improving collision avoidance and reducing traffic waves. Many studies have been carried out to improve intersection management, a significant bottleneck in traffic, with intelligent traffic signals or exclusively automated vehicles. However, the problem of how to improve mixed traffic at unsignalized intersections has received less attention. In this paper, we propose a novel approach to optimizing traffic flow at intersections in mixed traffic situations using deep reinforcement learning. Our reinforcement learning agent learns a policy for a centralized controller to let connected autonomous vehicles at unsignalized intersections give up their right of way and yield to other vehicles to optimize traffic flow. We implemented our approach and tested it in the traffic simulator SUMO based on simulated and real traffic data. The experimental evaluation demonstrates that our method significantly improves traffic flow through unsignalized intersections in mixed traffic settings and also provides better performance on a wide range of traffic situations compared to the state-of-the-art traffic signal controller for the corresponding signalized intersection.

EfficientLPS: Efficient LiDAR Panoptic Segmentation

Mar 02, 2021

Abstract: Panoptic segmentation of point clouds is a crucial task that enables autonomous vehicles to comprehend their vicinity using their highly accurate and reliable LiDAR sensors. Existing top-down approaches tackle this problem by either combining independent task-specific networks or translating methods from the image domain, ignoring the intricacies of LiDAR data and thus often resulting in sub-optimal performance. In this paper, we present the novel top-down Efficient LiDAR Panoptic Segmentation (EfficientLPS) architecture that addresses multiple challenges in segmenting LiDAR point clouds, including distance-dependent sparsity, severe occlusions, large scale-variations, and re-projection errors. EfficientLPS comprises a novel shared backbone that encodes with strengthened geometric transformation modeling capacity and aggregates semantically rich range-aware multi-scale features. It incorporates new scale-invariant semantic and instance segmentation heads along with the panoptic fusion module, which is supervised by our proposed panoptic periphery loss function. Additionally, we formulate a regularized pseudo labeling framework to further improve the performance of EfficientLPS by training on unlabelled data. We benchmark our proposed model on two large-scale LiDAR datasets: nuScenes, for which we also provide ground truth annotations, and SemanticKITTI. Notably, EfficientLPS sets the new state-of-the-art on both these datasets.

Long-Term Urban Vehicle Localization Using Pole Landmarks Extracted from 3-D Lidar Scans

Oct 23, 2019

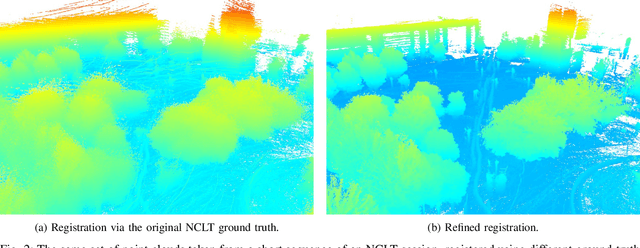

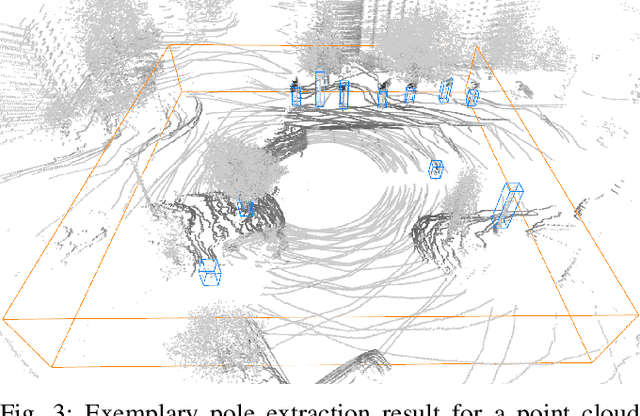

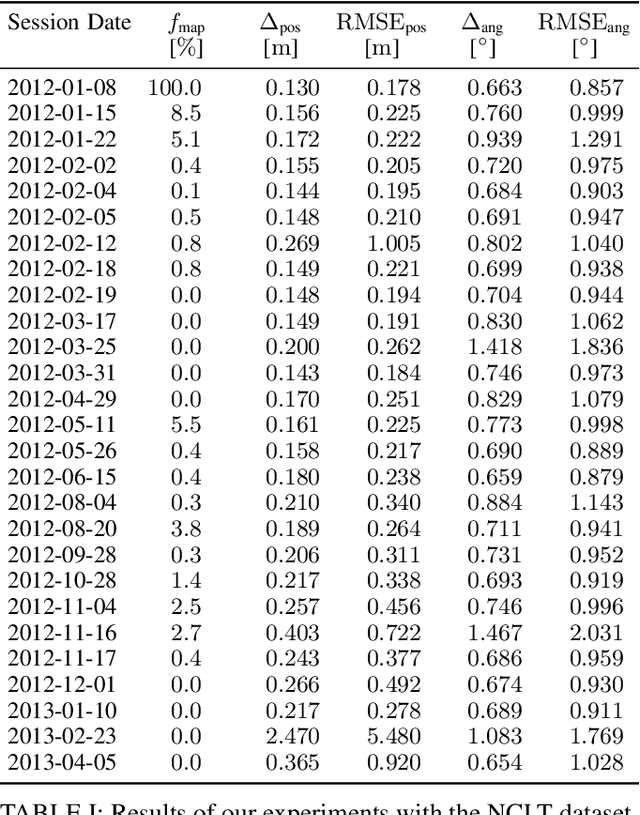

Abstract: Due to their ubiquity and long-term stability, pole-like objects are well suited to serve as landmarks for vehicle localization in urban environments. In this work, we present a complete mapping and long-term localization system based on pole landmarks extracted from 3-D lidar data. Our approach features a novel pole detector, a mapping module, and an online localization module, each of which is described in detail, and for which we provide an open-source implementation at www.github.com/acschaefer/polex. In extensive experiments, we demonstrate that our method improves on the state of the art with respect to long-term reliability and accuracy: First, we prove reliability by tasking the system with localizing a mobile robot over the course of 15 months in an urban area based on an initial map, confronting it with constantly varying routes, differing weather conditions, seasonal changes, and construction sites. Second, we show that the proposed approach clearly outperforms a recently published method in terms of accuracy.

* 9 pages

A Maximum Likelihood Approach to Extract Finite Planes from 3-D Laser Scans

Oct 23, 2019

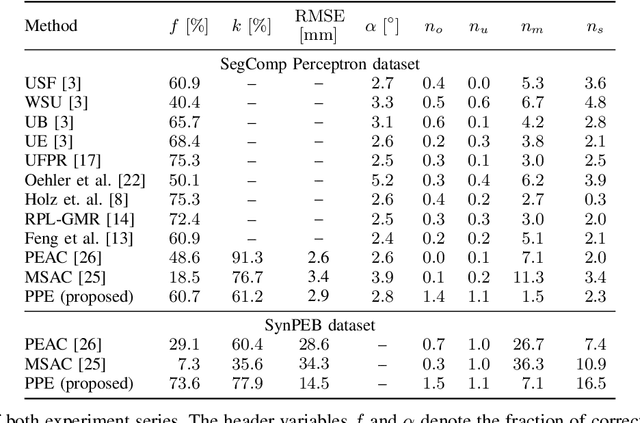

Abstract: Whether it is object detection, model reconstruction, laser odometry, or point cloud registration: plane extraction is a vital component of many robotic systems. In this paper, we propose a strictly probabilistic method to detect finite planes in organized 3-D laser range scans. An agglomerative hierarchical clustering technique, our algorithm builds planes from the bottom up, always extending a plane by the point that decreases the measurement likelihood of the scan the least. In contrast to most related methods, which rely on heuristics like orthogonal point-to-plane distance, we leverage the ray path information to compute the measurement likelihood. We not only evaluate our approach on the popular SegComp benchmark, but also provide a challenging synthetic dataset that overcomes SegComp's deficiencies. Both our implementation and the suggested dataset are available at www.github.com/acschaefer/ppe.
