Sajad Marvi

Learning Appearance and Motion Cues for Panoptic Tracking

Mar 12, 2025

Abstract:Panoptic tracking enables pixel-level scene interpretation of videos by integrating instance tracking into panoptic segmentation. This provides robots with a spatio-temporal understanding of the environment, an essential attribute for their operation in dynamic environments. In this paper, we propose a novel approach for panoptic tracking that simultaneously captures general semantic information and instance-specific appearance and motion features. Unlike existing methods that overlook dynamic scene attributes, our approach leverages both appearance and motion cues through dedicated network heads. These interconnected heads employ multi-scale deformable convolutions that reason about scene motion offsets with semantic context and motion-enhanced appearance features to learn tracking embeddings. Furthermore, we introduce a novel two-step fusion module that integrates the outputs from both heads by first matching instances from the current time step with propagated instances from previous time steps and then refining the associations using motion-enhanced appearance embeddings, improving robustness in challenging scenarios. Extensive evaluations of our proposed model on two benchmark datasets demonstrate that it achieves state-of-the-art performance in panoptic tracking accuracy, surpassing prior methods in maintaining object identities over time. To facilitate future research, we make the code available at http://panoptictracking.cs.uni-freiburg.de

Evidential Uncertainty Estimation for Multi-Modal Trajectory Prediction

Mar 07, 2025

Abstract:Accurate trajectory prediction is crucial for autonomous driving, yet uncertainty in agent behavior and perception noise make it inherently challenging. While multi-modal trajectory prediction models generate multiple plausible future paths with associated probabilities, effectively quantifying uncertainty remains an open problem. In this work, we propose a novel multi-modal trajectory prediction approach based on evidential deep learning that estimates both positional and mode probability uncertainty in real time. Our approach leverages a Normal Inverse Gamma distribution for positional uncertainty and a Dirichlet distribution for mode uncertainty. Unlike sampling-based methods, it infers both types of uncertainty in a single forward pass, significantly improving efficiency. Additionally, we experimented with uncertainty-driven importance sampling to improve training efficiency by prioritizing underrepresented high-uncertainty samples over redundant ones. We perform extensive evaluations of our method on the Argoverse 1 and Argoverse 2 datasets, demonstrating that it provides reliable uncertainty estimates while maintaining high trajectory prediction accuracy.
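The abstract does not spell out how uncertainties are read off the two distributions. As a minimal sketch, the following uses the closed forms commonly found in the evidential deep learning literature, which may differ from what the paper actually implements. The function names and the example inputs are hypothetical.

```python
def dirichlet_mode_uncertainty(evidence):
    """Turn non-negative per-mode evidence into mode probabilities and a
    scalar uncertainty, using the common convention alpha_k = evidence_k + 1.
    (Assumed formulation, not confirmed by the abstract.)"""
    if any(e < 0 for e in evidence):
        raise ValueError("evidence must be non-negative")
    alpha = [e + 1.0 for e in evidence]
    strength = sum(alpha)                      # Dirichlet strength S
    probs = [a / strength for a in alpha]      # expected mode probabilities
    uncertainty = len(alpha) / strength        # K / S, equals 1 with no evidence
    return probs, uncertainty


def nig_uncertainty(gamma, nu, alpha, beta):
    """Closed-form moments of a Normal Inverse Gamma distribution for one
    coordinate: predicted mean, aleatoric variance, epistemic variance.
    (Assumed parameterisation: gamma, nu > 0, alpha > 1, beta > 0.)"""
    if nu <= 0 or alpha <= 1 or beta <= 0:
        raise ValueError("requires nu > 0, alpha > 1, beta > 0")
    aleatoric = beta / (alpha - 1.0)           # E[sigma^2]
    epistemic = beta / (nu * (alpha - 1.0))    # Var[mu]
    return gamma, aleatoric, epistemic


if __name__ == "__main__":
    print(dirichlet_mode_uncertainty([8.0, 1.0, 0.0]))
    print(nig_uncertainty(1.0, 2.0, 3.0, 4.0))
```

Both quantities come from a single set of network outputs, which is what makes this family of methods sampling-free: no Monte Carlo dropout passes or ensembles are needed.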

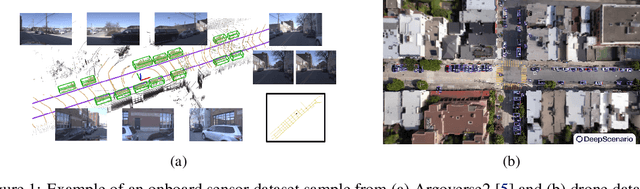

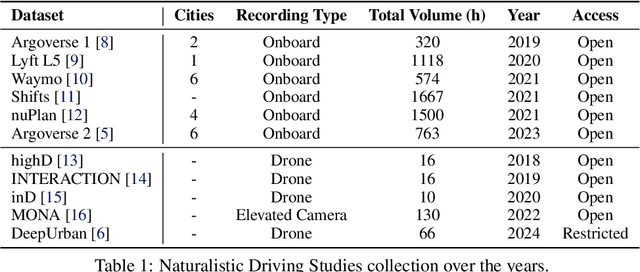

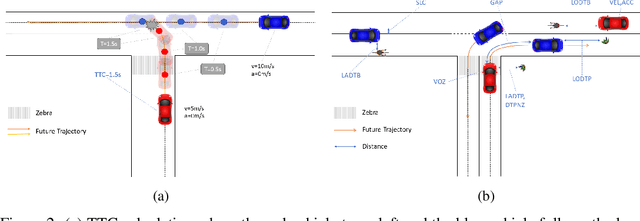

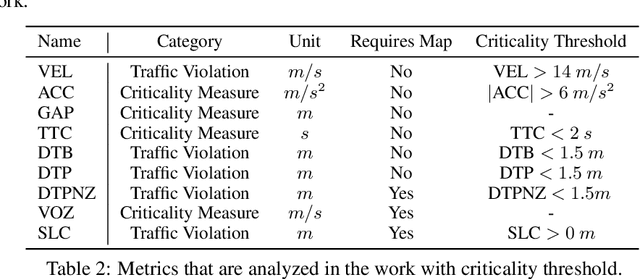

Traffic and Safety Rule Compliance of Humans in Diverse Driving Situations

Nov 04, 2024

Abstract:The increasing interest in autonomous driving systems has highlighted the need for an in-depth analysis of human driving behavior in diverse scenarios. Analyzing human data is crucial for developing autonomous systems that replicate safe driving practices and ensure seamless integration into human-dominated environments. This paper presents a comparative evaluation of human compliance with traffic and safety rules across multiple trajectory prediction datasets, including Argoverse 2, nuPlan, Lyft, and DeepUrban. By defining and leveraging existing safety and behavior-related metrics, such as time to collision, adherence to speed limits, and interactions with other traffic participants, we aim to provide a comprehensive understanding of each dataset's strengths and limitations. Our analysis focuses on the distribution of data samples, identifying noise, outliers, and undesirable behaviors exhibited by human drivers in both the training and validation sets. The results underscore the need for applying robust filtering techniques to certain datasets due to high levels of noise and the presence of such undesirable behaviors.
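To make two of the named metrics concrete, here is a minimal sketch of a one-dimensional time to collision and a speed-limit violation ratio. The abstract does not give the paper's exact definitions, so the formulas, function names, and the `tolerance_mps` parameter are illustrative assumptions.

```python
import math


def time_to_collision(gap_m, follower_speed_mps, leader_speed_mps):
    """Time until a follower reaches a leader in the same lane, assuming
    both keep constant speed. Returns math.inf when the gap is not closing."""
    if gap_m < 0:
        raise ValueError("gap must be non-negative")
    closing_speed = follower_speed_mps - leader_speed_mps
    if closing_speed <= 0:
        return math.inf
    return gap_m / closing_speed


def speed_limit_violation_ratio(speeds_mps, limit_mps, tolerance_mps=0.0):
    """Fraction of trajectory samples whose speed exceeds the limit plus a
    tolerance. Returns 0.0 for an empty trajectory."""
    if not speeds_mps:
        return 0.0
    over = sum(1 for v in speeds_mps if v > limit_mps + tolerance_mps)
    return over / len(speeds_mps)


if __name__ == "__main__":
    print(time_to_collision(20.0, 15.0, 10.0))
    print(speed_limit_violation_ratio([10.0, 14.0, 16.0, 20.0], 15.0))
```

Computed per sample across a dataset, statistics like these could be thresholded to flag noisy or unsafe trajectories for filtering, in the spirit of the analysis the abstract describes.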

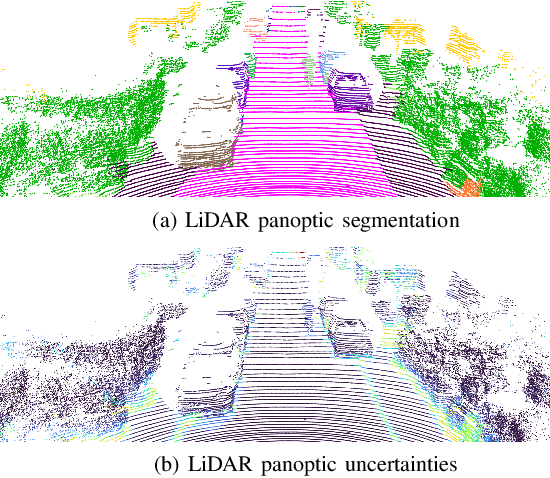

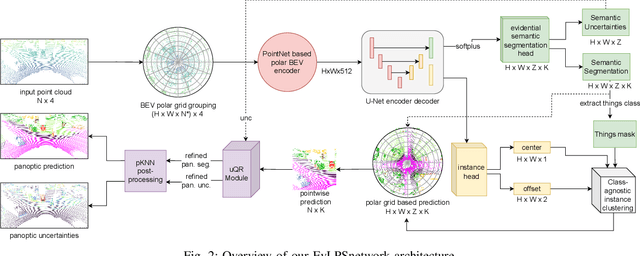

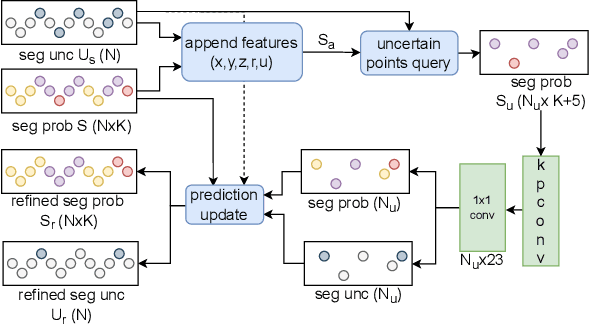

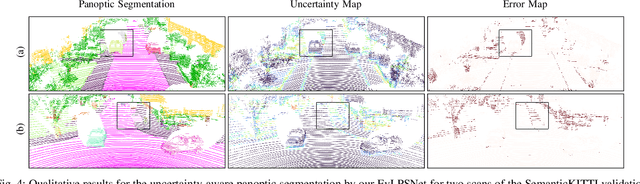

Uncertainty-aware LiDAR Panoptic Segmentation

Oct 10, 2022

Abstract:Modern autonomous systems often rely on LiDAR scanners, in particular for autonomous driving scenarios. In this context, reliable scene understanding is indispensable. Current learning-based methods typically try to achieve maximum performance for this task, while neglecting a proper estimation of the associated uncertainties. In this work, we introduce a novel approach for solving the task of uncertainty-aware panoptic segmentation using LiDAR point clouds. Our proposed EvLPSNet network is the first to solve this task efficiently in a sampling-free manner. It aims to predict per-point semantic and instance segmentations, together with per-point uncertainty estimates. Moreover, it incorporates methods for improving the performance by employing the predicted uncertainties. We provide several strong baselines combining state-of-the-art panoptic segmentation networks with sampling-free uncertainty estimation techniques. Extensive evaluations show that we achieve the best performance on uncertainty-aware panoptic segmentation quality and calibration compared to these baselines. We make our code available at: https://github.com/kshitij3112/EvLPSNet

Uncertainty-aware Panoptic Segmentation

Jul 06, 2022

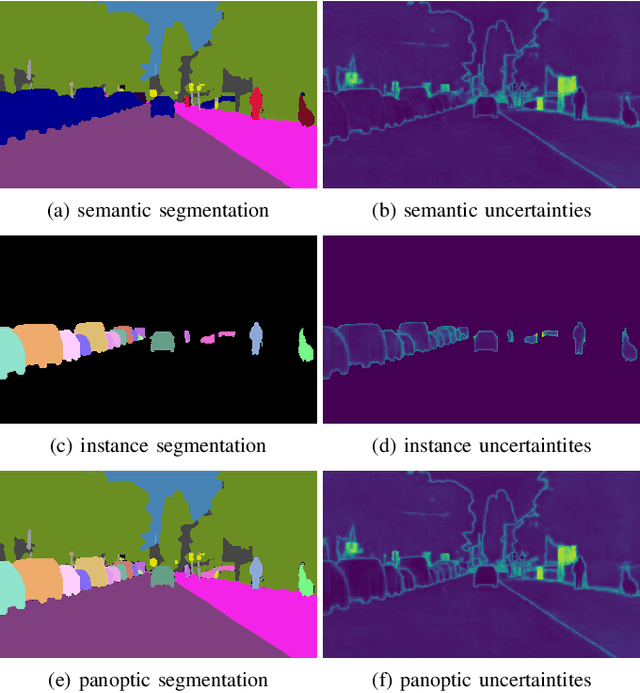

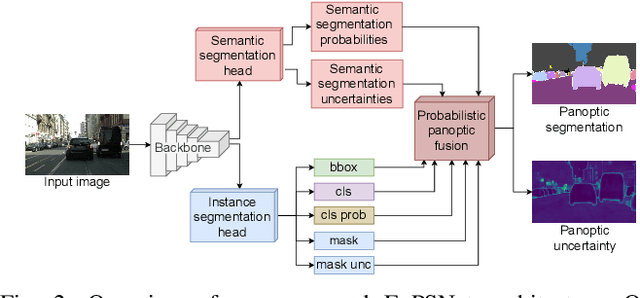

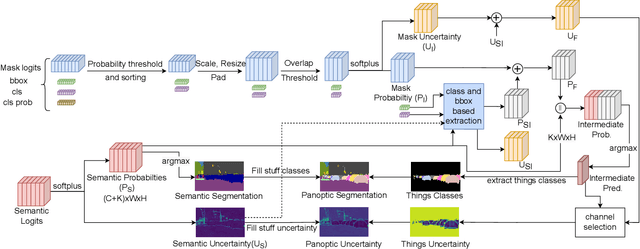

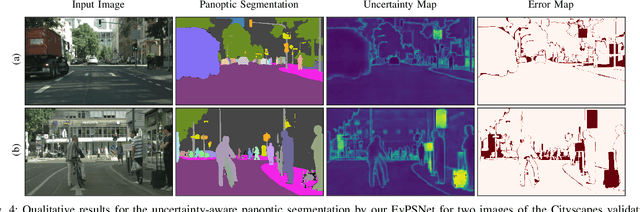

Abstract:Reliable scene understanding is indispensable for modern autonomous systems. Current learning-based methods typically try to maximize their performance based on segmentation metrics that only consider the quality of the segmentation. However, for the safe operation of a system in the real world it is crucial to consider the uncertainty in the prediction as well. In this work, we introduce the novel task of uncertainty-aware panoptic segmentation, which aims to predict per-pixel semantic and instance segmentations, together with per-pixel uncertainty estimates. We define two novel metrics to facilitate its quantitative analysis, the uncertainty-aware Panoptic Quality (uPQ) and the panoptic Expected Calibration Error (pECE). We further propose the novel top-down Evidential Panoptic Segmentation Network (EvPSNet) to solve this task. Our architecture employs a simple yet effective probabilistic fusion module that leverages the predicted uncertainties. Additionally, we propose a new Lovász evidential loss function to optimize the IoU for the segmentation utilizing the probabilities provided by deep evidential learning. Furthermore, we provide several strong baselines combining state-of-the-art panoptic segmentation networks with sampling-free uncertainty estimation techniques. Extensive evaluations show that our EvPSNet achieves the new state-of-the-art for the standard Panoptic Quality (PQ), as well as for our uncertainty-aware panoptic metrics.
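The abstract does not define uPQ or pECE, so the sketch below shows only the standard metrics they build on: Panoptic Quality and the Expected Calibration Error with equal-width confidence bins. The function names, the binning convention, and the example inputs are assumptions for illustration, not the paper's definitions.

```python
def panoptic_quality(matched_ious, num_false_positives, num_false_negatives):
    """Standard PQ for one class: sum of IoUs over matched segment pairs
    divided by |TP| + 0.5 * |FP| + 0.5 * |FN|. A pair counts as matched
    only when its IoU exceeds 0.5."""
    if any(iou <= 0.5 or iou > 1.0 for iou in matched_ious):
        raise ValueError("matched IoUs must lie in (0.5, 1.0]")
    denom = (len(matched_ious)
             + 0.5 * num_false_positives
             + 0.5 * num_false_negatives)
    return sum(matched_ious) / denom if denom > 0 else 0.0


def expected_calibration_error(confidences, correct, num_bins=10):
    """Standard ECE: average |accuracy - confidence| per bin, weighted by
    the share of samples in the bin. Bins are [k/n, (k+1)/n), with a
    confidence of exactly 1.0 placed in the last bin."""
    if len(confidences) != len(correct):
        raise ValueError("inputs must have the same length")
    total = len(confidences)
    if total == 0:
        return 0.0
    conf_sum = [0.0] * num_bins
    hit_sum = [0.0] * num_bins
    count = [0] * num_bins
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * num_bins), num_bins - 1)
        conf_sum[idx] += conf
        hit_sum[idx] += 1.0 if ok else 0.0
        count[idx] += 1
    return sum(
        (count[b] / total) * abs(hit_sum[b] / count[b] - conf_sum[b] / count[b])
        for b in range(num_bins) if count[b] > 0
    )


if __name__ == "__main__":
    print(panoptic_quality([0.8, 0.6], 1, 1))
    print(expected_calibration_error([0.95, 0.95, 0.65, 0.65],
                                     [True, True, True, False]))
```

PQ rewards segmentation quality alone, while ECE measures how well predicted confidences match observed accuracy; the abstract's point is that a system should be evaluated on both.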
