Kshitij Sirohi

Perception Matters: Enhancing Embodied AI with Uncertainty-Aware Semantic Segmentation

Aug 05, 2024

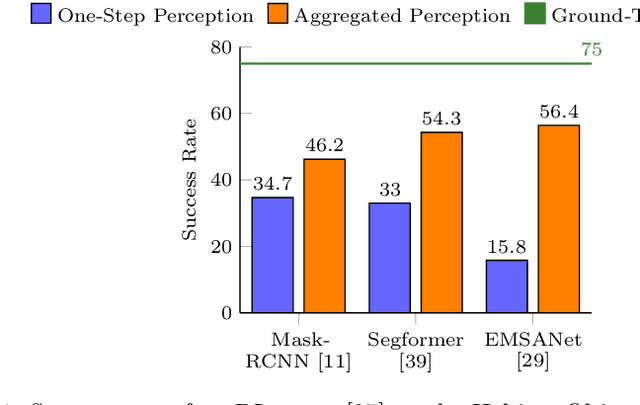

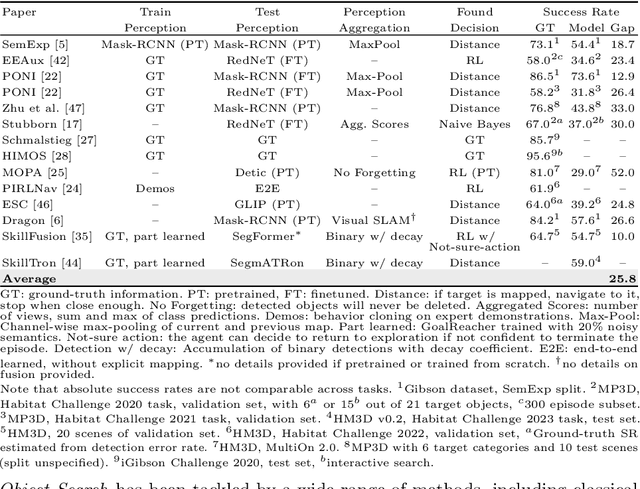

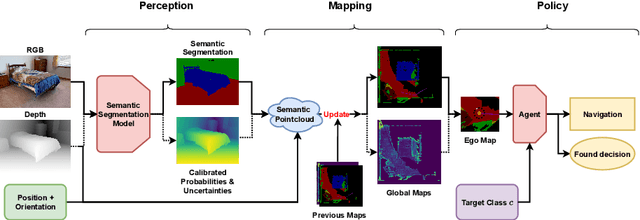

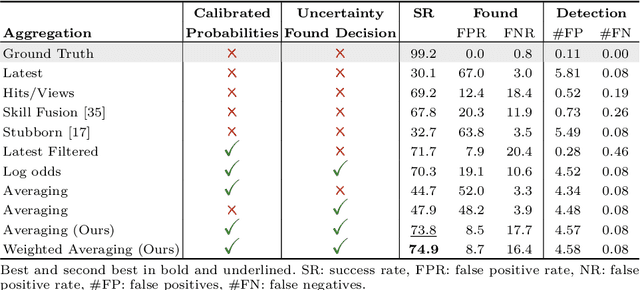

Abstract: Embodied AI has made significant progress acting in unexplored environments. However, tasks such as object search have largely focused on efficient policy learning. In this work, we identify several gaps in current search methods: they largely focus on dated perception models, neglect temporal aggregation, and transfer from ground truth directly to noisy perception at test time, without accounting for the resulting overconfidence in the perceived state. We address the identified problems through calibrated perception probabilities and uncertainty across aggregation and found decisions, thereby adapting the models for sequential tasks. The resulting methods can be directly integrated with pretrained models across a wide family of existing search approaches at no additional training cost. We perform extensive evaluations of aggregation methods across both different semantic perception models and policies, confirming the importance of calibrated uncertainties in both the aggregation and found decisions. We make the code and trained models available at http://semantic-search.cs.uni-freiburg.de.

uPLAM: Robust Panoptic Localization and Mapping Leveraging Perception Uncertainties

Feb 08, 2024

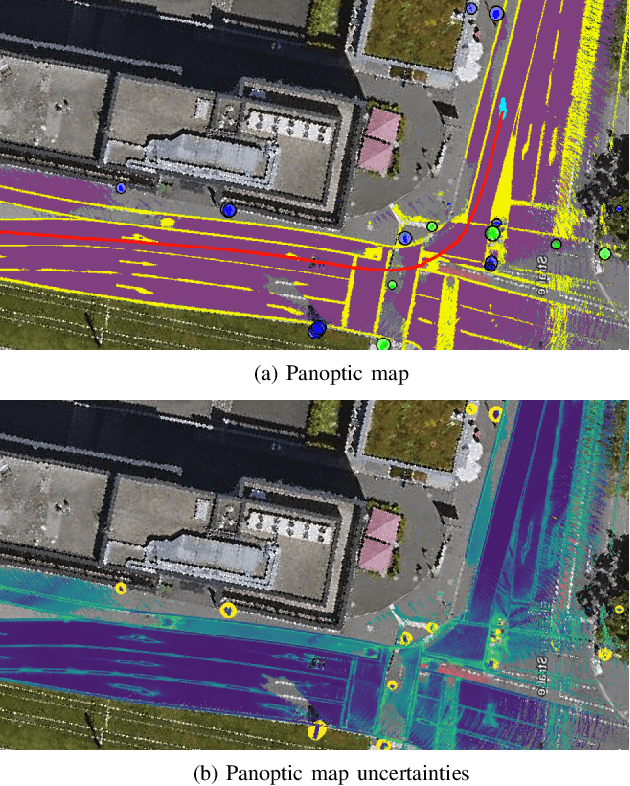

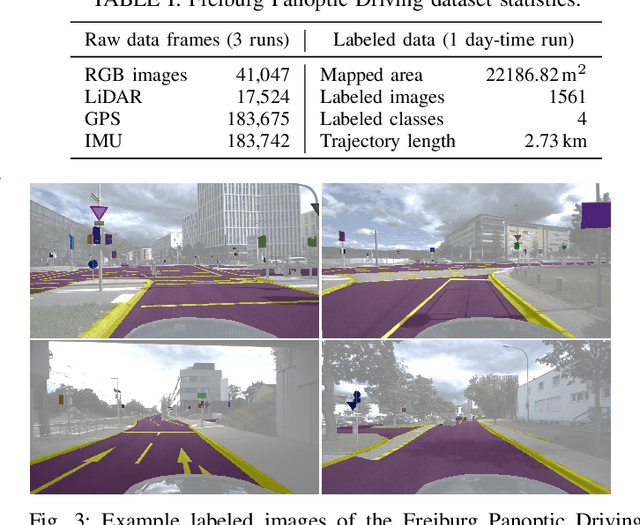

Abstract: The availability of a reliable map and a robust localization system is critical for the operation of an autonomous vehicle. In a modern system, both mapping and localization solutions generally employ convolutional neural network (CNN)-based perception. Hence, any algorithm should consider potential errors in perception for safe and robust functioning. In this work, we present uncertainty-aware panoptic Localization and Mapping (uPLAM), which employs perception uncertainty as a bridge to fuse the perception information with classical localization and mapping approaches. We introduce an uncertainty-based map aggregation technique to create a long-term panoptic bird's eye view map and provide an associated mapping uncertainty. Our map consists of surface semantics and landmarks with unique IDs. Moreover, we present panoptic uncertainty-aware particle filter-based localization. To this end, we propose an uncertainty-based particle importance weight calculation for the adaptive incorporation of perception information into localization. We also present a new dataset for evaluating long-term panoptic mapping and map-based localization. Extensive evaluations showcase that our proposed uncertainty incorporation leads to better mapping with reliable uncertainty estimates and accurate localization. We make our dataset and code available at: http://uplam.cs.uni-freiburg.de

EvCenterNet: Uncertainty Estimation for Object Detection using Evidential Learning

Mar 06, 2023

Abstract: Uncertainty estimation is crucial in safety-critical settings such as automated driving as it provides valuable information for several downstream tasks including high-level decision-making and path planning. In this work, we propose EvCenterNet, a novel uncertainty-aware 2D object detection framework utilizing evidential learning to directly estimate both classification and regression uncertainties. To employ evidential learning for object detection, we devise a combination of evidential and focal loss functions for the sparse heatmap inputs. We introduce class-balanced weighting for regression and heatmap prediction to tackle the class imbalance encountered by evidential learning. Moreover, we propose a learning scheme to actively utilize the predicted heatmap uncertainties to improve the detection performance by focusing on the most uncertain points. We train our model on the KITTI dataset and evaluate it on challenging out-of-distribution datasets including BDD100K and nuImages. Our experiments demonstrate that our approach improves the precision and minimizes the execution time loss in relation to the base model.
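For readers unfamiliar with evidential learning, the following is a minimal sketch of how a classification uncertainty is commonly derived from Dirichlet evidence in the general evidential deep learning formulation. It is not taken from EvCenterNet: the function name `evidential_outputs`, the softplus activation, and the example logits are assumptions made for illustration only.

```python
import math


def softplus(z):
    # Numerically stable softplus; keeps the evidence non-negative.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def evidential_outputs(logits):
    """Map raw per-class outputs to expected probabilities and an uncertainty.

    Hypothetical helper: evidence e_k >= 0, Dirichlet parameters
    alpha_k = e_k + 1, strength S = sum(alpha), expected probability
    alpha_k / S, and uncertainty mass u = K / S.
    """
    evidence = [softplus(z) for z in logits]
    alpha = [e + 1.0 for e in evidence]
    strength = sum(alpha)
    probs = [a / strength for a in alpha]
    uncertainty = len(alpha) / strength
    return probs, uncertainty


# Strong evidence for one class versus almost no evidence at all.
probs_conf, u_conf = evidential_outputs([10.0, -10.0, -10.0])
probs_vague, u_vague = evidential_outputs([-50.0, -50.0, -50.0])
```

With little evidence for any class, the uncertainty approaches 1 and the expected probabilities approach uniform. This single forward pass is what makes such estimates sampling-free.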

Uncertainty-aware LiDAR Panoptic Segmentation

Oct 10, 2022

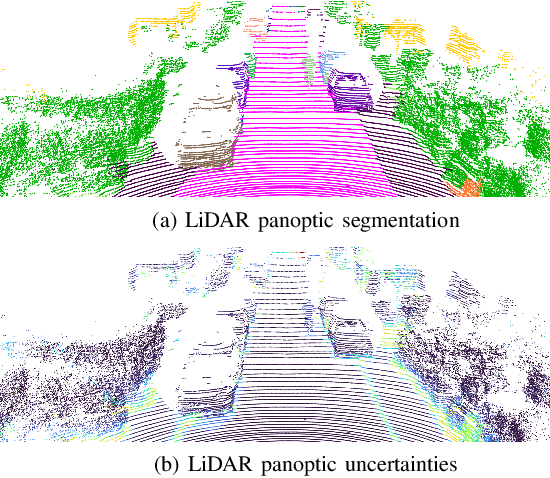

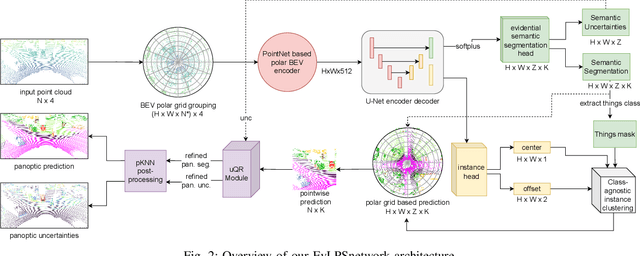

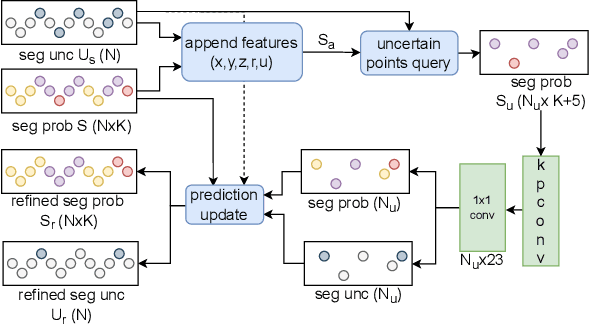

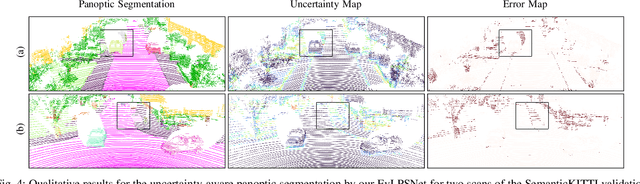

Abstract: Modern autonomous systems often rely on LiDAR scanners, in particular for autonomous driving scenarios. In this context, reliable scene understanding is indispensable. Current learning-based methods typically try to achieve maximum performance for this task, while neglecting a proper estimation of the associated uncertainties. In this work, we introduce a novel approach for solving the task of uncertainty-aware panoptic segmentation using LiDAR point clouds. Our proposed EvLPSNet network is the first to solve this task efficiently in a sampling-free manner. It aims to predict per-point semantic and instance segmentations, together with per-point uncertainty estimates. Moreover, it incorporates methods for improving the performance by employing the predicted uncertainties. We provide several strong baselines combining state-of-the-art panoptic segmentation networks with sampling-free uncertainty estimation techniques. Extensive evaluations show that we achieve the best performance on uncertainty-aware panoptic segmentation quality and calibration compared to these baselines. We make our code available at: https://github.com/kshitij3112/EvLPSNet

Uncertainty-aware Panoptic Segmentation

Jul 06, 2022

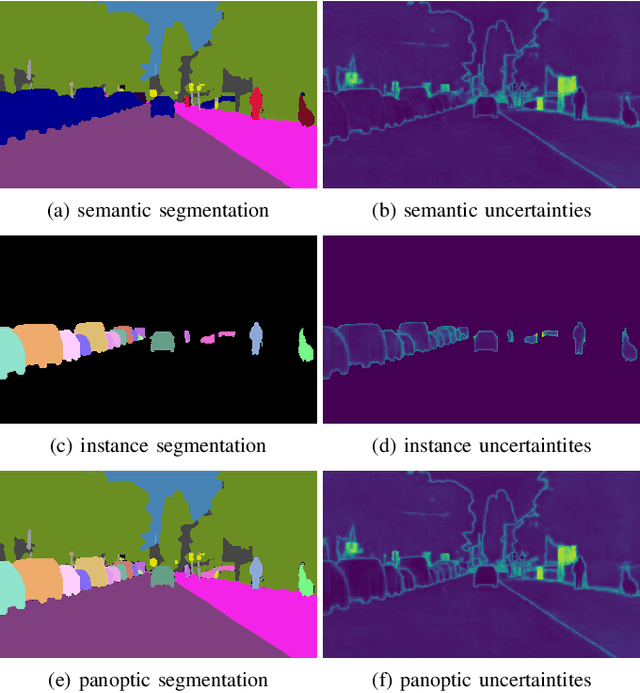

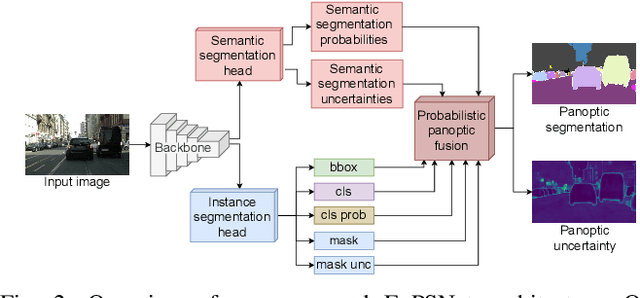

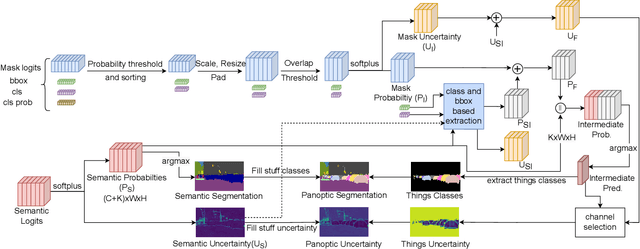

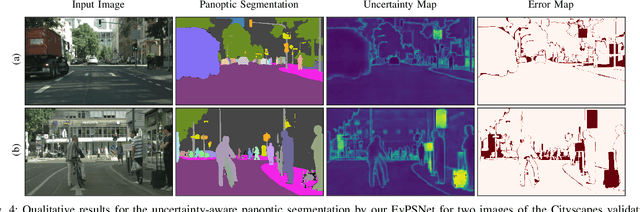

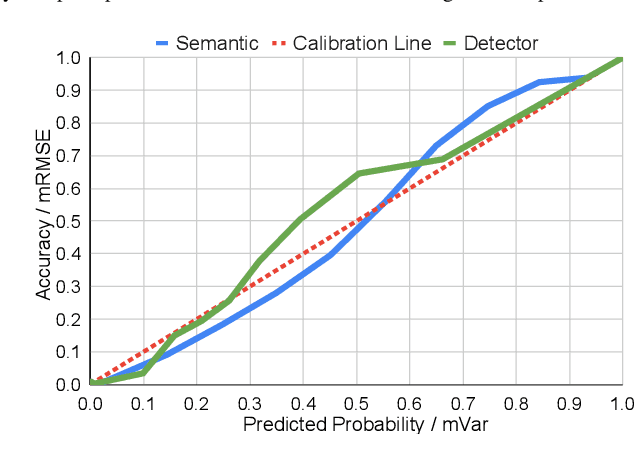

Abstract: Reliable scene understanding is indispensable for modern autonomous systems. Current learning-based methods typically try to maximize their performance based on segmentation metrics that only consider the quality of the segmentation. However, for the safe operation of a system in the real world it is crucial to consider the uncertainty in the prediction as well. In this work, we introduce the novel task of uncertainty-aware panoptic segmentation, which aims to predict per-pixel semantic and instance segmentations, together with per-pixel uncertainty estimates. We define two novel metrics to facilitate its quantitative analysis, the uncertainty-aware Panoptic Quality (uPQ) and the panoptic Expected Calibration Error (pECE). We further propose the novel top-down Evidential Panoptic Segmentation Network (EvPSNet) to solve this task. Our architecture employs a simple yet effective probabilistic fusion module that leverages the predicted uncertainties. Additionally, we propose a new Lovász evidential loss function to optimize the IoU for the segmentation utilizing the probabilities provided by deep evidential learning. Furthermore, we provide several strong baselines combining state-of-the-art panoptic segmentation networks with sampling-free uncertainty estimation techniques. Extensive evaluations show that our EvPSNet achieves the new state-of-the-art for the standard Panoptic Quality (PQ), as well as for our uncertainty-aware panoptic metrics.
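As background for the calibration metric mentioned above, here is a minimal sketch of the standard Expected Calibration Error with equal-width confidence bins. It illustrates the general idea only. The abstract does not define the panoptic variant (pECE), so this is not that metric, and the function name, bin count, and toy inputs are assumptions.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Standard ECE: weighted mean gap between confidence and accuracy per bin.

    confidences: predicted confidences in [0, 1], one per prediction.
    correct: booleans, True where the prediction matched the label.
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Equal-width bins; a confidence of exactly 1.0 goes in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / n) * abs(avg_conf - accuracy)
    return ece


# Toy example: fully confident predictions that are right only half the time.
overconfident = expected_calibration_error([1.0] * 4, [True, True, False, False])
# Toy example: 90% confidence with 9 of 10 predictions correct.
calibrated = expected_calibration_error([0.9] * 10, [True] * 9 + [False])
```

A perfectly calibrated model scores close to zero, while overconfident predictions raise the score even when the segmentation accuracy itself is unchanged.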

Robust Monocular Localization in Sparse HD Maps Leveraging Multi-Task Uncertainty Estimation

Oct 20, 2021

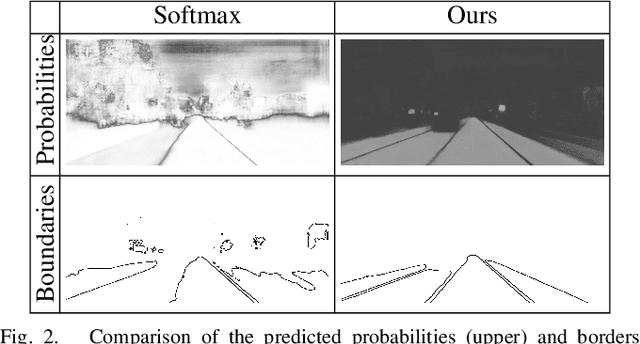

Abstract: Robust localization in dense urban scenarios using a low-cost sensor setup and sparse HD maps is highly relevant for the current advances in autonomous driving, but remains a challenging topic in research. We present a novel monocular localization approach based on a sliding-window pose graph that leverages predicted uncertainties for increased precision and robustness against challenging scenarios and per-frame failures. To this end, we propose an efficient multi-task uncertainty-aware perception module, which covers semantic segmentation as well as bounding box detection, to enable the localization of vehicles in sparse maps containing only lane borders and traffic lights. Further, we design differentiable cost maps that are directly generated from the estimated uncertainties. This opens up the possibility to minimize the reprojection loss of amorphous map elements in an association-free and uncertainty-aware manner. Extensive evaluation on the Lyft 5 dataset shows that, despite the sparsity of the map, our approach enables robust and accurate 6D localization in challenging urban scenarios.

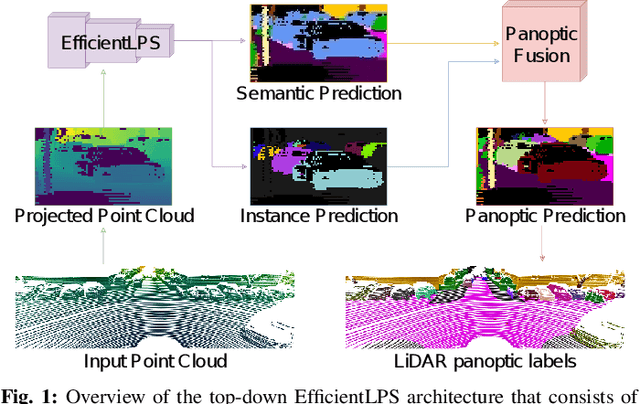

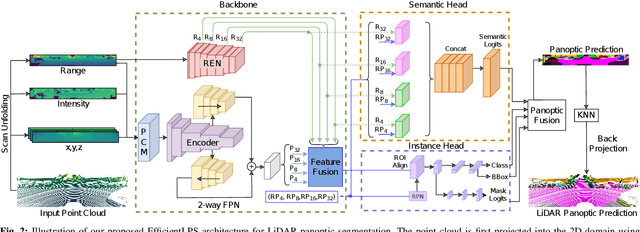

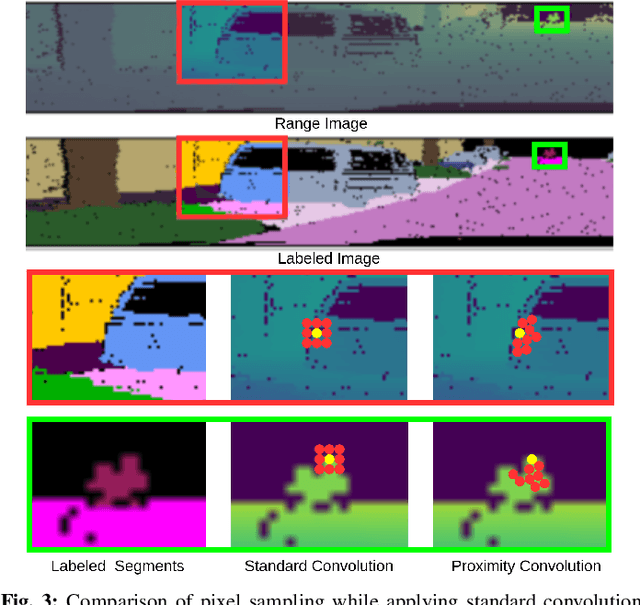

EfficientLPS: Efficient LiDAR Panoptic Segmentation

Mar 02, 2021

Abstract: Panoptic segmentation of point clouds is a crucial task that enables autonomous vehicles to comprehend their vicinity using their highly accurate and reliable LiDAR sensors. Existing top-down approaches tackle this problem by either combining independent task-specific networks or translating methods from the image domain, ignoring the intricacies of LiDAR data and thus often resulting in sub-optimal performance. In this paper, we present the novel top-down Efficient LiDAR Panoptic Segmentation (EfficientLPS) architecture that addresses multiple challenges in segmenting LiDAR point clouds, including distance-dependent sparsity, severe occlusions, large scale-variations, and re-projection errors. EfficientLPS comprises a novel shared backbone that encodes with strengthened geometric transformation modeling capacity and aggregates semantically rich range-aware multi-scale features. It incorporates new scale-invariant semantic and instance segmentation heads along with the panoptic fusion module, which is supervised by our proposed panoptic periphery loss function. Additionally, we formulate a regularized pseudo labeling framework to further improve the performance of EfficientLPS by training on unlabelled data. We benchmark our proposed model on two large-scale LiDAR datasets: nuScenes, for which we also provide ground truth annotations, and SemanticKITTI. Notably, EfficientLPS sets the new state-of-the-art on both these datasets.
