Lukas Luft

Long-Term Urban Vehicle Localization Using Pole Landmarks Extracted from 3-D Lidar Scans

Oct 23, 2019

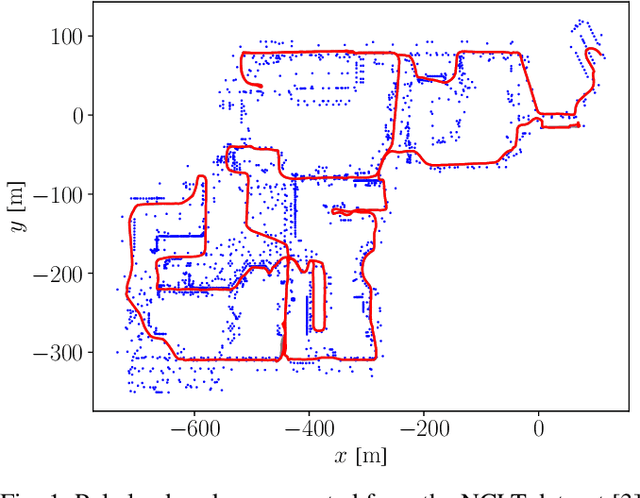

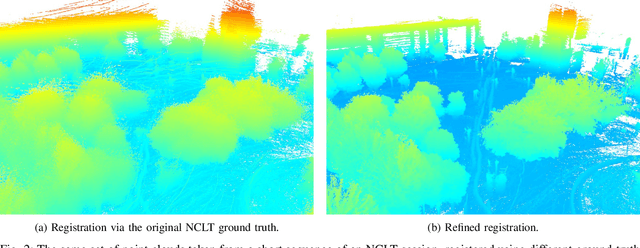

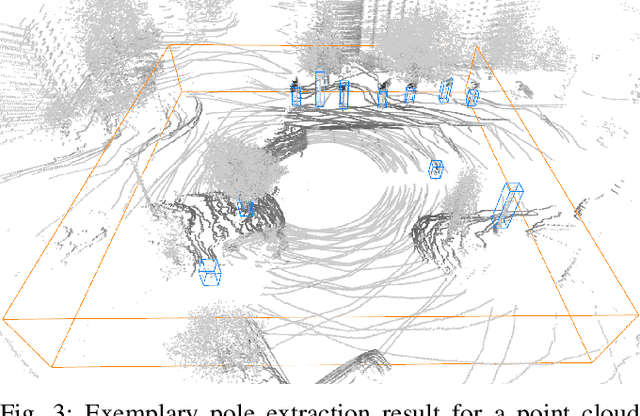

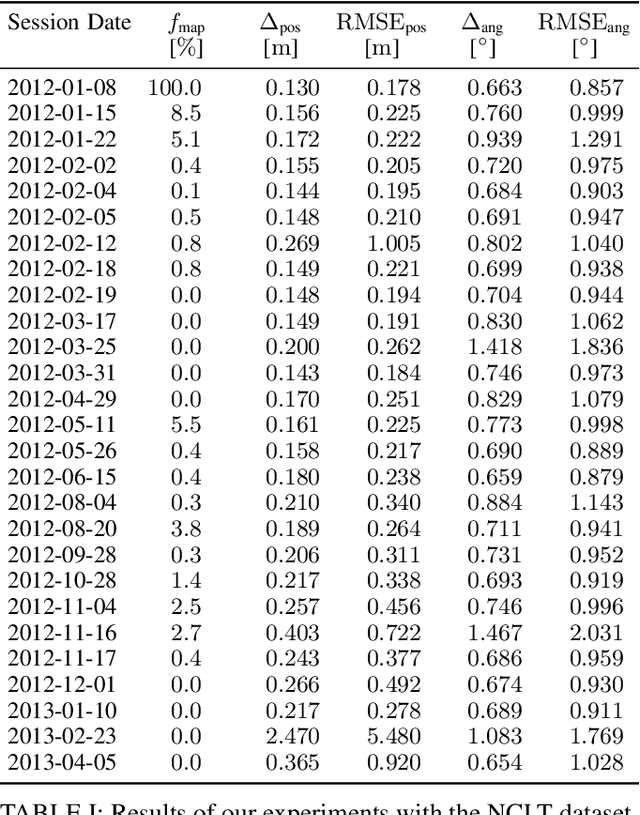

Abstract: Due to their ubiquity and long-term stability, pole-like objects are well suited to serve as landmarks for vehicle localization in urban environments. In this work, we present a complete mapping and long-term localization system based on pole landmarks extracted from 3-D lidar data. Our approach features a novel pole detector, a mapping module, and an online localization module, each of which is described in detail, and for which we provide an open-source implementation at www.github.com/acschaefer/polex. In extensive experiments, we demonstrate that our method improves on the state of the art with respect to long-term reliability and accuracy: First, we prove reliability by tasking the system with localizing a mobile robot over the course of 15 months in an urban area based on an initial map, confronting it with constantly varying routes, differing weather conditions, seasonal changes, and construction sites. Second, we show that the proposed approach clearly outperforms a recently published method in terms of accuracy.

* 9 pages

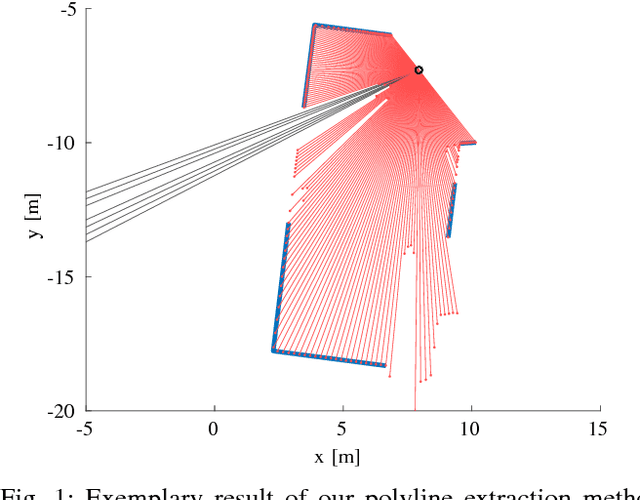

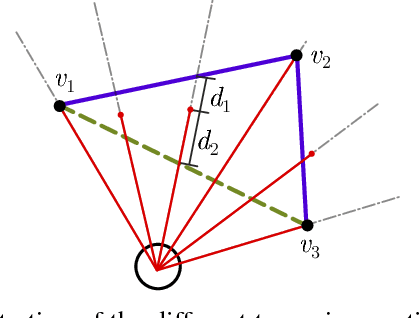

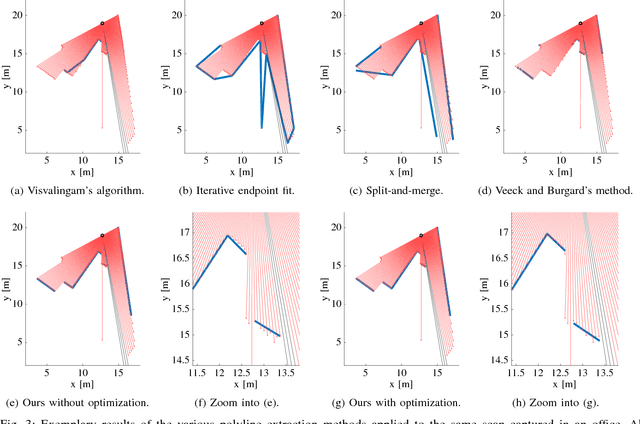

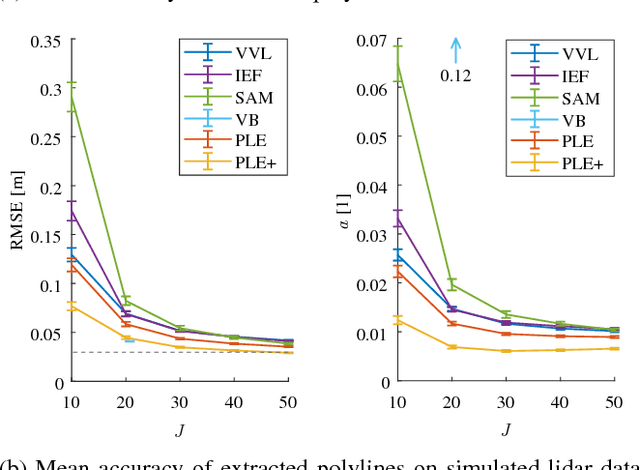

A Maximum Likelihood Approach to Extract Polylines from 2-D Laser Range Scans

Oct 23, 2019

Abstract: Man-made environments such as households, offices, or factory floors are typically composed of linear structures. Accordingly, polylines are a natural way to accurately represent their geometry. In this paper, we propose a novel probabilistic method to extract polylines from raw 2-D laser range scans. The key idea of our approach is to determine a set of polylines that maximizes the likelihood of a given scan. In extensive experiments carried out on publicly available real-world datasets and on simulated laser scans, we demonstrate that our method substantially outperforms existing state-of-the-art approaches in terms of accuracy, while showing comparable computational requirements. Our implementation is available at https://github.com/acschaefer/ple.

* 9 pages

DCT Maps: Compact Differentiable Lidar Maps Based on the Cosine Transform

Oct 23, 2019

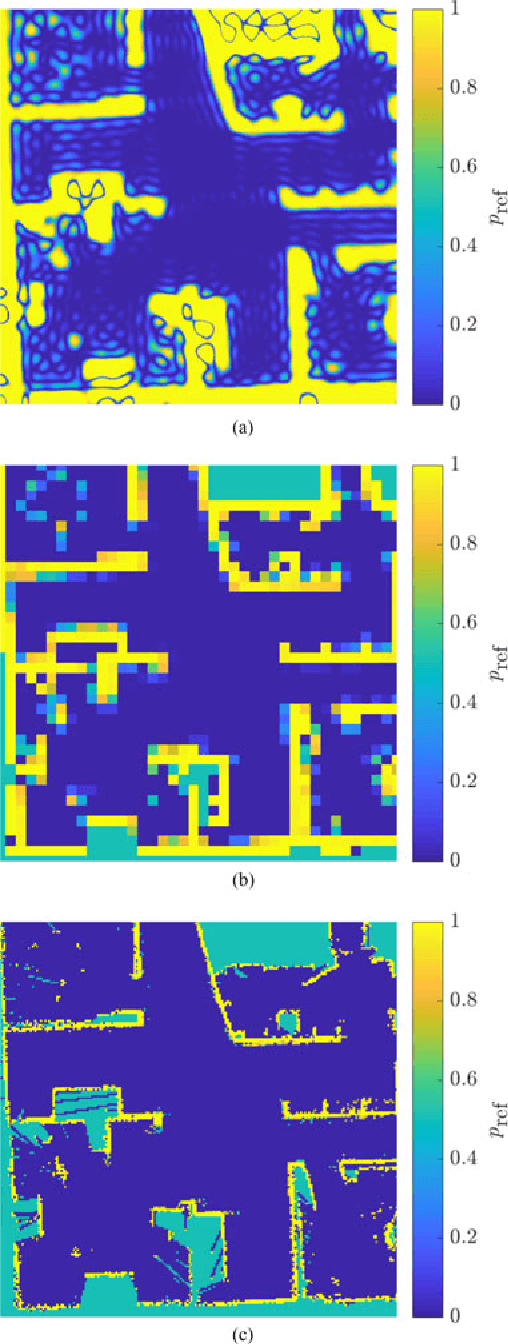

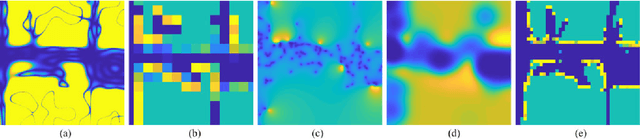

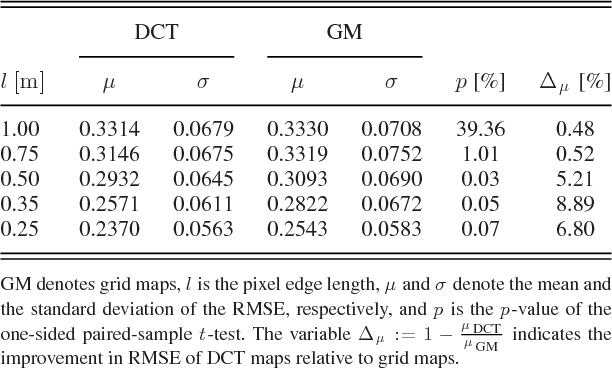

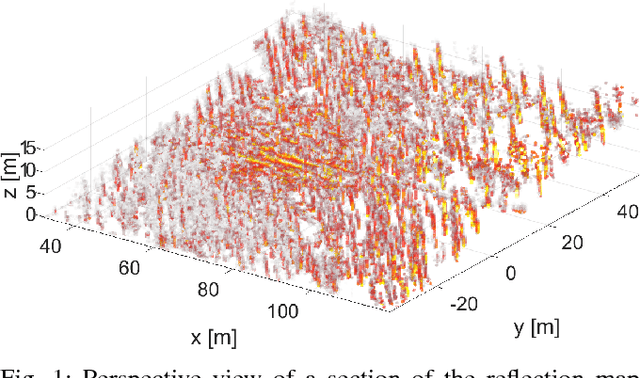

Abstract: Most robot mapping techniques for lidar sensors tessellate the environment into pixels or voxels and assume uniformity of the environment within them. Although intuitive, this representation entails disadvantages: The resulting grid maps exhibit aliasing effects and are not differentiable. In the present paper, we address these drawbacks by introducing a novel mapping technique that relies neither on tessellation nor on the assumption of piecewise uniformity of the space, without increasing memory requirements. Instead of representing the map in the position domain, we store the map parameters in the discrete frequency domain and leverage the continuous extension of the inverse discrete cosine transform to convert them to a continuously differentiable scalar field in the position domain, which we call a DCT map. A DCT map assigns to each point in space a lidar decay rate, which models the local permeability of the space for laser rays. In this way, the map can describe objects of different laser permeabilities, from completely opaque to completely transparent. DCT maps represent lidar measurements significantly more accurately than grid maps, Gaussian process occupancy maps, and Hilbert maps, all with the same memory requirements, as demonstrated in our real-world experiments.

* 8 pages
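
The idea of a continuous extension of the inverse discrete cosine transform can be illustrated in one dimension. The sketch below is not taken from the paper: the function names, the unnormalized DCT-II convention, and the sample values are assumptions chosen for illustration. It stores a signal as cosine coefficients, evaluates the inverse transform at arbitrary real-valued positions, and computes the analytic derivative, which a piecewise-constant grid does not offer.

```python
import math

def dct2(samples):
    """Unnormalized DCT-II of a 1-D sequence (illustrative convention)."""
    n = len(samples)
    return [
        sum(x * math.cos(math.pi / n * (i + 0.5) * k) for i, x in enumerate(samples))
        for k in range(n)
    ]

def idct_continuous(coeffs, t):
    """Inverse of dct2, evaluated at a real-valued position t.

    For integer t in 0..n-1 this reproduces the original samples;
    for non-integer t it interpolates smoothly between them.
    """
    n = len(coeffs)
    value = coeffs[0] / n
    for k in range(1, n):
        value += 2.0 / n * coeffs[k] * math.cos(math.pi / n * (t + 0.5) * k)
    return value

def idct_derivative(coeffs, t):
    """Analytic derivative of idct_continuous with respect to t."""
    n = len(coeffs)
    return sum(
        -2.0 / n * coeffs[k] * (math.pi * k / n) * math.sin(math.pi / n * (t + 0.5) * k)
        for k in range(1, n)
    )

# Hypothetical 1-D profile, e.g. a value that is high inside an obstacle.
samples = [0.0, 0.1, 2.0, 2.0, 0.1, 0.0]
coeffs = dct2(samples)

value_between_cells = idct_continuous(coeffs, 2.3)
slope_between_cells = idct_derivative(coeffs, 2.3)
```

Because the field is a finite sum of cosines, it is differentiable everywhere, and the number of stored coefficients equals the number of samples. How the paper constrains the field to valid decay rates and extends it to higher dimensions is not covered by this sketch.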

Closed-Form Full Map Posteriors for Robot Localization with Lidar Sensors

Oct 23, 2019

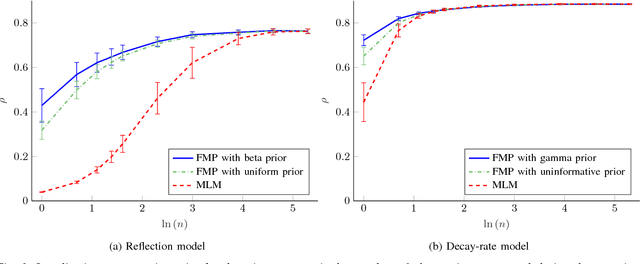

Abstract: A popular class of lidar-based grid mapping algorithms computes for each map cell the probability that it reflects an incident laser beam. These algorithms typically determine the map as the set of reflection probabilities that maximizes the likelihood of the underlying laser data and do not compute the full posterior distribution over all possible maps. In doing so, they discard crucial information about the confidence of the estimate. The approach presented in this paper preserves this information by determining the full map posterior. In general, this problem is hard because distributions over real-valued quantities can possess infinitely many dimensions. However, for two state-of-the-art beam-based lidar models, our approach yields closed-form map posteriors that possess only two parameters per cell. Even better, these posteriors come for free, in the sense that they use the same parameters as the traditional approaches, without the need for additional computations. An important use case for grid maps is robot localization, which we formulate as Bayesian filtering based on the closed-form map posterior rather than based on a single map. The resulting measurement likelihoods can also be expressed in closed form. In simulations and extensive real-world experiments, we show that leveraging the full map posterior improves the localization accuracy compared to approaches that use the most likely map.

* 7 pages
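
To make the difference between a most likely map and a full map posterior concrete, here is a minimal sketch for a single cell. It assumes a reflection model in which each cell counts reflections (hits) and traversals (misses) and uses the standard Beta conjugate posterior with a uniform prior; the abstract does not name the distribution family, so the Beta form, the prior, and all function names are assumptions for illustration.

```python
def most_likely_reflection_probability(hits, misses):
    """Maximum likelihood estimate used by traditional reflection maps."""
    return hits / (hits + misses)

def reflection_posterior(hits, misses, prior_a=1.0, prior_b=1.0):
    """Beta posterior over a cell's reflection probability.

    Assumption: Bernoulli reflections with a Beta(prior_a, prior_b) prior,
    so the posterior is Beta(prior_a + hits, prior_b + misses). It is
    described by two parameters derived from the same two counts.
    """
    a = prior_a + hits
    b = prior_b + misses
    mean = a / (a + b)
    variance = a * b / ((a + b) ** 2 * (a + b + 1.0))
    return a, b, mean, variance

# Two hypothetical cells with identical maximum likelihood estimates
# but very different amounts of supporting data.
sparse_ml = most_likely_reflection_probability(3, 1)
dense_ml = most_likely_reflection_probability(300, 100)

_, _, _, sparse_var = reflection_posterior(3, 1)
_, _, _, dense_var = reflection_posterior(300, 100)
```

Both cells receive the same point estimate, yet the posterior variance of the sparsely observed cell is much larger. This is the confidence information that a single most likely map discards.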

An Analytical Lidar Sensor Model Based on Ray Path Information

Oct 23, 2019

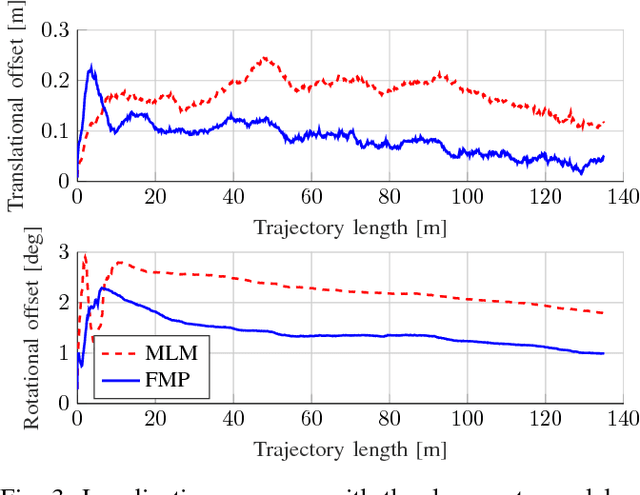

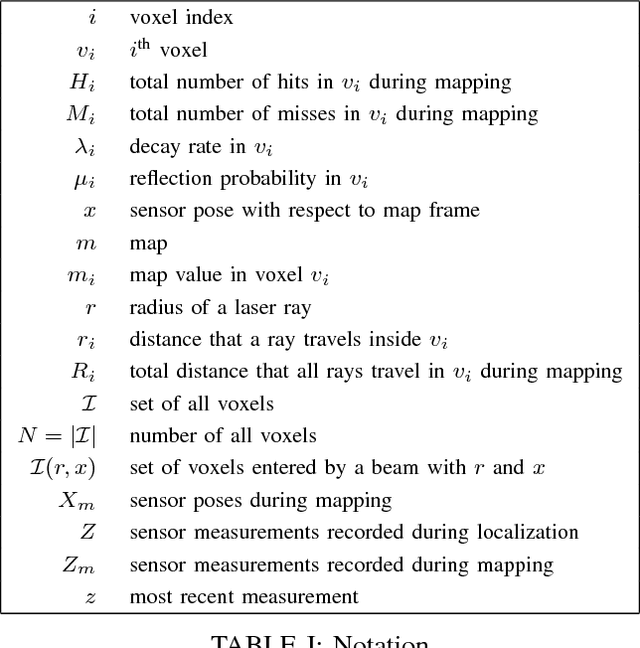

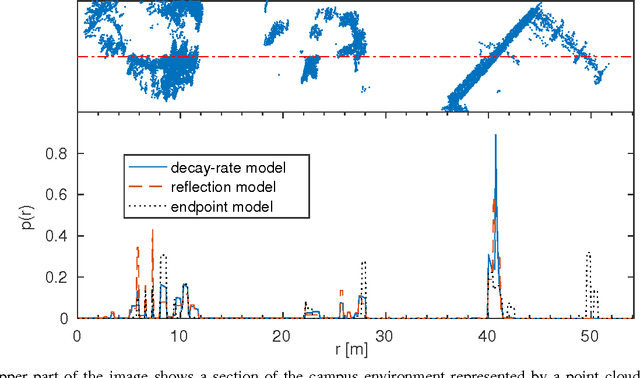

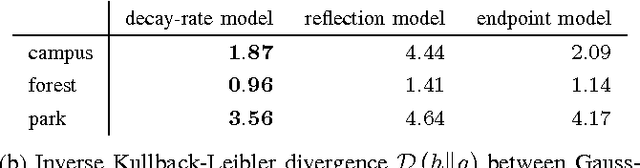

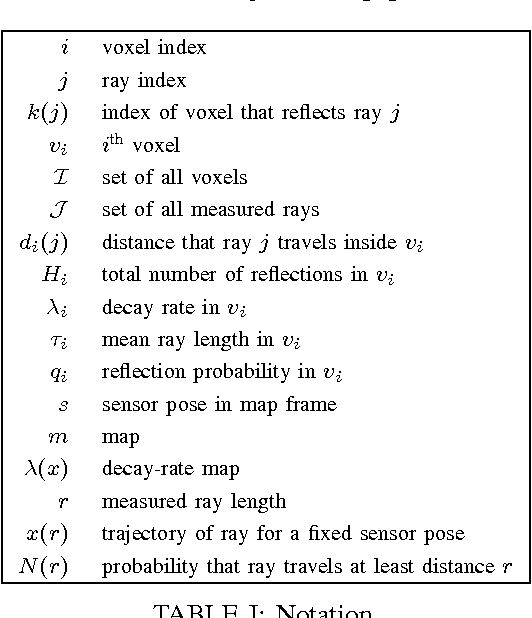

Abstract: Two core competencies of a mobile robot are to build a map of the environment and to estimate its own pose on the basis of this map and incoming sensor readings. To account for the uncertainties in this process, one typically employs probabilistic state estimation approaches combined with a model of the specific sensor. Over the past years, lidar sensors have become a popular choice for mapping and localization. However, many common lidar models perform poorly in unstructured, unpredictable environments, lack a consistent physical model for both mapping and localization, and do not exploit all the information the sensor provides, e.g., out-of-range measurements. In this paper, we introduce a consistent physical model that can be applied to mapping as well as to localization. It naturally deals with unstructured environments and makes use of both out-of-range measurements and information about the ray path. The approach can be seen as a generalization of the well-established reflection model, but in addition to counting ray reflections and traversals in a specific map cell, it considers the distances that all rays travel inside this cell. We prove that the resulting map maximizes the data likelihood and demonstrate that our model outperforms state-of-the-art sensor models in extensive real-world experiments.

* 8 pages
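
One way to picture a per-cell model that uses travel distances in addition to reflection counts is an exponential decay model. The sketch below assumes that a ray is reflected inside a cell at a constant rate per unit distance; under that assumption, the maximum likelihood rate is the number of reflections divided by the total distance all rays traveled in the cell. The abstract does not state this formula, so the model and the function names are illustrative assumptions rather than the paper's definition.

```python
import math

def decay_rate(reflections, distances):
    """Maximum likelihood decay rate of one cell under an assumed
    exponential decay model: reflections per unit distance traveled.

    reflections: number of rays that ended inside the cell
    distances:   distance each observed ray traveled inside the cell,
                 for reflected and traversing rays alike
    """
    total_distance = sum(distances)
    if total_distance <= 0.0:
        raise ValueError("cell has not been observed by any ray")
    return reflections / total_distance

def traversal_probability(rate, distance):
    """Probability that a ray travels `distance` through the cell
    without being reflected, under the same exponential assumption."""
    return math.exp(-rate * distance)

# Hypothetical cell: four rays passed through, two of them were reflected.
rate = decay_rate(2, [0.5, 0.5, 1.0, 1.0])
p_pass = traversal_probability(rate, 1.0)
```

Unlike a pure hit-and-miss count, this estimate distinguishes a ray that merely clips the corner of a cell from one that crosses its full width.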
