Alexander Schaefer

Smaller Models, Smarter Rewards: A Two-Sided Approach to Process and Outcome Rewards

Oct 27, 2025

Abstract: Generating high-quality code remains a challenge for Large Language Models (LLMs). For the evolution of reasoning models on this task, reward models are a necessary intermediate step. These models judge outcomes or intermediate steps. Decoder-only transformer models can be turned into reward models by introducing a regression layer and supervised fine-tuning. While it is known that reflection capabilities generally increase with model size, we investigate whether state-of-the-art small language models such as the Phi-4 family can be turned into usable reward models that blend process rewards and outcome rewards. To this end, we construct a dataset of code samples with correctness labels derived from the APPS coding challenge benchmark. We then train a value-head model to estimate the success probability of intermediate outputs. Our evaluation shows that small LLMs can serve as effective reward models or code evaluation critics, successfully identifying correct solutions among multiple candidates. Using this critic, we improve the ability to find the most accurate code among multiple generations by over 20%.
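The abstract describes adding a regression layer (value head) to a decoder-only model and using the resulting critic to pick the best of several generations. The sketch below illustrates only that selection step, under stated assumptions: the hidden-state vectors are invented stand-ins for an LLM's final-token hidden state, the weights would in practice be learned by fine-tuning on the APPS-derived labels, and the sigmoid output as well as the names `value_head` and `best_of_n` are hypothetical choices, not the paper's code.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def value_head(hidden: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Linear regression layer on a hidden state, squashed to a success probability."""
    return sigmoid(sum(h * w for h, w in zip(hidden, weights)) + bias)


def best_of_n(hidden_states: Sequence[Sequence[float]],
              weights: Sequence[float], bias: float) -> int:
    """Index of the candidate the critic rates most likely to be correct."""
    scores: List[float] = [value_head(h, weights, bias) for h in hidden_states]
    return max(range(len(scores)), key=scores.__getitem__)


# Made-up 3-dimensional "final-token hidden states" for three candidate programs.
candidates = [[0.2, -1.0, 0.5], [1.5, 0.3, -0.2], [-0.7, 0.1, 0.9]]
w, b = [1.0, 0.5, -0.5], 0.0
print(best_of_n(candidates, w, b))  # → 1
```

Because the head can score any prefix of a program, the same function could in principle rank partial outputs during search as well as finished ones; treating it that way here is an assumption about how process and outcome rewards are blended.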

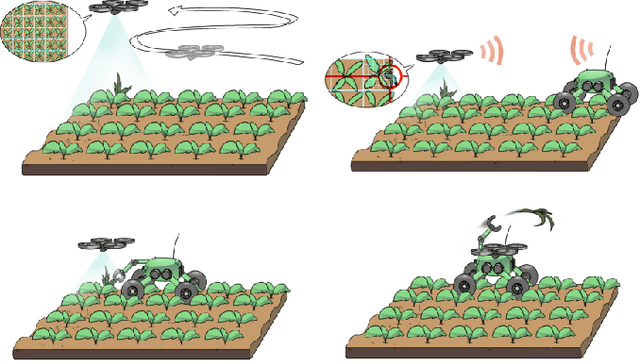

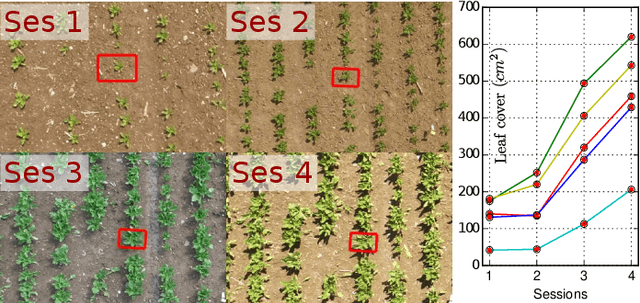

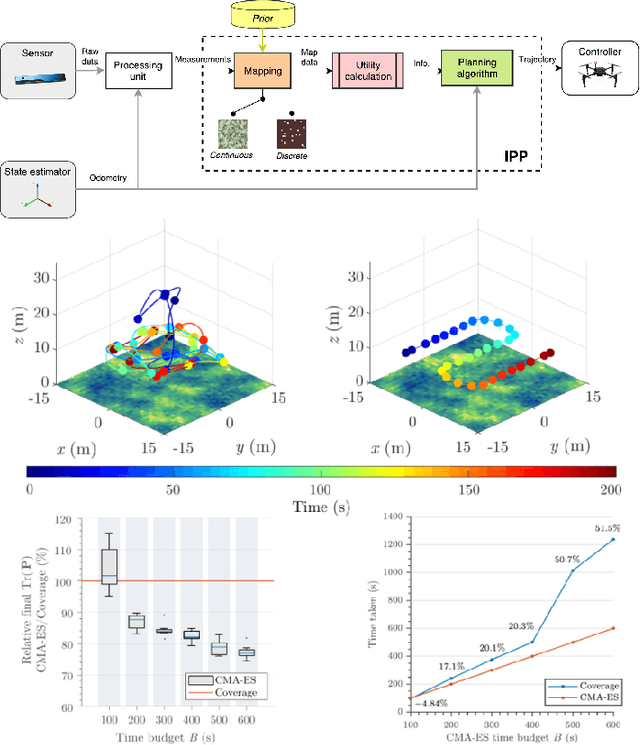

Building an Aerial-Ground Robotics System for Precision Farming

Nov 08, 2019

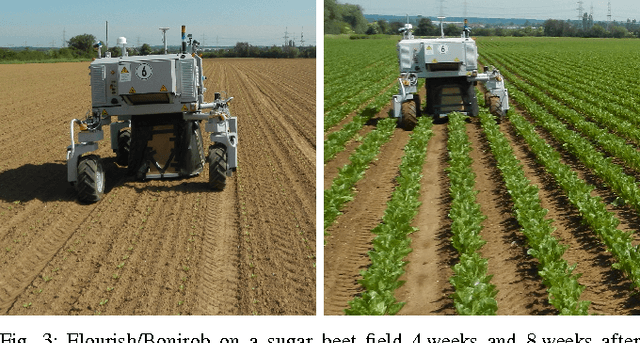

Abstract: The application of autonomous robots in agriculture is gaining popularity thanks to the high impact it may have on food security, sustainability, resource use efficiency, reduction of chemical treatments, minimization of human effort, and maximization of yield. The Flourish research project addressed this challenge by developing an adaptable robotic solution for precision farming that combines the aerial survey capabilities of small autonomous unmanned aerial vehicles (UAVs) with flexible targeted intervention performed by multi-purpose agricultural unmanned ground vehicles (UGVs). This paper presents an exhaustive overview of the scientific and technological advances and outcomes obtained in the Flourish project. We introduce multi-spectral perception algorithms and aerial and ground-based systems developed to monitor crop density, weed pressure, and crop nitrogen nutrition status, and to accurately classify and locate weeds. We then introduce the navigation and mapping systems that deal with the specific characteristics of the employed robots and of the agricultural environment, highlighting the collaborative modules that enable the UAVs and UGVs to collect and share information in a unified environment model. Finally, we present the ground intervention hardware, software solutions, and interfaces we implemented and tested in different field conditions and with different crops. We also describe a real use case in which a UAV collaborates with a UGV to monitor the field and perform selective spraying treatments fully autonomously.

Long-Term Urban Vehicle Localization Using Pole Landmarks Extracted from 3-D Lidar Scans

Oct 23, 2019

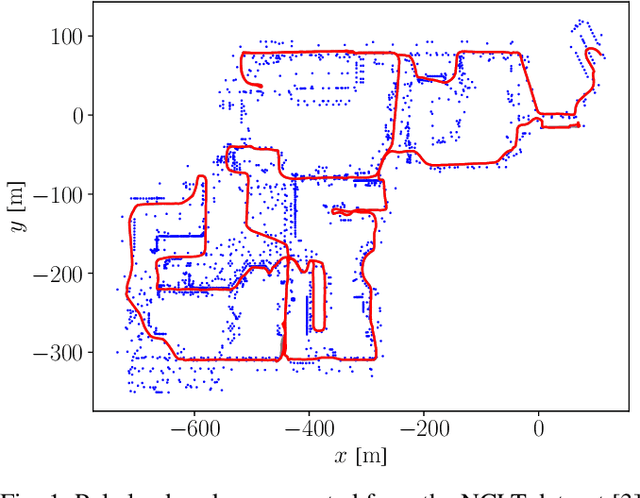

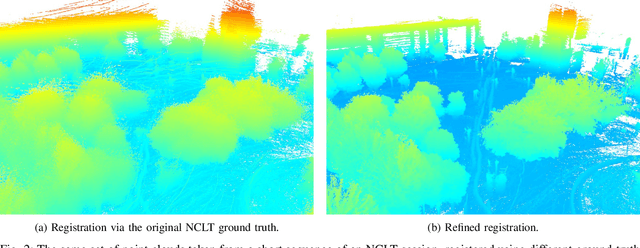

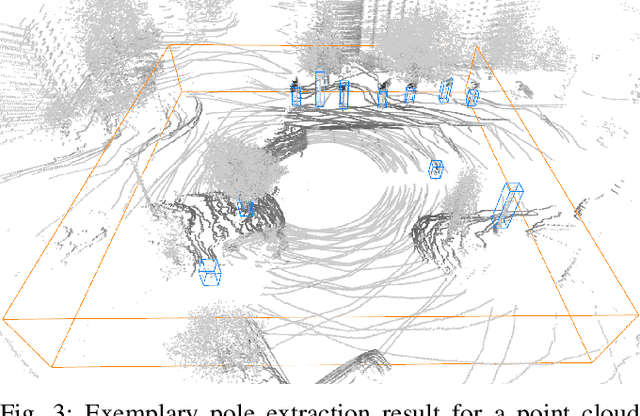

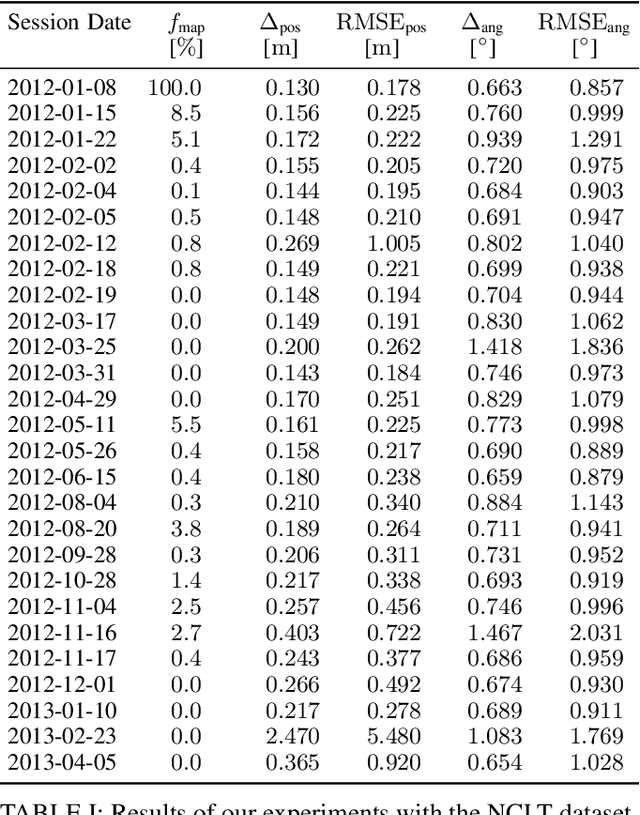

Abstract: Due to their ubiquity and long-term stability, pole-like objects are well suited to serve as landmarks for vehicle localization in urban environments. In this work, we present a complete mapping and long-term localization system based on pole landmarks extracted from 3-D lidar data. Our approach features a novel pole detector, a mapping module, and an online localization module, each of which is described in detail, and for which we provide an open-source implementation at www.github.com/acschaefer/polex. In extensive experiments, we show that our method improves on the state of the art with respect to long-term reliability and accuracy. First, we demonstrate reliability by tasking the system with localizing a mobile robot over the course of 15 months in an urban area based on an initial map, confronting it with constantly varying routes, differing weather conditions, seasonal changes, and construction sites. Second, we show that the proposed approach clearly outperforms a recently published method in terms of accuracy.

* 9 pages
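The abstract does not spell out the localization module's measurement model. As a hedged illustration of how pole landmarks can weight pose hypotheses (for example, particles in a particle filter), the sketch below transforms detected poles into the map frame and scores each against its nearest map pole with a capped Gaussian. Nearest-neighbour association, `sigma`, `max_dist`, and all function names are assumptions, not the method of the paper or of the polex repository.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # x, y, heading in radians


def to_world(pose: Pose, p: Point) -> Point:
    """Transform a point from the vehicle frame into the world frame."""
    x, y, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    return (x + c * p[0] - s * p[1], y + s * p[0] + c * p[1])


def pose_likelihood(pose: Pose, detections: Sequence[Point], map_poles: Sequence[Point],
                    sigma: float = 0.2, max_dist: float = 1.0) -> float:
    """Weight a pose hypothesis by matching each detected pole to its nearest map pole.

    Distances are capped at max_dist so that one unmatched detection (a new pole,
    a false positive) lowers the weight without driving it to zero.
    """
    log_w = 0.0
    for det in detections:
        wx, wy = to_world(pose, det)
        nearest = min(math.hypot(wx - mx, wy - my) for mx, my in map_poles)
        log_w -= 0.5 * (min(nearest, max_dist) / sigma) ** 2
    return math.exp(log_w)


map_poles: List[Point] = [(10.0, 0.0), (10.0, 5.0), (0.0, 8.0)]
# Poles as seen from a vehicle at (2, 1) facing along +x.
detections: List[Point] = [(8.0, -1.0), (8.0, 4.0), (-2.0, 7.0)]
print(pose_likelihood((2.0, 1.0, 0.0), detections, map_poles))  # → 1.0
print(pose_likelihood((2.5, 1.0, 0.1), detections, map_poles))  # smaller weight
```

The cap on the matching distance is a design choice aimed at the long-term setting described above, where construction sites and seasonal changes make some detections unmatchable.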

A Maximum Likelihood Approach to Extract Finite Planes from 3-D Laser Scans

Oct 23, 2019

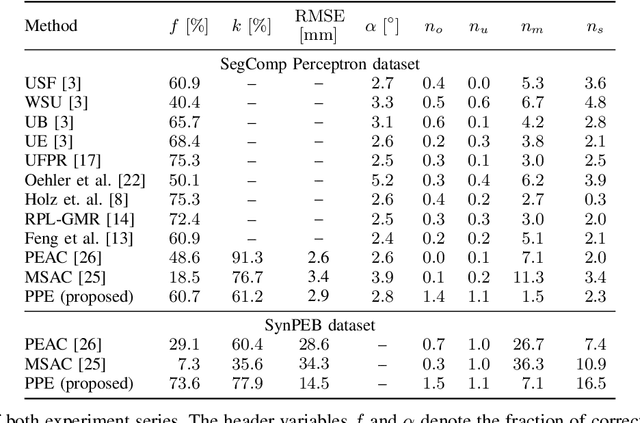

Abstract: Whether it is object detection, model reconstruction, laser odometry, or point cloud registration, plane extraction is a vital component of many robotic systems. In this paper, we propose a strictly probabilistic method to detect finite planes in organized 3-D laser range scans. An agglomerative hierarchical clustering technique, our algorithm builds planes from the bottom up, always extending a plane by the point that decreases the measurement likelihood of the scan the least. In contrast to most related methods, which rely on heuristics like the orthogonal point-to-plane distance, we leverage ray path information to compute the measurement likelihood. We not only evaluate our approach on the popular SegComp benchmark but also provide a challenging synthetic dataset that overcomes SegComp's deficiencies. Both our implementation and the suggested dataset are available at www.github.com/acschaefer/ppe.
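To make the contrast between ray path information and the orthogonal point-to-plane heuristic concrete, here is a minimal sketch of one greedy extension step. It assumes Gaussian noise on the measured range along each ray and holds the plane hypothesis fixed (the full algorithm would refit after each extension and consider only neighbouring points of the organized scan); `sigma` and all function names are hypothetical, and the paper's likelihood model may differ.

```python
import math
from typing import Optional, Sequence, Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def expected_range(normal: Vec, offset: float, ray: Vec) -> Optional[float]:
    """Range along a unit ray from the sensor origin to the plane normal . x = offset."""
    denom = dot(normal, ray)
    if abs(denom) < 1e-12:
        return None                      # ray parallel to the plane
    t = offset / denom
    return t if t > 0 else None          # plane lies behind the sensor


def ray_log_likelihood(normal: Vec, offset: float, ray: Vec, measured: float,
                       sigma: float = 0.05) -> float:
    """Gaussian log-likelihood of a measured range, with the error taken along the ray."""
    t = expected_range(normal, offset, ray)
    if t is None:
        return -math.inf
    return -0.5 * ((measured - t) / sigma) ** 2 - math.log(sigma * math.sqrt(2 * math.pi))


def orthogonal_distance(normal: Vec, offset: float, ray: Vec, measured: float) -> float:
    """Heuristic used by many methods: perpendicular distance of the point to the plane."""
    point = (measured * ray[0], measured * ray[1], measured * ray[2])
    return abs(dot(normal, point) - offset)


def best_candidate(normal: Vec, offset: float,
                   candidates: Sequence[Tuple[Vec, float]]) -> int:
    """Index of the (ray, range) pair whose inclusion costs the least log-likelihood."""
    scores = [ray_log_likelihood(normal, offset, ray, r) for ray, r in candidates]
    return max(range(len(scores)), key=scores.__getitem__)


# Plane z = 2 seen from the origin; two candidate measurements.
plane_n, plane_d = (0.0, 0.0, 1.0), 2.0
steep = (0.0, 0.0, 1.0)                                        # looks straight up
shallow = (math.cos(math.pi / 6), 0.0, math.sin(math.pi / 6))  # 30 degrees elevation
candidates = [(steep, 2.06), (shallow, 4.1)]
print(best_candidate(plane_n, plane_d, candidates))  # → 0
print([round(orthogonal_distance(plane_n, plane_d, u, r), 3) for u, r in candidates])
```

By perpendicular distance the shallow-angle point looks closer to the plane (about 0.05 versus 0.06), but its range error along the ray is about 0.1 versus 0.06, so the ray-based likelihood prefers the steep measurement.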

A Maximum Likelihood Approach to Extract Polylines from 2-D Laser Range Scans

Oct 23, 2019

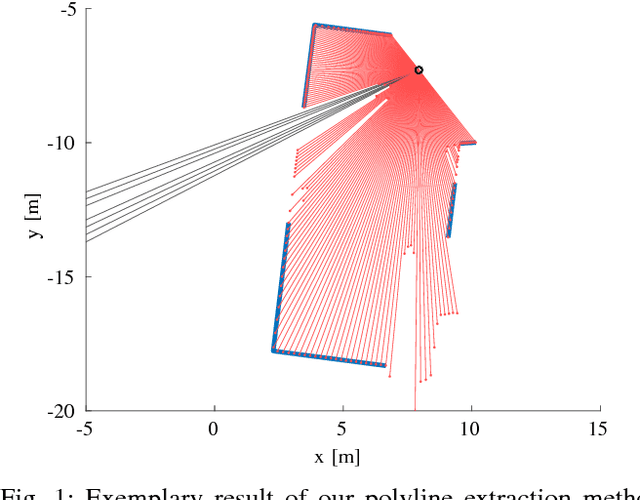

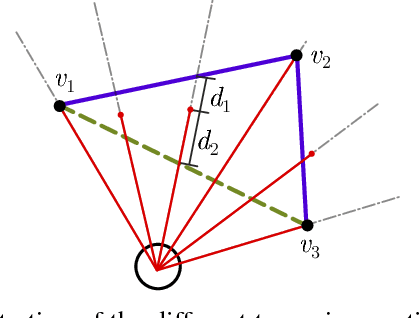

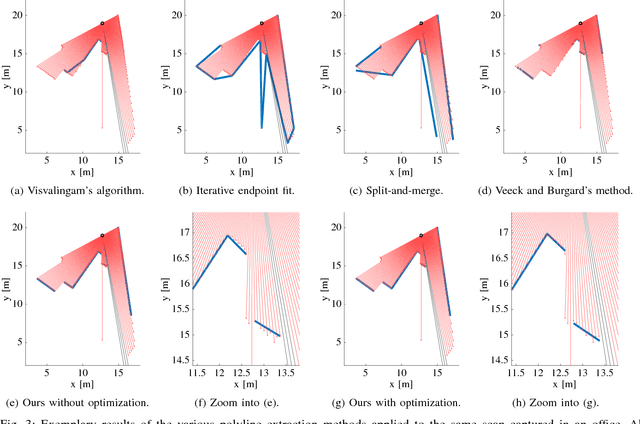

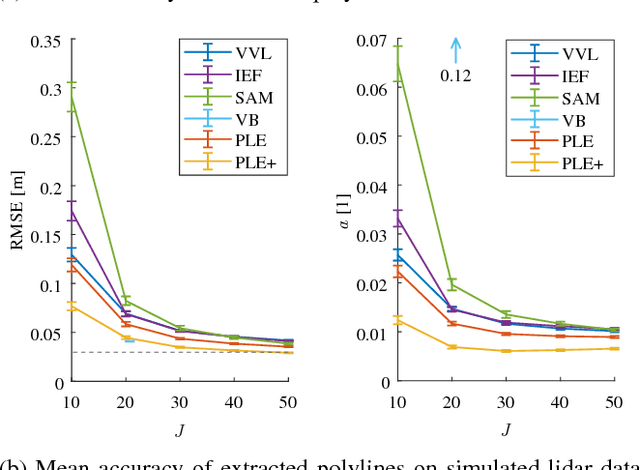

Abstract: Man-made environments such as households, offices, or factory floors are typically composed of linear structures. Accordingly, polylines are a natural way to accurately represent their geometry. In this paper, we propose a novel probabilistic method to extract polylines from raw 2-D laser range scans. The key idea of our approach is to determine a set of polylines that maximizes the likelihood of a given scan. In extensive experiments carried out on publicly available real-world datasets and on simulated laser scans, we demonstrate that our method substantially outperforms existing state-of-the-art approaches in terms of accuracy while having comparable computational requirements. Our implementation is available at https://github.com/acschaefer/ple.

* 9 pages
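The key idea above is to choose the polylines that maximize the likelihood of the scan. A minimal sketch of how such a likelihood can be evaluated for one candidate set, assuming independent beams from the origin with Gaussian range noise and a fixed penalty (`miss_loglik`, hypothetical) for beams that hit no polyline. The search over candidate polylines and the paper's actual sensor model are not shown, and all names are illustrative.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def ray_segment_range(angle: float, a: Point, b: Point) -> Optional[float]:
    """Range at which a ray from the origin at `angle` hits segment ab, or None."""
    ux, uy = math.cos(angle), math.sin(angle)
    ex, ey = b[0] - a[0], b[1] - a[1]
    denom = ux * ey - uy * ex                 # 2-D cross product u x e
    if abs(denom) < 1e-12:
        return None                           # ray parallel to the segment
    # Solve t*u = a + s*e for the range t and the segment parameter s.
    t = (a[0] * ey - a[1] * ex) / denom
    s = (a[0] * uy - a[1] * ux) / denom
    return t if t > 0 and 0.0 <= s <= 1.0 else None


def expected_range(angle: float, polylines: Sequence[Sequence[Point]]) -> Optional[float]:
    """Closest intersection of the ray with any segment of any polyline."""
    best: Optional[float] = None
    for line in polylines:
        for a, b in zip(line, line[1:]):
            t = ray_segment_range(angle, a, b)
            if t is not None and (best is None or t < best):
                best = t
    return best


def scan_log_likelihood(scan: Sequence[Tuple[float, float]],
                        polylines: Sequence[Sequence[Point]],
                        sigma: float = 0.02, miss_loglik: float = -10.0) -> float:
    """Sum of per-beam Gaussian range log-likelihoods for (angle, range) pairs."""
    total = 0.0
    for angle, measured in scan:
        t = expected_range(angle, polylines)
        if t is None:
            total += miss_loglik              # hypothetical penalty: model predicts no return
        else:
            total += -0.5 * ((measured - t) / sigma) ** 2 - math.log(sigma * math.sqrt(2 * math.pi))
    return total


# A flat wall at x = 2 observed by three beams.
scan = [(a, 2.0 / math.cos(a)) for a in (-0.3, 0.0, 0.3)]
wall_good: List[Point] = [(2.0, -1.0), (2.0, 1.0)]
wall_off: List[Point] = [(2.1, -1.0), (2.1, 1.0)]
print(scan_log_likelihood(scan, [wall_good]) > scan_log_likelihood(scan, [wall_off]))  # → True
```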

DCT Maps: Compact Differentiable Lidar Maps Based on the Cosine Transform

Oct 23, 2019

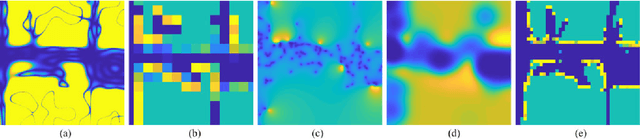

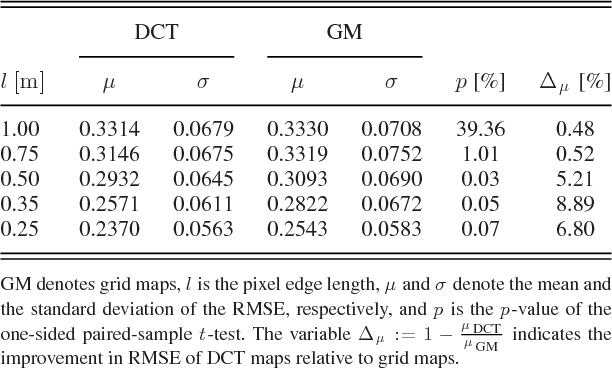

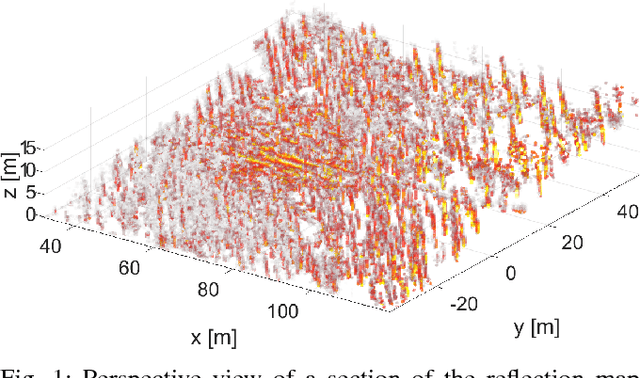

Abstract: Most robot mapping techniques for lidar sensors tessellate the environment into pixels or voxels and assume uniformity of the environment within them. Although intuitive, this representation entails disadvantages: the resulting grid maps exhibit aliasing effects and are not differentiable. In the present paper, we address these drawbacks by introducing a novel mapping technique that relies neither on tessellation nor on the assumption of piecewise uniformity of the space, without increasing memory requirements. Instead of representing the map in the position domain, we store the map parameters in the discrete frequency domain and leverage the continuous extension of the inverse discrete cosine transform to convert them to a continuously differentiable scalar field in the position domain, which we call a DCT map. A DCT map assigns to each point in space a lidar decay rate, which models the local permeability of the space for laser rays. In this way, the map can describe objects of different laser permeabilities, from completely opaque to completely transparent. As demonstrated in our real-world experiments, DCT maps represent lidar measurements significantly more accurately than grid maps, Gaussian process occupancy maps, and Hilbert maps, all with the same memory requirements.

* 8 pages
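The central mechanism in this abstract is evaluating the inverse DCT at arbitrary real-valued positions rather than only at integer indices. A 1-D sketch, assuming the unnormalized DCT-II convention shown in the docstring (the paper's maps are multi-dimensional and may use a different normalization and coefficient layout); the function names and sample values are hypothetical. It shows that the continuous inverse reproduces the stored samples at integer positions, interpolates smoothly between them, and has a closed-form derivative.

```python
import math
from typing import List, Sequence


def dct2(samples: Sequence[float]) -> List[float]:
    """Unnormalized DCT-II: X_k = sum_n x_n * cos(pi / N * (n + 1/2) * k)."""
    n = len(samples)
    return [sum(x * math.cos(math.pi / n * (i + 0.5) * k) for i, x in enumerate(samples))
            for k in range(n)]


def idct_continuous(coeffs: Sequence[float], pos: float) -> float:
    """Inverse DCT (scaled DCT-III) evaluated at a real-valued position `pos`."""
    n = len(coeffs)
    value = coeffs[0] / n
    for k in range(1, n):
        value += 2.0 / n * coeffs[k] * math.cos(math.pi / n * (pos + 0.5) * k)
    return value


def idct_continuous_grad(coeffs: Sequence[float], pos: float) -> float:
    """Analytic derivative of idct_continuous with respect to pos."""
    n = len(coeffs)
    grad = 0.0
    for k in range(1, n):
        grad -= 2.0 / n * coeffs[k] * (math.pi * k / n) * math.sin(math.pi / n * (pos + 0.5) * k)
    return grad


# Made-up decay rates sampled along a 1-D line.
rates = [0.0, 0.2, 1.5, 0.3, 0.0, 0.0, 0.8, 0.1]
coeffs = dct2(rates)
print(round(idct_continuous(coeffs, 2.0), 6))   # → 1.5 (reproduces the sample)
print(idct_continuous(coeffs, 2.5), idct_continuous_grad(coeffs, 2.5))
```

Note that this sketch does not constrain the interpolated value to stay non-negative, which a physical decay rate requires.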

Closed-Form Full Map Posteriors for Robot Localization with Lidar Sensors

Oct 23, 2019

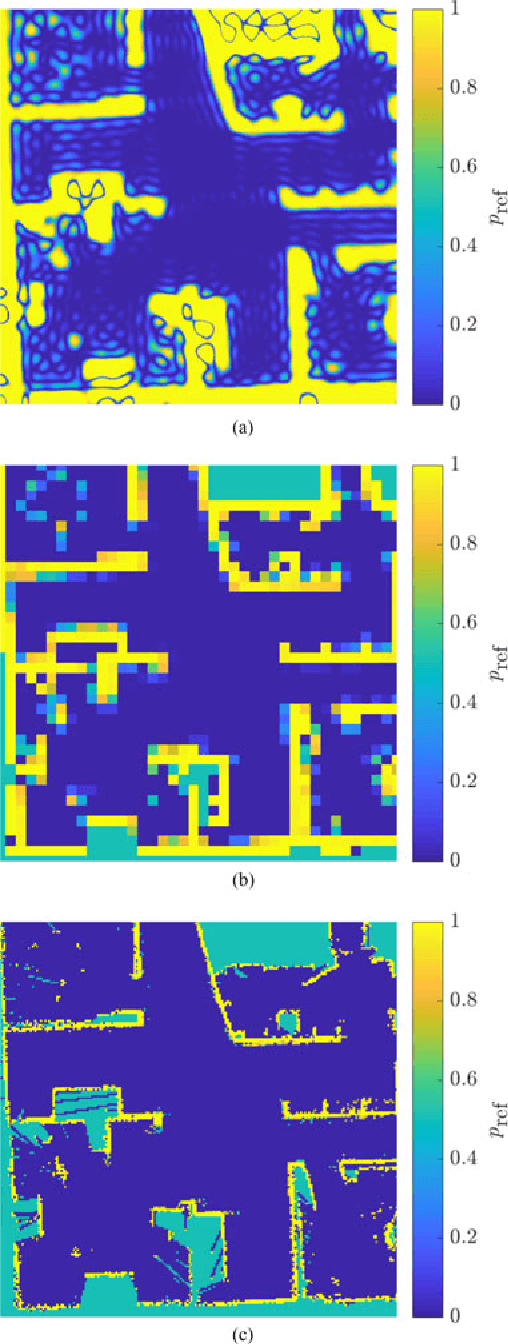

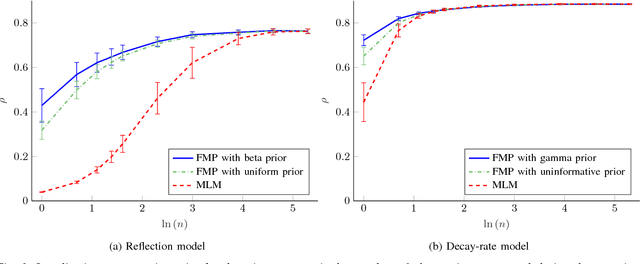

Abstract: A popular class of lidar-based grid mapping algorithms computes, for each map cell, the probability that it reflects an incident laser beam. These algorithms typically determine the map as the set of reflection probabilities that maximizes the likelihood of the underlying laser data and do not compute the full posterior distribution over all possible maps. In doing so, they discard crucial information about the confidence of the estimate. The approach presented in this paper preserves this information by determining the full map posterior. In general, this problem is hard because distributions over real-valued quantities can possess infinitely many dimensions. However, for two state-of-the-art beam-based lidar models, our approach yields closed-form map posteriors that possess only two parameters per cell. Even better, these posteriors come for free, in the sense that they use the same parameters as the traditional approaches, without the need for additional computations. An important use case for grid maps is robot localization, which we formulate as Bayesian filtering based on the closed-form map posterior rather than on a single map. The resulting measurement likelihoods can also be expressed in closed form. In simulations and extensive real-world experiments, we show that leveraging the full map posterior improves localization accuracy compared to approaches that use the most likely map.

* 7 pages
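The abstract states that, for two beam-based lidar models, the full posterior per cell has only two parameters that coincide with quantities traditional approaches already track. A minimal sketch, assuming these are a reflection model (hit and miss counts, giving a Beta posterior) and a decay-rate model (hit count and summed in-cell ray length, giving a Gamma posterior), each with a uniform (flat) prior. The class names are hypothetical, and the paper's priors and parameterization may differ. The example shows the confidence information a maximum-likelihood map discards.

```python
from dataclasses import dataclass


@dataclass
class ReflectionCell:
    """Reflection-model cell: counts define a Beta(hits + 1, misses + 1) posterior.

    Assumes a uniform Beta(1, 1) prior on the reflection probability.
    """
    hits: int
    misses: int

    def ml_estimate(self) -> float:
        return self.hits / (self.hits + self.misses)

    def posterior_mean(self) -> float:
        a, b = self.hits + 1, self.misses + 1
        return a / (a + b)

    def posterior_var(self) -> float:
        a, b = self.hits + 1, self.misses + 1
        return a * b / ((a + b) ** 2 * (a + b + 1))


@dataclass
class DecayCell:
    """Decay-model cell: hits and summed ray length define a Gamma(hits + 1, rate=distance) posterior.

    Assumes a flat prior on the non-negative decay rate.
    """
    hits: int
    distance: float

    def ml_estimate(self) -> float:
        return self.hits / self.distance

    def posterior_mean(self) -> float:
        return (self.hits + 1) / self.distance

    def posterior_var(self) -> float:
        return (self.hits + 1) / self.distance ** 2


# Two cells with the same maximum-likelihood estimate but very different evidence.
sparse, dense = ReflectionCell(1, 1), ReflectionCell(100, 100)
print(sparse.ml_estimate(), dense.ml_estimate())      # → 0.5 0.5
print(sparse.posterior_var() > dense.posterior_var())  # → True
```

Under the Beta posterior, the probability that the next ray is reflected in the cell is the posterior mean (hits + 1) / (hits + misses + 2), which is one way a closed-form measurement likelihood can arise.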

An Analytical Lidar Sensor Model Based on Ray Path Information

Oct 23, 2019

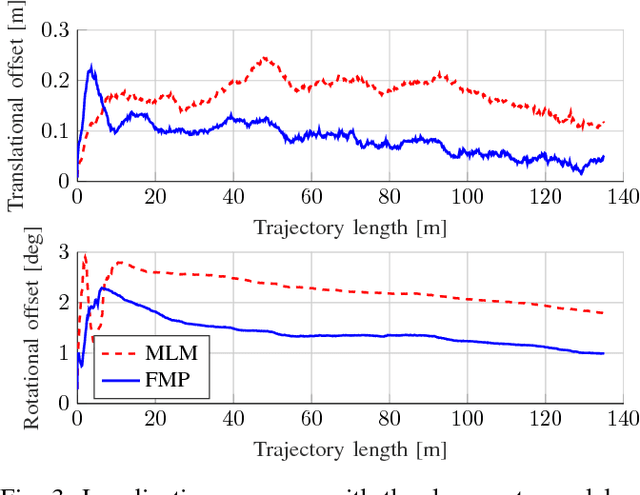

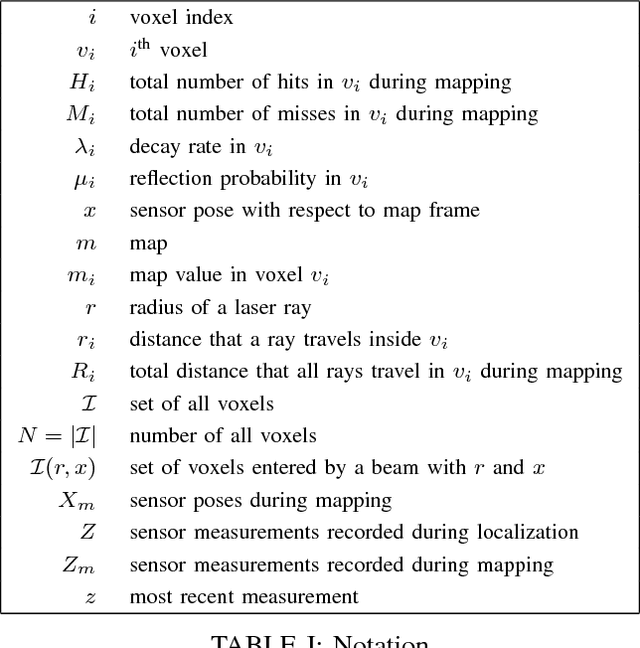

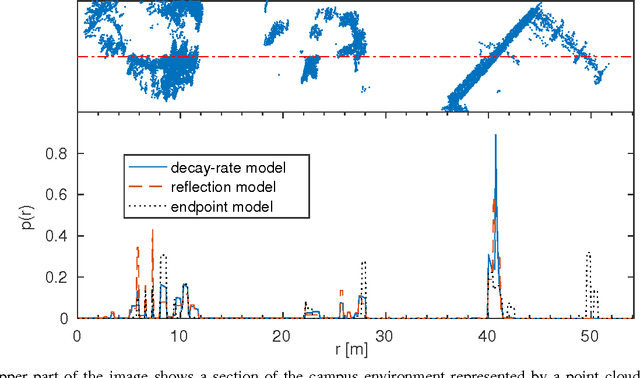

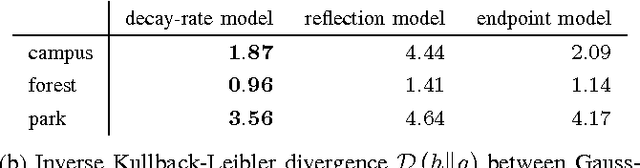

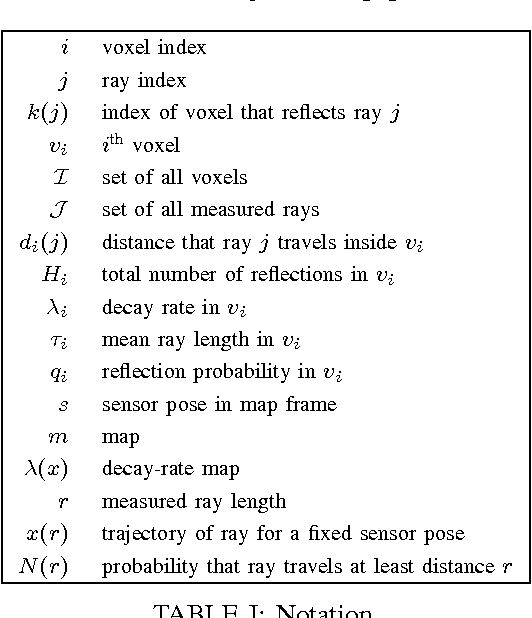

Abstract: Two core competencies of a mobile robot are building a map of the environment and estimating its own pose on the basis of this map and incoming sensor readings. To account for the uncertainties in this process, one typically employs probabilistic state estimation approaches combined with a model of the specific sensor. In recent years, lidar sensors have become a popular choice for mapping and localization. However, many common lidar models perform poorly in unstructured, unpredictable environments, lack a consistent physical model for both mapping and localization, and do not exploit all the information the sensor provides, such as out-of-range measurements. In this paper, we introduce a consistent physical model that can be applied to mapping as well as to localization. It naturally deals with unstructured environments and makes use of both out-of-range measurements and information about the ray path. The approach can be seen as a generalization of the well-established reflection model: in addition to counting ray reflections and traversals in a specific map cell, it considers the distances that all rays travel inside this cell. We prove that the resulting map maximizes the data likelihood and demonstrate that our model outperforms state-of-the-art sensor models in extensive real-world experiments.

* 8 pages
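The abstract contrasts counting reflections and traversals with also using the distance each ray travels inside a cell. The sketch below assumes an exponential ray-termination model with a per-cell decay rate, under which the maximum-likelihood rate is the number of reflections divided by the summed in-cell distance; the exponential form and all names are assumptions, not a transcription of the paper. The example shows two cells that the reflection model cannot tell apart.

```python
import math
from typing import Sequence, Tuple

# One record per ray that touched the cell: (distance travelled inside the cell, ray ended here?)
RayRecord = Tuple[float, bool]


def reflection_estimate(rays: Sequence[RayRecord]) -> float:
    """Reflection model: fraction of rays that ended (were reflected) in the cell."""
    return sum(1 for _, ended in rays if ended) / len(rays)


def decay_rate_estimate(rays: Sequence[RayRecord]) -> float:
    """Decay-rate model: reflections divided by the total distance travelled inside the cell."""
    hits = sum(1 for _, ended in rays if ended)
    return hits / sum(d for d, _ in rays)


def log_likelihood(rate: float, rays: Sequence[RayRecord]) -> float:
    """Exponential model: density rate*exp(-rate*d) if the ray ends, exp(-rate*d) if it passes."""
    return sum((math.log(rate) if ended else 0.0) - rate * d for d, ended in rays)


# Same hit/miss counts, but in cell_b the passing rays only clip a corner.
cell_a = [(0.1, False), (0.1, False), (0.05, True)]
cell_b = [(0.01, False), (0.01, False), (0.05, True)]
print(reflection_estimate(cell_a), reflection_estimate(cell_b))
print(decay_rate_estimate(cell_a), decay_rate_estimate(cell_b))
```

Setting the derivative of hits * log(rate) - rate * total_distance to zero gives rate = hits / total_distance, which is why `decay_rate_estimate` maximizes `log_likelihood` under this assumed model.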

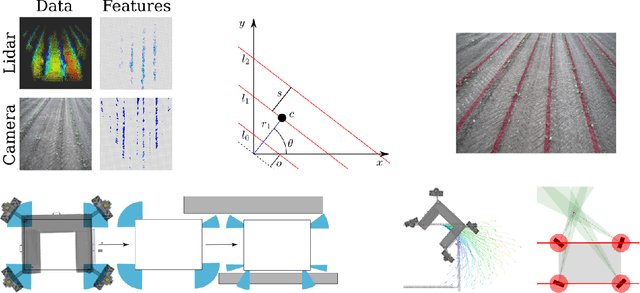

From Plants to Landmarks: Time-invariant Plant Localization that uses Deep Pose Regression in Agricultural Fields

Sep 14, 2017

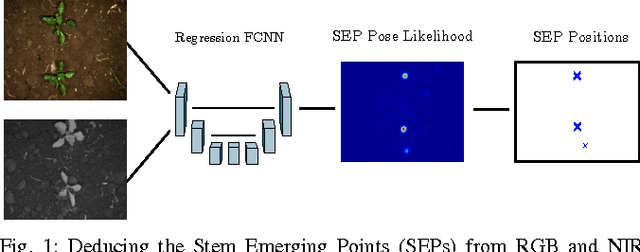

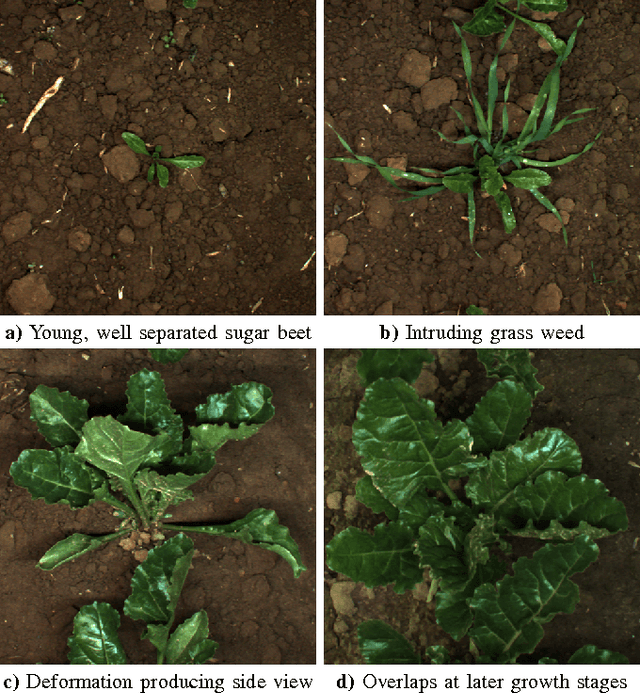

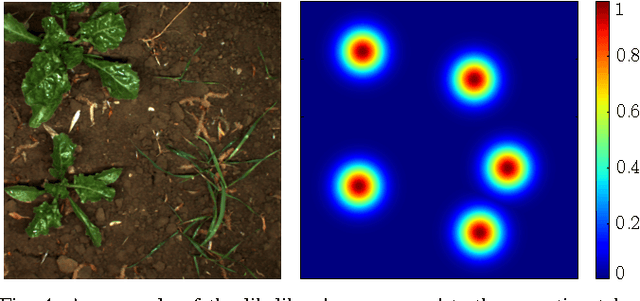

Abstract: Agricultural robots are expected to increase yields in a sustainable way and automate precision tasks, such as weeding and plant monitoring. At the same time, they move in a continuously changing, semi-structured field environment in which features can hardly be found and reproduced at a later time. Challenges for lidar and visual detection systems stem from the fact that plants can be very small and overlapping and have a steadily changing appearance. Therefore, a popular way to localize vehicles with high accuracy relies on expensive global navigation satellite systems rather than on natural landmarks. The contribution of this work is a novel image-based plant localization technique that uses the time-invariant stem emerging point as a reference. Our approach is based on a fully convolutional neural network that learns landmark localization from RGB and NIR image input in an end-to-end manner. The network performs pose regression to generate a plant location likelihood map. Our approach allows us to cope with visual variances of plants both for different species and different growth stages. We achieve high localization accuracy, as shown in detailed evaluations over a sugar beet cultivation phase. In experiments with our BoniRob, we demonstrate that detections can be robustly reproduced with centimeter accuracy.
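The network's output is described as a plant location likelihood map, but the abstract does not say how individual stem positions are read from it. A common approach, assumed here, is to keep cells that exceed a threshold and are strict maxima of their 3x3 neighbourhood. The function name, threshold, and example map are hypothetical.

```python
from typing import List, Sequence, Tuple


def find_peaks(heatmap: Sequence[Sequence[float]], threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Return (row, col) of cells above `threshold` that are strict maxima of their 3x3 neighbourhood."""
    rows, cols = len(heatmap), len(heatmap[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            v = heatmap[r][c]
            if v < threshold:
                continue
            neighbours = [heatmap[rr][cc]
                          for rr in range(max(r - 1, 0), min(r + 2, rows))
                          for cc in range(max(c - 1, 0), min(c + 2, cols))
                          if (rr, cc) != (r, c)]
            if all(v > n for n in neighbours):
                peaks.append((r, c))
    return peaks


# Made-up likelihood map with two plant stems.
heat = [
    [0.0, 0.1, 0.0, 0.0, 0.0],
    [0.1, 0.9, 0.2, 0.0, 0.0],
    [0.0, 0.2, 0.1, 0.0, 0.1],
    [0.0, 0.0, 0.0, 0.3, 0.7],
    [0.0, 0.0, 0.0, 0.2, 0.4],
]
print(find_peaks(heat))  # → [(1, 1), (3, 4)]
```

Because the comparison is strict, equal-valued plateaus produce no peak; a real pipeline would also convert grid indices into metric field coordinates.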
