Chong Tang

Parallax: Runtime Parallelization for Operator Fallbacks in Heterogeneous Edge Systems

Dec 12, 2025

Abstract: The growing demand for real-time DNN applications on edge devices necessitates faster inference of increasingly complex models. Although many devices include specialized accelerators (e.g., mobile GPUs), dynamic control-flow operators and unsupported kernels often fall back to CPU execution. Existing frameworks handle these fallbacks poorly, leaving CPU cores idle and causing high latency and memory spikes. We introduce Parallax, a framework that accelerates mobile DNN inference without model refactoring or custom operator implementations. Parallax first partitions the computation DAG to expose parallelism, then employs branch-aware memory management with dedicated arenas and buffer reuse to reduce runtime footprint. An adaptive scheduler executes branches according to device memory constraints, while fine-grained subgraph control enables heterogeneous inference of dynamic models. Evaluated on five representative DNNs across three different mobile devices, Parallax achieves up to 46% latency reduction, maintains controlled memory overhead (26.5% on average), and delivers up to 30% energy savings compared with state-of-the-art frameworks, offering improvements aligned with the responsiveness demands of real-time mobile inference.
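As a rough illustration of how partitioning a computation DAG can expose parallelism, the sketch below groups operators into levels whose members have no dependencies on each other, so the operators in one level could in principle run concurrently on otherwise idle CPU cores. The abstract does not detail Parallax's partitioning algorithm, so this is a generic level-by-level topological sort, and the graph and operator names are hypothetical.

```python
from collections import defaultdict, deque

def parallel_levels(edges, nodes):
    """Group DAG nodes into levels; nodes within one level are mutually independent."""
    indegree = {n: 0 for n in nodes}
    children = defaultdict(list)
    for src, dst in edges:
        children[src].append(dst)
        indegree[dst] += 1

    # Start from nodes with no unmet dependencies (Kahn's algorithm, one level at a time).
    ready = deque(sorted(n for n in nodes if indegree[n] == 0))
    levels = []
    while ready:
        level = list(ready)
        ready.clear()
        levels.append(level)
        next_ready = []
        for n in level:
            for c in children[n]:
                indegree[c] -= 1
                if indegree[c] == 0:
                    next_ready.append(c)
        ready.extend(sorted(next_ready))

    if sum(len(level) for level in levels) != len(nodes):
        raise ValueError("graph contains a cycle")
    return levels

# Hypothetical graph: one input feeds two independent branches that later merge.
nodes = ["input", "conv_a", "nms_b", "concat"]
edges = [("input", "conv_a"), ("input", "nms_b"),
         ("conv_a", "concat"), ("nms_b", "concat")]
levels = parallel_levels(edges, nodes)
print(levels)
```

Here `conv_a` and `nms_b` land in the same level, which is the kind of branch-level independence a scheduler could exploit; a real system would also have to weigh memory limits when deciding how many branches to run at once.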

ViRN: Variational Inference and Distribution Trilateration for Long-Tailed Continual Representation Learning

Jul 23, 2025

Abstract: Continual learning (CL) with long-tailed data distributions remains a critical challenge for real-world AI systems, where models must sequentially adapt to new classes while retaining knowledge of old ones, despite severe class imbalance. Existing methods struggle to balance stability and plasticity, often collapsing under extreme sample scarcity. To address this, we propose ViRN, a novel CL framework that integrates variational inference (VI) with distributional trilateration for robust long-tailed learning. First, we model class-conditional distributions via a Variational Autoencoder to mitigate bias toward head classes. Second, we reconstruct tail-class distributions via Wasserstein distance-based neighborhood retrieval and geometric fusion, enabling sample-efficient alignment of tail-class representations. Evaluated on six long-tailed classification benchmarks, including speech (e.g., rare acoustic events, accents) and image tasks, ViRN achieves a 10.24% average accuracy gain over state-of-the-art methods.
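To make the idea of Wasserstein distance-based neighborhood retrieval more concrete, the sketch below ranks candidate classes by their 2-Wasserstein distance to a tail class. It assumes each class is summarized as a Gaussian with diagonal covariance (a common choice for VAE latents, but an assumption here), for which the distance has a simple closed form. The class names and statistics are hypothetical, and the geometric fusion step mentioned in the abstract is not shown.

```python
import math

def w2_diag_gaussian(mu_a, std_a, mu_b, std_b):
    """2-Wasserstein distance between two Gaussians with diagonal covariance.

    For diagonal covariances: W2^2 = ||mu_a - mu_b||^2 + ||std_a - std_b||^2.
    """
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_a, mu_b))
    std_term = sum((a - b) ** 2 for a, b in zip(std_a, std_b))
    return math.sqrt(mean_term + std_term)

def nearest_classes(query, candidates, k):
    """Return the names of the k candidate classes closest to the query in W2."""
    ranked = sorted(
        candidates,
        key=lambda name: w2_diag_gaussian(*query, *candidates[name]),
    )
    return ranked[:k]

# Hypothetical (mean, std) statistics in a 2-D latent space.
head_classes = {
    "dog":  ([0.0, 0.0], [1.0, 1.0]),
    "wolf": ([0.5, 0.0], [1.0, 0.5]),
    "car":  ([5.0, 5.0], [2.0, 2.0]),
}
tail_class = ([0.4, 0.1], [0.9, 0.6])
neighbors = nearest_classes(tail_class, head_classes, k=2)
print(neighbors)
```

The retrieved neighbors could then serve as reference distributions for estimating the tail class, which is where a fusion step would come in.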

A large-scale multimodal dataset of human speech recognition

Mar 15, 2023

Abstract: Non-privacy-invasive detection of small-scale motion has recently attracted a growing amount of research in remote sensing for speech recognition. These new modalities are used to enhance and restore speech information from speakers using multiple types of data. In this paper, we present a dataset that contains 7.5 GHz Channel Impulse Response (CIR) data from ultra-wideband (UWB) radars, 77 GHz frequency modulated continuous wave (FMCW) data from a millimetre wave (mmWave) radar, and laser data. In addition, a depth camera is used to record the landmarks of the subject's lips together with the voice. Approximately 400 minutes of annotated speech profiles are provided, collected from 20 participants speaking 5 vowels, 15 words and 16 sentences. The dataset has been validated and has potential for research on lip reading and multimodal speech recognition.

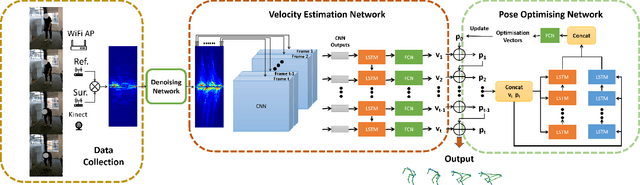

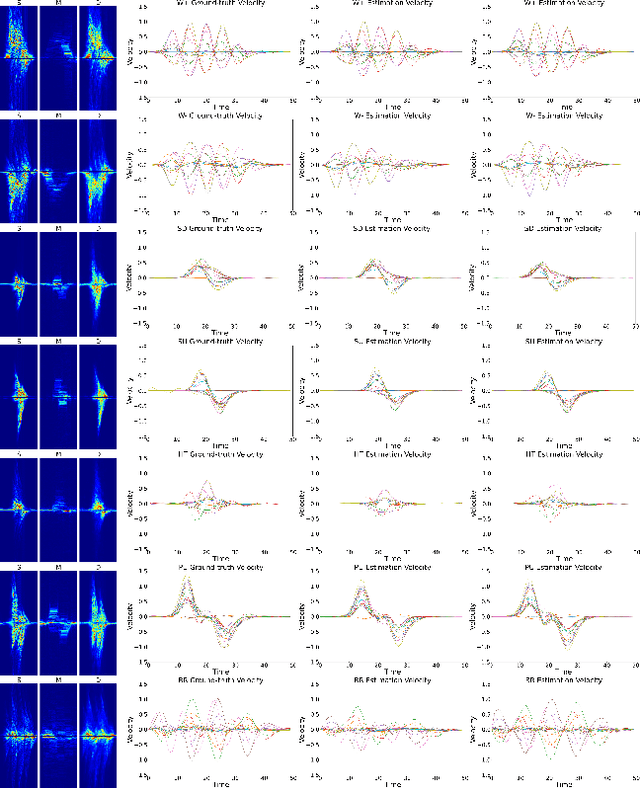

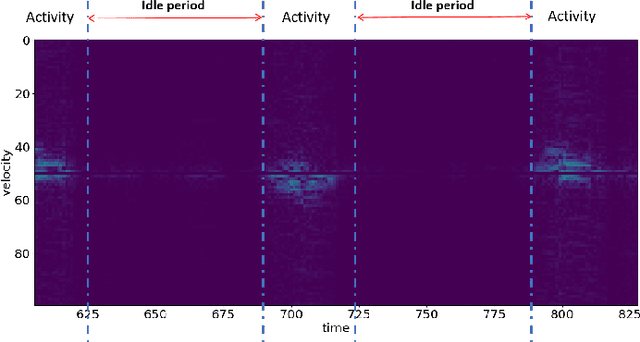

MDPose: Human Skeletal Motion Reconstruction Using WiFi Micro-Doppler Signatures

Jan 11, 2022

Abstract: Motion tracking systems based on optical sensors often suffer from issues such as poor lighting conditions, occlusion, and limited coverage, and may raise privacy concerns. More recently, radio frequency (RF)-based approaches using commercial WiFi devices have emerged, offering low-cost ubiquitous sensing whilst preserving privacy. However, the output of an RF sensing system, such as Range-Doppler spectrograms, cannot represent human motion intuitively and usually requires further processing. In this study, MDPose, a novel framework for human skeletal motion reconstruction based on WiFi micro-Doppler signatures, is proposed. It provides an effective solution to track human activities by reconstructing a skeleton model with 17 key points, which can assist with the interpretation of conventional RF sensing outputs in a more understandable way. Specifically, MDPose has several incremental stages that address a series of challenges in turn. First, a denoising algorithm is implemented to remove any unwanted noise that may affect the feature extraction and to enhance weak Doppler signatures. Second, a convolutional neural network (CNN)-recurrent neural network (RNN) architecture is applied to learn temporal-spatial dependency from clean micro-Doppler signatures and restore the key points' velocity information. Finally, a pose optimising mechanism is employed to estimate the initial state of the skeleton and to limit the increase of error. We have conducted comprehensive tests in a variety of environments using numerous subjects with a single receiver radar system to demonstrate the performance of MDPose, and report a 29.4 mm mean absolute error over all key point positions, which outperforms state-of-the-art RF-based pose estimation systems.
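The abstract states that the network restores key point velocities and that a separate mechanism estimates the initial skeleton state and limits error growth. One plausible way to see why both are needed is that positions recovered from velocities depend on a starting pose and accumulate any velocity error over time. The sketch below is only a forward-Euler integration under that reading, not the MDPose pipeline; the function name, frame interval, and values are hypothetical.

```python
def integrate_keypoints(initial_pose, velocities, dt):
    """Accumulate per-frame key point velocities into positions (forward Euler).

    initial_pose: list of (x, y, z) positions, one per key point.
    velocities:   list of frames, each a list of (vx, vy, vz), one per key point.
    dt:           time between frames, in seconds.
    """
    pose = [list(p) for p in initial_pose]
    trajectory = [[tuple(p) for p in pose]]
    for frame in velocities:
        for point, vel in zip(pose, frame):
            for axis in range(len(point)):
                point[axis] += vel[axis] * dt
        trajectory.append([tuple(p) for p in pose])
    return trajectory

# Hypothetical two-key-point skeleton observed over two frames.
initial_pose = [(0.0, 0.0, 1.0), (0.0, 0.0, 0.0)]
velocities = [
    [(1.0, 0.0, 0.0), (0.0, 0.0, 0.0)],
    [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)],
]
trajectory = integrate_keypoints(initial_pose, velocities, dt=0.5)
print(trajectory[-1])
```

Because every frame adds to the previous position, a small bias in the predicted velocities grows with sequence length, which is consistent with the abstract's emphasis on limiting the increase of error.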

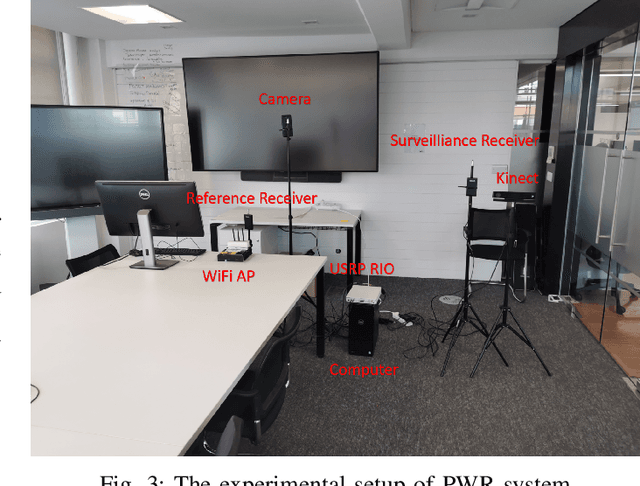

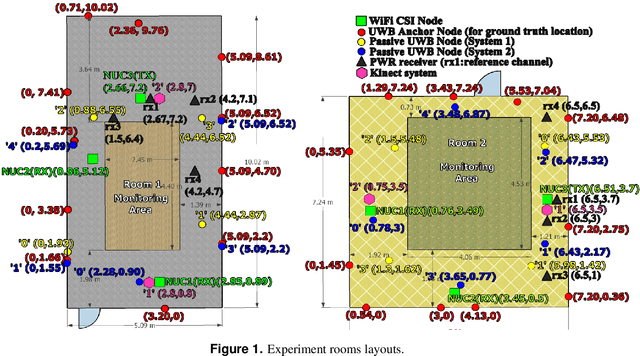

OPERAnet: A Multimodal Activity Recognition Dataset Acquired from Radio Frequency and Vision-based Sensors

Oct 08, 2021

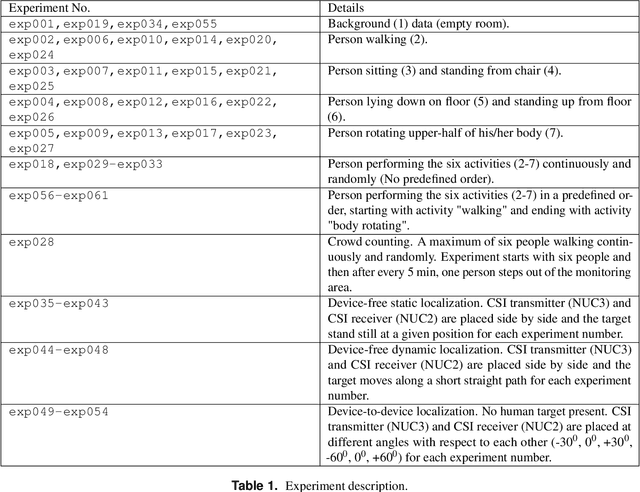

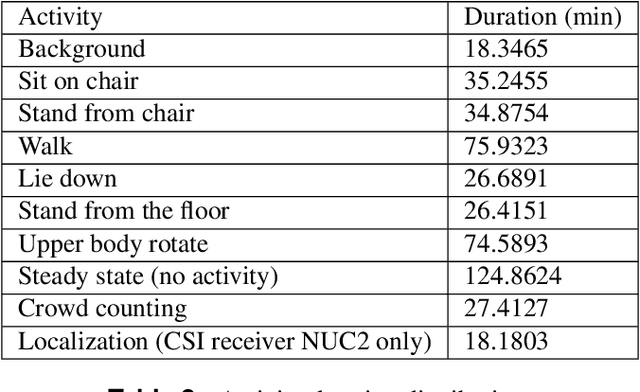

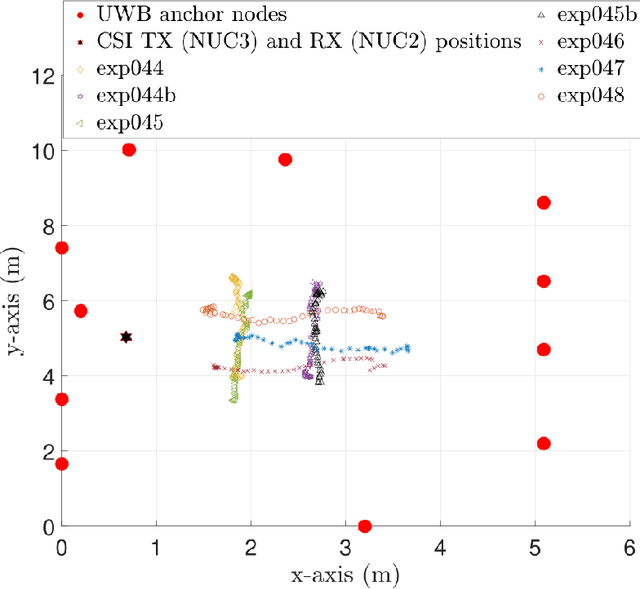

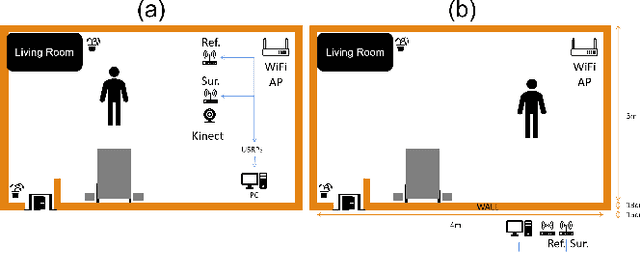

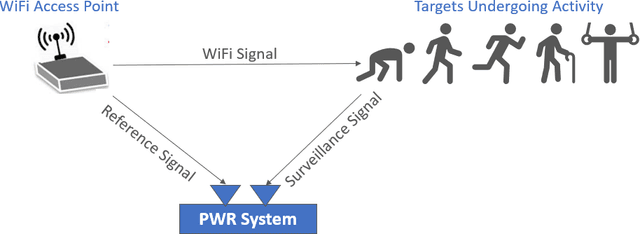

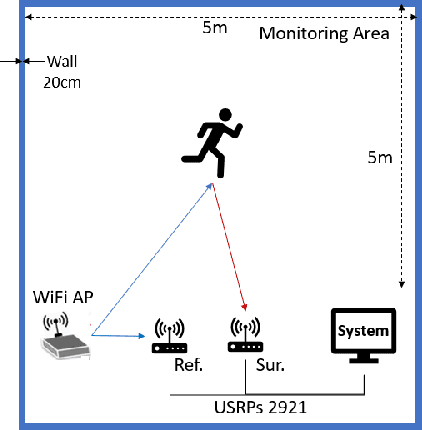

Abstract: This paper presents a comprehensive dataset intended to evaluate passive Human Activity Recognition (HAR) and localization techniques with measurements obtained from synchronized Radio-Frequency (RF) devices and vision-based sensors. The dataset consists of RF data including Channel State Information (CSI) extracted from a WiFi Network Interface Card (NIC), Passive WiFi Radar (PWR) built upon a Software Defined Radio (SDR) platform, and Ultra-Wideband (UWB) signals acquired via commercial off-the-shelf hardware. It also includes vision- and infrared-based data acquired from Kinect sensors. Approximately 8 hours of annotated measurements are provided, collected across two rooms from 6 participants performing 6 daily activities. This dataset can be exploited to advance WiFi and vision-based HAR, for example, using pattern recognition, skeletal representation, deep learning algorithms or other novel approaches to accurately recognize human activities. Furthermore, it can potentially be used to passively track a human in an indoor environment. Such datasets are key tools required for the development of new algorithms and methods in the context of smart homes, elderly care, and surveillance applications.

Neural Style Transfer Enhanced Training Support For Human Activity Recognition

Jul 27, 2021

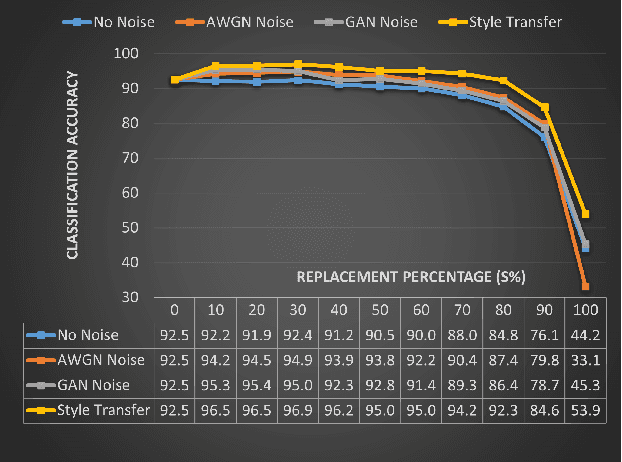

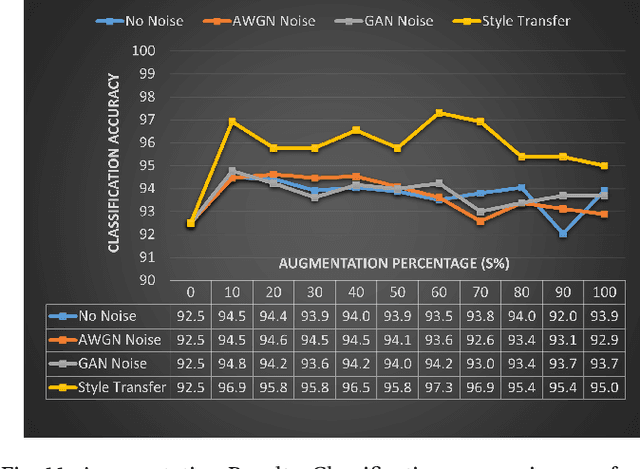

Abstract: This work presents an application of an integrated sensing and communication (ISAC) system for monitoring human activities directly related to healthcare. Real-time monitoring of humans can help professionals provide technologies that enable healthy living and ensure the health, safety, and well-being of people of all age groups. To enhance the human activity recognition performance of the ISAC system, we propose to use synthetic data generated through our human micro-Doppler simulator, SimHumalator, to augment our limited measurement data. We generate a more realistic micro-Doppler signature dataset using a style-transfer neural network. The proposed network extracts environmental effects such as noise, multipath, and occlusion directly from the measurement data and transfers these features to our clean simulated signatures. This results in signatures that look more realistic, both qualitatively and quantitatively. We use these enhanced signatures to augment our measurement data and observe a 5% improvement in classification performance compared with the no-augmentation case. Further, we benchmark the data augmentation performance of the style-transferred signatures against three other synthetic datasets: clean simulated spectrograms (no environmental effects), simulated data with added AWGN, and simulated data with GAN-generated noise. The results indicate that style-transferred simulated signatures capture environmental factors better than any other synthetic dataset.

FMNet: Latent Feature-wise Mapping Network for Cleaning up Noisy Micro-Doppler Spectrogram

Jul 09, 2021

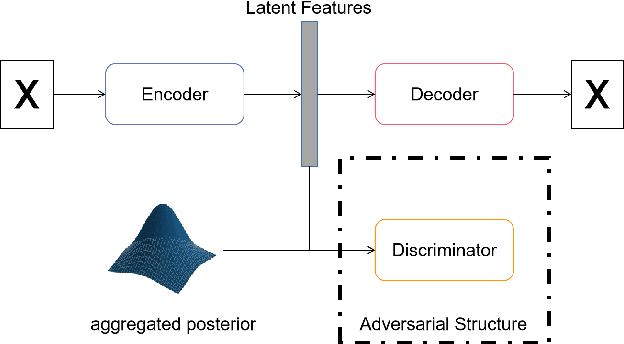

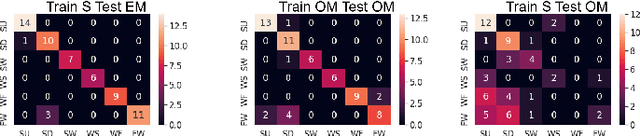

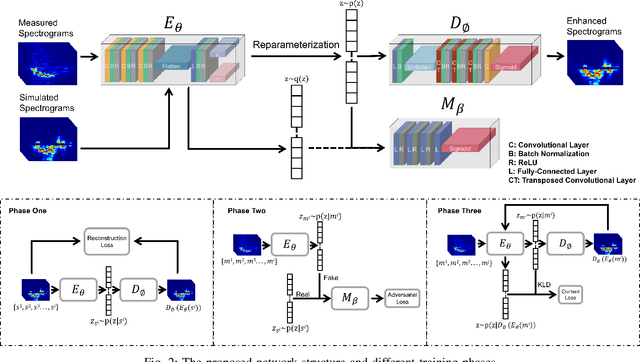

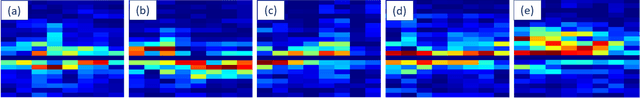

Abstract: Micro-Doppler signatures contain considerable information about target dynamics. However, radar sensing systems are easily affected by noisy surroundings, resulting in uninterpretable motion patterns on the micro-Doppler spectrogram. Meanwhile, radar returns often suffer from multipath, clutter, and interference. These issues make tasks such as motion feature extraction and activity classification using micro-Doppler signatures ($\mu$-DS) difficult. In this paper, we propose a latent feature-wise mapping strategy, called Feature Mapping Network (FMNet), to transform measured spectrograms so that they more closely resemble the output from a simulation under the same conditions. Taking measured spectrograms and the matched simulated data as input, our framework contains three parts: an Encoder that extracts latent representations/features, a Decoder that outputs a reconstructed spectrogram from the latent features, and a Discriminator that minimizes the distance between the latent features of measured and simulated data. We demonstrate FMNet with data from six activities and two experimental scenarios, and the final results show strongly enhanced patterns while retaining actual motion information to the greatest extent. We also propose a novel idea: training a classifier with only simulated data and predicting new measured samples after cleaning them up with FMNet. The final classification results show significant improvements.

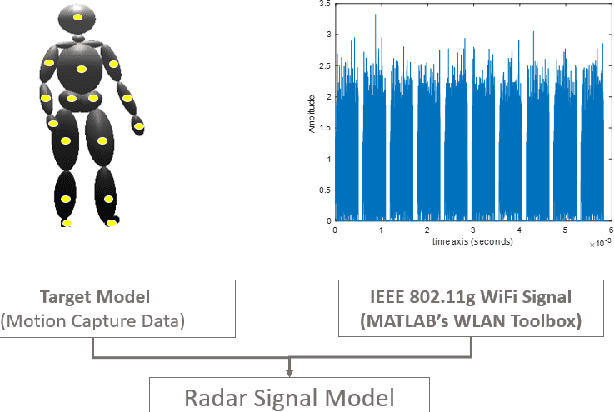

SimHumalator: An Open Source WiFi Based Passive Radar Human Simulator For Activity Recognition

Mar 02, 2021

Abstract: This work presents a simulation framework to generate human micro-Dopplers in WiFi based passive radar scenarios, wherein we simulate IEEE 802.11g compliant WiFi transmissions using MATLAB's WLAN toolbox and human animation models derived from a marker-based motion capture system. We integrate WiFi transmission signals with the human animation data to generate micro-Doppler features that incorporate the diversity of human motion characteristics and the sensor parameters. In this paper, we consider five human activities. We uniformly benchmark the classification performance of multiple machine learning and deep learning models against a common dataset. Further, we validate the classification performance using real radar data captured simultaneously with the motion capture system. We present experimental results using simulations and measurements, demonstrating good classification accuracy of $\geq$ 95\% and $\approx$ 90\%, respectively.

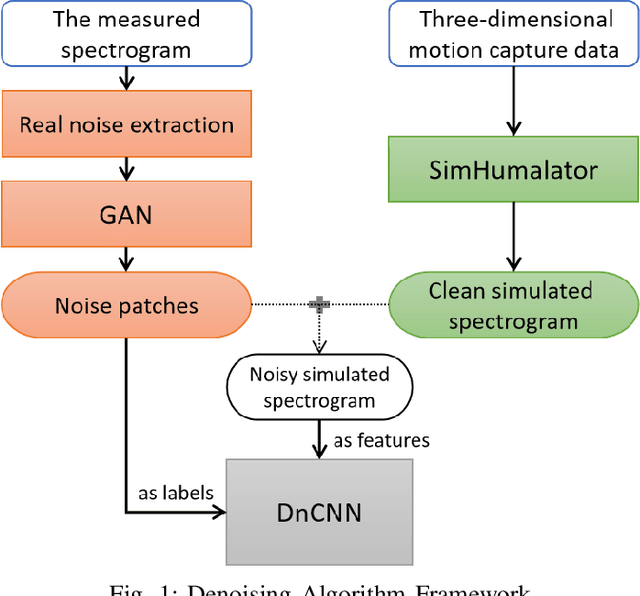

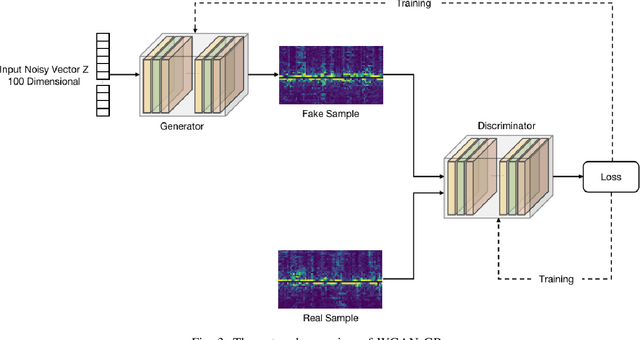

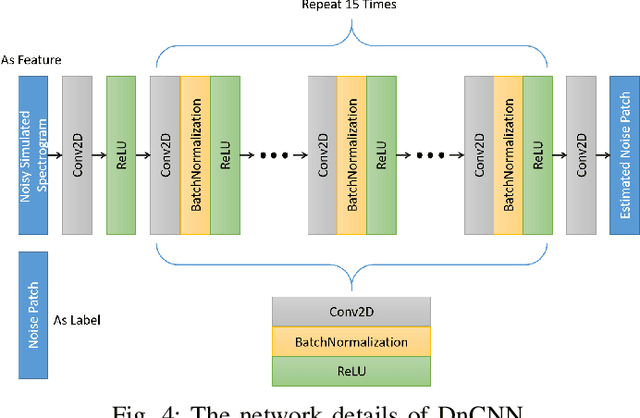

Learning from Natural Noise to Denoise Micro-Doppler Spectrogram

Feb 13, 2021

Abstract: Micro-Doppler analysis has become increasingly popular in recent years owing to the ability of the technique to enhance classification strategies. Applications include recognising everyday human activities, distinguishing drones from birds, and identifying different types of vehicles. However, noisy time-frequency spectrograms can significantly affect the performance of the classifier and must be tackled using appropriate denoising algorithms. In recent years, deep learning has spawned many deep neural network-based denoising algorithms. For these methods, noise modelling is the most important part and is used to assist in training. In this paper, we decompose the problem and propose a novel denoising scheme: first, a Generative Adversarial Network (GAN) is used to learn the noise distribution and correlation from the real-world environment; then, a simulator is used to generate clean Micro-Doppler spectrograms; finally, the generated noise and clean simulation data are combined as the training data to train a Convolutional Neural Network (CNN) denoiser. In experiments, we qualitatively and quantitatively analyzed this procedure on both simulation and measurement data. In addition, the idea of learning from natural noise can be applied well to other existing frameworks and demonstrates greater performance than other noise models.

ConEx: Efficient Exploration of Big-Data System Configurations for Better Performance

Oct 17, 2019

Abstract: Configuration space complexity makes big-data software systems hard to configure well. Consider Hadoop: with over nine hundred parameters, developers often just use the default configurations provided with Hadoop distributions. The opportunity costs in lost performance are significant. Popular learning-based approaches to auto-tuning software do not scale well for big-data systems because of the high cost of collecting training data. We present a new method based on a combination of Evolutionary Markov Chain Monte Carlo (EMCMC) sampling and cost reduction techniques to cost-effectively find better-performing configurations for big data systems. For cost reduction, we developed, experimentally tested, and validated two approaches: using scaled-up big data jobs as proxies for the objective function for larger jobs and using a dynamic job similarity measure to infer that results obtained for one kind of big data problem will work well for similar problems. Our experimental results suggest that our approach promises to significantly improve the performance of big data systems and that it outperforms competing approaches based on random sampling, basic genetic algorithms (GA), and predictive model learning. Our experimental results support the conclusion that our approach has strongly demonstrated potential to significantly and cost-effectively improve the performance of big data systems.
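For readers unfamiliar with sampling-based configuration search, the sketch below shows a plain Metropolis-style MCMC walk over a small discrete configuration space. It is a simplification and not the paper's method: it omits the evolutionary component of EMCMC and both cost reduction techniques, and the parameter names, value ranges, and cost function are hypothetical stand-ins for a measured job runtime.

```python
import math
import random

def mcmc_search(cost, space, steps, temperature, seed=0):
    """Metropolis-style search: returns the lowest-cost configuration visited."""
    rng = random.Random(seed)
    current = {k: rng.choice(v) for k, v in space.items()}
    current_cost = cost(current)
    best, best_cost = dict(current), current_cost
    for _ in range(steps):
        # Propose a neighbour by resampling one parameter (a symmetric proposal).
        proposal = dict(current)
        key = rng.choice(sorted(space))
        proposal[key] = rng.choice(space[key])
        proposal_cost = cost(proposal)
        # Always accept improvements; accept regressions with decaying probability.
        accept_prob = math.exp(min(0.0, (current_cost - proposal_cost) / temperature))
        if rng.random() < accept_prob:
            current, current_cost = proposal, proposal_cost
            if current_cost < best_cost:
                best, best_cost = dict(current), current_cost
    return best, best_cost

# Hypothetical two-parameter space and synthetic cost (lower is better).
space = {"num_workers": [2, 4, 8, 16], "buffer_mb": [64, 128, 256, 512]}

def toy_cost(cfg):
    return abs(cfg["num_workers"] - 8) + abs(cfg["buffer_mb"] - 256) / 64

best, best_cost = mcmc_search(toy_cost, space, steps=200, temperature=1.0)
print(best, best_cost)
```

In a real tuning setting each call to `cost` would mean running a job, which is why the abstract stresses reducing the cost of each evaluation.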
