Karl Woodbridge

Wi-Fi Based Passive Human Motion Sensing for In-Home Healthcare Applications

Apr 13, 2022

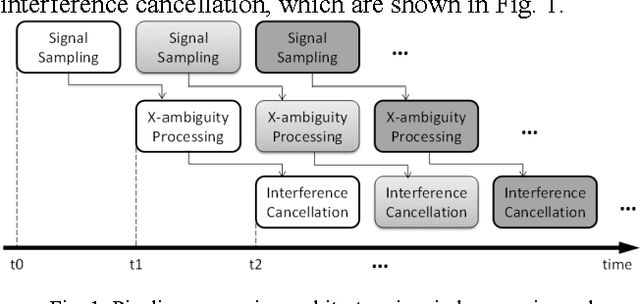

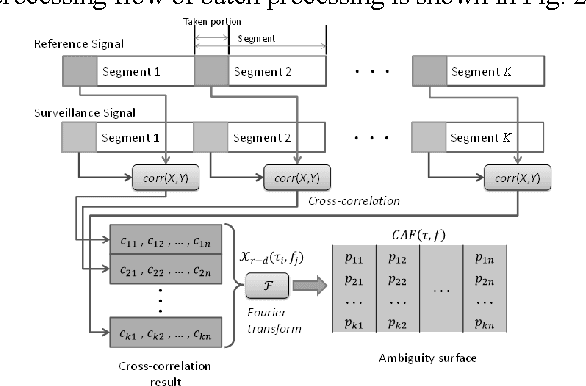

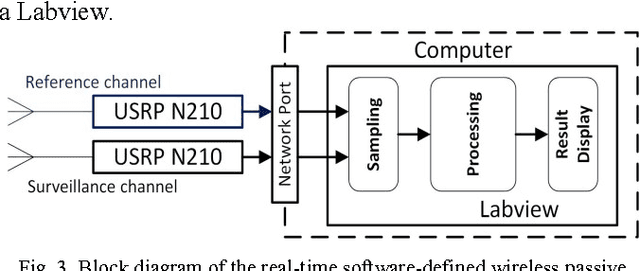

Abstract: This paper introduces a passive wireless sensing system based on Wi-Fi signals that can detect diverse indoor human movements, from whole-body motion to limb movements, including the breathing movements of the chest. The real-time signal processing used for human body motion sensing and the software-defined radio demonstration system are described and verified in practical experimental scenarios, which include detection of through-wall human body movement, hand gestures or tremor, and even respiration. The experimental results point to promising healthcare applications of Wi-Fi passive sensing in the home: monitoring daily activities, gathering health data, and detecting emergency situations.
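Passive Wi-Fi sensing of this kind typically reveals motion as a Doppler shift between a reference channel (the direct Wi-Fi signal) and a surveillance channel (reflections from the scene). The abstract does not describe its processing chain, so the Python sketch below is only an illustration under stated assumptions: it evaluates the zero-delay slice of a cross-ambiguity function over a grid of candidate Doppler shifts. The function names, the 1 kHz sample rate, and the 20 Hz test shift are all hypothetical.

```python
import cmath
import math
import random


def doppler_profile(ref, surv, fs, doppler_bins):
    """Zero-delay cross-ambiguity slice: |sum_n surv[n] * conj(ref[n]) * exp(-j2*pi*f*n/fs)|."""
    profile = []
    for f in doppler_bins:
        acc = 0j
        for n, (s, r) in enumerate(zip(surv, ref)):
            acc += s * r.conjugate() * cmath.exp(-2j * math.pi * f * n / fs)
        profile.append(abs(acc))
    return profile


def estimate_doppler(ref, surv, fs, doppler_bins):
    """Return the candidate Doppler shift (Hz) with the strongest correlation."""
    profile = doppler_profile(ref, surv, fs, doppler_bins)
    best = max(range(len(profile)), key=profile.__getitem__)
    return doppler_bins[best]


# Hypothetical test signal: a QPSK-like reference and a weak copy of it,
# Doppler-shifted by 20 Hz as if reflected from a moving person.
random.seed(0)
fs = 1000.0          # sample rate in Hz (illustrative, far below a real Wi-Fi rate)
num_samples = 1000   # 1 s of data
ref = [complex(random.choice((-1, 1)), random.choice((-1, 1))) for _ in range(num_samples)]
true_fd = 20.0
surv = [0.1 * r * cmath.exp(2j * math.pi * true_fd * k / fs) for k, r in enumerate(ref)]

bins = [float(f) for f in range(-50, 51, 5)]
print(estimate_doppler(ref, surv, fs, bins))  # 20.0
```

A complete passive-radar processor would also search over time delay (bistatic range) and suppress the direct-path signal before correlating; both steps are omitted to keep the sketch short.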

OPERAnet: A Multimodal Activity Recognition Dataset Acquired from Radio Frequency and Vision-based Sensors

Oct 08, 2021

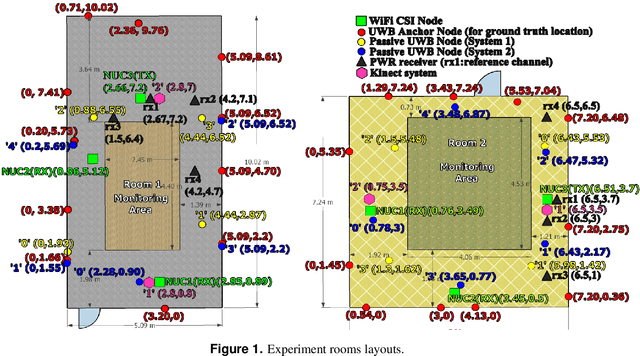

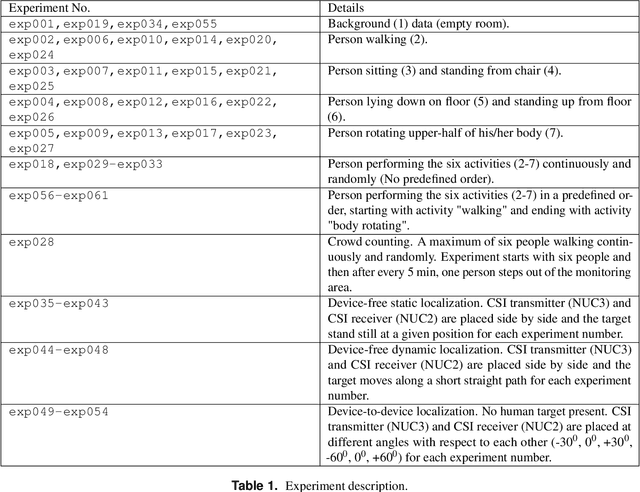

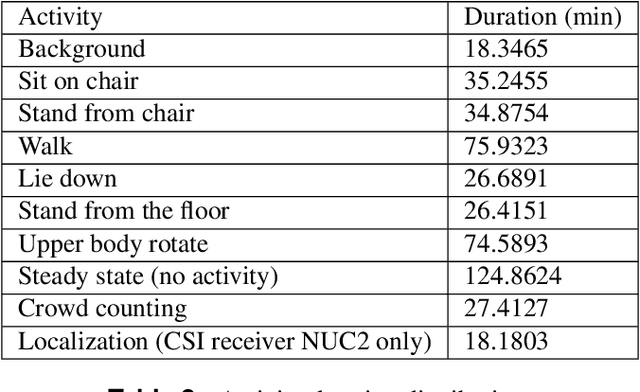

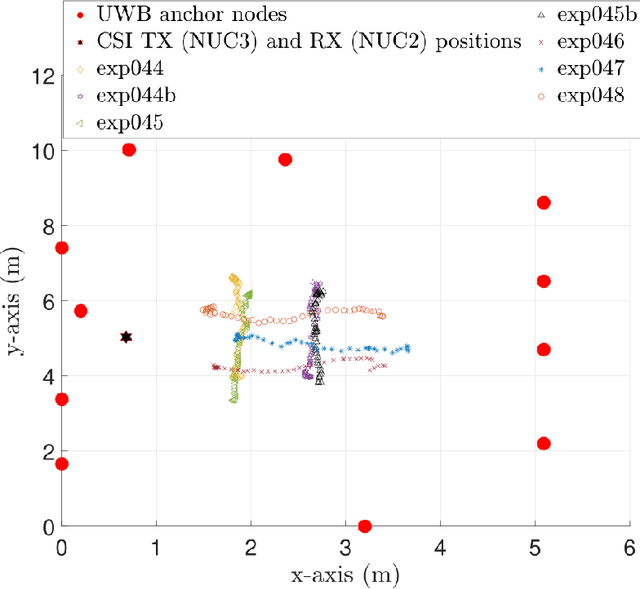

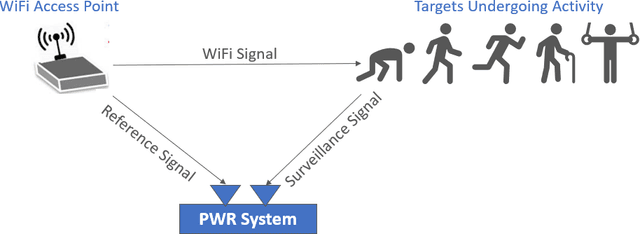

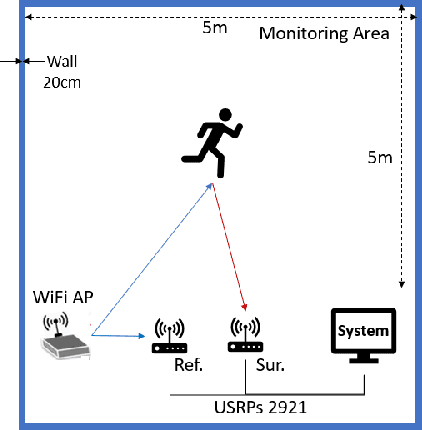

Abstract: This paper presents a comprehensive dataset intended to evaluate passive Human Activity Recognition (HAR) and localization techniques with measurements obtained from synchronized Radio-Frequency (RF) devices and vision-based sensors. The RF data include Channel State Information (CSI) extracted from a WiFi Network Interface Card (NIC), Passive WiFi Radar (PWR) data from a system built on a Software Defined Radio (SDR) platform, and Ultra-Wideband (UWB) signals acquired via commercial off-the-shelf hardware. The dataset also contains vision and infrared data acquired from Kinect sensors. Approximately 8 hours of annotated measurements are provided, collected in two rooms from 6 participants performing 6 daily activities. This dataset can be exploited to advance WiFi and vision-based HAR, for example, using pattern recognition, skeletal representation, deep learning algorithms or other novel approaches to accurately recognize human activities. Furthermore, it can potentially be used to passively track a human in an indoor environment. Such datasets are key tools for developing new algorithms and methods for smart homes, elderly care, and surveillance applications.

Neural Style Transfer Enhanced Training Support For Human Activity Recognition

Jul 27, 2021

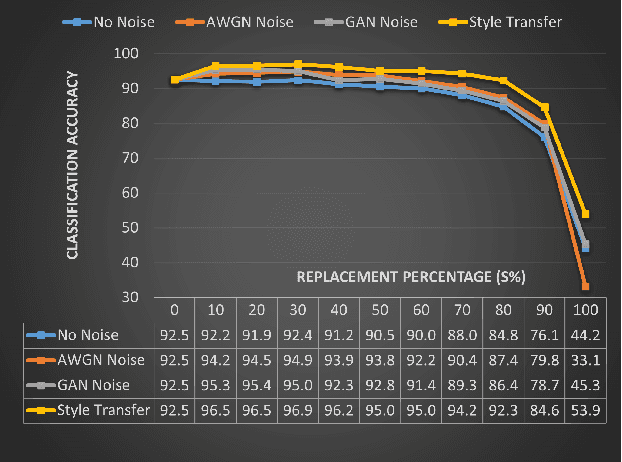

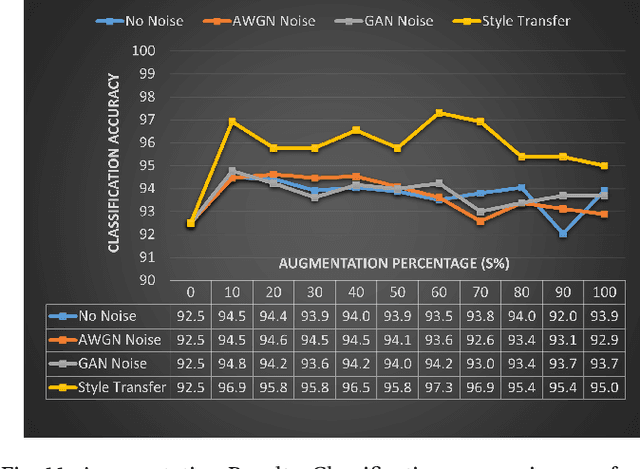

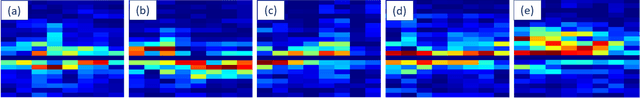

Abstract: This work presents an application of an integrated sensing and communication (ISAC) system to monitoring human activities directly related to healthcare. Real-time monitoring of people can help professionals provide technologies that enable healthy living and ensure the health, safety, and well-being of people of all age groups. To enhance the human activity recognition performance of the ISAC system, we propose using synthetic data generated by our human micro-Doppler simulator, SimHumalator, to augment our limited measurement data. We generate a more realistic micro-Doppler signature dataset using a style-transfer neural network. The proposed network extracts environmental effects such as noise, multipath, and occlusion directly from the measurement data and transfers these features to our clean simulated signatures. The resulting signatures look more realistic, both qualitatively and quantitatively. We use these enhanced signatures to augment our measurement data and observe a 5% improvement in classification performance compared with the no-augmentation case. Further, we benchmark the data augmentation performance of the style-transferred signatures against three other synthetic datasets: clean simulated spectrograms (no environmental effects), simulated data with added AWGN, and simulated data with GAN-generated noise. The results indicate that the style-transferred simulated signatures capture environmental factors better than any of the other synthetic datasets.
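One of the baselines in this comparison is simulated data with added AWGN. The abstract does not state whether the noise was added to the time-domain signal or to the spectrogram, or at what SNR, so this Python sketch simply assumes a real-valued sample sequence and a target SNR in dB. The helper names and the 10 dB example are hypothetical.

```python
import math
import random


def add_awgn(signal, snr_db, rng):
    """Return a copy of a real-valued signal with white Gaussian noise at snr_db."""
    signal_power = sum(x * x for x in signal) / len(signal)
    noise_power = signal_power / (10 ** (snr_db / 10))  # SNR = P_signal / P_noise
    sigma = math.sqrt(noise_power)                     # std dev of real-valued noise
    return [x + rng.gauss(0.0, sigma) for x in signal]


def measured_snr_db(clean, noisy):
    """Empirical SNR (dB) of a noisy sequence relative to its clean version."""
    signal_power = sum(x * x for x in clean) / len(clean)
    noise_power = sum((n - c) ** 2 for c, n in zip(clean, noisy)) / len(clean)
    return 10 * math.log10(signal_power / noise_power)


rng = random.Random(42)
clean = [math.sin(2 * math.pi * 5 * k / 1000) for k in range(20000)]
noisy = add_awgn(clean, snr_db=10.0, rng=rng)
print(round(measured_snr_db(clean, noisy), 2))  # should be close to 10 dB
```

Because AWGN is spectrally flat and uncorrelated, it cannot reproduce structured effects such as multipath or occlusion, which is consistent with the abstract's finding that it is a weaker augmentation than style transfer.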

SimHumalator: An Open Source WiFi Based Passive Radar Human Simulator For Activity Recognition

Mar 02, 2021

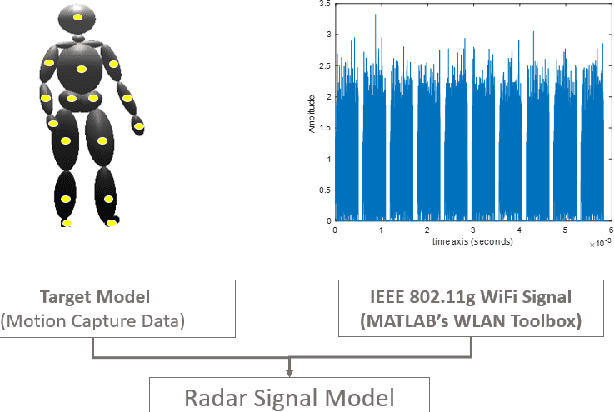

Abstract: This work presents a simulation framework to generate human micro-Doppler signatures in WiFi-based passive radar scenarios, in which we simulate IEEE 802.11g-compliant WiFi transmissions using MATLAB's WLAN Toolbox and human animation models derived from a marker-based motion capture system. We integrate the WiFi transmission signals with the human animation data to generate micro-Doppler features that incorporate the diversity of human motion characteristics and sensor parameters. In this paper, we consider five human activities. We uniformly benchmark the classification performance of multiple machine learning and deep learning models against a common dataset. Further, we validate the classification performance using real radar data captured simultaneously with the motion capture system. We present experimental results from simulations and measurements demonstrating good classification accuracy of ≥95% and ≈90%, respectively.
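SimHumalator itself combines MATLAB WLAN Toolbox waveforms with motion-capture animation. As a much simpler Python analogue, the sketch below models a single point scatterer (for instance, a swinging limb) oscillating in range, treats the geometry as monostatic rather than bistatic, uses a continuous carrier instead of 802.11g packets, and forms a short-time DFT spectrogram. Every parameter value and function name is a hypothetical choice for illustration. The per-frame peak Doppler should approach the analytic maximum 2v_max/λ, with v_max = 2π·f_swing·amp.

```python
import cmath
import math


def swinging_scatterer(fs, duration, wavelength, r0, amp, f_swing):
    """Baseband echo of one point scatterer oscillating in range (monostatic toy model)."""
    samples = []
    for k in range(int(fs * duration)):
        t = k / fs
        r = r0 + amp * math.sin(2 * math.pi * f_swing * t)
        samples.append(cmath.exp(-4j * math.pi * r / wavelength))  # two-way phase delay
    return samples


def stft_peak_doppler(x, fs, win, hop, nfft):
    """Hann-windowed short-time DFT; returns the peak Doppler (Hz) of each frame."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * k / (win - 1)) for k in range(win)]
    peaks = []
    for start in range(0, len(x) - win + 1, hop):
        seg = [x[start + k] * window[k] for k in range(win)]
        # Zero-padded DFT: only `win` samples are nonzero out of `nfft`.
        mags = [abs(sum(seg[k] * cmath.exp(-2j * math.pi * m * k / nfft) for k in range(win)))
                for m in range(nfft)]
        m_best = max(range(nfft), key=mags.__getitem__)
        signed_bin = m_best if m_best < nfft // 2 else m_best - nfft  # map to +/- frequency
        peaks.append(signed_bin * fs / nfft)
    return peaks


fs = 200.0               # slow-time sample rate in Hz (assumed)
wavelength = 0.125       # roughly a 2.4 GHz carrier, in metres
amp, f_swing = 0.2, 1.0  # hypothetical limb swing: 0.2 m amplitude at 1 Hz
x = swinging_scatterer(fs, 2.0, wavelength, r0=3.0, amp=amp, f_swing=f_swing)
peaks = stft_peak_doppler(x, fs, win=32, hop=8, nfft=128)

# Analytic peak micro-Doppler shift: 2 * v_max / wavelength.
fd_max = 2 * (2 * math.pi * f_swing * amp) / wavelength
print(round(fd_max, 1), max(peaks), min(peaks))
```

A real human signature superimposes many such scatterers (torso, arms, legs) with different amplitudes and rates, which is what gives measured micro-Doppler spectrograms their characteristic texture.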

Learning from Natural Noise to Denoise Micro-Doppler Spectrogram

Feb 13, 2021

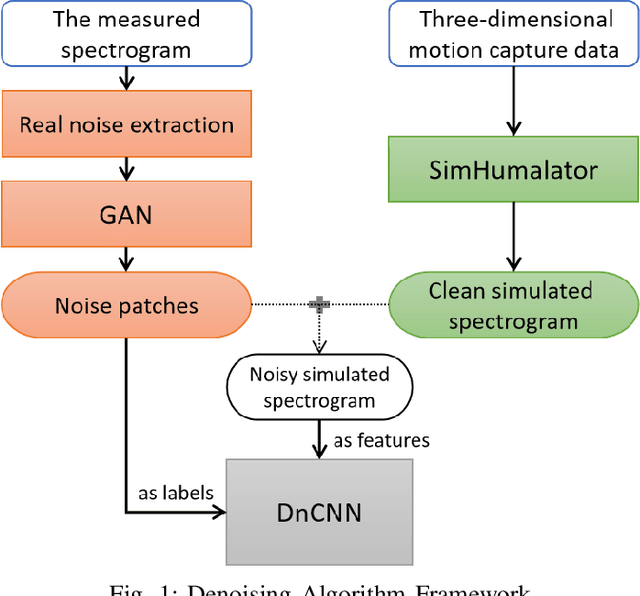

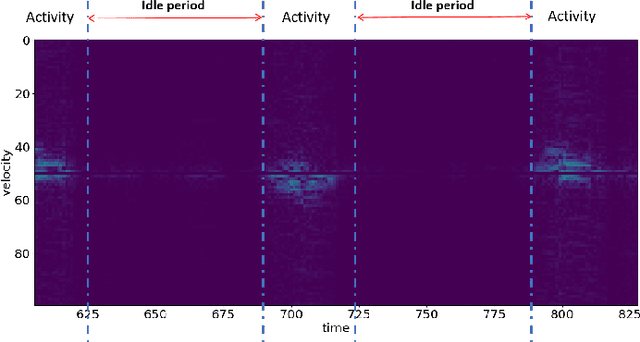

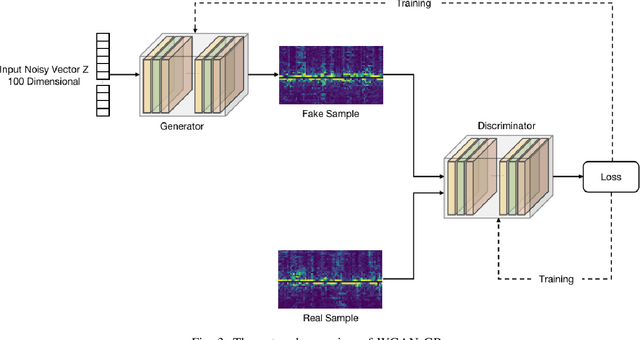

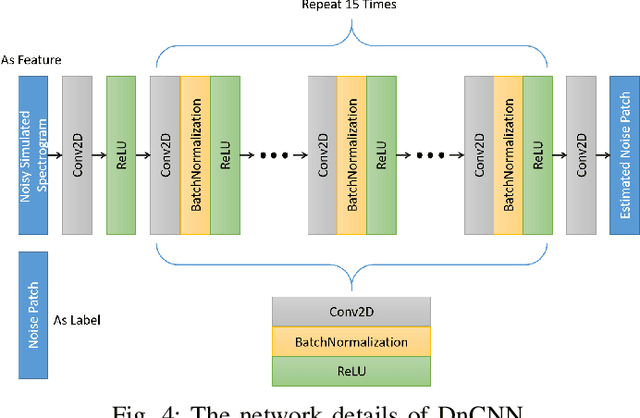

Abstract: Micro-Doppler analysis has become increasingly popular in recent years owing to the technique's ability to enhance classification strategies. Applications include recognising everyday human activities, distinguishing drones from birds, and identifying different types of vehicles. However, noisy time-frequency spectrograms can significantly degrade classifier performance and must be handled with appropriate denoising algorithms. In recent years, deep learning has spawned many neural-network-based denoising algorithms. For these methods, noise modelling is the most important part and is used to assist in training. In this paper, we decompose the problem and propose a novel denoising scheme: first, a Generative Adversarial Network (GAN) is used to learn the noise distribution and correlation from the real-world environment; then, a simulator is used to generate clean micro-Doppler spectrograms; finally, the generated noise and clean simulation data are combined as training data for a Convolutional Neural Network (CNN) denoiser. In experiments, we analyzed this procedure qualitatively and quantitatively on both simulation and measurement data. Moreover, the idea of learning from natural noise can be applied to other existing frameworks, where it demonstrates better performance than other noise models.
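The three-step scheme above ends with assembling (noisy, clean) training pairs for the CNN denoiser. The abstract does not say how the generated noise and the clean spectrograms are combined, so this Python sketch assumes element-wise addition of magnitudes and substitutes a seeded Gaussian stub for the trained GAN generator. All names here are hypothetical, and the stub is explicitly not the learned noise model.

```python
import random


def build_training_pairs(clean_spectrograms, sample_noise):
    """Pair each clean simulated spectrogram with a noisy copy for denoiser training.

    `sample_noise(rows, cols)` stands in for a trained GAN noise generator.
    Noise is added element-wise to the clean magnitudes (an assumption; the
    combination rule is not given in the abstract).
    """
    pairs = []
    for clean in clean_spectrograms:
        rows, cols = len(clean), len(clean[0])
        noise = sample_noise(rows, cols)
        noisy = [[c + n for c, n in zip(clean_row, noise_row)]
                 for clean_row, noise_row in zip(clean, noise)]
        pairs.append((noisy, clean))  # (denoiser input, training target)
    return pairs


def make_gaussian_noise_stub(seed=0, sigma=0.1):
    """Hypothetical stand-in for a GAN sampler: non-negative Gaussian magnitudes."""
    rng = random.Random(seed)

    def sample(rows, cols):
        return [[abs(rng.gauss(0.0, sigma)) for _ in range(cols)] for _ in range(rows)]

    return sample


# Two tiny 2x2 "spectrograms" standing in for simulator output.
clean_set = [[[1.0, 0.0], [0.5, 0.2]], [[0.0, 0.3], [0.7, 0.0]]]
pairs = build_training_pairs(clean_set, make_gaussian_noise_stub())
print(len(pairs))  # 2
```

Keeping the noise sampler as a plain callable means a learned generator can replace the stub without changing how the pairs are built.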
