Chaoen Xiao

Predicting Scores of Various Aesthetic Attribute Sets by Learning from Overall Score Labels

Dec 06, 2023

Abstract:Many mobile phones now embed deep-learning models for evaluation or guidance on photography. These models cannot provide detailed results such as human pose scores or scene color scores because the corresponding aesthetic attribute data are scarce. Annotating image aesthetic attribute scores requires experienced artists and professional photographers, which hinders the collection of large-scale fully annotated datasets. In this paper, we propose to replace image attribute labels with feature extractors. First, a novel aesthetic attribute evaluation framework based on attribute features is proposed to predict attribute scores and overall scores. We call it the F2S (attribute features to attribute scores) model. We use networks from different tasks to provide attribute features to our F2S model. Then, we define an aesthetic attribute contribution to describe the role of aesthetic attributes throughout an image and use it with the attribute scores and the overall scores to train our F2S model. Extensive experiments on publicly available datasets demonstrate that our F2S model achieves performance comparable to that of models trained on datasets with fully annotated aesthetic attribute score labels. Our method makes it feasible to learn meaningful attribute scores for various aesthetic attribute sets in different types of images with only overall aesthetic scores.
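
The abstract does not say how the attribute contribution links attribute scores to the overall score. As a minimal sketch of one plausible reading, and not the authors' formulation, the overall score can be modeled as a contribution-weighted sum of attribute scores, so that an overall label alone yields a training signal for each attribute. The function names and the softmax normalization below are assumptions made for illustration.

```python
import math


def softmax(logits):
    """Turn raw contribution logits into weights that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def overall_from_attributes(attr_scores, contribution_logits):
    """Hypothetical link: overall score = sum_i contribution_i * attribute_score_i."""
    weights = softmax(contribution_logits)
    return sum(w * s for w, s in zip(weights, attr_scores))


def squared_error(attr_scores, contribution_logits, overall_label):
    """Loss against the overall label only; no attribute labels are needed."""
    predicted = overall_from_attributes(attr_scores, contribution_logits)
    return (predicted - overall_label) ** 2
```

With equal contributions the predicted overall score is just the mean of the attribute scores; a dominant contribution pulls it toward that attribute's score.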

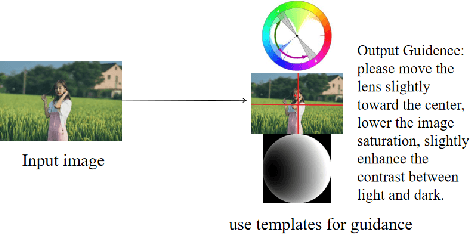

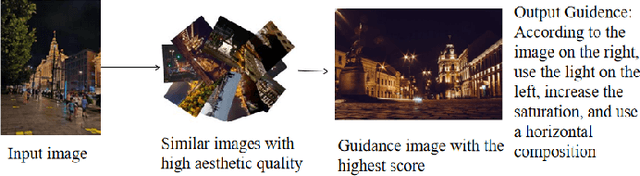

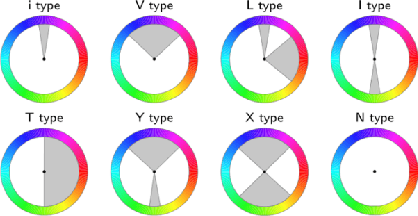

Aesthetic Language Guidance Generation of Images Using Attribute Comparison

Aug 09, 2022

Abstract:With the vigorous development of mobile photography technology, major mobile phone manufacturers are racing to improve the shooting capability of their devices and the photo beautification algorithms of their software. However, improvements in intelligent devices and algorithms cannot replace human subjective photography technique. In this paper, we propose aesthetic language guidance of images (ALG). We divide ALG into ALG-T and ALG-I according to whether the guiding rules are based on photography templates or guidance images. In both ALG-T and ALG-I, we guide photography along three image attributes: color, lighting and composition. The differences in these three attributes between the input images and the photography templates or guidance images are described in natural language, which constitutes the aesthetic natural language guidance (ALG). Also, because lighting and composition differ between landscape images and portrait images, we divide the input images into landscape images and portrait images. Both ALG-T and ALG-I provide aesthetic language guidance separately for the two types of input images.

Attribute Controllable Beautiful Caucasian Face Generation by Aesthetics Driven Reinforcement Learning

Aug 09, 2022

Abstract:In recent years, image generation has made great strides in improving the quality of images, producing high-fidelity ones. More recently, architecture designs have emerged that enable GANs to learn, without supervision, the semantic attributes represented in different layers. However, there is still a lack of research on generating face images more consistent with human aesthetics. Based on EigenGAN [He et al., ICCV 2021], we build reinforcement learning techniques into the generator of EigenGAN. The agent tries to figure out how to alter the semantic attributes of the generated human faces towards preferable ones. To accomplish this, we trained an aesthetics scoring model that can conduct facial beauty prediction. We can also use this scoring model to analyze the correlation between face attributes and aesthetics scores. Empirically, off-the-shelf reinforcement learning techniques do not work well. So instead, we present a new variant incorporating ingredients that have emerged in the reinforcement learning community in recent years. Compared to the original generated images, the adjusted ones show clear distinctions concerning various attributes. Experimental results using MindSpore show the effectiveness of the proposed method. Altered facial images are commonly more attractive, with significantly improved aesthetic levels.

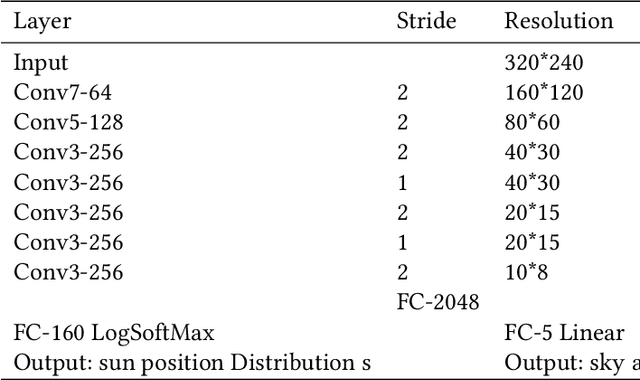

Aesthetic Attribute Assessment of Images Numerically on Mixed Multi-attribute Datasets

Jul 05, 2022

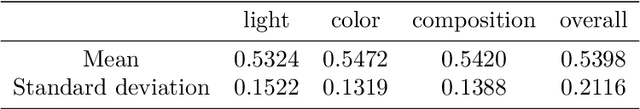

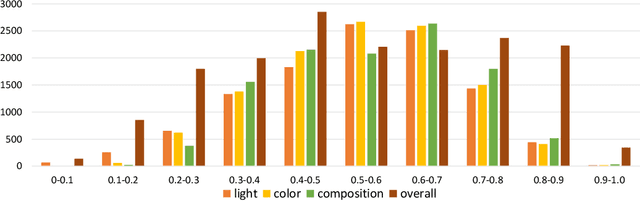

Abstract:With the continuous development of social software and multimedia technology, images have become an important carrier for spreading information and socializing. How to evaluate an image comprehensively has become the focus of recent research. Traditional image aesthetic assessment methods often adopt a single numerical overall assessment score, which is somewhat subjective and can no longer meet higher aesthetic requirements. In this paper, we construct a new image attribute dataset called the aesthetic mixed dataset with attributes (AMD-A) and design external attribute features for fusion. In addition, we propose an efficient method for image aesthetic attribute assessment on a mixed multi-attribute dataset and construct a multitask network architecture using EfficientNet-B0 as the backbone network. Our model can achieve aesthetic classification, overall scoring and attribute scoring. In each sub-network, we improve feature extraction through the ECA channel attention module. For the final overall scoring, we adopt the idea of the teacher-student network and use the classification sub-network to guide the fine-grained regression of the overall aesthetic score. Experimental results using MindSpore show that our proposed method can effectively improve the performance of overall aesthetic and attribute assessment.
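
The ECA channel attention mentioned above is commonly described as four steps: global average pooling per channel, a small 1D convolution across neighboring channels, a sigmoid, and channel-wise rescaling. The sketch below illustrates that idea in plain Python. It is not the paper's MindSpore code; the fixed kernel weights are hypothetical (in a real network they are learned), and the nested-list feature map layout is an assumption for readability.

```python
import math


def eca_gates(feature_map, kernel=(0.25, 0.5, 0.25)):
    """Compute one gate in (0, 1) per channel.

    feature_map: list of C channels, each a list of rows of floats.
    kernel: hypothetical fixed 1D weights shared across all channels.
    """
    # Global average pooling: one scalar per channel.
    pooled = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]
    # Zero-pad so every channel has a full neighborhood.
    pad = len(kernel) // 2
    padded = [0.0] * pad + pooled + [0.0] * pad
    gates = []
    for c in range(len(pooled)):
        # 1D convolution over the channel axis, then a sigmoid.
        mixed = sum(w * padded[c + j] for j, w in enumerate(kernel))
        gates.append(1.0 / (1.0 + math.exp(-mixed)))
    return gates


def apply_eca(feature_map, kernel=(0.25, 0.5, 0.25)):
    """Rescale every value in a channel by that channel's gate."""
    gates = eca_gates(feature_map, kernel)
    return [
        [[value * gate for value in row] for row in channel]
        for channel, gate in zip(feature_map, gates)
    ]
```

Because each gate depends only on a few neighboring channels, the module adds very few parameters, which fits a lightweight backbone such as EfficientNet-B0.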

3D Textured Model Encryption via 3D Lu Chaotic Mapping

Sep 25, 2017

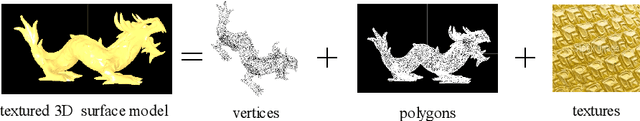

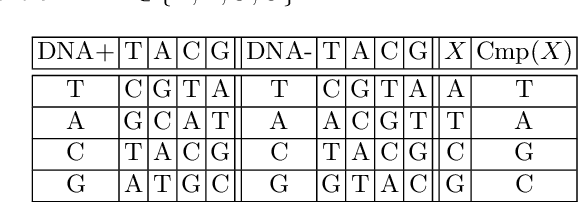

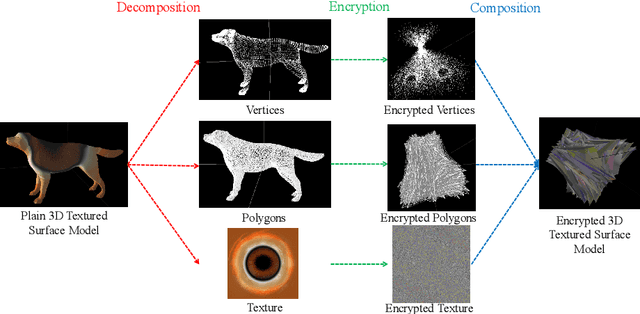

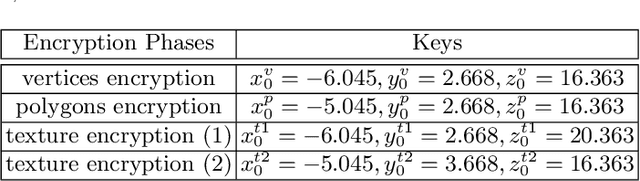

Abstract:In the coming Virtual/Augmented Reality (VR/AR) era, 3D content will become as popular as images and videos are today. The security and privacy of this 3D content should be taken into consideration. 3D content comprises surface models and solid models. Surface models include point clouds, meshes and textured models. Previous work mainly focuses on the encryption of solid models, point clouds and meshes. This work focuses on the most complicated case, the 3D textured model. We propose an encryption method for 3D textured models based on the 3D Lu chaotic mapping. We encrypt the vertices, the polygons and the textures of 3D models separately using the 3D Lu chaotic mapping. Then the encrypted vertices, edges and texture maps are composited together to form the final encrypted 3D textured model. The experimental results reveal that our method can encrypt and decrypt 3D textured models correctly. In addition, our method can resist several attacks, such as brute-force attacks and statistical attacks.
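
The abstract does not give the equations or the exact encryption steps, so the following is only a minimal sketch under stated assumptions, not the authors' scheme. It assumes the Lu system in its commonly written form, dx/dt = a(y - x), dy/dt = -xz + cy, dz/dt = xy - bz, with a = 36, b = 3, c = 20, integrated with a simple Euler step. The initial state acts as the secret key; the x-orbit drives a vertex permutation and the z-orbit drives an XOR keystream for texture bytes. All function names and the byte-quantization rule are hypothetical.

```python
def lu_orbit(n, key, a=36.0, b=3.0, c=20.0, dt=0.001, burn_in=1000):
    """Return n states of the (assumed) Lu system, starting from key=(x0, y0, z0)."""
    x, y, z = key
    orbit = []
    for step in range(burn_in + n):
        dx, dy, dz = a * (y - x), -x * z + c * y, x * y - b * z
        x, y, z = x + dt * dx, y + dt * dy, z + dt * dz
        if step >= burn_in:  # discard the transient before the attractor
            orbit.append((x, y, z))
    return orbit


def chaotic_permutation(n, key):
    """Rank the chaotic x-values to obtain a key-dependent permutation."""
    xs = [state[0] for state in lu_orbit(n, key)]
    return sorted(range(n), key=lambda i: xs[i])


def scramble_vertices(vertices, key):
    perm = chaotic_permutation(len(vertices), key)
    return [vertices[i] for i in perm]


def unscramble_vertices(scrambled, key):
    perm = chaotic_permutation(len(scrambled), key)
    restored = [None] * len(scrambled)
    for dst, src in enumerate(perm):
        restored[src] = scrambled[dst]
    return restored


def xor_texture(texture, key):
    """XOR texture bytes with a chaotic keystream; applying it twice decrypts."""
    zs = [state[2] for state in lu_orbit(len(texture), key)]
    stream = bytes(int(abs(v) * 1e6) % 256 for v in zs)
    return bytes(t ^ s for t, s in zip(texture, stream))
```

Decryption regenerates the same orbit from the same key, which is why small changes to the initial state (the key) are expected to produce an unrelated permutation and keystream.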

Single Reference Image based Scene Relighting via Material Guided Filtering

Aug 23, 2017

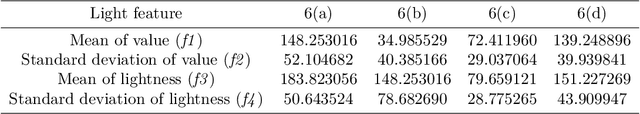

Abstract:Image relighting changes the illumination of an image to a target illumination effect without knowing the original scene geometry, material information or illumination condition. We propose a novel outdoor scene relighting method that needs only a single reference image and is based on material-constrained layer decomposition. First, the material map is extracted from the input image. Then, the reference image is warped to the input image through patch-match-based image warping. Finally, the input image is relit using material-constrained layer decomposition. The experimental results show that our method can produce on the input image an illumination effect similar to that of the reference image, using only a single reference image.
